Navigating the Path to CyberPeace: Insights and Strategies

Featured #factCheck Blogs

Executive Summary:

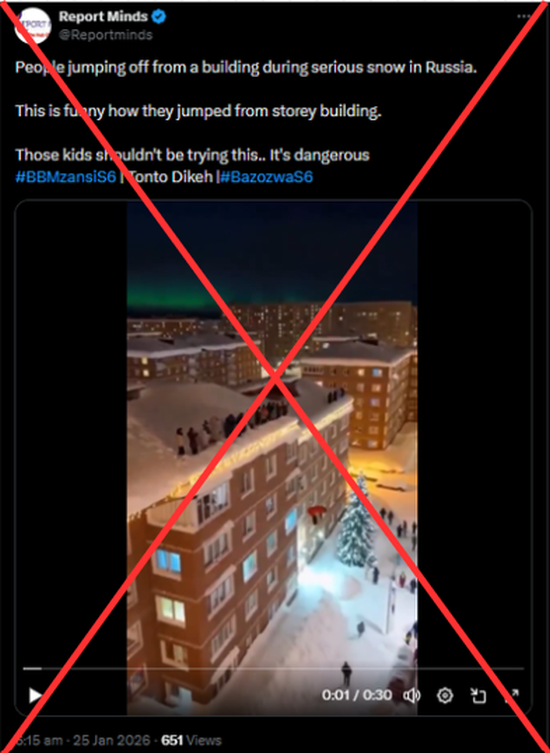

A dramatic video showing several people jumping from the upper floors of a building into what appears to be thick snow has been circulating on social media, with users claiming that it captures a real incident in Russia during heavy snowfall. In the footage, individuals can be seen leaping one after another from a multi-storey structure onto a snow-covered surface below, eliciting reactions ranging from amusement to concern. The claim accompanying the video suggests that it depicts a reckless real-life episode in a snow-hit region of Russia.

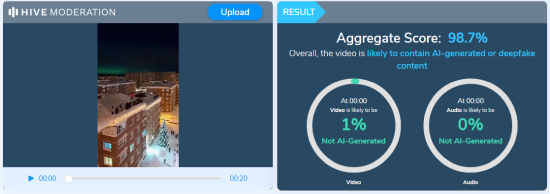

A thorough analysis by CyberPeace confirmed that the video is not a real-world recording but an AI-generated creation. The footage exhibits multiple signs of synthetic media, including unnatural human movements, inconsistent physics, blurred or distorted edges, and a glossy, computer-rendered appearance. In some frames, a partial watermark from an AI video generation tool is visible. Further verification using the Hive Moderation AI-detection platform rated the video as 98.7% AI-generated, supporting the conclusion that the clip is digitally created and does not depict any actual incident in Russia.

Claim:

The video was shared on social media by an X (formerly Twitter) user ‘Report Minds’ on January 25, claiming it showed a real-life event in Russia. The post caption read: "People jumping off from a building during serious snow in Russia. This is funny, how they jumped from a storey building. Those kids shouldn't be trying this. It's dangerous." Here is the link to the post, and below is a screenshot.

Fact Check:

The Desk used the InVid tool to extract keyframes from the viral video and conducted a reverse image search, which revealed multiple instances of the same video shared by other users with similar claims. Close visual examination revealed several anomalies, including unnatural human movements, blurred and distorted sections, a glossy, digitally rendered appearance, and a partially concealed logo of the AI video generation tool ‘Sora AI’ visible in certain frames. Screenshots highlighting these inconsistencies were captured during the research.

- https://x.com/DailyLoud/status/2015107152772297086?s=20

- https://x.com/75secondes/status/2015134928745164848?s=20

The video was analyzed on Hive Moderation, an AI-detection platform, which confirmed that 98.7% of the content is AI-generated.

The viral video showing people jumping off a building into snow, claimed to depict a real incident in Russia, is entirely AI-generated. Social media users who shared it presented the digitally created footage as if it were real, making the claim false and misleading.

Executive Summary:

A video circulating on social media shows Uttar Pradesh Chief Minister Yogi Adityanath and Gorakhpur MP Ravi Kishan walking with a group of people. Users are claiming that the two leaders were participating in a protest against the University Grants Commission (UGC). Research by CyberPeace has found the viral claim to be misleading. Our research revealed that the video is from September 2025 and is being shared out of context with recent events. The video was recorded when Chief Minister Yogi Adityanath undertook a foot march in Gorakhpur on a Monday. Ravi Kishan, MP from Gorakhpur, was also present. During the march, the Chief Minister visited local markets, malls, and shops, interacting with traders and gathering information on the implementation of GST rate cuts.

Claim Details:

On Instagram, a user shared the viral video on 27 January 2026. The video shows the Chief Minister and the MP walking with a group of people. The text “UGC protest” appears on the video, suggesting that it is connected to a protest against the University Grants Commission.

Fact Check:

To verify the claim, we searched Google using relevant keywords, but found no credible media reports confirming it. Next, we extracted keyframes from the video and searched them using Google Lens. The video was traced to NBT Uttar Pradesh’s X (formerly Twitter) account, where it was posted on 22 September 2025.

According to NBT Uttar Pradesh, CM Yogi Adityanath undertook a foot march in Gorakhpur, visiting malls and shops to interact with traders and check the implementation of GST rate cuts.

Conclusion:

The viral video is not related to any recent UGC guidelines. It dates back to September 2025 and shows CM Yogi Adityanath and MP Ravi Kishan on a foot march in Gorakhpur, interacting with traders about GST rate cuts. The claim that the video depicts a protest against the University Grants Commission is therefore false and misleading.

Executive Summary:

A viral post on X (formerly Twitter) shares a video with misleading captions, falsely claiming that it depicts the severe wildfires currently affecting Los Angeles. Using AI content detection tools, we confirmed that the footage is entirely AI-generated and not authentic. In this report, we break down the claim, fact-check the information, and provide a clear summary of the misinformation that has emerged with this viral clip.

Claim:

A video shared across social media platforms and messaging apps purports to show wildfires ravaging Los Angeles, suggesting an ongoing natural disaster.

Fact Check:

After taking a close look at the video, we noticed several discrepancies that are typical of AI-generated footage: the flames seem unnatural, the lighting is off, and there are visible glitches. We then checked the video with Hive Moderation, an online AI content detection tool, which flagged the video as AI-generated, suggesting that it was created to mislead viewers. It’s crucial to stay alert to such deceptions, especially concerning serious topics like wildfires. Being well-informed allows us to navigate the complex information landscape and distinguish between real events and falsehoods.

Conclusion:

This video claiming to show wildfires in Los Angeles is AI-generated. The case again reflects the importance of taking a minute to check whether information is correct, especially when the matter is as serious as a natural disaster. By being careful and cross-checking sources, we can minimize the spread of misinformation and ensure that accurate information reaches those who need it most.

- Claim: The video shows real footage of the ongoing wildfires in Los Angeles, California

- Claimed On: X (Formerly Known As Twitter)

- Fact Check: Fake Video

Executive Summary:

A viral post on X (formerly Twitter) gained wide attention by presenting a video as footage of recent earthquake damage in Tibet. Our findings confirmed that the clip was not filmed in Tibet; it came from an earlier earthquake in Japan. This report traces the origin of the claim and presents the analysis and verified evidence that clarify the misinformation around the video.

Claim:

The viral video shows collapsed infrastructure and significant destruction, and its captions suggest that it is evidence of a recent earthquake in Tibet. Similar claims can be found here and here.

Fact Check:

The widely circulated clip, initially claimed to depict the aftermath of the most recent earthquake in Tibet, has been rigorously analyzed and proven to be misattributed. A reverse image search based on keyframes of the video revealed that the footage originated from an earlier, devastating earthquake in Japan. According to an article published by a Japanese news website, the incident occurred in February 2024. The video was authenticated by news agencies, as it accurately depicted the scenes of destruction reported during that event.

Moreover, the same video had already been uploaded to a YouTube channel, which proves that it is not recent. The architecture, the signboards written in Japanese script, and the vehicles appearing in the video also show that the footage is from Japan, not Tibet. The video shows a past event in Japan and was shared in a different context to spread false information.

The video was uploaded on February 2nd, 2024.

Snap from viral video

Snap from YouTube video

Conclusion:

The viral claim that the video shows the recent earthquake in Tibet is therefore false; it is old footage from a previous earthquake in Japan. This underlines the need to verify information before sharing it, so that accurate information spreads instead of false claims.

- Claim: A viral video claims to show recent earthquake destruction in Tibet.

- Claimed On: X (Formerly Known As Twitter)

- Fact Check: False and Misleading


Executive Summary:

Recently, a viral post on social media claimed that actor Allu Arjun visited a Shiva temple to pray in celebration after the success of his film, Pushpa 2. The post features an image of him visiting the temple. However, an investigation has determined that this photo is from 2017 and does not relate to the film's release.

Claims:

The claim states that Allu Arjun recently visited a Shiva temple to express his thanks for the success of Pushpa 2, featuring a photograph that allegedly captures this moment.

Fact Check:

The claim circulating on social media with this image, that Allu Arjun visited a Shiva temple to celebrate the success of Pushpa 2, is misleading.

After conducting a reverse image search, we confirmed that this photograph is from 2017, taken during the actor's visit to the Tirumala Temple for a personal event, well before Pushpa 2 was ever announced. The context has been altered to falsely connect it to the film's success. Additionally, there is no credible evidence or recent reports to support the claim that Allu Arjun visited a temple for this specific reason, making the assertion entirely baseless.

Before sharing viral posts, take a brief moment to verify the facts. Misinformation spreads quickly and it’s far better to rely on trusted fact-checking sources.

Conclusion:

The claim that Allu Arjun visited a Shiva temple to celebrate the success of Pushpa 2 is false. The image circulating is actually from an earlier time. This situation illustrates how misinformation can spread when an old photo is used to construct a misleading story. Before sharing viral posts, take a moment to verify the facts. Misinformation spreads quickly, and it is far better to rely on trusted fact-checking sources.

- Claim: The image claims Allu Arjun visited Shiva temple after Pushpa 2’s success.

- Claimed On: Facebook

- Fact Check: False and Misleading


Executive Summary:

A post on X (formerly Twitter) has gained widespread attention, featuring a video that is falsely claimed to show Houthi rebels attacking a power plant in Ashkelon, Israel. This misleading content has circulated widely amid escalating geopolitical tensions. However, investigation shows that the footage actually originates from a prior incident in Saudi Arabia. This situation underscores the significant dangers posed by misinformation during conflicts and highlights the importance of verifying sources before sharing information.

Claims:

The viral video claims to show Houthi rebels attacking Israel's Ashkelon power plant as part of recent escalations in the Middle East conflict.

Fact Check:

Upon receiving the viral posts, we conducted a Google Lens search on the keyframes of the video. The search revealed that the video circulating online does not show an attack on the Ashkelon power plant in Israel. Instead, it depicts a 2022 drone strike on a Saudi Aramco facility in Abqaiq. There are no credible reports of Houthi rebels targeting Ashkelon, as their activities are largely confined to Yemen and Saudi Arabia.

This incident highlights the risks associated with misinformation during sensitive geopolitical events. Before sharing viral posts, take a brief moment to verify the facts. Misinformation spreads quickly and it’s far better to rely on trusted fact-checking sources.

Conclusion:

The assertion that Houthi rebels targeted the Ashkelon power plant in Israel is incorrect. The viral video in question has been misrepresented and actually shows a 2022 incident in Saudi Arabia. This underscores the importance of being cautious when sharing unverified media. Before sharing viral posts, take a moment to verify the facts. Misinformation spreads quickly, and it is far better to rely on trusted fact-checking sources.

- Claim: The video shows a massive fire at Israel's Ashkelon power plant

- Claimed On: Instagram and X (Formerly Known As Twitter)

- Fact Check: False and Misleading


Executive Summary:

A viral online video claims to show a Syrian prisoner experiencing sunlight for the first time in 13 years. However, the CyberPeace Research Team has confirmed that the video is a deep fake, created using AI technology to manipulate the prisoner’s facial expressions and surroundings. The original footage is unrelated to the claim that the prisoner has been held in solitary confinement for 13 years. The assertion that this video depicts a Syrian prisoner seeing sunlight for the first time is false and misleading.

Claim:

A viral video falsely claims that a Syrian prisoner is seeing sunlight for the first time in 13 years.

Fact Check:

Upon receiving the viral posts, we conducted a Google Lens search on keyframes from the video. The search led us to various legitimate sources featuring real reports about Syrian prisoners, but none of them included any mention of such an incident. The viral video exhibited several signs of digital manipulation, prompting further investigation.

We used AI detection tools, such as TrueMedia, to analyze the video. The analysis confirmed with 97.0% confidence that the video was a deepfake. The tools identified “substantial evidence of manipulation,” particularly in the prisoner’s facial movements and the lighting conditions, both of which appeared artificially generated.

Additionally, a thorough review of news sources and official reports related to Syrian prisoners revealed no evidence of a prisoner being released from solitary confinement after 13 years, or experiencing sunlight for the first time in such a manner. No credible reports supported the viral video’s claim, further confirming its inauthenticity.

Conclusion:

The viral video claiming that a Syrian prisoner is seeing sunlight for the first time in 13 years is a deep fake. Investigations using tools like Hive AI detection confirm that the video was digitally manipulated using AI technology. Furthermore, there is no supporting information in any reliable sources. The CyberPeace Research Team confirms that the video was fabricated, and the claim is false and misleading.

- Claim: Syrian prisoner sees sunlight for the first time in 13 years, viral on social media.

- Claimed on: Facebook and X (Formerly Twitter)

- Fact Check: False & Misleading

Executive Summary:

A post on X (formerly Twitter) features a widely shared image with misleading captions, claiming to show men riding an elephant next to a tiger in Bihar, India. The post has sparked both fascination and skepticism on social media. However, our investigation has revealed that the image is misleading. It is not a recent photograph; rather, it is a photo of an incident from 2011. Always verify claims before sharing.

Claims:

An image purporting to depict men riding an elephant next to a tiger in Bihar has gone viral, implying that this astonishing event truly took place.

Fact Check:

A reverse image search of the viral image showed that it comes from an older video. The footage shows a tiger that was shot by a forest guard after it became a man-eater. The tiger had killed six people and caused panic in local villages in the Ramnagar division of Uttarakhand in January 2011.

Before sharing viral posts, take a brief moment to verify the facts. Misinformation spreads quickly and it’s far better to rely on trusted fact-checking sources.

Conclusion:

The claim that men rode an elephant alongside a tiger in Bihar is false. The photo presented as recent actually originates from the past and does not depict a current event. Social media users should exercise caution and verify sensational claims before sharing them.

- Claim: The video shows people casually interacting with a tiger in Bihar

- Claimed On: Instagram and X (Formerly Known As Twitter)

- Fact Check: False and Misleading

Executive Summary:

A viral social media post claims to show a mosque being set on fire in India, contributing to growing communal tensions and misinformation. However, a detailed fact-check has revealed that the footage actually comes from Indonesia. The spread of such misleading content can dangerously escalate social unrest, making it crucial to rely on verified facts to prevent further division and harm.

Claim:

The viral video claims to show a mosque being set on fire in India, suggesting it is linked to communal violence.

Fact Check:

The investigation revealed that the video was originally posted on 8th December 2024. A reverse image search allowed us to trace the source and confirm that the footage is not linked to any recent incidents. The original post, written in Indonesian, explained that the fire took place at the Central Market in Luwuk, Banggai, Indonesia, not in India.

Conclusion:

The viral claim that a mosque was set on fire in India is not true. The video is actually from Indonesia and has been intentionally misrepresented to circulate false information. This event underscores the need to verify information before spreading it. Misinformation can spread quickly and cause harm. By taking the time to check facts and rely on credible sources, we can prevent false information from escalating and protect harmony in our communities.

- Claim: The video shows a mosque set on fire in India

- Claimed On: Social Media

- Fact Check: False and Misleading

Executive Summary:

A viral online image claims to show Arvind Kejriwal, Chief Minister of Delhi, welcoming Elon Musk during his visit to India to discuss Delhi’s administrative policies. However, the CyberPeace Research Team has confirmed that the image is a deep fake, created using AI technology. The assertion that Elon Musk visited India to discuss Delhi’s administrative policies is false and misleading.

Claim:

A viral image claims that Arvind Kejriwal welcomed Elon Musk during his visit to India to discuss Delhi’s administrative policies.

Fact Check:

Upon receiving the viral posts, we conducted a reverse image search using the InVid reverse image search tool. The search traced the image back to several unrelated sources featuring Arvind Kejriwal and Elon Musk separately, but none of the sources depicted them together or described any such event. The viral image displayed visible inconsistencies, such as lighting disparities and unnatural blending, which prompted further investigation.

Using advanced AI detection tools like TrueMedia.org and Hive AI Detection tool, we analyzed the image. The analysis confirmed with 97.5% confidence that the image was a deepfake. The tools identified “substantial evidence of manipulation,” particularly in the merging of facial features and the alignment of clothes and background, which were artificially generated.

Moreover, a review of official statements and credible reports revealed no record of Elon Musk visiting India to discuss Delhi’s administrative policies. Neither Arvind Kejriwal’s office nor Tesla or SpaceX made any announcement regarding such an event, further debunking the viral claim.

Conclusion:

The viral image claiming that Arvind Kejriwal welcomed Elon Musk during his visit to India to discuss Delhi’s administrative policies is a deep fake. Reverse image search and AI detection tools confirm that the image was manipulated using AI technology. Additionally, there is no supporting evidence from any credible sources. The CyberPeace Research Team confirms the claim is false and misleading.

- Claim: Arvind Kejriwal welcomed Elon Musk to India to discuss Delhi’s administrative policies, viral on social media.

- Claimed on: Facebook and X (Formerly Twitter)

- Fact Check: False & Misleading

Executive Summary:

A viral online video claims to show an attack on Prime Minister Benjamin Netanyahu in the Israeli Senate. However, the CyberPeace Research Team has confirmed that the video is fake: it was created with video editing tools by merging two unrelated videos into one and attaching false claims. The original footage has no connection to an attack on Mr. Netanyahu. The claim is therefore false and misleading.

Claims:

A viral video claims to show an attack on Prime Minister Benjamin Netanyahu in the Israeli Senate.

Fact Check:

Upon receiving the viral posts, we conducted a reverse image search on the keyframes of the video. The search led us to various legitimate sources reporting an attack on an ethnic Turkish leader in Bulgaria; none of them mentioned any attack on Prime Minister Benjamin Netanyahu.

We used AI detection tools, such as TrueMedia.org, to analyze the video. The analysis confirmed with 68.0% confidence that the video was edited. The tools identified "substantial evidence of manipulation," particularly in the change in graphics quality and the break in continuity where the overall background environment changes.

Additionally, an extensive review of official statements from the Knesset revealed no mention of any such incident taking place. No credible reports were found linking the Israeli PM to the same, further confirming the video’s inauthenticity.

Conclusion:

The viral video claiming to show an attack on Prime Minister Netanyahu is an old video that has been edited. Analysis using AI detection tools confirms that the video was assembled from edited footage. Additionally, no official source mentions such an incident. Thus, the CyberPeace Research Team confirms that the video was manipulated using video editing technology, making the claim false and misleading.

- Claim: Attack on Prime Minister Netanyahu in the Israeli Senate

- Claimed on: Facebook, Instagram and X (Formerly Twitter)

- Fact Check: False & Misleading

Executive Summary:

A viral social media post carries misleading captions about a National Highway being built with large bridges over a mountainside in Jammu and Kashmir. However, our investigation shows that the bridge is in China. The claim is therefore false and misleading.

Claim:

A circulating video is claimed to show National Highway 14 under construction on a mountainside in Jammu and Kashmir.

Fact Check:

Upon receiving the image, we carried out a reverse image search. The image of an under-construction road, linked in the posts to Jammu and Kashmir, proved to be misattributed. We confirmed that the road is at a different location: the G6911 Ankang-Laifeng Expressway in China. This highlights the need to verify information before sharing.

Conclusion:

The viral claim that the under-construction highway is in Jammu and Kashmir is false. The footage is actually from China, not J&K. Misinformation like this can mislead the public. Before sharing viral posts, take a brief moment to verify the facts and rely on credible sources to combat the spread of false claims.

- Claim: Under-Construction Road Falsely Linked to Jammu and Kashmir

- Claimed On: Instagram and X (Formerly Known As Twitter)

- Fact Check: False and Misleading

Executive Summary:

A viral post on X (formerly Twitter) was shared with misleading captions claiming that Gautam Adani was arrested in public for fraud, bribery and corruption. The charges accuse him, his nephew Sagar Adani and six others from his group of defrauding American investors and orchestrating a bribery scheme to secure a multi-billion-dollar solar energy project awarded by the Indian government. Always verify claims before sharing posts or photos, as this image turned out to be AI-generated.

Claim:

A circulating image purports to show the public arrest of Gautam Adani after a US court accused him and other executives of bribery.

Fact Check:

The picture attached below shows multiple anomalies. The police officer grabbing Adani’s arm has six fingers (highlighted in the red circle), and Adani’s other hand is completely absent. The left eye of one officer (marked in blue) is inconsistent with the right. The faces of other officers (marked in yellow and green circles) appear distorted, and another officer (shown in the pink circle) appears to have a fully covered face. Given all this evidence, the picture is too distorted to be a photograph taken by a camera.

A thorough examination utilizing AI detection software concluded that the image was synthetically produced.

Conclusion:

A viral image purports to show the public arrest of Gautam Adani after a US court accused him of bribery. Analysis shows that the image is AI-generated, and no news articles carry any authentic information about such an arrest. Such misinformation spreads fast and can confuse and harm public perception. Always verify images by checking for visual inconsistencies and using trusted sources to confirm authenticity.

- Claim: Gautam Adani arrested in public by law enforcement agencies

- Claimed On: Instagram and X (Formerly Known As Twitter)

- Fact Check: False and Misleading

Executive Summary:

A viral online video claims to show a massive rally organised in Manipur to demand an end to the violence in the state. However, the CyberPeace Research Team has confirmed that the video is a deep fake, created using AI technology to generate the crowd. There is no original footage connected to any similar protest. The claim is therefore false and misleading.

Claims:

A viral post falsely claims that a massive rally was held in Manipur.

Fact Check:

Upon receiving the viral posts, we conducted a Google Lens search on the keyframes of the video. We could not locate any authentic sources mentioning any such event, whether recent or earlier. The viral video exhibited signs of digital manipulation, prompting a deeper investigation.

We used AI detection tools, such as TrueMedia and the Hive AI Detection tool, to analyze the video. The analysis confirmed with 99.7% confidence that the video was a deepfake. The tools identified "substantial evidence of manipulation," particularly in the crowd and the colour gradients, which were found to be artificially generated.

Additionally, an extensive review of official statements and interviews with Manipur State officials revealed no mention of any such rally. No credible reports were found linking to such protests, further confirming the video’s inauthenticity.

Conclusion:

The viral video claims to show a massive rally held in Manipur. Research using truemedia.org and other AI detection tools confirms that the video was manipulated using AI technology. Additionally, no official source mentions such a rally. Thus, the CyberPeace Research Team confirms that the video was manipulated using AI technology, making the claim false and misleading.

- Claim: Massive rally held in Manipur against the ongoing violence, viral on social media.

- Claimed on: Instagram and X (Formerly Twitter)

- Fact Check: False & Misleading