Navigating the Path to CyberPeace: Insights and Strategies

Featured #factCheck Blogs

Executive Summary

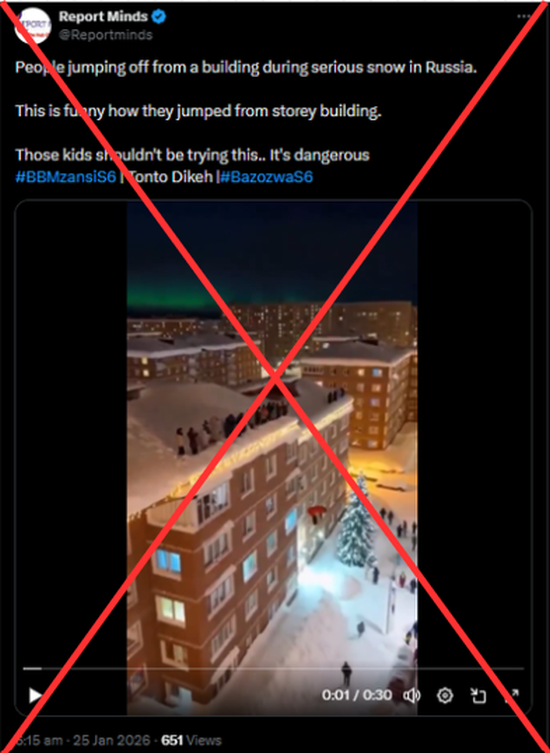

A dramatic video showing several people jumping from the upper floors of a building into what appears to be thick snow has been circulating on social media, with users claiming that it captures a real incident in Russia during heavy snowfall. In the footage, individuals can be seen leaping one after another from a multi-storey structure onto a snow-covered surface below, eliciting reactions ranging from amusement to concern. The claim accompanying the video suggests that it depicts a reckless real-life episode in a snow-hit region of Russia.

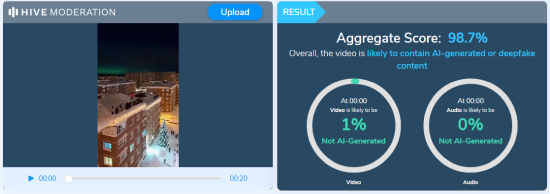

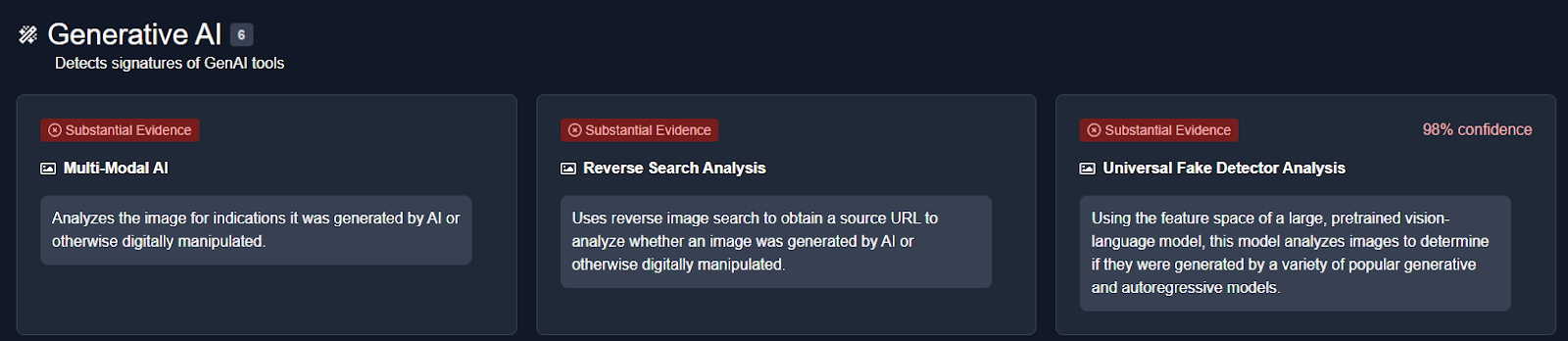

A thorough analysis by CyberPeace confirmed that the video is not a real-world recording but an AI-generated creation. The footage exhibits multiple signs of synthetic media, including unnatural human movements, inconsistent physics, blurred or distorted edges, and a glossy, computer-rendered appearance. In some frames, a partial watermark from an AI video generation tool is visible. Further verification using the Hive Moderation AI-detection platform indicated that 98.7% of the video is AI-generated, confirming that the clip is entirely digitally created and does not depict any actual incident in Russia.

Claim:

The video was shared on social media by an X (formerly Twitter) user ‘Report Minds’ on January 25, claiming it showed a real-life event in Russia. The post caption read: "People jumping off from a building during serious snow in Russia. This is funny, how they jumped from a storey building. Those kids shouldn't be trying this. It's dangerous." Here is the link to the post, and below is a screenshot.

Fact Check:

The Desk used the InVid tool to extract keyframes from the viral video and conducted a reverse image search, which revealed multiple instances of the same video shared by other users with similar claims. Upon close visual examination, several anomalies were observed, including unnatural human movements, blurred and distorted sections, a glossy, digitally rendered appearance, and a partially concealed logo of the AI video generation tool ‘Sora AI’ visible in certain frames. Screenshots highlighting these inconsistencies were captured during the research.

- https://x.com/DailyLoud/status/2015107152772297086?s=20

- https://x.com/75secondes/status/2015134928745164848?s=20

The video was analyzed on Hive Moderation, an AI-detection platform, which confirmed that 98.7% of the content is AI-generated.

The viral video showing people jumping off a building into snow, claimed to depict a real incident in Russia, is entirely AI-generated. Social media users who shared it presented the digitally created footage as if it were real, making the claim false and misleading.

Executive Summary

A video circulating on social media shows Uttar Pradesh Chief Minister Yogi Adityanath and Gorakhpur MP Ravi Kishan walking with a group of people. Users are claiming that the two leaders were participating in a protest against the University Grants Commission (UGC). Research by CyberPeace has found the viral claim to be misleading. Our research revealed that the video is from September 2025 and is being shared out of context with recent events. The video was recorded when Chief Minister Yogi Adityanath undertook a foot march in Gorakhpur on a Monday. Ravi Kishan, MP from Gorakhpur, was also present. During the march, the Chief Minister visited local markets, malls, and shops, interacting with traders and gathering information on the implementation of GST rate cuts.

Claim Details:

On Instagram, a user shared the viral video on 27 January 2026. The video shows the Chief Minister and the MP walking with a group of people. The text “UGC protest” appears on the video, suggesting that it is connected to a protest against the University Grants Commission.

Fact Check:

To verify the claim, we searched Google using relevant keywords, but found no credible media reports confirming it. Next, we extracted key frames from the video and searched them using Google Lens. The video was traced to NBT Uttar Pradesh’s X (formerly Twitter) account, where it was posted on 22 September 2025.

According to NBT Uttar Pradesh, CM Yogi Adityanath undertook a foot march in Gorakhpur, visiting malls and shops to interact with traders and check the implementation of GST rate cuts.

Conclusion:

The viral video is not related to any recent UGC guidelines. It dates back to September 2025 and shows CM Yogi Adityanath and MP Ravi Kishan on a foot march in Gorakhpur, interacting with traders about GST rate cuts. The claim that the video depicts a protest against the University Grants Commission is therefore false and misleading.

Executive Summary

A viral image claims that an Israeli helicopter was shot down in South Lebanon. This investigation evaluates the authenticity of the picture and concludes that it is an old photograph taken out of context and presented as recent.

Claims

The viral image circulating online claims to depict an Israeli helicopter recently shot down in South Lebanon during the ongoing conflict between Israel and militant groups in the region.

Factcheck:

Through a reverse image search, we found a 2019 post on Arab48.com containing the exact viral picture.

The reverse image search thus led fact-checkers to the original source of the image, putting an end to the false claim.

Neither the main news agencies nor the Israeli Defense Forces have issued any official report confirming that a helicopter was shot down in southern Lebanon during the current hostilities.

Conclusion

The CyberPeace Research Team has concluded that the viral image claiming an Israeli helicopter was shot down in South Lebanon is misleading and has no relevance to current events. It is an old photograph that has been widely shared in a different context, fueling the conflict. It is advised to verify claims from credible sources and not spread false narratives.

- Claim: Israeli helicopter recently shot down in South Lebanon

- Claimed On: Facebook

- Fact Check: Misleading, Original Image found by Google Reverse Image Search


Executive Summary:

Our team recently came across a post on X (formerly Twitter) in which a photo was shared with a misleading caption claiming that a Hindu priest performed a Vedic prayer in Washington after the recent elections. After investigating, we found that the photo shows a ritual performed by a Hindu priest at a private event at the White House to pray for an end to the Covid-19 pandemic. Always verify claims before sharing.

Claim:

An image circulating after Donald Trump’s win in the US election shows Pujari Harish Brahmbhatt at the White House recently.

Fact Check:

Our analysis found that the image comes from an old post uploaded in May 2020. Through a reverse image search, we traced it to the recitation of the sacred Vedic Shanti Path, or peace prayer, by a Hindu priest in the Rose Garden of the White House during the National Day of Prayer service. He joined other religious leaders in praying for the health, safety and well-being of everyone affected by the coronavirus pandemic during those difficult days, and for an end to the Covid-19 pandemic.

Conclusion:

The viral claim that a Hindu priest performed a Vedic prayer at the White House after Donald Trump’s recent election win isn’t true. The photo is actually from a private event in May 2020, and the posts sharing it as recent are misleading.

Before sharing viral posts, take a brief moment to verify the facts. Misinformation spreads quickly and it’s far better to rely on trusted fact-checking sources.

- Claim: Hindu priest held a Vedic prayer at the White House under Trump

- Claimed On: Instagram and X (Formerly Known As Twitter)

- Fact Check: False and Misleading

Executive Summary:

An old video from 2023 showing the arrest of a Bangladeshi migrant for the murder of a Polish woman has gone viral on social media with the claim that he is an Indian national. The video was fact-checked and the claim debunked.

Claim:

The video circulating on social media alleges that an Indian migrant was arrested in Greece for assaulting a young Christian girl. It has been shared with narratives maligning Indian migrants. The post was first shared on Facebook by an account known as “Voices of hope” and has been shared in the report as well.

Facts:

The CyberPeace Research Team used Google Image Search to find the original source of the claim. The search led us to the original news report, published by Greek City Times in June 2023.

The person arrested in the video clip is a Bangladeshi migrant and not of Indian origin. The CyberPeace Research Team assessed the available police reports and other verifiable sources to confirm that the arrested person is Bangladeshi.

The video dates from 2023 and relates to a case that occurred in Poland; it has nothing to do with Indian migrants.

Neither the Polish government nor authorized news agencies reported the involvement of any Indian citizen in the case in question.

Conclusion:

The viral video falsely implicating an Indian migrant in a Polish woman’s murder is misleading. The accused is a Bangladeshi migrant, and the incident has been misrepresented to spread misinformation. This highlights the importance of verifying such claims to prevent the spread of xenophobia and false narratives.

- Claim: Video shows an Indian immigrant being arrested in Greece for allegedly assaulting a young Christian girl.

- Claimed On: X (Formerly Known As Twitter) and Facebook.

- Fact Check: Misleading.

Executive Summary:

A viral online video claims that billionaire Elon Musk, founder of Tesla and SpaceX, is promoting a cryptocurrency. The CyberPeace Research Team has confirmed that the video is a deepfake, created using AI technology to manipulate Musk’s facial expressions and voice; we reached this conclusion using reputable, well-verified AI detection tools. The original footage has no connection to any cryptocurrency, including the Bitcoin (BTC) or Ethereum (ETH) supposedly being apportioned to his crypto-trading followers. The claim that Mr. Musk endorses the giveaway is therefore false and misleading.

Claims:

A viral video falsely claims that billionaire and Tesla founder Elon Musk is endorsing a crypto giveaway for the crypto enthusiasts among his followers, supposedly handing over a portion of his Bitcoin and Ethereum holdings.

Fact Check:

Upon receiving the viral posts, we conducted a Google Lens search on the keyframes of the video. The search led us to various legitimate sources featuring Mr. Elon Musk but none of them included any promotion of any cryptocurrency giveaway. The viral video exhibited signs of digital manipulation, prompting a deeper investigation.

We used AI detection tools, such as TrueMedia.org, to analyze the video. The analysis confirmed with 99.0% confidence that the video was a deepfake. The tools identified "substantial evidence of manipulation," particularly in the facial movements and voice, which were found to be artificially generated.

Additionally, an extensive review of official statements and interviews with Mr. Musk revealed no mention of any such giveaway. No credible reports were found linking Elon Musk to this promotion, further confirming the video’s inauthenticity.

Conclusion:

The viral video claiming that Elon Musk promotes a crypto giveaway is a deepfake. Research using tools such as Google Lens and an AI detection tool confirms that the video was manipulated using AI technology. Additionally, no official source carries any information about such a giveaway. Thus, the CyberPeace Research Team confirms that the video was manipulated using AI technology, making the claim false and misleading.

- Claim: A video of Elon Musk giving away cryptocurrency is viral on social media.

- Claimed on: X (Formerly Twitter)

- Fact Check: False & Misleading

EXECUTIVE SUMMARY:

A viral video claiming to capture an aerial view of Mount Kailash, with breathtaking scenery, is being presented as a rare real-life shot of Tibet's sacred mountain. We investigated its authenticity and analyzed it for signs of digital manipulation.

CLAIMS:

The viral video claims to be a real aerial shot of Mount Kailash, revealing the natural beauty of the hallowed mountain. It circulated widely on social media, with users presenting it as actual footage of Mount Kailash.

FACTS:

The viral video that circulated on social media is not real footage of Mount Kailash. A reverse image search revealed that it is an AI-generated video created on Midjourney by Sonam and Namgyal, two Tibet-based graphic artists. The advanced digital techniques used give the video a realistic, lifelike look.

No media or geographical source has reported or published the video as authentic footage of Mount Kailash. Besides, several visual aspects, including lighting and environmental features, indicate that it is computer-generated.

For further verification, we used Hive Moderation, a deepfake detection tool, to determine whether the video is AI-generated or real. It was found to be AI-generated.

CONCLUSION:

The viral video claiming to show an aerial view of Mount Kailash is an AI-manipulated creation, not authentic footage of the sacred mountain. This incident highlights the growing influence of AI and CGI in creating realistic but misleading content, emphasizing the need for viewers to verify such visuals through trusted sources before sharing.

- Claim: Digitally Morphed Video of Mt. Kailash, Showcasing Stunning White Clouds

- Claimed On: X (Formerly Known As Twitter), Instagram

- Fact Check: AI-Generated (Checked using Hive Moderation).

Introduction:

This report examines ongoing phishing scams that target customers of the "State Bank of India (SBI)", India's biggest public bank, using fake SelfKYC APKs to trick people. The image discussed below is part of a phishing scheme to get users to download bogus APK files by claiming they need to update or confirm their "Know Your Customer (KYC)" information.

Fake Claim:

A picture making the rounds on social media comes with an APK file. It shows a phishing message that says the user's SBI YONO account will stop working because of their "Old PAN card." It then tells the user to install the "WBI APK" APK (Android Application Package) to check documents and keep their account open. This message is fake and aims to get people to download a harmful app.

Key Characteristics of the Scam:

- The messages "URGENTLY REQUIRED" and "Your account will be blocked today" show how scammers try to scare people into acting fast without thinking.

- PAN Card Reference: Crooks often use PAN card verification and KYC updates as a trick because these are normal for Indian bank customers.

- Risky APK Downloads: The message pushes people to get APK files, which can be dangerous. APKs from places other than the Google Play Store often have harmful software.

- Copying the Brand: The message closely imitates SBI's real wording and logos to seem legit.

- Shady Source: You can't find the APK they mention on Google Play or SBI's website, which means you should ignore the app right away.

Modus Operandi:

- Delivery Mechanism: Typically, users of messaging services like "WhatsApp," "SMS," or "email" receive identical messages with an APK link, which is how the scam is distributed.

- APK Installation: The phony APK frequently asks for a lot of permissions once it is installed, including access to "SMS," "contacts," "calls," and "banking apps."

- Data Theft: Once installed, the program may have the ability to steal card numbers, personal information, OTPs, and banking credentials.

- Remote Access: These APKs may occasionally allow cybercriminals to remotely take control of the victim's device in order to carry out fraudulent financial activities.

When the user installs the application on their device, the following interface opens:

It asks the user to allow the following:

- SMS is used to send and receive info from the bank.

- User details such as Username, Password, Mobile Number, and Captcha.

Technical Findings of the Application:

Static Analysis:

- File Name: SBI SELF KYC_015850.apk

- Package Name: com.mark.dot.comsbione.krishn

- Scan Date: Sept. 25, 2024, 6:45 a.m.

- App Security Score: 52/100 (MEDIUM RISK)

- Grade: B

File Information:

- File Name: SBI SELF KYC_015850.apk

- Size: 2.88MB

- MD5: 55fdb5ff999656ddbfa0284d0707d9ef

- SHA1: 8821ee6475576beb86d271bc15882247f1e83630

- SHA256: 54bab6a7a0b111763c726e161aa8a6eb43d10b76bb1c19728ace50e5afa40448
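The hashes above can serve as indicators of compromise when checking a suspicious file. The following is a minimal sketch, not the team's actual tooling: it computes the same three digests with Python's standard `hashlib` and compares them to the values listed in this report. The function names `file_digests` and `matches_reported_sample` are hypothetical and introduced only for illustration.

```python
import hashlib

# Hashes copied from the File Information list in this report.
REPORTED_HASHES = {
    "md5": "55fdb5ff999656ddbfa0284d0707d9ef",
    "sha1": "8821ee6475576beb86d271bc15882247f1e83630",
    "sha256": "54bab6a7a0b111763c726e161aa8a6eb43d10b76bb1c19728ace50e5afa40448",
}


def file_digests(path, chunk_size=65536):
    """Return the md5, sha1 and sha256 hex digests of a file, read in chunks."""
    hashers = {name: hashlib.new(name) for name in REPORTED_HASHES}
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            for hasher in hashers.values():
                hasher.update(chunk)
    return {name: hasher.hexdigest() for name, hasher in hashers.items()}


def matches_reported_sample(path):
    """True if any digest of the file equals a hash listed in this report."""
    digests = file_digests(path)
    return any(digests[name] == value for name, value in REPORTED_HASHES.items())
```

A match means the file is byte-for-byte the sample analysed here. A non-match says nothing about safety, since a repackaged variant of the same app would produce different hashes.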

App Information:

- App Name: SBl Bank

- Package Name: com.mark.dot.comsbione.krishn

- Main Activity: com.mark.dot.comsbione.krishn.MainActivity

- Target SDK: 34

- Min SDK: 24

- Max SDK:

- Android Version Name: 1.0

- Android Version Code: 1

App Components:

- Activities: 8

- Services: 2

- Receivers: 2

- Providers: 1

- Exported Activities: 0

- Exported Services: 1

- Exported Receivers: 2

- Exported Providers: 0

Certificate Information:

- Binary is signed

- v1 signature: False

- v2 signature: True

- v3 signature: False

- v4 signature: False

- X.509 Subject: CN=PANDEY, OU=PANDEY, O=PANDEY, L=NK, ST=NK, C=91

- Signature Algorithm: rsassa_pkcs1v15

- Valid From: 20240904 07:38:35+00:00

- Valid To: 20490829 07:38:35+00:00

- Issuer: CN=PANDEY, OU=PANDEY, O=PANDEY, L=NK, ST=NK, C=91

- Serial Number: 0x1

- Hash Algorithm: sha256

- md5: 4536ca31b69fb68a34c6440072fca8b5

- sha1: 6f8825341186f39cfb864ba0044c034efb7cb8f4

- sha256: 6bc865a3f1371978e512fa4545850826bc29fa1d79cdedf69723b1e44bf3e23f

- sha512: 05254668e1c12a2455c3224ef49a585b599d00796fab91b6f94d0b85ab48ae4b14868dabf16aa609c3b6a4b7ac14c7c8f753111b4291c4f3efa49f4edf41123d

- PublicKey Algorithm: RSA

- Bit Size: 2048

- Fingerprint: a84f890d7dfbf1514fc69313bf99aa8a826bade3927236f447af63fbb18a8ea6

- Found 1 unique certificate
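A few properties of the certificate above can be read off mechanically. The sketch below is illustrative only and uses hypothetical helper names (`is_self_issued`, `validity_days`, `dn_field`, `country_code_looks_valid`). It checks whether subject and issuer are identical, how long the certificate is valid, and whether the country field looks like a two-letter code. Self-signed certificates are normal for Android apps, so these checks add context and do not prove malice on their own.

```python
from datetime import datetime

# Values copied from the Certificate Information list in this report.
CERT = {
    "subject": "CN=PANDEY, OU=PANDEY, O=PANDEY, L=NK, ST=NK, C=91",
    "issuer": "CN=PANDEY, OU=PANDEY, O=PANDEY, L=NK, ST=NK, C=91",
    "valid_from": "20240904 07:38:35+00:00",
    "valid_to": "20490829 07:38:35+00:00",
}
TIME_FORMAT = "%Y%m%d %H:%M:%S%z"  # matches the timestamp layout used above


def is_self_issued(cert):
    """Subject equal to issuer means the signer vouches only for itself."""
    return cert["subject"] == cert["issuer"]


def validity_days(cert):
    """Number of whole days between the Valid From and Valid To timestamps."""
    start = datetime.strptime(cert["valid_from"], TIME_FORMAT)
    end = datetime.strptime(cert["valid_to"], TIME_FORMAT)
    return (end - start).days


def dn_field(distinguished_name, key):
    """Return the value of one field (for example 'C') from a DN string."""
    for part in distinguished_name.split(","):
        name, _, value = part.strip().partition("=")
        if name == key:
            return value
    return None


def country_code_looks_valid(distinguished_name):
    """X.509 country fields are expected to hold a two-letter code."""
    country = dn_field(distinguished_name, "C")
    return country is not None and len(country) == 2 and country.isalpha()


print(is_self_issued(CERT), validity_days(CERT), country_code_looks_valid(CERT["subject"]))
```

For this sample the subject and issuer match, the validity window is 9125 days (25 years of 365 days), and the country value "91" is not a two-letter code.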

App Permission

1. Normal Permissions

- ACCESS_NETWORK_STATE: Allows the app to view the network status of all networks.

- FOREGROUND_SERVICE: Enables regular apps to use foreground services.

- FOREGROUND_SERVICE_DATA_SYNC: Allows data synchronization with foreground services.

- INTERNET: Grants full internet access.

2. Signature Permission:

- BROADCAST_SMS: Sends SMS-received broadcasts. It can be abused by malicious apps to forge incoming SMS messages.

3. Dangerous Permissions:

- READ_PHONE_NUMBERS: Grants access to the device’s phone number(s).

- READ_PHONE_STATE: Reads the phone’s state and identity, including phone features and data.

- READ_SMS: Allows the app to read SMS or MMS messages stored on the device or SIM card. Malicious apps could use this to read confidential messages.

- RECEIVE_SMS: Enables the app to receive and process SMS messages. Malicious apps could monitor or delete messages without showing them to the user.

- SEND_SMS: Allows the app to send SMS messages. Malicious apps could send messages without the user’s confirmation, potentially leading to financial costs.

Further analysis on the VirusTotal platform using the file's MD5 hash showed that 24 of 68 security vendors flagged this APK file as malicious; the graph represents the distribution of the malicious file in the environment.

Key Takeaways:

- Normal Permissions: Generally safe for accessing basic functionalities (network state, internet).

- Signature Permissions: May pose risks when misused, especially related to SMS broadcasts.

- Dangerous Permissions: Provide sensitive data access, such as phone numbers and device identity, which can be exploited by malicious apps.

- The dangerous permissions pose risks regarding the reading, receiving, and sending of SMS, which can lead to privacy breaches or financial consequences.
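The grouping above can be sketched as a small triage helper. This is an illustrative example and not the scanner used for the report: the protection levels are copied from the App Permission section, and the names `triage` and `can_exfiltrate_sms` are hypothetical. The second function encodes one assumption, namely that an app able to read or receive SMS and also reach the internet could forward OTPs off the device.

```python
# Protection levels as listed in the App Permission section of this report.
PROTECTION_LEVELS = {
    "ACCESS_NETWORK_STATE": "normal",
    "FOREGROUND_SERVICE": "normal",
    "FOREGROUND_SERVICE_DATA_SYNC": "normal",
    "INTERNET": "normal",
    "BROADCAST_SMS": "signature",
    "READ_PHONE_NUMBERS": "dangerous",
    "READ_PHONE_STATE": "dangerous",
    "READ_SMS": "dangerous",
    "RECEIVE_SMS": "dangerous",
    "SEND_SMS": "dangerous",
}


def triage(requested):
    """Group requested permissions by protection level; unlisted ones go to 'unknown'."""
    groups = {"normal": [], "signature": [], "dangerous": [], "unknown": []}
    for permission in requested:
        level = PROTECTION_LEVELS.get(permission, "unknown")
        groups[level].append(permission)
    return groups


def can_exfiltrate_sms(requested):
    """Assumed heuristic: SMS read/receive access plus internet access."""
    requested = set(requested)
    reads_sms = bool(requested & {"READ_SMS", "RECEIVE_SMS"})
    return reads_sms and "INTERNET" in requested


sample = list(PROTECTION_LEVELS)  # the permission set reported for this APK
print(len(triage(sample)["dangerous"]), can_exfiltrate_sms(sample))
```

For the permission set in this report, five permissions fall in the dangerous group and the SMS heuristic returns true, which is consistent with the OTP theft risk described earlier.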

How to Identify the Scam:

- Official Statement: SBI never asks clients to download unauthorized APKs for upgrades related to KYC or other services. All formal correspondence takes place via the SBI YONO app, which can be found in reputable app stores.

- No Immediate Threats: Bank correspondence never employs menacing language or issues harsh deadlines, such as "your account will be blocked today."

- Email Domain and SMS Number: Verified email addresses or phone numbers are used for official SBI correspondence. Generic, unauthorized numbers or addresses are frequently used in scams.

- Links and APK Files: Steer clear of downloading APK files from unreliable sources at all times. For app downloads, visit the Apple App Store or Google Play Store instead.
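The checks above can be approximated with a simple text heuristic. The sketch below is a hypothetical illustration, not a production filter: the phrase list is taken from the scam message quoted in this report, and the function name `red_flags` is invented for the example. Real scam messages vary their wording, so an empty result does not mean a message is safe.

```python
import re

# Phrases quoted from the scam message described in this report.
URGENCY_PHRASES = ("urgently required", "will be blocked today")
# Any token ending in ".apk", such as a file name or a download link.
APK_REFERENCE = re.compile(r"\S+\.apk\b", re.IGNORECASE)


def red_flags(message):
    """Return a list of warning signs found in a message (empty if none)."""
    text = message.lower()
    flags = []
    if any(phrase in text for phrase in URGENCY_PHRASES):
        flags.append("urgent or threatening language")
    if APK_REFERENCE.search(message):
        flags.append("APK file or link")
    if "kyc" in text or "pan card" in text:
        flags.append("KYC or PAN pretext")
    return flags


scam = "URGENTLY REQUIRED: Your account will be blocked today. Install SBI SELF KYC.apk"
print(red_flags(scam))
```

A message that trips several of these checks at once matches the pattern described in this report and is best verified through the bank's official channels before acting on it.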

CyberPeace Advisory:

- The Research team recommends that people should avoid opening such messages sent via social platforms. One must always think before clicking on such links, or downloading any attachments from unauthorised sources.

- Downloading any application from any third party sources instead of the official app store should be avoided. This will greatly reduce the risk of downloading a malicious app, as official app stores have strict guidelines for app developers and review each app before it gets published on the store.

- Even if you download the application from an authorised source, check the app's permissions before you install it. Some malicious apps may request access to sensitive information or resources on your device. If an app is asking for too many permissions, it's best to avoid it.

- Keep your device and the app-store app up to date. This will ensure that you have the latest security updates and bug fixes.

- Falling into such a trap could result in a complete compromise of the system, including access to sensitive information such as microphone recordings, camera footage, text messages, contacts, pictures, videos, and even banking applications and could lead users to financial loss.

- Do not share confidential details such as credentials or banking information in response to phishing messages of this kind.

- Never share or forward fake messages containing links on any social platform without proper verification.

Conclusion:

Fake APK phishing scams are targeting customers of financial institutions more and more often. This report outlines safety steps for SBI customers and ways to spot and steer clear of these cons. Keep in mind that legitimate banks never ask you to get an APK from shady websites or threaten to close your account right away. To stay safe, use SBI's official YONO app on both Android and iOS, and get apps from trusted places like Google Play or the Apple App Store. Check that the information is true before you act, turn on 2FA for all your bank and money accounts, and tell SBI or your local cyber police about any scams you see.

Executive Summary:

Recently, there has been a massive amount of fake news about India’s standing in the United Nations Security Council (UNSC), including claims of a veto. This report, compiled by the CyberPeace Research Wing, examines the provenance and credibility of the information and debunks it. Neither the UN nor any relevant body has released information about permanent UNSC membership for India, although India has made notable progress toward this strategic goal.

Claims:

Viral posts claim that India has become the first-ever unanimously voted permanent and veto-holding member of the United Nations Security Council (UNSC). Those posts also claim that this was achieved through overwhelming international support, granting India the same standing as the current permanent members.

Factcheck:

The CyberPeace Research Team ran a thorough keyword search on the official UNSC website and its associated social media profiles; there are presently no official announcements declaring India a permanent member of the UNSC. India is not a permanent member, and the five permanent members (China, France, Russia, the United Kingdom, and the USA) still hold veto power. Furthermore, India, along with Brazil, Germany, and Japan (the G4 nations), has proposed reform of the UNSC; yet no formal resolution has been adopted to alter the status quo of permanent membership. We then used tools such as Google Fact Check Explorer to examine these viral claims and found several debunking articles published by other fact-checking organizations.

The viral claims also lack credible sources or authenticated references from international institutions, further discrediting the claims. Hence, the claims made by several users on social media about India becoming the first-ever unanimously voted permanent and veto-holding member of the UNSC are misleading and fake.

Conclusion:

The viral claim that India has become a permanent member of the UNSC with veto power is entirely false. India, along with the non-permanent members, advocates a restructuring of the UN Security Council. However, there have been no official or formal declarations or commitments to alter the composition of the permanent members or their powers to date. Social media users are advised to rely on verified sources for information and refrain from spreading unsubstantiated claims that contribute to misinformation.

- Claim: India’s Permanent Membership in UNSC.

- Claimed On: YouTube, LinkedIn, Facebook, X (Formerly Known As Twitter)

- Fact Check: Fake & Misleading.

Introduction

In the age of social media, news can spread like wildfire. A recent viral message claimed that police have started a nationwide free travel service for women at night. It stated that any woman who is alone and cannot find a vehicle to go home between 10 PM and 6 AM can contact the provided numbers and request a free vehicle. The message also asked readers to share and forward it to everyone so that women would learn about the free vehicle service offered by police at night. However, a fact check found the claim to be misleading.

Social Impact of Misleading Information

Such misleading information goes viral quickly because it influences people through emotional resonance. At a time when women's safety is widely discussed in the media following recently highlighted incidents of rape or sexual violence, fake viral claims like this often spark widespread public concern, and people unknowingly share or forward them on the strength of their emotional and sensational appeal. The emotional nature of these viral texts often overrides scepticism, leading to immediate sharing without verification.

Viral messages of this kind often move people to protest, raise awareness and create support networks, but that same emotional resonance makes people targets of misinformation and the unintended superspreaders of fake news, fuelled by emotional, social media-driven reactions. Women’s safety is a sensitive topic, and when people discover that such viral claims are misleading and fake, it often hurts public sentiment, leading to significant social impacts, including distrust in social media, unnecessary panic and confusion.

CyberPeace Policy Vertical Advisory for Social Media Users

- Think before Sharing: All netizens must practice caution while sharing anything and double-check its authenticity before sharing/forwarding or reposting it on your social media stories.

- Don't be unintended superspreaders of Misinformation: Misinformation with emotional resonance and widespread sharing by netizens can lead to them becoming "superspreaders of misinformation" and making it viral quickly. Hence you must avoid such unintended consequences by following the best practices of being vigilant and informed by reliable sources.

- Exercise vigilance and scepticism: It is important that netizens exercise vigilance and build the cognitive abilities to recognise the red flags of misleading information. You can do so by following the official communication channels, looking for any discrepancy in the content of suspicious information and double-checking its authenticity before sharing it with anyone.

- Verify the information from official sources: Follow the official communication channels of concerned authorities for any kind of information, circulars, notifications etc. If you find any piece of information to be suspicious or misleading, report it to the relevant authority and the fact-checking organizations.

- Stay in touch with expert organizations: Cybersecurity experts and civil society organisations possess the unique blend of large-scale impact potential and technical expertise. Netizens can stay updated about recent developments in the tech-policy sphere and learn about internet best practices, and measures to counter misinformation through methods such as prebunking, debunking and more.

Connect with CyberPeace

As an expert organisation, we have the ability to educate and empower huge numbers, along with the skills and policy acumen needed to be able to not just make people aware of the problem but also teach them how to solve it for themselves. At CyberPeace we regularly produce fact-check reports, blogs & advisories, and insights on prebunking & debunking measures and capacity-building programs with the aim of empowering netizens at the heart of our initiatives. CyberPeace has established the largest network of CyberPeace Corps volunteers globally. These volunteers play a crucial role in assisting victims, raising awareness, and promoting proactive measures.


Executive Summary:

A viral online video claims Canadian Prime Minister Justin Trudeau promotes an investment project. However, the CyberPeace Research Team has confirmed that the video is a deepfake, created using AI technology to manipulate Trudeau's facial expressions and voice. The original footage has no connection to any investment project. The claim that Justin Trudeau endorses this project is false and misleading.

Claims:

A viral video falsely claims that Canadian Prime Minister Justin Trudeau is endorsing an investment project.

Fact Check:

Upon receiving the viral posts, we conducted a Google Lens search on the keyframes of the video. The search led us to various legitimate sources featuring Prime Minister Justin Trudeau, none of which included promotion of any investment projects. The viral video exhibited signs of digital manipulation, prompting a deeper investigation.

We used AI detection tools, such as TrueMedia, to analyze the video. The analysis confirmed with 99.8% confidence that the video was a deepfake. The tools identified "substantial evidence of manipulation," particularly in the facial movements and voice, which were found to be artificially generated.

Additionally, an extensive review of official statements and interviews with Prime Minister Trudeau revealed no mention of any such investment project. No credible reports were found linking Trudeau to this promotion, further confirming the video’s inauthenticity.

Conclusion:

The viral video claiming that Justin Trudeau promotes an investment project is a deepfake. Research using tools such as Google Lens and an AI detection tool confirms that the video was manipulated using AI technology. Additionally, no official source carries any information about such a project. Thus, the CyberPeace Research Team confirms that the video was manipulated using AI technology, making the claim false and misleading.

- Claim: A video of Justin Trudeau promoting an investment project is viral on social media.

- Claimed on: Facebook

- Fact Check: False & Misleading

Executive Summary:

A viral video has circulated on social media, wrongly presented as showing lawbreakers surrendering to the Indian Army. However, our verification shows that the video is of a group surrendering to the Bangladesh Army and is not related to India. The claim that it is related to the Indian Army is false and misleading.

Claims:

A viral video falsely claims that a group of lawbreakers is surrendering to the Indian Army, linking the footage to recent events in India.

Fact Check:

Upon receiving the viral posts, we analysed the keyframes of the video through Google Lens search. The search directed us to credible news sources in Bangladesh, which confirmed that the video was filmed during a surrender event involving criminals in Bangladesh, not India.

We further verified the video by cross-referencing it with official military and news reports from India. None of the sources supported the claim that the video involved the Indian Army. Instead, the video matched similar coverage of the event by another Bangladeshi media outlet.

We found no coverage of the video in any credible Indian news outlet. The viral video was clearly taken out of context and misrepresented to mislead viewers.

Conclusion:

The viral video claiming to show lawbreakers surrendering to the Indian Army is footage from Bangladesh. The CyberPeace Research Team confirms that the video is falsely attributed to India, making the claim false and misleading.

- Claim: The video shows miscreants surrendering to the Indian Army.

- Claimed on: Facebook, X, YouTube

- Fact Check: False & Misleading

Executive Summary:

A video of a child covered in ash is circulating as alleged evidence of attacks against Hindu minorities in Bangladesh. However, the investigation revealed that the video is actually from Gaza, Palestine, and was filmed following an Israeli airstrike in July 2024. The claim linking the video to Bangladesh is false and misleading.

Claims:

A viral video claims to show a child in Bangladesh covered in ash as evidence of attacks on Hindu minorities.

Fact Check:

Upon receiving the viral posts, we conducted a Google Lens search on keyframes of the video, which led us to an X post by Quds News Network. The post identified the video as footage from Gaza, Palestine, specifically capturing the aftermath of an Israeli airstrike on the Nuseirat refugee camp in July 2024.

The caption of the post reads, “Journalist Hani Mahmoud reports on the deadly Israeli attack yesterday which targeted a UN school in Nuseirat, killing at least 17 people who were sheltering inside and injuring many more.”

To further verify, we examined the video footage, in which the watermark of Al Jazeera could be seen. We found the same video posted on Instagram on 14 July 2024, which confirmed that the child in the video had survived a massacre caused by the Israeli airstrike on a school shelter in Gaza.

Additionally, we found the same video uploaded to CBS News' YouTube channel, where it was clearly captioned as "Video captures aftermath of Israeli airstrike in Gaza", further confirming its true origin.

We found no credible reports or evidence linking this video to any incident in Bangladesh. This clearly shows that the viral video was falsely attributed to Bangladesh.

Conclusion:

The claim that the video circulating on social media shows a child covered in ash as evidence of attacks against Hindu minorities is false and misleading. The investigation found that the video originated in Gaza, Palestine, and documents the aftermath of an Israeli airstrike in July 2024.

- Claim: A video shows a child in Bangladesh covered in ash as evidence of attacks on Hindu minorities.

- Claimed on: Facebook

- Fact Check: False & Misleading

Executive Summary:

A viral picture on social media showing UK police officers bowing to a group of Muslim people has led to debates and discussions. The investigation by the CyberPeace Research Team found that the image is AI-generated. The viral claim is false and misleading.

Claims:

A viral image on social media purportedly depicts UK police officers bowing to a group of Muslim people on the street.

Fact Check:

We conducted a reverse image search on the viral image. It did not lead to any credible news source or original post that supported the authenticity of the image. In our analysis of the image, we found a number of anomalies that are typical of AI-generated images, including in the uniforms and facial expressions of the police officers. In addition, the shadows and reflections on the officers' uniforms did not match the lighting of the scene, and the facial features of the individuals in the image appeared unnaturally smooth and lacked the detail expected in real photographs.

We then analysed the image using the AI detection tool TrueMedia. The tool indicated that the image was highly likely to have been generated by AI.

We also checked official UK police channels and news outlets for any records or reports of such an event. No credible sources reported or documented any instance of UK police officers bowing to a group of Muslims, further confirming that the image is not based on a real event.

Conclusion:

The viral image of UK police officers bowing to a group of Muslims is AI-generated. CyberPeace Research Team confirms that the picture was artificially created, and the viral claim is misleading and false.

- Claim: UK police officers were photographed bowing to a group of Muslims.

- Claimed on: X, Website

- Fact Check: Fake & Misleading