#FactCheck - Edited Video Falsely Claims to Show an Attack on PM Netanyahu in the Israeli Senate

Executive Summary:

A video circulating online claims to show an attack on Prime Minister Benjamin Netanyahu in the Israeli Senate. However, the CyberPeace Research Team has confirmed that the video is fake: it was created with video editing tools by merging two unrelated clips into one and attaching a false claim. The original footage has no connection to any attack on Mr. Netanyahu. The claim is therefore false and misleading.

Claims:

A viral video claims to show an attack on Prime Minister Benjamin Netanyahu in the Israeli Senate.

Fact Check:

Upon receiving the viral posts, we conducted a reverse image search on keyframes of the video. The search led us to various legitimate sources showing an attack on an ethnic Turkish leader in Bulgaria. None of them showed any attack on Prime Minister Benjamin Netanyahu.

We also analyzed the video with AI detection tools such as TrueMedia.org. The analysis indicated with 68.0% confidence that the video had been edited. The tools identified "substantial evidence of manipulation," particularly in the change in graphics quality and in the break in the flow of the footage where the background environment changes.

Additionally, an extensive review of official statements from the Knesset revealed no mention of any such incident. We found no credible reports linking the Israeli PM to such an attack, further confirming the video’s inauthenticity.

Conclusion:

The viral video claiming to show an attack on Prime Minister Netanyahu is an old video that has been edited. Analysis with AI detection tools indicates that the footage was manipulated, and no official source mentions such an incident. Thus, the CyberPeace Research Team confirms that the video was manipulated using video editing technology, making the claim false and misleading.

- Claim: Attack on Prime Minister Netanyahu in the Israeli Senate

- Claimed on: Facebook, Instagram and X (formerly Twitter)

- Fact Check: False & Misleading

Related Blogs

Overview of the India-UK Joint Tech Security Initiative

India and the UK have been deepening their technological and security ties through various initiatives and agreements. One of the key developments in this partnership is the India-UK Joint Tech Security Initiative, which focuses on enhancing collaboration in areas like cybersecurity, artificial intelligence (AI), telecommunications, and critical technologies. Building upon the bilateral cooperation agenda set out in the India-UK Roadmap 2030, which seeks to bolster cooperation across various sectors, including trade, climate change, and defense, the UK and India launched the Joint Tech Security Initiative (TSI) on July 24, 2024. This initiative will prioritise collaboration in critical and emerging technologies across priority sectors. Coordinating with the national security agencies of both countries, the TSI will set priority areas and identify interdependencies for cooperation on critical and emerging technologies. This, in turn, will help build meaningful technology value chain partnerships between India and the UK.

The TSI will be coordinated by the National Security Advisors (NSAs) of both countries through existing and new dialogues. The NSAs will set priority areas and identify interdependencies for cooperation on critical and emerging tech, helping build meaningful technology value chain partnerships between the two countries. Progress made on the initiative will be reviewed on a half-yearly basis at the Deputy NSA level. A bilateral mechanism, led by India's Ministry of External Affairs and the UK government, will be established to promote trade in critical and emerging technologies, including the resolution of relevant licensing or regulatory issues. Both countries view the TSI as a platform and a strong signal of intent to build and grow sustainable and tangible partnerships across priority tech sectors. They will explore how to build a deeper strategic partnership between UK and Indian research and technology centres and incubators, enhance cooperation across UK and India tech and innovation ecosystems, and create a channel for industry and academia to help shape the TSI.

The UK and India are launching new bilateral initiatives to expand and deepen their technology security partnership. These initiatives will focus on various domains, including telecoms, critical minerals, semiconductors, and energy security.

In telecoms, the UK and India will build a new Future Telecoms Partnership, focusing on joint research on future telecoms, open RAN systems, testbed linkups, telecoms security, spectrum innovation, and software and systems architecture. This will include collaboration between the UK's SONIC Labs, India's Centre for Development of Telematics (C-DOT), and DoT's Telecoms Startup Mission.

In critical minerals, the UK and India will expand their collaboration, working together to improve supply chain resilience, explore possible research and development and technology partnerships along the complete critical minerals value chain, and share best practices on ESG standards. They will draw up a roadmap for cooperation and establish a UK-India ‘critical minerals’ community of academics, innovators, and industry.

Key Areas of Collaboration:

- Strengthening cybersecurity defense and enhancing resilience through joint cybersecurity exercises, information-sharing, and the development of common standards and best practices, in collaboration with the respective national agencies, i.e., CERT-In and NCSC.

- Promotion of ethical AI development and deployment with AI ethics guidelines and frameworks, and efforts encouraging academic collaborations. Support for new partnerships between UK and Indian research organizations alongside existing joint programmes using AI to tackle global challenges.

- Building secure and resilient telecom infrastructure with a focus on security, exchange of expertise, and regulatory cooperation. Collaboration on Open Radio Access Network (Open RAN) technology is one example.

- Critical and emerging technologies development by advancing research and innovation in quantum, semiconductors, and biotechnology. Promoting and investing in tech startups and innovation ecosystems. Engaging in policy dialogues on tech governance and standards.

- Digital economy and trade facilitation to promote economic growth by enhancing the relevant frameworks and agreements. Collaborating on digital payment systems and fintech solutions and, most importantly, promoting data protection and privacy standards.

Outlook and Impact on the Industry

The initiative sets out a new approach for how the UK and India work together on the defining technologies of this decade. These include areas such as telecoms, critical minerals, AI, quantum, health/biotechnology, advanced materials and semiconductors. While the initiative looks promising, several challenges need to be addressed, such as putting robust regulatory frameworks in place and developing a balanced approach to data privacy and information exchange in cross-border data flows. It is imperative to put mechanisms in place that protect intellectual property without hampering technology transfer. Above all, geopolitical risks need to be navigated in a way that reduces tensions and lets a stable partnership grow. The Initiative builds on a series of partnerships between India and the UK, as well as between industry and academia. A bilateral mechanism, led by India’s Ministry of External Affairs and the UK government, will promote trade in critical and emerging technologies, including the resolution of relevant licensing or regulatory issues.

Conclusion

This initiative, at its core, will drive forward a bilateral partnership that is framed on boosting economic growth and deepening cooperation across key issues including trade, technology, education, culture and climate. By combining their strengths, the UK and India are poised to create a robust framework for technological innovation and security that could serve as a model for international cooperation in tech.

References

- https://www.hindustantimes.com/india-news/india-uk-launch-joint-tech-security-initiative-101721876539784.html

- https://www.gov.uk/government/publications/uk-india-technology-security-initiative-factsheet/uk-india-technology-security-initiative-factsheet

- https://www.business-standard.com/economy/news/india-uk-unveil-futuristic-technology-security-initiative-to-seal-fta-soon-124072500014_1.htm

- https://bharatshakti.in/india-uk-technology-security-initiative/

Executive Summary:

On 20th May, 2024, Iranian President Ebrahim Raisi and several others were confirmed dead following a helicopter crash in northwestern Iran. Images circulated on social media claiming to show the crash site have been found to be false. The CyberPeace Research Team’s investigation revealed that these images show the wreckage of a training plane crash in Iran's Mazandaran province in 2019 or 2020. Reverse image searches and reports from Tehran-based Rokna Press and Ten News verified that the viral images originated from an incident involving a police force's two-seater training plane, not the recent helicopter crash.

Claims:

The images circulating on social media claim to show the site of Iranian President Ebrahim Raisi's helicopter crash.

Fact Check:

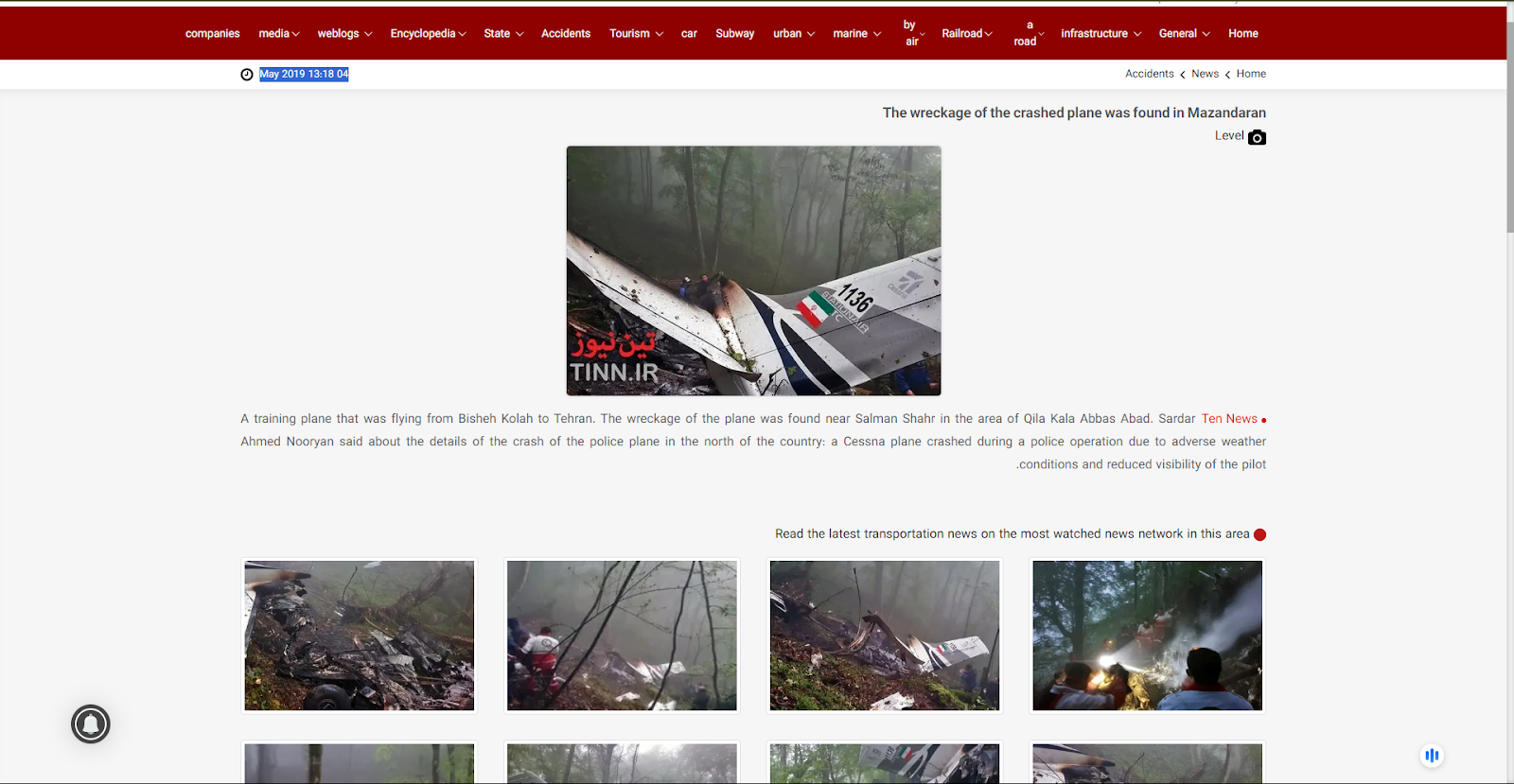

After receiving the posts, we ran a reverse image search on each of the images and traced them to a 2020 air crash incident, except for the image of the blue plane that appears in the viral post. We found a website that had published the viral plane crash images on April 22, 2020.

According to the website, a police training plane crashed in the forests of Mazandaran, Swan Motel. We also found the images on another Iranian news outlet, ‘Ten News’.

The photos on this website were posted in May 2019. The report reads, “A training plane that was flying from Bisheh Kolah to Tehran. The wreckage of the plane was found near Salman Shahr in the area of Qila Kala Abbas Abad.”

Hence, we concluded that the viral photos do not show Iranian President Ebrahim Raisi's helicopter crash. The claim is false and misleading.

Conclusion:

The images being shared on social media as evidence of the helicopter crash involving Iranian President Ebrahim Raisi are misattributed. They actually show the aftermath of a training plane crash in Mazandaran province; the two sources date it differently, to 2019 or 2020. This has been confirmed through reverse image searches that traced the images back to their original publication by Rokna Press and Ten News. Consequently, the claim that these images are from the site of President Ebrahim Raisi's helicopter crash is false and misleading.

- Claim: Viral images of Iranian President Raisi's fatal chopper crash.

- Claimed on: X (Formerly known as Twitter), YouTube, Instagram

- Fact Check: Fake & Misleading

Introduction

Attackers recently exploited the CVE-2017-0199 vulnerability in Microsoft Office to deliver a fileless variant of the Remcos RAT. Remcos RAT gives the attacker full control of infected systems. This report gives a detailed technical description of the vulnerability, the attack vector, and the tactics used, together with practical steps to counter the identified risks.

The Targeted Malware: Remcos RAT

Remcos RAT (Remote Control & Surveillance) is a commercially available remote access tool designed for legitimate administrative use. However, it has been widely adopted by cybercriminals for its stealth and extensive control capabilities, enabling:

- System control and monitoring

- Keylogging

- Data exfiltration

- Execution of arbitrary commands

The fileless variant utilised in this campaign makes detection even more challenging by running entirely in system memory, leaving minimal forensic traces.

Attack Vector: Phishing with Malicious Excel Attachments

The attack begins with a phishing email that appears to be legitimate business communication, such as a purchase order or invoice. The email contains an Excel attachment that is weaponized to exploit the CVE-2017-0199 vulnerability.

Technical Analysis: CVE-2017-0199 Exploitation

Vulnerability Assessment

- CVE-2017-0199 is a Remote Code Execution (RCE) vulnerability in Microsoft Office that is exploited through Object Linking and Embedding (OLE) objects.

- Affected Components:

  - Microsoft Word

  - Microsoft Excel

  - WordPad

- CVSS Score: 7.8 (High Severity)
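To show how the "High Severity" label follows from the score, here is a minimal sketch that maps a CVSS v3.x base score to its qualitative rating band. It assumes the 7.8 above is a v3.x base score; the function name `cvss_v3_severity` is our own, chosen only for illustration.

```python
def cvss_v3_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score <= 3.9:
        return "Low"
    if score <= 6.9:
        return "Medium"
    if score <= 8.9:
        return "High"
    return "Critical"


# The score quoted for CVE-2017-0199 falls in the 7.0 to 8.9 band.
print(cvss_v3_severity(7.8))
```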

Mechanism of Exploitation

The vulnerability enables attackers to craft a malicious document that, when opened, fetches and executes an external payload via an HTML Application (HTA) file. The execution process occurs without requiring user interaction beyond opening the document.
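As a triage aid, the sketch below lists external link targets declared in an Office Open XML package (.xlsx, .docx), since a document that reaches out to a remote server has to reference that server somewhere. This is an illustration under assumptions, not a description of how this campaign's document embeds its link: it only covers OOXML packages (legacy binary and RTF files need other tooling), and the sample package, the helper names and the `example.invalid` URL are all made up for the demonstration.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

# Namespace used by OOXML relationship (.rels) parts.
REL_NS = "{http://schemas.openxmlformats.org/package/2006/relationships}"


def external_targets(package_bytes: bytes) -> list[tuple[str, str]]:
    """Return (part name, target) for every external relationship in the package."""
    found = []
    with zipfile.ZipFile(io.BytesIO(package_bytes)) as pkg:
        for name in pkg.namelist():
            if not name.endswith(".rels"):
                continue
            root = ET.fromstring(pkg.read(name))
            for rel in root.iter(REL_NS + "Relationship"):
                if rel.get("TargetMode") == "External":
                    found.append((name, rel.get("Target", "")))
    return found


def build_sample() -> bytes:
    """Build a tiny synthetic package holding one external link (placeholder URL)."""
    rels = (
        '<?xml version="1.0" encoding="UTF-8"?>'
        '<Relationships xmlns="http://schemas.openxmlformats.org/package/2006/relationships">'
        '<Relationship Id="rId1" '
        'Type="http://schemas.openxmlformats.org/officeDocument/2006/relationships/oleObject" '
        'Target="http://example.invalid/payload.hta" TargetMode="External"/>'
        "</Relationships>"
    )
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as z:
        z.writestr("xl/worksheets/_rels/sheet1.xml.rels", rels)
    return buf.getvalue()


for part, target in external_targets(build_sample()):
    print(part, "->", target)
```

An external target is not malicious by itself, as many legitimate workbooks link to remote data, so the output is best treated as a list of things to review.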

Detailed Exploitation Steps

- Phishing Email and Malicious Document

  - The email contains an Excel file designed to exploit CVE-2017-0199.

  - When the attachment is opened, the document automatically connects to a remote server (e.g., 192.3.220[.]22) to download an HTA file (cookienetbookinetcache.hta).

- Execution via mshta.exe

  - The downloaded HTA file is executed using mshta.exe, a legitimate Windows process for running HTML Applications.

  - This execution is seamless and does not prompt the user, making the attack stealthy.

- Multi-Layer Obfuscation

  - The HTA file is wrapped in several layers of scripting, including:

    - JavaScript

    - VBScript

    - PowerShell

  - This obfuscation helps evade static analysis by traditional antivirus solutions.

- Fileless Payload Deployment

  - The downloaded executable leverages process hollowing to inject malicious code into legitimate system processes.

  - The Remcos RAT payload is loaded directly into memory, avoiding the creation of files on disk.
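The server address in the steps above is written in "defanged" form (`192.3.220[.]22`) so that it cannot be clicked or resolved by accident. Before searching logs for it, the indicator has to be converted back. The following is a minimal sketch of that round trip; the helper names and the sample log lines are hypothetical and are not taken from the campaign.

```python
import re


def refang(indicator: str) -> str:
    """Turn a defanged indicator such as 192.3.220[.]22 back into its literal form."""
    out = indicator.replace("[.]", ".").replace("(.)", ".").replace("[:]", ":")
    return re.sub(r"^hxxp", "http", out, flags=re.IGNORECASE)


def defang(indicator: str) -> str:
    """Make an indicator safe to paste into reports and tickets."""
    out = indicator.replace(".", "[.]")
    return re.sub(r"^http", "hxxp", out, flags=re.IGNORECASE)


def lines_with_indicator(log_lines, defanged_indicator):
    """Return the log lines that mention the indicator as a whole token."""
    needle = refang(defanged_indicator)
    # The lookarounds stop 192.3.220.22 from matching inside 192.3.220.221.
    pattern = re.compile(r"(?<![\d.])" + re.escape(needle) + r"(?!\d)")
    return [line for line in log_lines if pattern.search(line)]


# Hypothetical proxy log lines, for illustration only.
sample_log = [
    "GET http://192.3.220.22/file.hta 200",
    "GET http://192.3.220.221/index.html 200",
    "GET http://10.0.0.1/health 200",
]
print(lines_with_indicator(sample_log, "192.3.220[.]22"))
```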

Fileless Malware Techniques

1. Process Hollowing

The attack replaces the memory of a legitimate process (e.g., explorer.exe) with the malicious Remcos RAT payload. This allows the malware to:

- Evade detection by blending into normal system activity.

- Run with the privileges of the hijacked process.

2. Anti-Analysis Techniques

- Anti-Debugging: Detects the presence of debugging tools and terminates malicious processes if found.

- Anti-VM and Sandbox Evasion: Ensures execution only on real systems to avoid detection during security analysis.

3. In-Memory Execution

- By running entirely in system memory, the malware avoids leaving artifacts on the disk, making forensic analysis and detection more challenging.

Capabilities of Remcos RAT

Once deployed, Remcos RAT provides attackers with a comprehensive suite of functionalities, including:

- Data Exfiltration:

  - Stealing system information, files, and credentials.

- Remote Execution:

  - Running arbitrary commands, scripts, and additional payloads.

- Surveillance:

  - Enabling the camera and microphone.

  - Capturing screen activity and clipboard contents.

- System Manipulation:

  - Modifying Windows Registry entries.

  - Controlling system services and processes.

  - Disabling user input devices (keyboard and mouse).

Advanced Phishing Techniques in Parallel Campaigns

1. DocuSign Abuse

Attackers exploit legitimate DocuSign APIs to create authentic-looking phishing invoices. These invoices can trick users into authorising payments or signing malicious documents, bypassing traditional email security systems.

2. ZIP File Concatenation

By appending multiple ZIP archives into a single file, attackers exploit inconsistencies in how different tools handle these files. This allows them to embed malware that evades detection by certain archive managers.
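One rough way to spot a concatenated archive is to count End of Central Directory (EOCD) records, since a normal ZIP file has exactly one. The sketch below builds two small archives in memory, joins them, and counts the EOCD signature. It is a heuristic only: the four-byte signature can also occur by chance inside compressed data, and the file names used here are invented for the example.

```python
import io
import zipfile

EOCD_SIGNATURE = b"PK\x05\x06"  # marks an End of Central Directory record


def make_zip(name: str, data: bytes) -> bytes:
    """Create a one-file ZIP archive in memory (stored, not compressed)."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_STORED) as z:
        z.writestr(name, data)
    return buf.getvalue()


def count_eocd_records(blob: bytes) -> int:
    """Count EOCD signatures; more than one suggests concatenated archives."""
    return blob.count(EOCD_SIGNATURE)


first = make_zip("invoice.txt", b"benign content")
second = make_zip("hidden.txt", b"second archive")
combined = first + second

# Python's zipfile locates the central directory from the end of the file,
# so it lists the members of the last archive in the concatenation.
with zipfile.ZipFile(io.BytesIO(combined)) as z:
    visible = z.namelist()

print("EOCD records:", count_eocd_records(combined))
print("Members seen by zipfile:", visible)
```

A reader that starts from the front of the file may show the first archive instead, which is the kind of inconsistency the technique relies on.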

Broader Implications of Fileless Malware

Fileless malware like Remcos RAT poses significant challenges:

- Detection Difficulties: Traditional signature-based antivirus systems struggle to detect fileless malware, as there are no static files to scan.

- Forensic Limitations: The lack of disk artifacts complicates post-incident analysis, making it harder to trace the attack's origin and scope.

- Increased Sophistication: These campaigns demonstrate the growing technical prowess of cybercriminals, leveraging legitimate tools and services for malicious purposes.

Mitigation Strategies

- Patch Management

  - It is important to regularly update software to address known vulnerabilities like CVE-2017-0199. Microsoft released a patch for this vulnerability in April 2017.

- Advanced Email Security

  - It is important to implement email filtering solutions that can detect phishing attempts, even those using legitimate services like DocuSign.

- Endpoint Detection and Response (EDR)

  - Always use EDR solutions to monitor for suspicious behavior, such as unauthorized use of mshta.exe or process hollowing.

- User Awareness and Training

  - Educate users about phishing techniques and the risks of opening unexpected attachments.

- Behavioral Analysis

  - Deploy security solutions capable of detecting anomalous activity, even if no malicious files are present.
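To make the mshta.exe monitoring idea concrete, here is a minimal sketch of two behavioral heuristics over process-creation events: a script host launched by an Office application, and mshta.exe started with a remote URL on its command line. The event structure, field names and sample events are assumptions for illustration and do not correspond to any particular EDR product. The parent process can also differ between exploitation paths, so a rule like this is a starting point, not complete coverage.

```python
import re
from dataclasses import dataclass

OFFICE_PARENTS = {"excel.exe", "winword.exe", "wordpad.exe"}
SCRIPT_HOSTS = {"mshta.exe", "powershell.exe", "wscript.exe", "cscript.exe"}
REMOTE_URL = re.compile(r"https?://", re.IGNORECASE)


@dataclass
class ProcessEvent:
    """Hypothetical process-creation record (fields chosen for this sketch)."""
    parent_image: str
    image: str
    command_line: str


def basename(path: str) -> str:
    """Lower-cased file name of a Windows or POSIX style path."""
    return path.replace("/", "\\").rsplit("\\", 1)[-1].lower()


def is_suspicious(event: ProcessEvent) -> bool:
    child = basename(event.image)
    # Heuristic 1: an Office application spawning a script host.
    if child in SCRIPT_HOSTS and basename(event.parent_image) in OFFICE_PARENTS:
        return True
    # Heuristic 2: mshta.exe pointed at a remote URL, whatever its parent.
    return child == "mshta.exe" and REMOTE_URL.search(event.command_line) is not None


events = [
    ProcessEvent(r"C:\Program Files\Microsoft Office\EXCEL.EXE",
                 r"C:\Windows\System32\mshta.exe",
                 "mshta.exe http://example.invalid/a.hta"),
    ProcessEvent(r"C:\Windows\explorer.exe",
                 r"C:\Windows\System32\notepad.exe",
                 "notepad.exe notes.txt"),
    ProcessEvent(r"C:\Windows\System32\svchost.exe",
                 r"C:\Windows\System32\mshta.exe",
                 "mshta.exe https://example.invalid/b.hta"),
]
flagged = [e for e in events if is_suspicious(e)]
print(len(flagged), "of", len(events), "events flagged")
```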

Conclusion

The attack via CVE-2017-0199 delivered a new fileless variant of Remcos RAT, showing how threats are becoming more and more sophisticated. Thanks to improved obfuscation and the absence of files on disk, the attackers can evade traditional antivirus protection and gain full control over infected computers. The threat is real, and organisations have to make sure that they apply patches on time, adopt better detection technologies, and keep users alert to these threats.

References

- Fortinet FortiGuard Labs: Analysis by Xiaopeng Zhang

- Perception Point: Research on ZIP File Concatenation

- Wallarm: DocuSign Phishing Analysis

- Microsoft Security Advisory: CVE-2017-0199