#FactCheck - Analysis Reveals AI-Generated Anomalies in Viral ‘Russia Snow Jump’ Video

Executive Summary

A dramatic video showing several people jumping from the upper floors of a building into what appears to be thick snow has been circulating on social media, with users claiming that it captures a real incident in Russia during heavy snowfall. In the footage, individuals can be seen leaping one after another from a multi-storey structure onto a snow-covered surface below, eliciting reactions ranging from amusement to concern. The claim accompanying the video suggests that it depicts a reckless real-life episode in a snow-hit region of Russia.

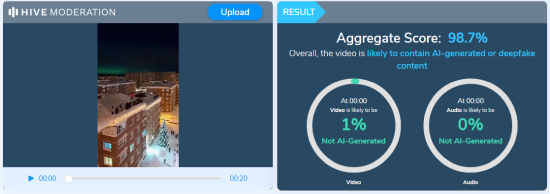

A thorough analysis by CyberPeace found that the video is not a real-world recording but an AI-generated creation. The footage exhibits multiple signs of synthetic media, including unnatural human movements, inconsistent physics, blurred or distorted edges, and a glossy, computer-rendered appearance. In some frames, a partial watermark from an AI video generation tool is visible. Further verification using the Hive Moderation AI-detection platform returned a 98.7% AI-generated result, supporting the conclusion that the clip is digitally created and does not depict any actual incident in Russia.

Claim:

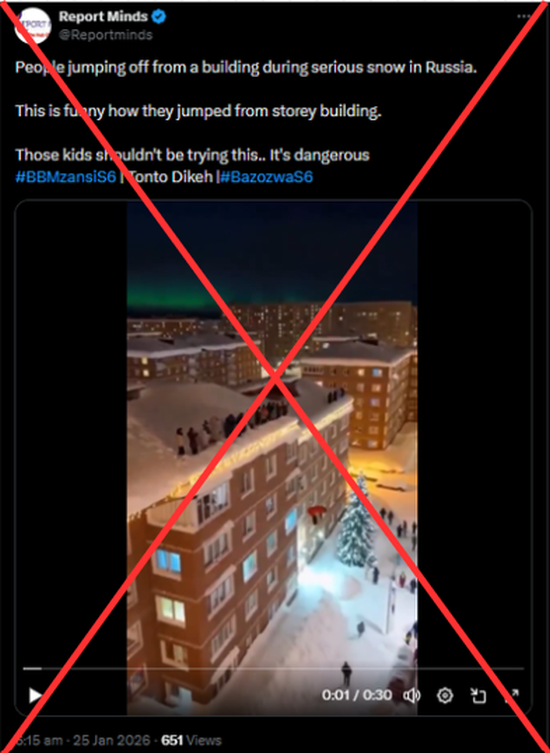

The video was shared on social media by an X (formerly Twitter) user ‘Report Minds’ on January 25, claiming it showed a real-life event in Russia. The post caption read: "People jumping off from a building during serious snow in Russia. This is funny, how they jumped from a storey building. Those kids shouldn't be trying this. It's dangerous." Here is the link to the post, and below is a screenshot.

Fact Check:

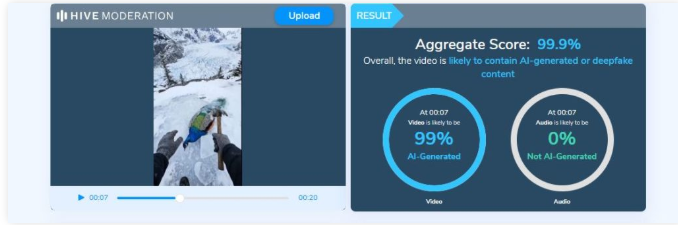

The Desk used the InVid tool to extract keyframes from the viral video and ran a reverse image search on them, which revealed multiple instances of the same video shared by other users with similar claims. On close visual examination, several anomalies were observed: unnatural human movements, blurred and distorted sections, a glossy, digitally rendered appearance, and a partially concealed logo of the AI video generation tool ‘Sora AI’ visible in certain frames. Screenshots highlighting these inconsistencies were captured during the research.

- https://x.com/DailyLoud/status/2015107152772297086?s=20

- https://x.com/75secondes/status/2015134928745164848?s=20
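For readers who want to reproduce the keyframe step without InVid, the sketch below shows one simple way to pick frames for a reverse image search. It is only an illustration and not how InVid itself selects keyframes: it samples evenly spaced timestamps and builds standard `ffmpeg` commands to export one still per timestamp. The function names, the file name `viral_clip.mp4`, and the output naming are hypothetical.

```python
import shlex
from typing import List


def keyframe_timestamps(duration_s: float, count: int) -> List[float]:
    """Return `count` timestamps in seconds, one at the midpoint of each equal segment."""
    if duration_s <= 0 or count <= 0:
        raise ValueError("duration_s and count must be positive")
    step = duration_s / count
    return [round(step * (i + 0.5), 3) for i in range(count)]


def ffmpeg_commands(video_path: str, timestamps: List[float]) -> List[str]:
    """Build one ffmpeg command per timestamp; each exports a single PNG frame."""
    commands = []
    for i, t in enumerate(timestamps, start=1):
        out_name = f"frame_{i:03d}.png"  # hypothetical output naming
        args = ["ffmpeg", "-ss", str(t), "-i", video_path, "-frames:v", "1", out_name]
        commands.append(shlex.join(args))
    return commands


if __name__ == "__main__":
    # Assumed example: a 10-second clip sampled at 4 points.
    for cmd in ffmpeg_commands("viral_clip.mp4", keyframe_timestamps(10.0, 4)):
        print(cmd)
```

The exported stills can then be uploaded by hand to any reverse image search engine. Uniform sampling is a simplification; short clips may need denser sampling to catch frames where a watermark is briefly visible.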

The video was also analyzed on Hive Moderation, an AI-detection platform, which returned a 98.7% AI-generated result.

Conclusion:

The viral video showing people jumping off a building into snow, claimed to depict a real incident in Russia, is entirely AI-generated. Social media users who shared it presented the digitally created footage as if it were real, making the claim false and misleading.