#FactCheck - "AI-Generated Image of UK Police Officers Bowing to Muslims Goes Viral"

Executive Summary:

A viral picture on social media showing UK police officers bowing to a group of Muslims has sparked debate and discussion. An investigation by the CyberPeace Research Team found that the image is AI-generated. The viral claim is false and misleading.

Claims:

A viral image on social media depicts UK police officers bowing to a group of Muslim people on the street.

Fact Check:

A reverse image search was conducted on the viral image. It did not lead to any credible news source or original post that confirmed the authenticity of the image. In our image analysis, we found a number of anomalies that are typical of AI-generated images, including in the uniforms and facial expressions of the police officers. In addition, the shadows and reflections on the officers' uniforms did not match the lighting of the scene, and the facial features of the individuals in the image appeared unnaturally smooth and lacked the detail expected in real photographs.

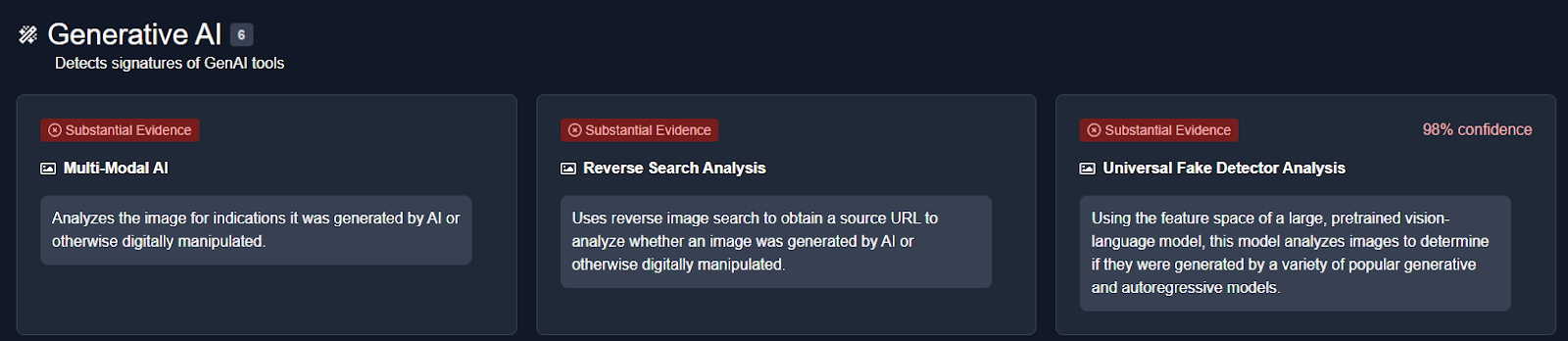

We then analysed the image using an AI detection tool named True Media. The tool indicated that the image was highly likely to have been generated by AI.

We also checked official UK police channels and news outlets for any records or reports of such an event. No credible sources reported or documented any instance of UK police officers bowing to a group of Muslims, further confirming that the image is not based on a real event.

Conclusion:

The viral image of UK police officers bowing to a group of Muslims is AI-generated. The CyberPeace Research Team confirms that the picture was artificially created and that the viral claim is false and misleading.

- Claim: UK police officers were photographed bowing to a group of Muslims.

- Claimed on: X, Website

- Fact Check: Fake & Misleading

Related Blogs

Introduction

The 2025 Delhi Legislative Assembly election is just around the corner, scheduled for February 5, 2025, with all 70 constituencies heading to the polls. The eagerly awaited results will be announced on February 8, bringing excitement as the people of Delhi prepare to see their chosen leader take the helm as Chief Minister. As the election season unfolds, social media becomes a buzzing hub of activity, with information spreading rapidly across platforms. However, this period also sees a surge in online mis/disinformation, making elections a hotspot for misleading content. It is crucial for citizens to exercise caution and remain vigilant against false or deceptive online posts, videos, or content. Empowering voters to distinguish facts from fiction and recognize the warning signs of misinformation is essential to ensure informed decision-making. By staying alert and well-informed, we can collectively safeguard the integrity of the democratic process.

Risks of Mis/Disinformation

According to the 2024 survey report titled ‘Truth Be Told’ by ‘The 23 Watts’, 90% of Delhi’s youth (Gen Z) report witnessing a spike in fake news during elections, and 91% believe it influences voting patterns. Furthermore, the research highlights that 14% of Delhi’s youth tend to share sensational news without fact-checking, relying solely on conjecture.

Recent Measures by ECI

Recently, the Election Commission of India (ECI) issued a fresh advisory to political parties to ensure responsible use of AI-generated content in their campaigns. The ECI has issued guidelines to curb the potential use of "deepfakes" and AI-generated distorted content by political parties and their representatives to disturb the level playing field. The ECI has mandated the labelling of all AI-generated content used in election campaigns to enhance transparency, combat misinformation, and ensure a fair electoral process in the face of rapidly advancing AI technologies.

Best Practices to Avoid Electoral Mis/Disinformation

- Seek Information from Official Sources: Voters should rely on authenticated sources for information. These include reading official manifestos, following verified advisory notifications from the Election Commission, and avoiding unverified claims or rumours.

- Consume News Responsibly: Voters must familiarize themselves with dependable news channels and make use of reputable fact-checking organizations that uphold the integrity of news content. It is crucial to refrain from randomly sharing or forwarding any news post, video, or message without verifying its authenticity. Consume responsibly, fact-check thoroughly, and share cautiously.

- Role of Fact-Checking: Cross-checking and verifying information from credible sources are indispensable practices. Reliable and trustworthy fact-checking tools are vital for assessing the authenticity of information in the digital space. Voters are encouraged to use these tools to validate information from authenticated sources and adopt a habit of verification on their own. This approach fosters a culture of critical thinking, empowering citizens to counter deceptive deepfakes and malicious misinformation effectively. It also helps create a more informed and resilient electorate.

- Be Aware of Electoral Deepfakes: In the era of artificial intelligence, synthetic media presents significant challenges. Just as videos can be manipulated, voices can also be cloned. It is essential to remain vigilant against the misuse of deepfake audio and video content by malicious actors. Recognize the warning signs, such as inconsistencies or unnatural details, and stay alert to misleading multimedia content. Proactively question and verify such material to avoid falling prey to deception.

References

- https://www.financialexpress.com/business/brandwagon-90-ofnbsp-delhi-youth-witness-spike-in-fake-news-during-elections-91-believe-it-influences-voting-patterns-revealed-the-23-watts-report-3483166/

- https://timesofindia.indiatimes.com/india/election-commission-urges-parties-to-disclose-ai-generated-campaign-content-in-interest-of-transparency/articleshow/117306865.cms

- https://www.thehindu.com/news/national/election-commission-issues-advisory-on-use-of-ai-in-poll-campaigning/article69103888.ece

- https://indiaai.gov.in/article/election-commission-of-india-embraces-ai-ethics-in-campaigning-advisory-on-labelling-ai-generated-content


Introduction

The rise of misinformation, disinformation, and synthetic media content on the internet and social media platforms has raised serious concerns, emphasizing the need for responsible use of social media to maintain information accuracy and combat misinformation incidents. With online misinformation rampant all over the world, the World Economic Forum's Global Risks Report 2024 notably ranks India amongst the highest in terms of risk of mis/disinformation.

Widespread online misinformation on social media platforms necessitates a joint effort between tech/social media platforms and the government to counter such incidents. The Indian government is actively seeking to collaborate with tech/social media platforms to foster a safe and trustworthy digital environment and to ensure compliance with intermediary rules and regulations. The Ministry of Information and Broadcasting has used ‘extraordinary powers’ to block certain YouTube channels and X (Twitter) and Facebook accounts allegedly used to spread harmful misinformation. The government has issued advisories regulating deepfakes and misinformation, and social media platforms have initiated efforts to implement algorithmic and technical improvements to counter misinformation and secure the information landscape.

Efforts by the Government and Social Media Platforms to Combat Misinformation

- Advisory regulating AI, deepfake and misinformation

The Ministry of Electronics and Information Technology (MeitY) issued a modified advisory on 15th March 2024, in supersession of the advisory issued on 1st March 2024. The latest advisory specifies that platforms should inform all users about the consequences of dealing with unlawful information on platforms, including disabling access, removing non-compliant information, suspension or termination of the user's access or usage rights to their user account, and punishment under applicable law. The advisory requires synthetically created content to be identified across various formats, and instructs platforms to employ labels, unique identifiers, or metadata to ensure transparency.

- Rules related to content regulation

The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 (Updated as on 6.4.2023) have been enacted under the IT Act, 2000. These rules impose specific obligations on intermediaries as to what kind of information may be hosted, displayed, uploaded, published, transmitted, stored or shared. The rules also require platforms to establish a grievance redressal mechanism and to remove unlawful content within stipulated time frames.

- Counteracting misinformation during Indian elections 2024

During the 2024 Indian general elections, the government and social media platforms worked to protect electoral integrity from the threat of mis/disinformation. The Election Commission of India (ECI) launched the 'Myth vs Reality Register' to combat misinformation and to ensure the integrity of the electoral process. The ECI also collaborated with Google to empower the citizenry by making it easy to find critical voting information on Google Search and YouTube. In this way, Google supported the 2024 Indian General Election by providing high-quality information to voters and helping people navigate AI-generated content. Google connected voters to helpful information through product features that show data from trusted institutions across its portfolio. YouTube showcased election information panels featuring content from authoritative sources.

- YouTube and X (Twitter) new ‘Notes Feature’

- Notes Feature on YouTube: YouTube is testing an experimental feature that allows users to add notes to provide relevant, timely, and easy-to-understand context for videos. This initiative builds on previous products that display helpful information alongside videos, such as information panels and disclosure requirements when content is altered or synthetic. YouTube clarified that the pilot will be available on mobiles in the U.S. and in the English language, to start with. During this test phase, viewers, participants, and creators are invited to give feedback on the quality of the notes.

- Community Notes feature on X: Community Notes on X aims to enhance the understanding of potentially misleading posts by allowing users to add context to them. Contributors can leave notes on any post, and if enough people rate the note as helpful, it will be publicly displayed. The algorithm is open source and publicly available on GitHub, allowing anyone to audit, analyze, or suggest improvements. However, Community Notes do not represent X's viewpoint and cannot be edited or modified by X's teams. A post with a Community Note will not be labelled, removed, or addressed by X unless it violates the X Rules, Terms of Service, or Privacy Policy. Failure to abide by these rules can result in loss of access to Community Notes and/or other remediations. Users can report notes that do not comply with the rules by selecting the menu on a note and selecting ‘Report’ or using the provided form.

CyberPeace Policy Recommendations

Countering widespread online misinformation on social media platforms requires a multipronged approach that involves joint efforts from different stakeholders. Platforms should invest in state-of-the-art algorithms and technology to detect and flag suspected misleading information. They should also establish trustworthy fact-checking protocols and collaborate with expert fact-checking groups. The government must encourage campaigns, seminars, and other educational initiatives to increase public awareness and digital literacy about the risks and impacts of mis/disinformation. Netizens should be empowered with the skills to distinguish fact from misleading information so that they can find accurate information in the digital age. Joint efforts by government authorities, tech companies, and expert cyber security organisations are vital in promoting a secure and honest online information landscape and countering the spread of mis/disinformation. Platforms must encourage netizens/users to maintain appropriate online conduct and to abide by the platforms' terms & conditions and community guidelines. Encouraging a culture of truth and integrity on the internet, honouring differing points of view, and confirming facts all help to create a more reliable and information-resilient environment.

References:

- https://www.meity.gov.in/writereaddata/files/Advisory%2015March%202024.pdf

- https://blog.google/intl/en-in/company-news/outreach-initiatives/supporting-the-2024-indian-general-election/

- https://blog.youtube/news-and-events/new-ways-to-offer-viewers-more-context/

- https://help.x.com/en/using-x/community-notes

Introduction

Prebunking is a technique that shifts the focus from directly challenging falsehoods or telling people what they need to believe to understanding how people are manipulated and misled online to begin with. It is a growing field of research that aims to help people resist persuasion by misinformation. Prebunking, or "attitudinal inoculation," is a way to teach people to spot and resist manipulative messages before they encounter them. The crux of the approach is rooted in taking a step backwards and nipping the problem in the bud by deepening our understanding of it, instead of designing redressal mechanisms to tackle it after the fact. It has been shown to be effective in helping a wide range of people build resilience to misleading information.

Prebunking is a psychological strategy for countering the effect of misinformation with the goal of assisting individuals in identifying and resisting deceptive content, hence increasing resilience against future misinformation. Online manipulation is a complex issue, and multiple approaches are needed to curb its worst effects. Prebunking provides an opportunity to get ahead of online manipulation, providing a layer of protection before individuals encounter malicious content. Prebunking aids individuals in discerning and refuting misleading arguments, thus enabling them to resist a variety of online manipulations.

Prebunking builds mental defenses against misinformation by providing warnings and counterarguments before people encounter malicious content. Inoculating people against false or misleading information is a powerful and effective method for building trust and understanding along with a personal capacity for discernment and fact-checking. Prebunking teaches people how to separate facts from myths by teaching them the importance of thinking in terms of ‘how you know what you know’ and consensus-building. Prebunking uses examples and case studies to explain the types and risks of misinformation so that individuals can apply these learnings to reject false claims and manipulation in the future as well.

How Prebunking Helps Individuals Spot Manipulative Messages

Prebunking helps individuals identify manipulative messages by providing them with the necessary tools and knowledge to recognize common techniques used to spread misinformation. Successful prebunking strategies include:

- Warnings

- Preemptive Refutation: This explains the narrative or technique and how particular information is manipulative in structure. Inoculation treatment messages typically include 2-3 counterarguments and their refutations. An effective rebuttal provides the viewer with skills to fight any erroneous or misleading information they may encounter in the future.

- Micro-dosing: A weakened or practical example of misinformation that is innocuous.

All of these alert individuals to potential manipulation attempts. Prebunking also offers weakened examples of misinformation, allowing individuals to practice identifying deceptive content. It activates mental defenses, preparing individuals to resist persuasion attempts. Misinformation can exploit cognitive biases: people tend to put a lot of faith in things they’ve heard repeatedly, a fact that malicious actors exploit by flooding the Internet with their claims to legitimise them through familiarity. The ‘prebunking’ technique helps to create resilience against misinformation and protects our minds from its harmful effects.

Prebunking essentially helps people control the information they consume by teaching them how to discern between accurate and deceptive content. It enables one to develop critical thinking skills, evaluate sources adequately and identify red flags. By incorporating these components and strategies, prebunking enhances the ability to spot manipulative messages, resist deceptive narratives, and make informed decisions when navigating the very dynamic and complex information landscape online.

CyberPeace Policy Recommendations

- Preventing and fighting misinformation necessitates joint efforts between different stakeholders. The government and policymakers should sponsor prebunking initiatives and information literacy programmes to counter misinformation and adopt systematic approaches. Regulatory frameworks should encourage accountability in the dissemination of online information on various platforms. Collaboration with educational institutions, technological companies and civil society organisations can assist in the implementation of prebunking techniques in a variety of areas.

- Higher educational institutions should support prebunking and media literacy, offer professional development opportunities for educators and scholars, and work with academics and professionals to produce research studies on the grey areas and challenges associated with misinformation.

- Technological companies and social media platforms should improve algorithm transparency, create user-friendly tools and resources, and work with fact-checking organisations to incorporate fact-check labels and tools.

- Civil society organisations and NGOs should promote digital literacy campaigns to spread awareness on misinformation and teach prebunking strategies and critical information evaluation. Training programmes should be available to help people recognise and resist deceptive information using prebunking tactics. Advocacy efforts should support legislation or guidelines that support and encourage prebunking efforts and promote media literacy as a basic skill in the digital landscape.

- Media outlets and journalists, across print and social media, should follow high journalistic standards and engage in fact-checking activities to ensure information accuracy before release. Collaboration with prebunking professionals, cyber security experts, researchers and advocacy analysts can result in instructional content and initiatives that promote media literacy, prebunking strategies and misinformation awareness.

Final Words

The World Economic Forum's Global Risks Report 2024 identifies misinformation and disinformation as the most severe global risk over the next two years. Misinformation and disinformation are rampant in today’s digital-first reality, and the ever-growing popularity of social media will only compound the challenge. It is imperative for all netizens and stakeholders to adopt proactive approaches to counter the growing problem of misinformation. Prebunking is a powerful problem-solving tool in this regard because it aims at ‘protection through prevention’ instead of limiting the strategy to harm reduction and redressal. We can draw parallels with the concept of vaccination or inoculation, which reduces the probability of a misinformation infection. Prebunking exposes us to a weakened form of misinformation and provides ways to identify it, reducing the chance that false information takes root in our psyches.

The most compelling attribute of this approach is that the focus is not only on preventing damage but also creating widespread ownership and citizen participation in the problem-solving process. Every empowered individual creates an additional layer of protection against the scourge of misinformation, not only making safer choices for themselves but also lowering the risk of spreading false claims to others.

References

- [1] https://www3.weforum.org/docs/WEF_The_Global_Risks_Report_2024.pdf

- [2] https://prebunking.withgoogle.com/docs/A_Practical_Guide_to_Prebunking_Misinformation.pdf

- [3] https://ijoc.org/index.php/ijoc/article/viewFile/17634/3565