#FactCheck - Stunning 'Mount Kailash' Video Exposed as AI-Generated Illusion!

EXECUTIVE SUMMARY:

A viral video claims to show an aerial view of Mount Kailash, with breathtaking scenery that supposedly offers a rare real-life shot of Tibet's sacred mountain. We investigated its authenticity and examined the footage for signs of digital manipulation.

CLAIMS:

The viral video claims to be a real aerial shot of Mount Kailash, showcasing the natural beauty of the hallowed mountain. It was circulated widely on social media, with users presenting it as actual footage of Mount Kailash.

FACTS:

The viral video circulated on social media is not real footage of Mount Kailash. A reverse image search revealed that it is an AI-generated video created on Midjourney by Sonam and Namgyal, two Tibet-based graphic artists. The advanced digital techniques used helped produce a realistic, lifelike scene.

No media or geographical source has reported or published the video as authentic footage of Mount Kailash. Besides, several visual aspects, including lighting and environmental features, indicate that it is computer-generated.

For further verification, we used Hive Moderation, a deepfake detection tool, to determine whether the video is AI-generated or real. The tool found it to be AI-generated.

CONCLUSION:

The viral video claiming to show an aerial view of Mount Kailash is an AI-generated creation, not authentic footage of the sacred mountain. This incident highlights the growing influence of AI and CGI in creating realistic but misleading content, and underlines the need for viewers to verify such visuals through trusted sources before sharing.

- Claim: Digitally Morphed Video of Mt. Kailash, Showcasing Stunning White Clouds

- Claimed On: X (Formerly Known As Twitter), Instagram

- Fact Check: AI-Generated (Checked using Hive Moderation).

Related Blogs

Executive Summary

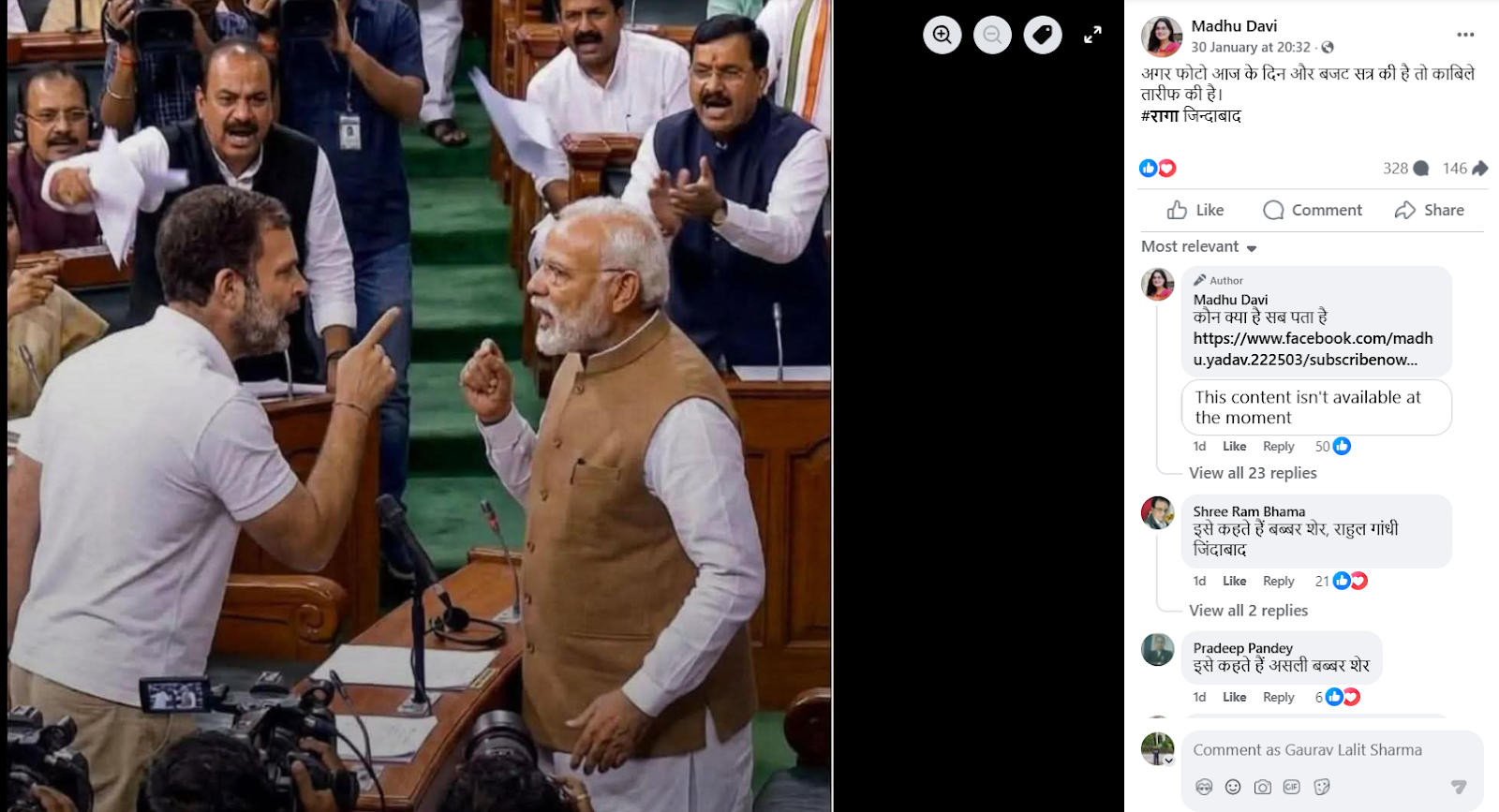

An image showing Prime Minister Narendra Modi and Leader of Opposition in the Lok Sabha and Congress MP Rahul Gandhi standing face to face inside Parliament is going viral on social media. Several users are sharing the image claiming that the photograph was taken during the ongoing Budget Session, suggesting a direct face-off between the two leaders inside Parliament. However, research conducted by CyberPeace has found that the viral claim is false. The image in question is not real but has been generated using Artificial Intelligence (AI). The AI-generated image is now being shared on social media with a misleading claim.

Claim

A Facebook user named Madhu Davi shared the viral image on January 30, 2026, with the caption: “If this photo is from today and the Budget Session, it is commendable. RAGA Zindabad.”

(Archived version of the post available here.)

- https://www.facebook.com/photo/?fbid=759145877237871&set=a.110639115421887

- https://perma.cc/N2XD-TZ32?type=image

Fact Check:

To verify the viral claim, we first conducted a keyword search on Google to check whether any credible media outlet had reported such an incident during the Budget Session. However, no news reports supporting the claim were found. We then performed a reverse image search using Google Lens, but this too did not yield any reliable media reports or evidence confirming the authenticity of the image. This raised suspicion that the image might be AI-generated. To further verify, the image was analysed using the AI detection tool Hive Moderation. The tool indicated a probability of over 99 per cent that the image was generated using Artificial Intelligence.
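The three checks described above (keyword search, reverse image search, AI-detection score) can be summarised as a simple decision rule. The following is a minimal sketch, not CyberPeace's actual tooling: the `Evidence` structure, the `assess_image` function and the 0.9 threshold are hypothetical names and values chosen for illustration, and the AI-detection score is assumed to have been obtained separately and entered by hand.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    """Findings gathered manually for one viral image (hypothetical structure)."""
    credible_news_reports: int   # reports found via keyword search
    reverse_image_matches: int   # reliable matches from reverse image search
    ai_probability: float        # score read from an AI-detection tool, 0.0 to 1.0


def assess_image(evidence: Evidence, ai_threshold: float = 0.9) -> str:
    """Return a coarse verdict; the threshold is an illustrative assumption."""
    if not 0.0 <= evidence.ai_probability <= 1.0:
        raise ValueError("ai_probability must be between 0.0 and 1.0")
    # The image is corroborated if either search turned up reliable support.
    corroborated = (
        evidence.credible_news_reports > 0 or evidence.reverse_image_matches > 0
    )
    if evidence.ai_probability >= ai_threshold and not corroborated:
        return "likely AI-generated"
    if corroborated and evidence.ai_probability < ai_threshold:
        return "likely authentic"
    # Conflicting or weak signals are left for a human fact-checker.
    return "inconclusive, needs manual review"


# Values mirroring the case above: no reports, no matches, a score of about 0.99.
print(assess_image(Evidence(0, 0, 0.99)))
```

The rule deliberately returns an inconclusive verdict whenever the signals conflict, since a detection score on its own is not treated here as proof either way.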

Conclusion

CyberPeace research confirms that the image being circulated with the claim that Prime Minister Narendra Modi and Rahul Gandhi came face to face during the Budget Session is fake. The viral image has been created using AI and is being shared with a false and misleading narrative.

Introduction

Growing online interaction and the popularity of social media platforms among netizens have created a breeding ground for the generation and spread of misinformation. Misinformation propagates more easily and faster on social media platforms than through traditional news media sources like newspapers or TV. Big data analytics and Artificial Intelligence (AI) systems have made it possible to gather, combine, analyse and indefinitely store massive volumes of data. Constant surveillance of digital platforms can help detect and promptly respond to false and misleading content.

During the recent Israel-Hamas conflict, a lot of misinformation spread on big platforms like X (formerly Twitter) and Telegram. Images and videos were falsely attributed to the ongoing conflict, causing widespread confusion and tension. While advanced technologies such as AI and big data analytics can help flag harmful content quickly, they must be carefully balanced against privacy concerns to ensure that surveillance practices do not infringe upon individual privacy rights. Ultimately, the challenge lies in creating a system that upholds both public security and personal privacy, fostering trust without compromising on either front.

The Need for Real-Time Misinformation Surveillance

According to a recent survey from the Pew Research Center, 54% of U.S. adults at least sometimes get news on social media. Facebook and YouTube take the top two spots respectively, with Instagram in third and TikTok and X in fourth and fifth. Social media platforms provide users with instant connectivity, allowing them to share information quickly with other users without requiring the permission of a gatekeeper such as an editor, as is the case with traditional media channels.

The sheer volume of information, both true and false, generated around the elections held in 2024 (in more than 100 countries), the COVID-19 public health crisis, and the conflicts in the West Bank and Gaza Strip has been immense. Identifying accurate information amid real-time misinformation is challenging, and traditional content moderation techniques alone may not be sufficient to curb it. Hence the call for a dedicated, real-time misinformation surveillance system: backed by AI, subject to human oversight, and balanced against the privacy of users' data, such a system could prove to be a good mechanism for countering misinformation on much larger platforms. Concerns regarding data privacy need to be prioritised before deploying such technologies on platforms with larger user bases.

Ethical Concerns Surrounding Surveillance in Misinformation Control

Real-time misinformation surveillance could pose significant ethical and privacy risks. Monitoring communication patterns and metadata, or even inspecting private messages, can infringe upon user privacy and restrict freedom of expression. Furthermore, defining misinformation remains a challenge; overly restrictive surveillance can unintentionally stifle legitimate dissent and alternative perspectives. Beyond these concerns, real-time surveillance mechanisms could be exploited for political, economic, or social objectives unrelated to misinformation control. Establishing clear ethical standards and limitations is essential to ensure that surveillance supports public safety without compromising individual rights.

In light of these ethical challenges, developing a responsible framework for real-time surveillance is essential.

Balancing Ethics and Efficacy in Real-Time Surveillance: Key Policy Implications

Despite these ethical challenges, a reliable misinformation surveillance system is essential. Key considerations for creating ethical, real-time surveillance may include:

- Misinformation-detection algorithms should be designed with transparency and accountability in mind. Third-party audits and explainable AI can help ensure fairness, avoid biases, and foster trust in monitoring systems.

- Establishing clear, consistent definitions of misinformation is crucial for fair enforcement. These guidelines should carefully differentiate harmful misinformation from protected free speech to respect users’ rights.

- Collecting only necessary data and adopting a consent-based approach protects user privacy and enhances transparency and trust. It also guards against the stifling of dissent and against profiling for targeted ads.

- An independent oversight body can be created to monitor surveillance activities, ensure accountability and prevent misuse or overreach. Measures such as the ability to appeal wrongful content flagging can increase user confidence in the system.

Conclusion: Striking a Balance

Real-time misinformation surveillance has shown its usefulness in counteracting the rapid spread of false information online. However, it brings complex ethical challenges that cannot be overlooked: balancing the need for public safety with the preservation of privacy and free expression is essential to maintaining a democratic digital landscape. The examples of the EU’s Digital Services Act and Singapore’s POFMA underscore that, while regulation can enhance accountability and transparency, it also risks overreach if not carefully structured. Moving forward, a framework for misinformation monitoring must prioritise transparency, accountability, and user rights, ensuring that algorithms are fair, oversight is independent, and user data is protected. By embedding these safeguards, we can create a system that addresses the threat of misinformation and upholds the foundational values of an open, responsible, and ethical online ecosystem. Policy-driven AI solutions for real-time misinformation monitoring that balance ethics and privacy are the need of the hour.

References

- https://www.pewresearch.org/journalism/fact-sheet/social-media-and-news-fact-sheet/

- https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=OJ:C:2018:233:FULL

Misinformation is a scourge in the digital world, making the most mundane experiences fraught with risk. The threat is considerably heightened in conflict settings, especially in the modern era, where geographical borders blur and civilians and conflict actors alike can take to the online realm to discuss, and influence, conflict events. Propaganda can complicate the narrative and distract from the humanitarian crises affecting civilians, while also posing a serious threat to security operations and law and order efforts. Sensationalised reports of casualties and manipulated portrayals of military actions contribute to a cycle of violence and suffering.

A 2023 study conducted by MIT found that the mere thought of sharing news on social media reduced people's ability to judge whether a story was true or false; the urge to share outweighed the consideration of accuracy. Cross-border misinformation has become a critical issue in today's interconnected world, driven by the rise of digital communication platforms. To combat misinformation effectively, coordinated international policy frameworks and cooperation between governments, platforms, and global institutions are needed.

The Global Nature of Misinformation

Cross-border misinformation is false or misleading information that spreads across countries. Creators based outside a country's borders amplify information through social media and digital platforms and are a key source of misinformation. Misinformation can interfere with elections, create serious misconceptions about health concerns such as those witnessed during the COVID-19 pandemic, or even lead to military conflicts.

The primary challenge in countering cross-border misinformation is the difference in national policies, legal frameworks and social media platform governance policies across jurisdictions. Examining existing international frameworks, such as cybersecurity treaties and the data-sharing agreements used for financial crimes, might help address cross-border misinformation effectively. By adapting these approaches to the digital information ecosystem, nations could strengthen their collective response to the spread of misinformation across borders. Global institutions like the United Nations and regional bodies like the EU and ASEAN can work together to set a unified response and uniform international standards for regulation dealing specifically with misinformation.

Current National and Regional Efforts

Many countries have taken action to deal with misinformation within their borders. Some examples include:

- The EU’s Digital Services Act has been instrumental in regulating online intermediaries and platforms including marketplaces, social networks, content-sharing platforms, app stores, etc. The legislation aims to prevent illegal and harmful activities online and the spread of disinformation.

- The primary legislation that governs cyberspace in India is the IT Act of 2000 and its corresponding rules (IT Rules, 2023), which impose strict requirements on social media platforms to counter misinformation content and enable the traceability of the creator responsible for the origin of misinformation. Platforms have to conduct due diligence, failing which they risk losing their safe harbour protection. The recently-enacted DPDP Act of 2023 indirectly addresses personal data misuse that can be used to contribute to the creation and spread of misinformation. Also, the proposed Digital India Act is expected to focus on “user harms” specific to the online world.

- In the U.S., the Right to Editorial Discretion and Section 230 of the Communications Decency Act place the responsibility for regulating misinformation on private actors such as social media platforms (SMPs). The U.S. government has not created a specific framework addressing misinformation and has instead encouraged SMPs to adopt voluntary, independent policies to regulate misinformation on their platforms.

The common gap across these policies is the absence of a standardised, global framework for addressing cross-border misinformation, which results in uneven enforcement and dependence on national regulations.

Key Challenges in Achieving International Cooperation

Some of the key challenges identified in achieving international cooperation to address cross-border misinformation are as follows:

- Geopolitical tensions arising from differences in political systems and priorities, along with a lack of trust between countries, can hinder attempts to cooperate and create a universal regulation.

- The diversity in approaches to internet governance and freedom of speech across countries complicates matters further.

- Technical and legal obstacles around sovereignty, jurisdiction and enforcement make it harder to monitor and remove cross-border misinformation.

CyberPeace Recommendations

- The UN Global Principles For Information Integrity Recommendations for Multi-stakeholder Action, unveiled on 24 June 2024, are a welcome step towards addressing cross-border misinformation. They can act as a stepping stone for developing a framework for international cooperation on misinformation, drawing inspiration from other successful models such as climate change agreements and the international criminal law framework.

- Collaborations such as public-private partnerships between governments, tech companies and civil society can help enhance transparency, data sharing and accountability in tackling cross-border misinformation.

- Engaging in capacity building and technology transfers in less developed countries would help to create a global front against misinformation.

Conclusion

We are in an era where misinformation knows no borders, and the need for international cooperation has never been more urgent. Global democracies are exploring solutions, both regulatory and legislative, to limit the spread of misinformation; however, these fragmented efforts fall short of addressing the global scale of the problem. Establishing a standardised, international framework, backed by multilateral bodies like the UN and regional alliances, can foster accountability and facilitate shared resources in this fight. Through collaborative action, transparent regulations, and support for developing nations, the world can create a united front to curb misinformation and protect democratic values, ensuring information integrity across borders.

References

- https://economics.mit.edu/sites/default/files/2023-10/A%20Model%20of%20Online%20Misinformation.pdf

- https://www.indiatoday.in/global/story/in-the-crosshairs-manufacturing-consent-and-the-erosion-of-public-trust-2620734-2024-10-21

- https://laweconcenter.org/resources/knowledge-and-decisions-in-the-information-age-the-law-economics-of-regulating-misinformation-on-social-media-platforms/

- https://www.article19.org/resources/un-article-19-global-principles-for-information-integrity/