#FactCheck - “Deepfake Video Falsely Claims Justin Trudeau Endorses Investment Project”

Executive Summary:

A viral online video claims Canadian Prime Minister Justin Trudeau promotes an investment project. However, the CyberPeace Research Team has confirmed that the video is a deepfake, created using AI technology to manipulate Trudeau's facial expressions and voice. The original footage has no connection to any investment project. The claim that Justin Trudeau endorses this project is false and misleading.

Claims:

A viral video falsely claims that Canadian Prime Minister Justin Trudeau is endorsing an investment project.

Fact Check:

Upon receiving the viral posts, we conducted a Google Lens search on the keyframes of the video. The search led us to various legitimate sources featuring Prime Minister Justin Trudeau, none of which included promotion of any investment projects. The viral video exhibited signs of digital manipulation, prompting a deeper investigation.

We used AI detection tools, such as TrueMedia, to analyse the video. The analysis flagged the video as a deepfake with 99.8% confidence. The tools identified "substantial evidence of manipulation," particularly in the facial movements and voice, which were found to be artificially generated.

Additionally, an extensive review of official statements and interviews with Prime Minister Trudeau revealed no mention of any such investment project. No credible reports were found linking Trudeau to this promotion, further confirming the video’s inauthenticity.

Conclusion:

The viral video claiming that Justin Trudeau promotes an investment project is a deepfake. Our research, using tools such as Google Lens and an AI detection tool, confirms that the video was manipulated using AI technology. In addition, no official source mentions any such endorsement. The CyberPeace Research Team therefore concludes that the claim is false and misleading.

- Claim: Viral social media posts claim that Justin Trudeau promotes an investment project.

- Claimed on: Facebook

- Fact Check: False & Misleading

Related Blogs

Introduction

Charity and donation scams persist and have been amplified in the digital era, as messages spread rapidly through WhatsApp, email, and social media. In these schemes, threat actors impersonate legitimate charities, government appeals, or social causes to solicit funds. They target the general public, but they also affect entities such as reputable tech firms and national institutions. Victims are tricked into transferring money or sharing personal information, often under the guise of urgent humanitarian efforts or causes.

A recent incident involves a fake WhatsApp message claiming to be from the Indian Ministry of Defence. The message urged users to donate to a fund for “modernising the Indian Army.” The government later confirmed that this message was entirely fabricated and part of a larger scam. It emphasised that the Ministry had issued no such appeal and urged citizens to verify such claims through official government portals before responding.

Tech Industry and Donation-Related Scams

Large corporations are also falling prey. According to media reports, an American IT company recently terminated around 700 Indian employees after uncovering a donation-related fraud. At least 200 of them were reportedly involved in a scheme linked to Telugu organisations in the US. The scam echoed a similar situation that had previously affected Apple, where Indian employees were fired after being implicated in donation fraud tied to the Telugu Association of North America (TANA). Investigations revealed that employees had made questionable donations to these groups in exchange for benefits such as visa support or employment favours.

Common People Targeted

While organisational scandals grab headlines, ordinary people are equally or even more vulnerable. In a recent incident, a man lost over ₹1 lakh after clicking on a WhatsApp link asking for donations to a charity. Once he engaged with the link, the fraudsters used social engineering to manipulate him into making repeated transfers under various pretexts, ranging from processing fees to refund-related transactions. Scammers typically rely on the same set of tactics, namely urgency, emotional appeal, and impersonation of credible platforms, to deceive people.

Cautionary Steps

CyberPeace recommends adopting a cautious and informed approach when making charitable donations, especially online. Here are some key safety measures to follow:

- Verify Before You Donate. Always double-check the legitimacy of donation appeals by using official government portals or the charities' official websites. Be wary of unfamiliar phone numbers, email addresses, or WhatsApp forwards asking for money.

- Avoid Clicking on Suspicious Links. Never click on links or download attachments from unknown or unverified sources. These could be phishing links or malware designed to steal your data or access your bank accounts.

- Be Sceptical of Urgency. Scammers bank on creating a false sense of urgency to pressure their victims into donating quickly. Take the time to evaluate a request before responding.

- Use Secure Payment Channels. Donate only through platforms that are secure, trusted, and verified, such as official UPI handles and government-backed portals like PM CARES or Bharat Kosh.

- Report Suspected Fraud. If you receive a suspicious message or fall victim to a scam, report it to the cybercrime authorities via cybercrime.gov.in or the 1930 helpline, or to the local police. Prompt reporting can prevent further fraud.

Conclusion

Charity should never come at the cost of trust and safety. While donating to a good cause is noble, doing it mindfully is essential in today’s scam-prone environment. Always remember: a little caution today can save a lot tomorrow.

References

- https://economictimes.indiatimes.com/news/defence/misleading-message-circulating-on-whatsapp-related-to-donation-for-armys-modernisation-govt/articleshow/120672806.cms?from=mdr

- https://timesofindia.indiatimes.com/technology/tech-news/american-company-sacks-700-of-these-200-in-donation-scam-related-to-telugu-organisations-similar-to-firing-at-apple/articleshow/120075189.cms

- https://timesofindia.indiatimes.com/city/hyderabad/apple-fires-some-indians-over-donation-fraud-tana-under-scrutiny/articleshow/117034457.cms

- https://www.indiatoday.in/technology/news/story/man-gets-link-for-donation-and-charity-on-whatsapp-loses-over-rs-1-lakh-after-clicking-on-it-2688616-2025-03-04

Executive Summary

A video showing thick smoke rising from a building and people running in panic is being shared on social media with the claim that it shows Iran launching a missile attack on the United States. CyberPeace’s research found the claim to be misleading. Our probe revealed that the video is not related to any recent incident. The viral clip is actually from the September 11, 2001 terrorist attacks on the World Trade Center in the United States and is being falsely shared as footage of an alleged Iranian missile strike on the US.

Claim:

An Instagram user shared the video claiming, “Iran has attacked a US airbase in Qatar. Iran has fired six ballistic missiles at the Al Udeid Airbase in Qatar. Al Udeid Airbase is the largest US military base in West Asia.”


Fact Check:

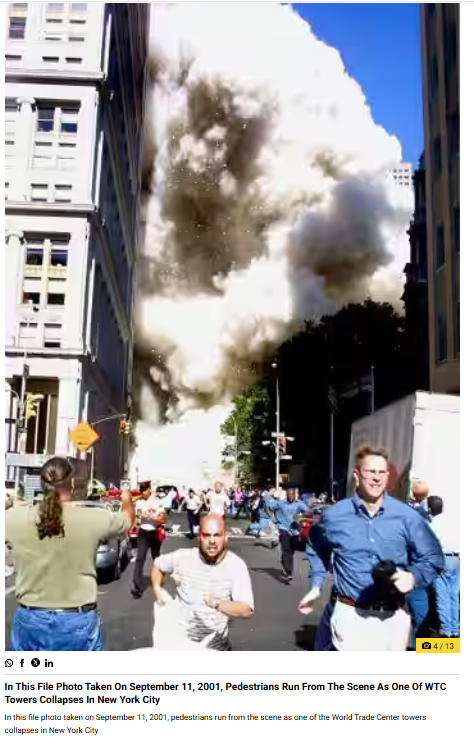

To verify the claim, we extracted key frames from the viral video and ran a reverse image search using Google Lens. The search returned visuals matching the viral clip in a report published by WION on September 11, 2021. The report, titled “In pics | A look back at the scenes from the 9/11 attacks,” included an image that closely resembled the visuals seen in the viral video. The image caption stated that it was a file photo from September 11, 2001, showing pedestrians running as one of the World Trade Center towers collapsed in New York City.

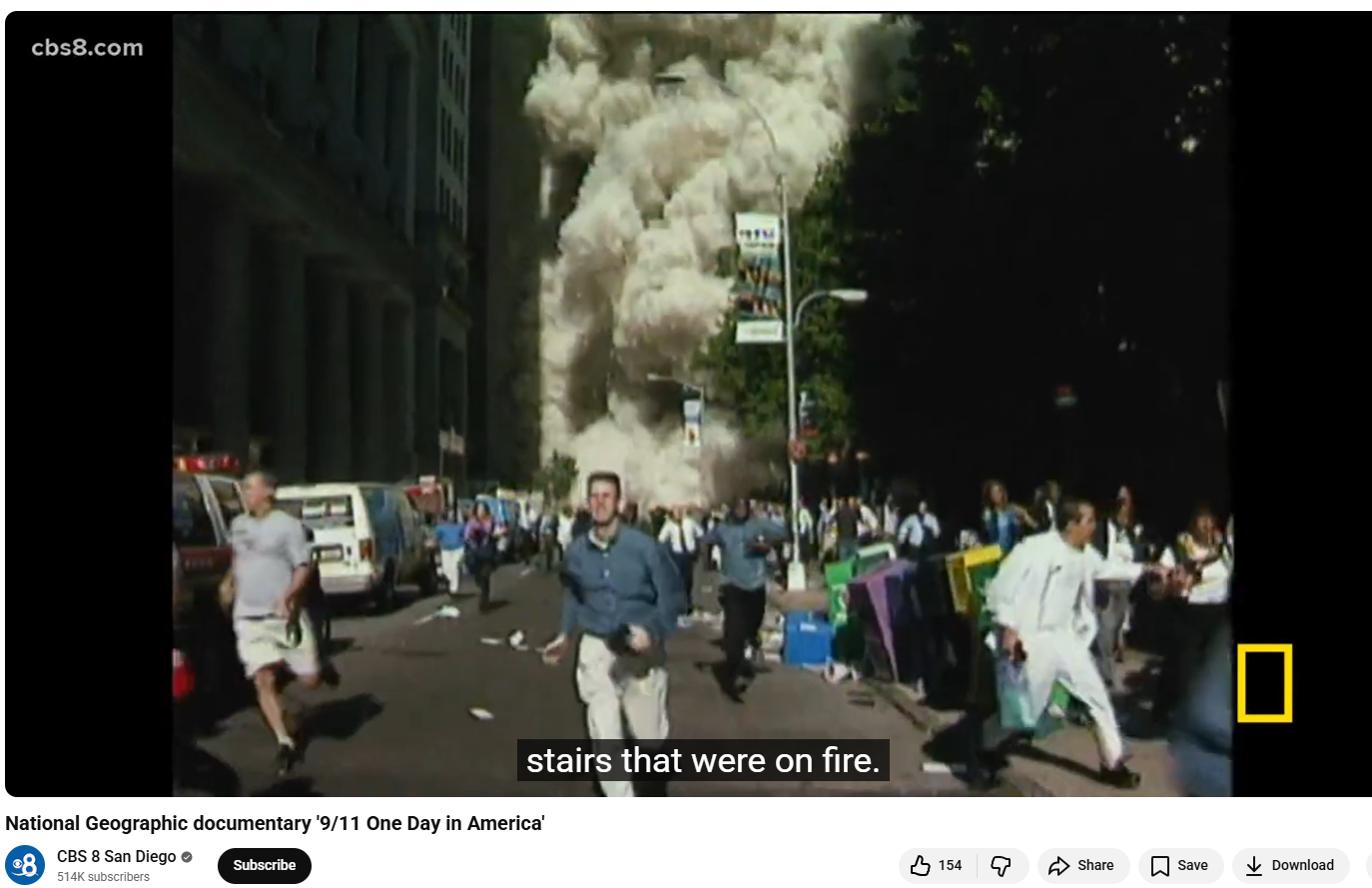

Further research led us to the same footage on the YouTube channel CBS 8 San Diego. At the 01:11 timestamp of the video, visuals matching the viral clip can be clearly seen.

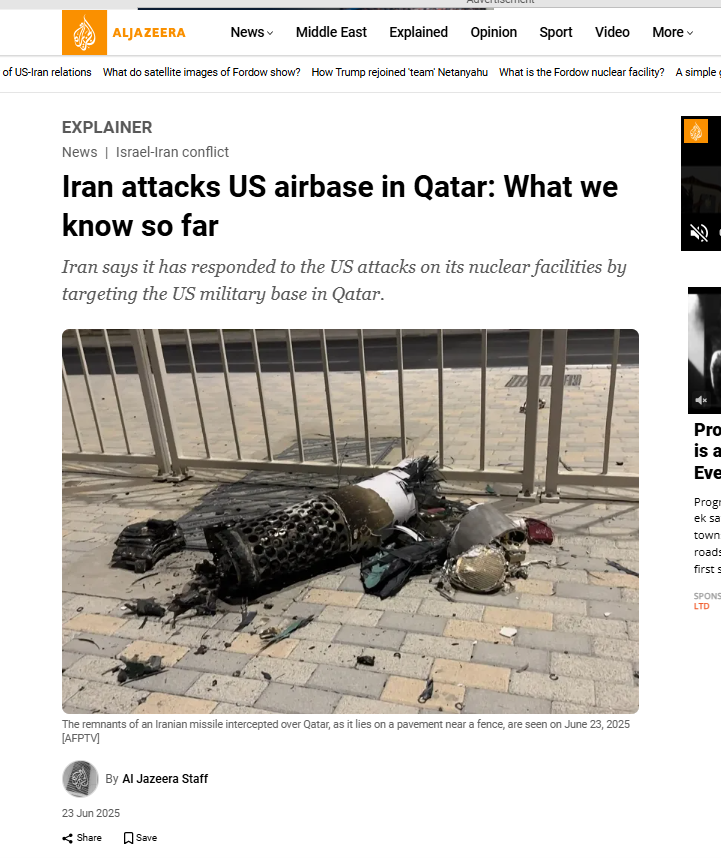

We also found an Al Jazeera report dated June 23, 2025, which confirmed that Iran had attacked US forces stationed at the Al Udeid airbase in Qatar in retaliation for US strikes on Iran’s nuclear facilities. However, the visuals used in the viral video do not correspond to this incident.

Conclusion

The viral video does not show a recent Iranian attack on a US airbase in Qatar. The clip actually dates back to the September 11, 2001 terrorist attacks on the World Trade Center in the United States. Old 9/11 footage has been falsely shared with a misleading claim linking it to Iran’s alleged missile strike on the US.


Introduction

To every Indian’s pride, the maritime sector has seen tremendous growth under various government initiatives. However, each step towards growth must give due regard to security. Sadly, cybersecurity is still treated as a secondary requirement in many critical sectors, and the maritime sector and its assets are no exception. Maritime cybersecurity covers the protection of digital assets and networks that are vulnerable to online threats. Without an adequate cybersecurity framework in place, these assets remain at risk from cyber threats ranging from malware and scams to more sophisticated attacks targeting critical shore-based infrastructure. Amid rising global cyber threats, the maritime sector is emerging as a potential target, underscoring the need for proactive measures to safeguard maritime operations. In this evolving threat landscape, it would be unrealistic to assume that India's maritime domain will remain unaffected.

Overview of India’s Maritime Sector

India’s potential lies in its resources and its vast oceans. India is well endowed with a dynamic 7,500 km coastline, which anchors 12 major ports and over 200 minor ones. Strategically positioned along the world’s busiest shipping routes, India has the potential to rise to global prominence as a key trading hub. As of 2023, India’s share in global growth stands at a remarkable 16%, and India is reportedly on course to become the third-largest economy, which is no small feat for a country of 1.4 billion people. Government initiatives such as “Sagarmanthan: The Great Oceans Dialogue” support this momentum by laying the foundation for an insightful dialogue among visionaries on designing a landscape for the growth of the marine sector. The case for strengthening security in the maritime industry rests on the fact that this sector handles 95% of the country’s trade by volume and 70% by value.

Current Cybersecurity Landscape in the Maritime Sector

Across the globe, countries are recognising the importance of their seas and shores, and it is promising that India is not far behind its western counterparts. India has a glorious maritime history whose seas once whispered tales of trade, power, and civilisational glory, and it can continue along that path by strengthening and securing its maritime digital infrastructure. This path integrates the maritime sector with advanced technologies, bringing India to a crucial juncture, one where proactive measures can help bridge the gap with global best practices. In this context, building a robust framework requires incorporating the IMO’s Guidelines on maritime cyber risk management. These guidelines establish principles for assessing potential threats and vulnerabilities and advocate enhanced cyber discipline. Designed to encourage safety and security management practices in the cyber domain, the guidelines also warn against procedural lapses that can lead to the exploitation of vulnerabilities in information technology or operational technology systems.

Anchoring Security: Global Best Practices & Possible Frameworks

As the maritime sector grows in prominence, the Asia-Pacific region has kept pace with the US and the European Union in recognising the need for a dedicated framework. Countries such as Singapore, China, and Japan are leading the way with robust frameworks. These frameworks impose requirements that govern maritime operations and keep vulnerabilities in check, such as cybersecurity awareness training, cyber incident reporting, data localisation, secure communications, incident management, and penalties.

Every country striving to grow and expand its international trade and commerce must be secure on all fronts to build international cooperation and trust. To that end, the maritime sector must be fortified with best practices, or a framework, aligned with its commitment to growth. In the maritime industry, such a framework must be adapted to the unique blend of Information Technology (IT) and Operational Technology (OT) used in ships, ports, and logistics. The following four measures are not exhaustive, but they form a fundamental part of the framework:

- Risk Assessment: Identifying and analysing all systems susceptible to cyber threats, prioritising them, and conducting vulnerability scans of vessel control systems and shore-based systems. Critical assets with a larger impact on the whole system should receive stronger protection than systems that do not require the same attention.

- Access Control: Restricting authorisation so that only verified personnel can access systems, reducing both insider threats and external breaches.

- Incident Response Planning: Cyber risks are inherently dynamic, and cyber attacks or warfare techniques come without warning. Because such attacks are often carried out covertly, an action plan is needed to respond to and recover from cyber incidents effectively.

- Continuous Staff Training: Regularly educating all levels of maritime personnel about cyber hygiene, threat trends, and secure practices.

CyberPeace Suggests: Legislative & Executive Imperatives

The Indian maritime sector needs a national maritime cybersecurity framework that operates in cooperation with the international framework. Its national imperatives should include robust cyber hygiene requirements, real-time threat intelligence mechanisms, incident response obligations, and penalties for non-compliance. The government should also support Indian shipbuilders with grants or incentives to adopt cyber-resilient ship design frameworks.

The legislative goal should be to align the National Maritime Cybersecurity Framework with the well-established CERT-In guidelines and data protection principles. One indispensable requirement under the framework should be mandatory cybersecurity awareness training, so that trained personnel equipped to tackle cyber threats can be deployed. The rationale is that there can be no “one-size-fits-all” approach to managing cybersecurity risk, which is dynamic and evolving, and trained personnel will play a key role in establishing a customised framework.

References

- https://pib.gov.in/PressNoteDetails.aspx?NoteId=153432&reg=3&lang=1

- https://bisresearch.com/industry-report/global-maritime-cybersecurity-market.html

- https://www.shipuniverse.com/2025-maritime-cybersecurity-regulations-a-simplified-breakdown/

- https://wwwcdn.imo.org/localresources/en/OurWork/Security/Documents/MSC-FAL.1-Circ.3-Rev.2%20-%20Guidelines%20On%20Maritime%20Cyber%20Risk%20Management%20(Secretariat)%20(1).pdf