#FactCheck

Executive Summary

A dispute recently emerged in Kotdwar, Uttarakhand, over the name of a shop. During the controversy, a local youth, Deepak Kumar, came forward in support of the shopkeeper. The incident subsequently became a subject of discussion on social media, with users expressing varied reactions. Meanwhile, a photo began circulating on social media showing a burqa-clad woman presenting a bouquet to Deepak Kumar. The image is being shared with the claim that All India Majlis-e-Ittehadul Muslimeen (AIMIM)’s women’s president, Rubina, welcomed “Mohammad Deepak Kumar” by presenting him with a bouquet. However, research conducted by CyberPeace found the viral claim to be false. The research revealed that users are sharing an AI-generated image with a misleading claim.

Claim:

On social media platform Instagram, a user shared the viral image claiming that AIMIM’s women’s president Rubina welcomed “Mohammad Deepak Kumar” by presenting him with a bouquet. The link to the post, its archived version, and a screenshot are provided below.

Fact Check:

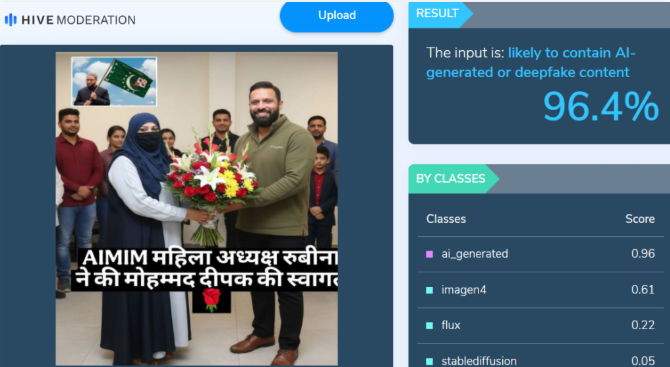

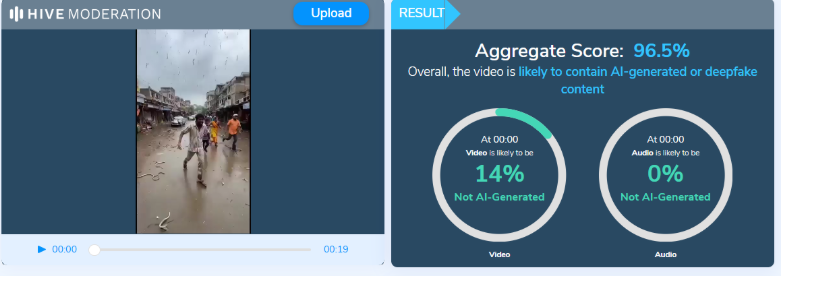

Upon closely examining the viral image, certain inconsistencies raised suspicion that it could be AI-generated. To verify its authenticity, the image was analysed using the AI detection tool Hive Moderation, which indicated a 96 percent probability that the image was AI-generated.

In the next stage of the research, the image was also analysed using another AI detection tool, WasitAI, which likewise identified the image as AI-generated.

Conclusion

The research establishes that users are circulating an AI-generated image with a misleading claim linking it to the Kotdwar controversy.

Executive Summary

A picture circulating on social media allegedly shows Reliance Industries chairman Mukesh Ambani and Nita Ambani presenting a luxury car to India’s T20 team captain Suryakumar Yadav. The image is being widely shared with the claim that the Ambani family gifted the cricketer a luxury car in recognition of his outstanding performance. However, research conducted by CyberPeace found the viral claim to be false. The research revealed that the image being circulated online is not authentic but was generated using artificial intelligence (AI).

Claim

On February 8, 2025, a Facebook user shared the viral image claiming that Mukesh Ambani and Nita Ambani gifted a luxury car to Suryakumar Yadav following his brilliant innings. The post has been widely circulated across social media platforms. In another instance, a user shared a collage in which one image shows Suryakumar Yadav receiving an award, while another depicts him with Nita Ambani, further amplifying the claim.

- https://www.facebook.com/61559815349585/posts/122207061746327178/?rdid=0MukeT6c7WK1uB8m#

- https://archive.ph/wip/UH9Xh

Fact Check:

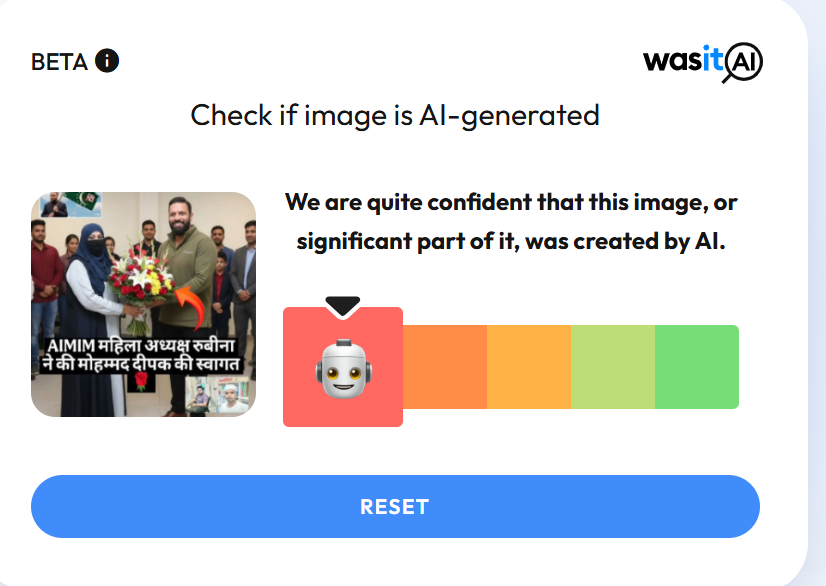

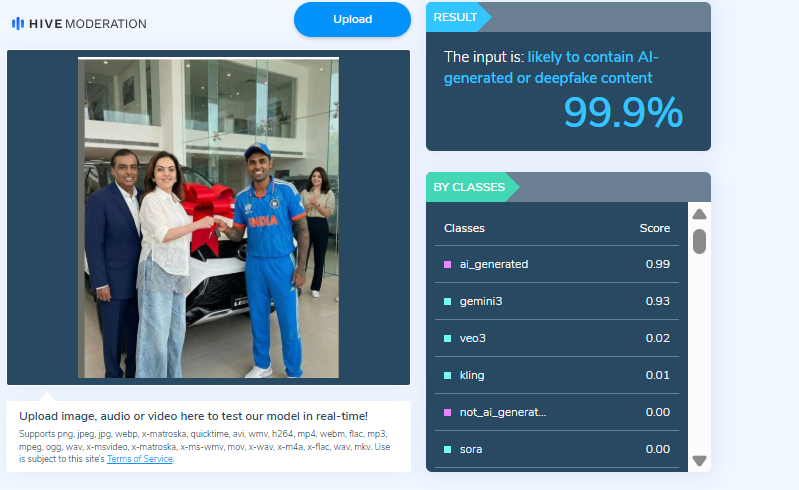

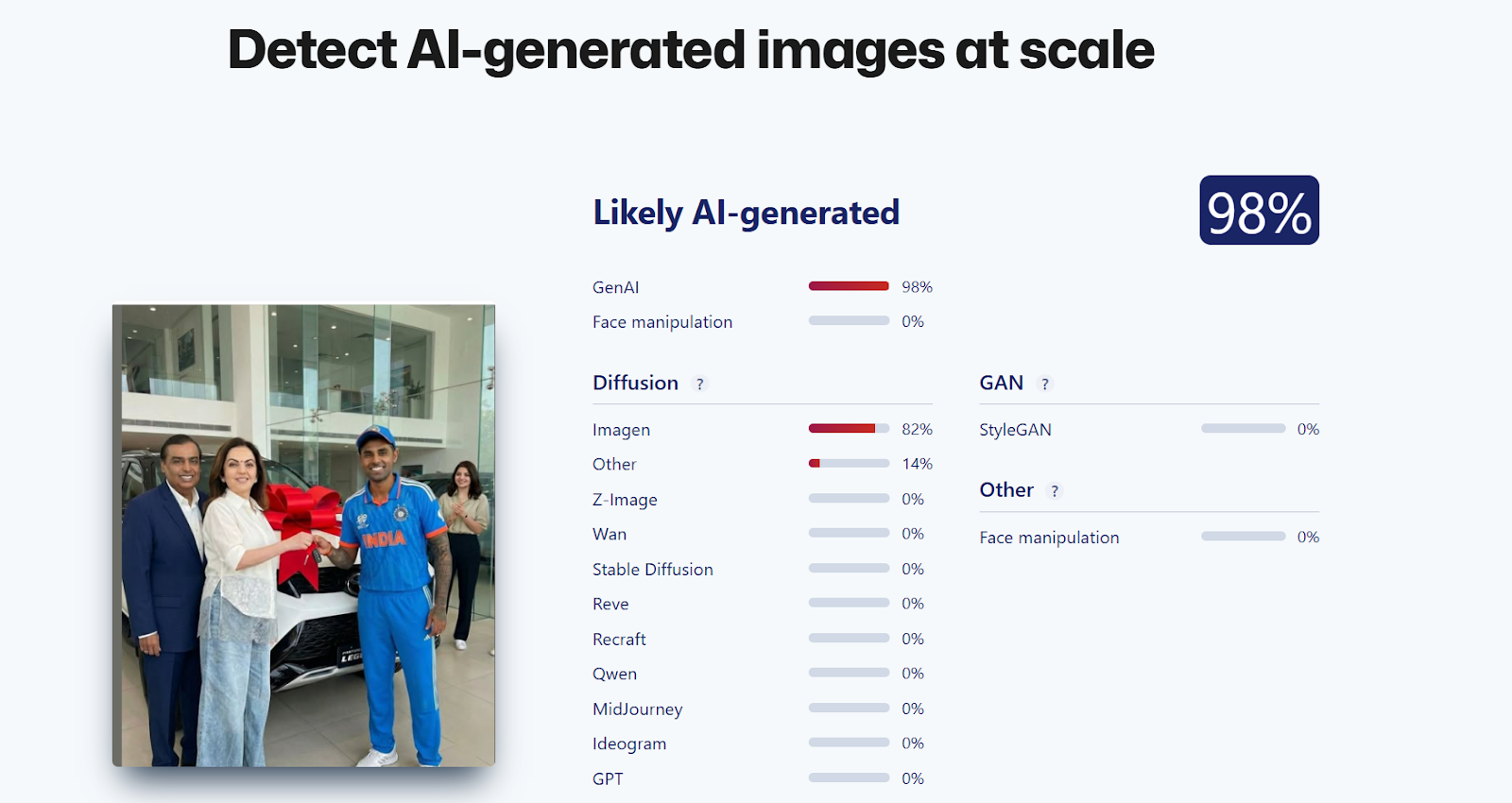

Upon closely examining the viral image, certain visual inconsistencies raised suspicion that it might be AI-generated. To verify its authenticity, the image was analysed using the AI detection tool Hive Moderation, which indicated a 99 percent probability that the image was AI-generated.

In the next step of the research, the image was also analysed using another AI detection tool, Sightengine, which found a 98 percent likelihood that the image was created using artificial intelligence.
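The cross-check described above, in which two independent detectors must both point the same way, can be sketched in a few lines of Python. This is only an illustration: the `DetectorReading` type, the `all_tools_agree` helper, and the 0.90 cut-off are hypothetical names and values chosen for the example, not part of any tool's API or of CyberPeace's actual workflow. The two scores are the ones reported in this fact check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectorReading:
    """One tool's reported likelihood that the media is AI-generated."""
    tool: str
    ai_probability: float  # expressed as 0.0 to 1.0


def all_tools_agree(readings, threshold=0.90):
    """Return True only if every tool reports at least `threshold`.

    The 0.90 default is an illustrative assumption, not an official rule.
    """
    if not readings:
        raise ValueError("at least one detector reading is required")
    return all(r.ai_probability >= threshold for r in readings)


# Scores reported in this fact check (99 percent and 98 percent).
readings = [
    DetectorReading("Hive Moderation", 0.99),
    DetectorReading("Sightengine", 0.98),
]

print(all_tools_agree(readings))  # prints True
```

Requiring agreement from every tool, rather than averaging the scores, means a single low reading is enough to withhold the verdict, which suits a check where the detector output supplements the visual examination rather than replacing it.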

Conclusion

The research clearly establishes that the viral image claiming Mukesh Ambani and Nita Ambani gifted a luxury car to Suryakumar Yadav is misleading. The picture is not real and has been generated using AI.

Executive Summary

A shocking video claiming to show snakes raining down from the sky is going viral on social media. The clip shows what appear to be cobras and pythons falling in large numbers instead of rain, while people are seen running in panic through a marketplace. The video is being shared with the claim that it is the result of “tampering with nature” and that sudden snake rainfall occurred in an unidentified country. (Links and archived versions provided)

CyberPeace researched the viral claim and found it to be false. The video does not depict a real incident. Instead, it has been generated using artificial intelligence (AI).

Fact Check

To verify the authenticity of the video, we extracted keyframes and conducted a reverse image search using Google Lens. However, we did not find any credible media report linked to the viral footage. We also searched relevant keywords on Google but found no reliable national or international news coverage supporting the claim. If snakes had genuinely rained from the sky in any country, the incident would have received widespread media attention globally. A frame-by-frame analysis of the video revealed multiple inconsistencies and visual anomalies:

- In the first two seconds, a massive snake appears to fall onto electric wires, yet its body passes straight through the wires, which is physically impossible.

- The snakes falling from the sky and crawling on the ground move in an unnatural manner. Instead of falling under gravity, they appear to float in mid-air.

- Around the 9–10 second mark, a person lying on the ground has a visibly distorted hand structure, a common artifact seen in AI-generated videos.

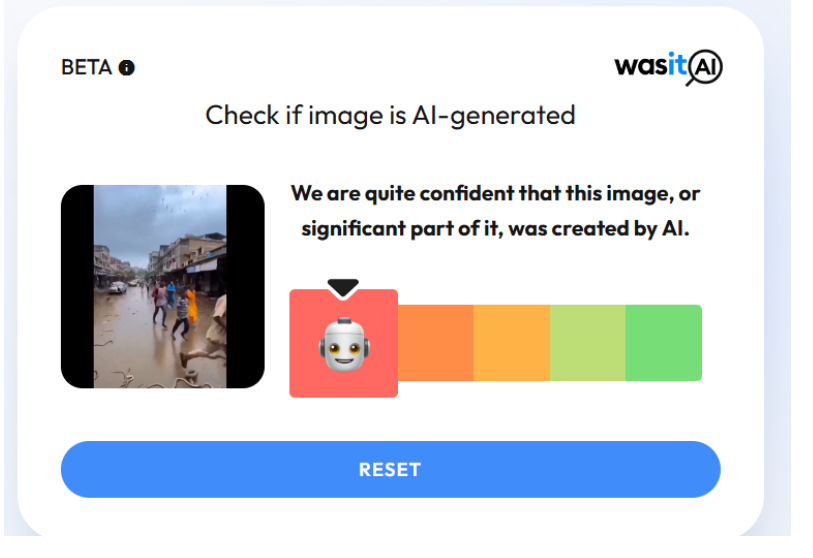

Such irregularities are typical indicators of AI-generated content. The viral video was further analyzed using the AI detection tool Hive Moderation, which indicated a 96.5% probability that the video was AI-generated.

Additionally, the image detection tool WasitAI also classified the visuals in the viral clip as highly likely to be AI-generated.

Conclusion

CyberPeace’s research confirms that the viral video claiming to show snakes raining from the sky is not authentic. The footage has been created using artificial intelligence and does not depict a real event.

Executive Summary:

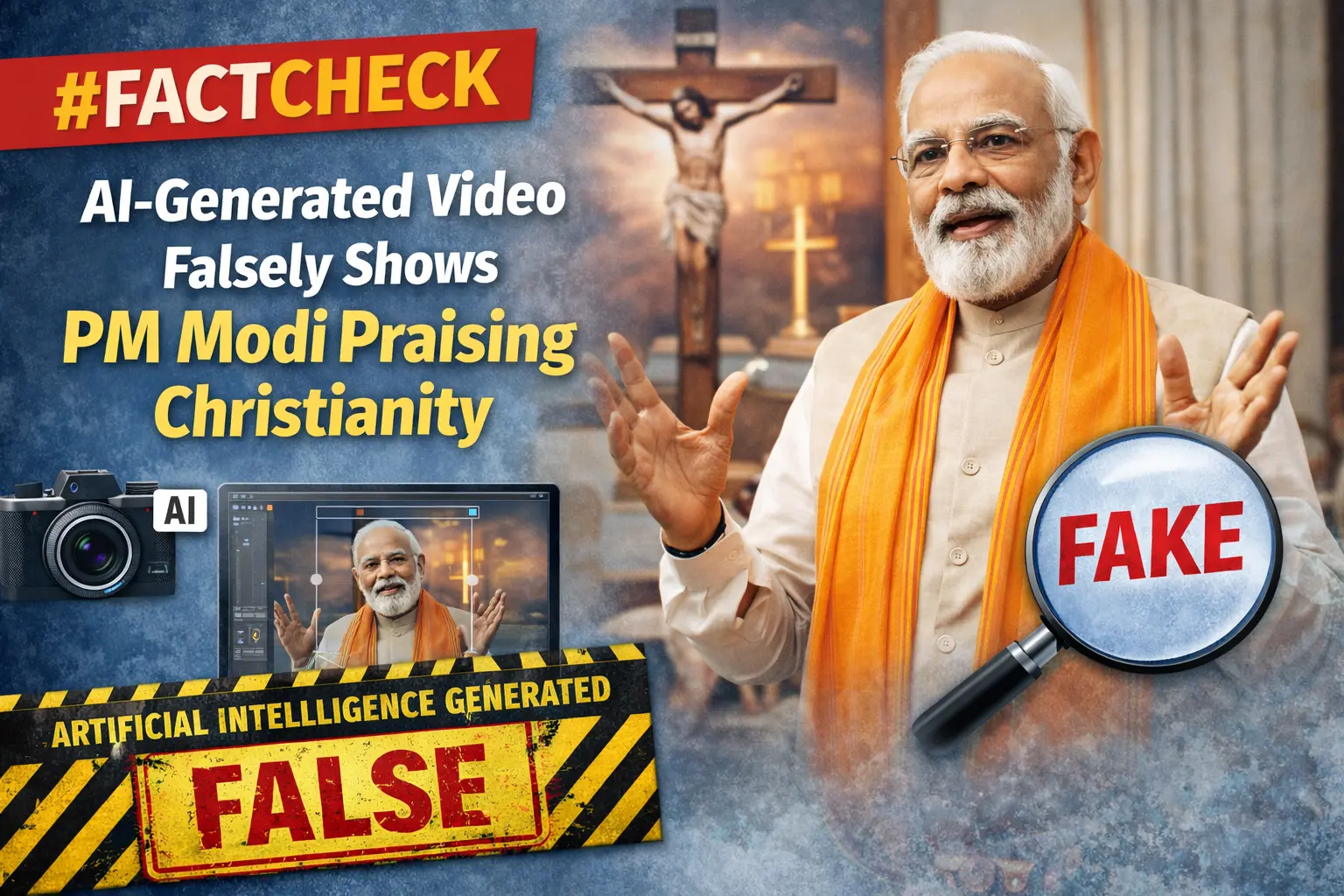

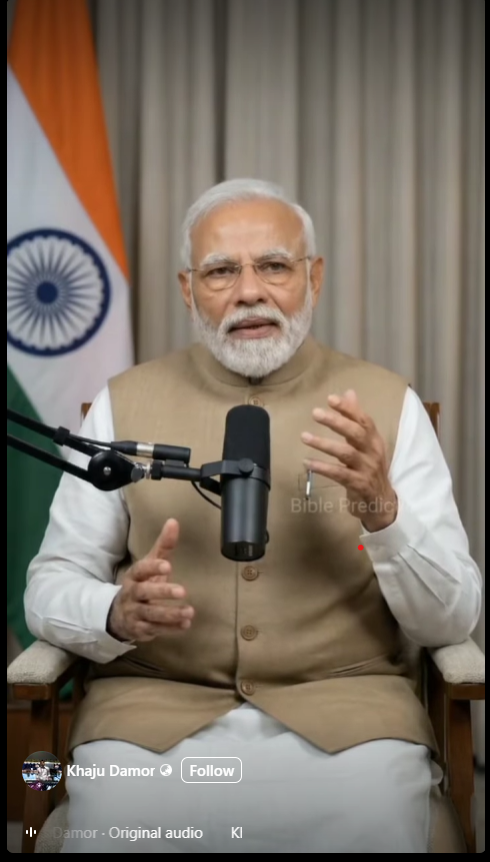

A video of Prime Minister Narendra Modi is going viral across multiple social media platforms. In the clip, PM Modi is purportedly heard praising Christianity and stating that only Jesus Christ can lead people to heaven. Several users are sharing and commenting on the video, believing it to be genuine. CyberPeace researched the viral claim and found it to be false. The circulating video has been created using artificial intelligence (AI).

Claim:

On January 29, 2026, a Facebook user named ‘Khaju Damor’ posted the viral video of PM Modi. The post gained traction, with many users sharing and commenting on it as if it were authentic. (Links and archived versions provided)

Fact Check:

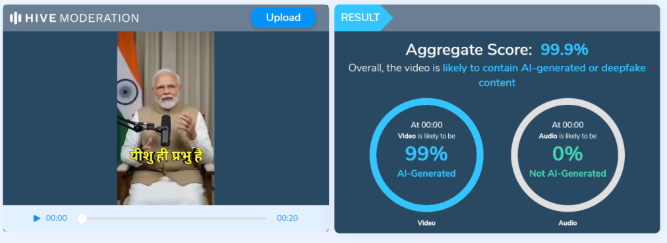

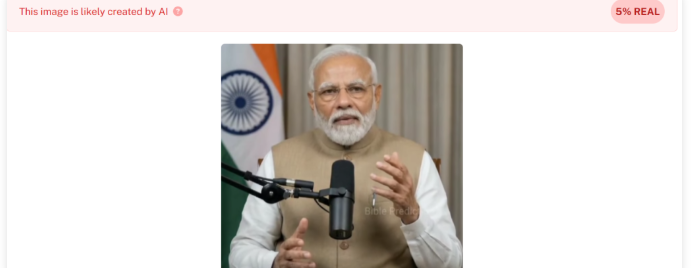

As part of our research, we first closely examined the viral video. Upon careful observation, several inconsistencies were noticed. The Prime Minister’s facial expressions and hand movements appeared unnatural. The lip-sync and overall visual presentation also raised suspicions about the clip being digitally manipulated. To verify this further, we analyzed the video using the AI detection tool Hive Moderation. The tool’s analysis indicated a 99% probability that the video was AI-generated.

To independently confirm the findings, we also ran the clip through another detection platform, Undetectable.ai. Its analysis likewise indicated a very high likelihood that the video was created using artificial intelligence.

Conclusion:

Our research confirms that the viral video of Prime Minister Narendra Modi praising Christianity and making the alleged statement about heaven is fake. The clip has been generated using AI tools and does not depict a real statement made by the Prime Minister.

Executive Summary

A video is being shared on social media claiming to show an avalanche in Kashmir. The caption of the post alleges that the incident occurred on February 6. Several users sharing the video are also urging people to avoid unnecessary travel to hilly regions. CyberPeace’s research found that the video being shared as footage of a Kashmir avalanche is not real. The research revealed that the viral video is AI-generated.

Claim

The video is circulating widely on social media platforms, particularly Instagram, with users claiming it shows an avalanche in Kashmir on February 6. The archived version of the post can be accessed here. Similar posts were also found online. (Links and archived links provided)

Fact Check:

To verify the claim, we searched relevant keywords on Google. During this process, we found a video posted on the official Instagram account of the BBC. The BBC post reported that an avalanche occurred near a resort in Sonamarg, Kashmir, on January 27. However, the BBC post does not contain the viral video that is being shared on social media, indicating that the circulating clip is unrelated to the real incident.

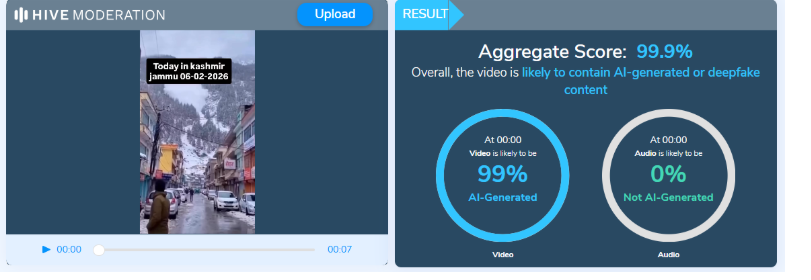

A close examination of the viral video revealed several inconsistencies. For instance, during the alleged avalanche, people present at the site are not seen panicking, running for cover, or moving toward safer locations. Additionally, the movement and flow of the falling snow appear unnatural. Such visual anomalies are commonly observed in videos generated using artificial intelligence. As part of the research, the video was analyzed using the AI detection tool Hive Moderation. The tool indicated a 99.9% probability that the video was AI-generated.

Conclusion

Based on the evidence gathered during our research, it is clear that the video being shared as footage of a Kashmir avalanche is not genuine. The clip is AI-generated and misleading. The viral claim is therefore false.

Executive Summary

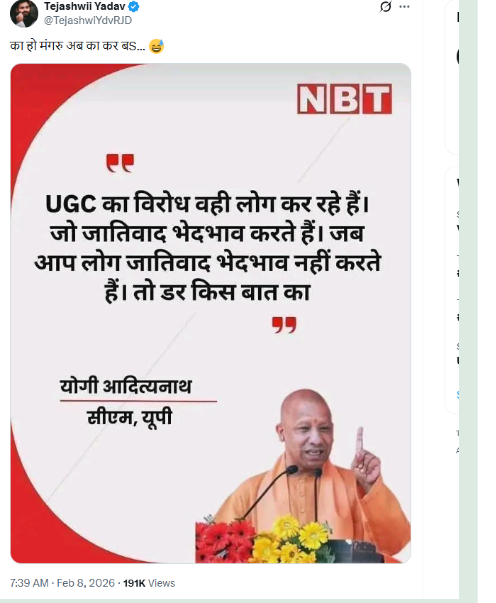

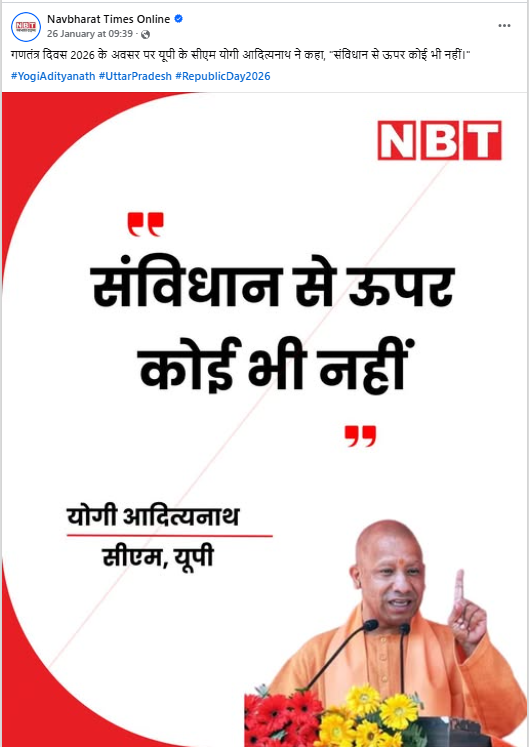

A news graphic is being shared on social media claiming that Uttar Pradesh Chief Minister Yogi Adityanath said, “Those who practice casteism and discrimination are the ones opposing UGC. If you do not indulge in caste-based discrimination, what is there to fear?” CyberPeace’s research found the viral claim circulating on social media to be false. Our research revealed that Chief Minister Yogi Adityanath never made such a statement. It was also established that the viral news graphic has been digitally edited.

Claim

On February 8, a user on social media platform X (formerly Twitter) shared a news graphic bearing the logo of Navbharat Times, attributing the above statement to CM Yogi Adityanath. The post and its archived version can be seen below, along with screenshots. (Links and screenshots provided)

Fact Check:

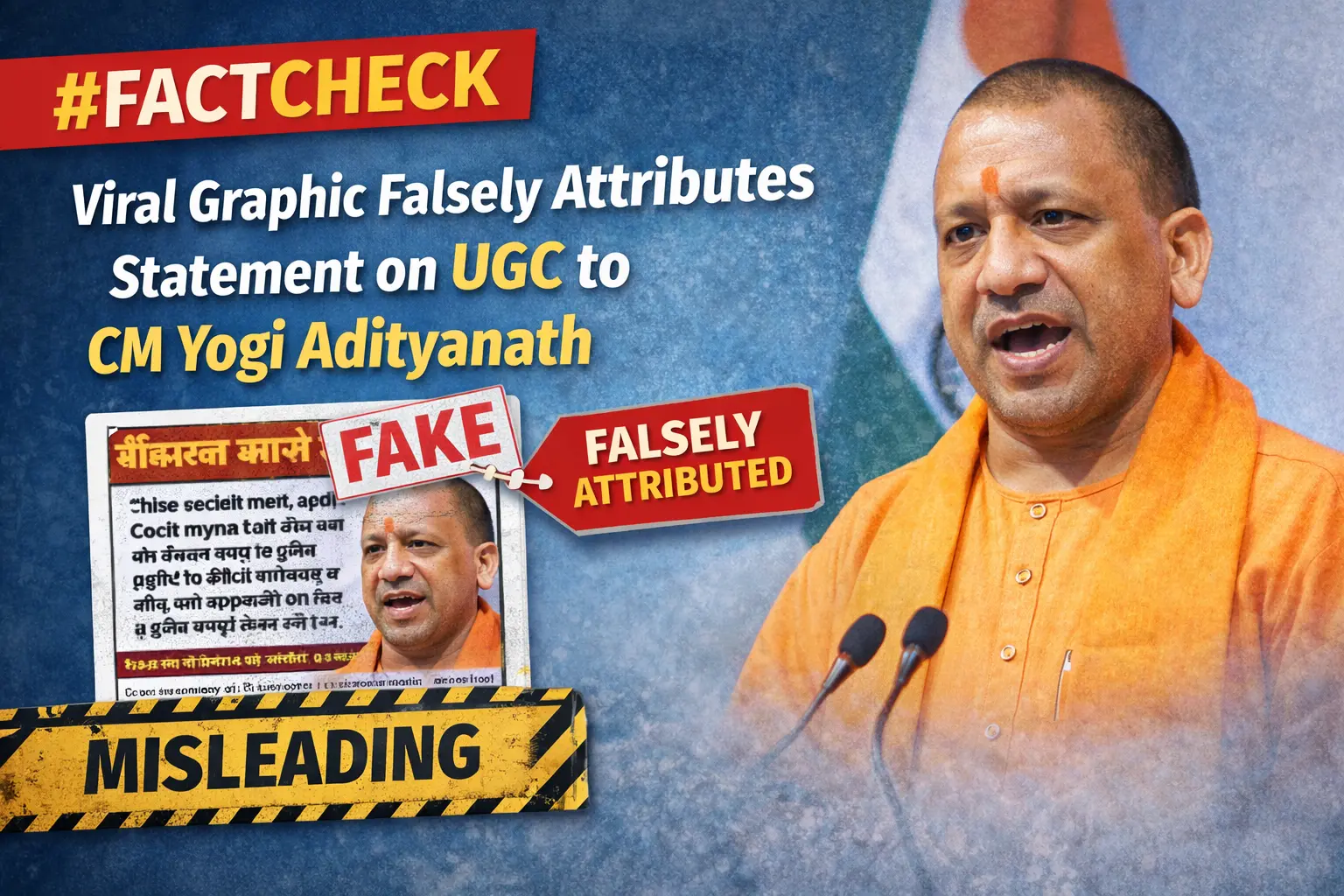

To verify the authenticity of the claim, we conducted a keyword-based search on Google. However, we did not find any credible or reliable media report supporting the viral statement. We further examined the official social media accounts of Chief Minister Yogi Adityanath, including his Facebook and Instagram handles. Our review found no post, speech, or statement resembling the claim made in the viral graphic.

Continuing the research, we examined the official social media accounts of Navbharat Times. During this process, we found the original graphic published on the Navbharat Times Facebook page on January 26, 2026. The caption of the original graphic read: “On the occasion of Republic Day 2026, Uttar Pradesh Chief Minister Yogi Adityanath said, ‘No one is above the Constitution.’”

This clearly differs from the claim made in the viral graphic, indicating that the latter was altered.

Conclusion

Our research confirms that Uttar Pradesh Chief Minister Yogi Adityanath did not make the statement being attributed to him on social media. The viral news graphic is digitally edited and misleading. The claim, therefore, is false.

Executive Summary

A digitally manipulated image of World Bank President Ajay Banga has been circulating on social media, falsely portraying him as holding a Khalistani flag. The image was shared by a Pakistan-based X (formerly Twitter) user, who also incorrectly identified Banga as the President of the International Monetary Fund (IMF), thereby fuelling misleading speculation that he supports the Khalistani movement against India.

The Claim

On February 5, an X user with the handle @syedAnas0101010 posted an image allegedly showing Ajay Banga holding a Khalistani flag. The user misidentified him as the IMF President and captioned the post, “IMF president sending signals to INDIA.” The post quickly gained traction, amplifying false narratives and political speculation. Here is the link and archive link to the post, along with a screenshot:

Fact Check:

To verify the authenticity of the image, the CyberPeace Fact Check Desk conducted detailed research. The image was first subjected to a reverse image search using Google Lens, which led to a Reuters news report published on June 13, 2023. The original photograph, captured by Reuters photojournalist Jonathan Ernst, showed Ajay Banga arriving at the World Bank headquarters in Washington, D.C., on June 2, 2023, marking his first day in office. In the authentic image, Banga is seen holding a coffee cup, not a flag.

Further analysis confirmed that the viral image had been digitally altered to replace the coffee cup with a Khalistani flag, thereby misrepresenting the context and intent of the original photograph. Here is the link to the report, along with a screenshot.

To strengthen the findings, the altered image was also analysed using the Hive Moderation AI detection tool. The tool’s assessment indicated a high likelihood that the image contained AI-generated or manipulated elements, reinforcing the conclusion that the image was not genuine. Below is a screenshot of the result.

Conclusion

The viral image claiming to show World Bank President Ajay Banga holding a Khalistani flag is fake. The photograph was digitally manipulated to spread misinformation and provoke political speculation. In reality, the original Reuters image from June 2023 shows Banga holding a coffee cup during his arrival at the World Bank headquarters. The claim that he supports the Khalistani movement is false and misleading.


Executive Summary:

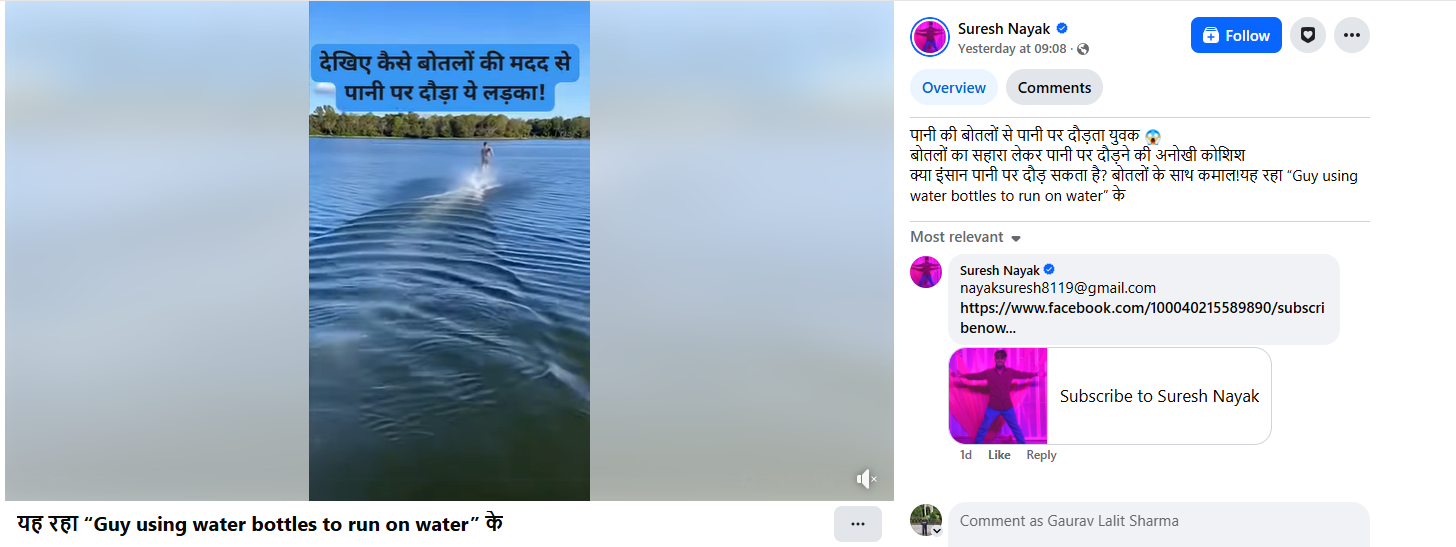

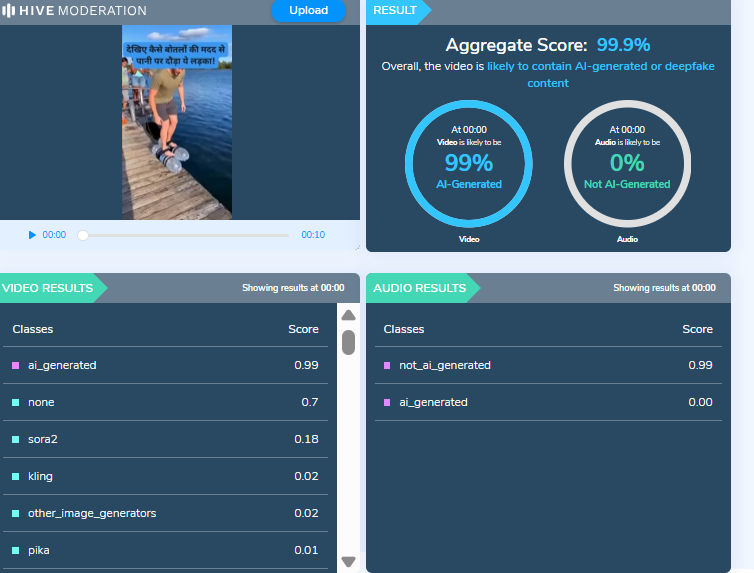

A video is being shared on social media showing a man running rapidly in a river with water bottles tied to both his feet. Users are circulating the video claiming that the man is attempting to run on water using the support of the bottles. CyberPeace’s research found the viral claim to be false. Our research revealed that the video being shared on social media is not real but has been generated using artificial intelligence (AI).

Claim:

The claim was shared by a Facebook user on February 5, 2026, who wrote that a man was running on water using water bottles tied to his feet, calling it a unique attempt and questioning whether humans can run on water. Links to the post, its archived version, and screenshots are provided below.

Fact Check:

To verify the claim, we searched relevant keywords on Google but did not find any credible media reports supporting the incident. A closer examination of the viral video revealed several visual irregularities, raising suspicion that it may have been AI-generated. The video was then scanned using the AI detection tool Hive Moderation. According to the tool’s results, the video is 99 percent likely to be AI-generated.

Conclusion:

Our research confirms that the viral video does not depict a real incident and has been falsely shared as a genuine attempt to run on water.

Executive Summary

A video showing thick smoke rising from a building and people running in panic is being shared on social media. The video is being circulated with the claim that it shows Iran launching a missile attack on the United States. CyberPeace’s research found the claim to be misleading. Our probe revealed that the video is not related to any recent incident. The viral clip is actually from the September 11, 2001 terrorist attacks on the World Trade Center in the United States and is being falsely shared as footage of an alleged Iranian missile strike on the US.

Claim:

An Instagram user shared the video claiming, “Iran has attacked a US airbase in Qatar. Iran has fired six ballistic missiles at the Al Udeid Airbase in Qatar. Al Udeid Airbase is the largest US military base in West Asia.”

Links to the post and its archived version are provided below.

Fact Check:

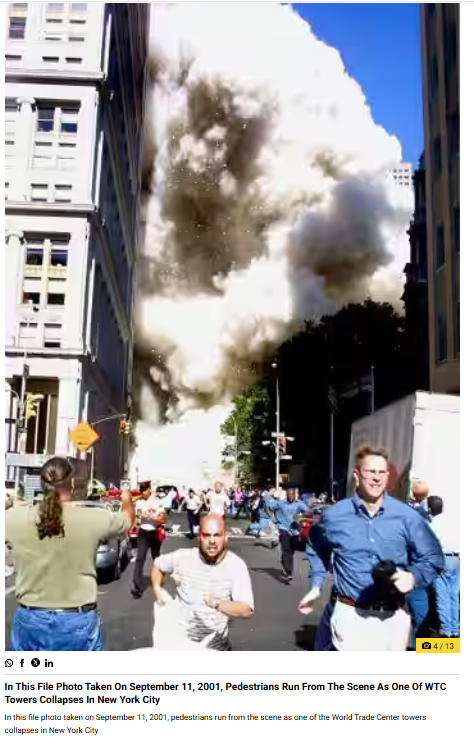

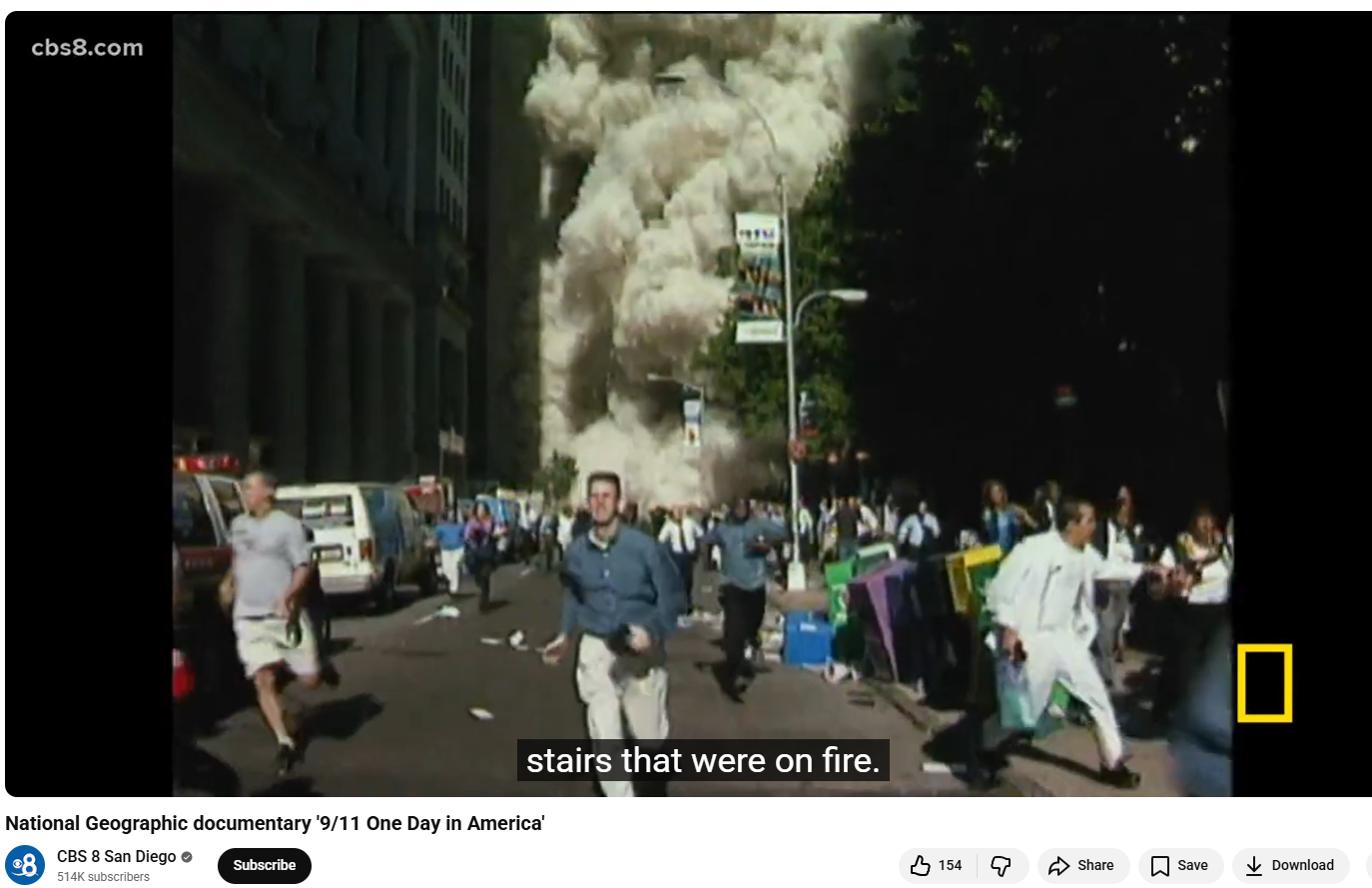

To verify the claim, we extracted key frames from the viral video and ran a reverse image search using Google Lens. During the search, we found visuals matching the viral clip in a report published by Wion on September 11, 2021. The report, titled “In pics | A look back at the scenes from the 9/11 attacks,” included an image that closely resembled the visuals seen in the viral video. The caption of the image stated that it was a file photo from September 11, 2001, showing pedestrians running as one of the World Trade Center towers collapsed in New York City.

Further research led us to the same footage on the YouTube channel CBS 8 San Diego. At the 01:11 timestamp of the video, visuals matching the viral clip can be clearly seen.

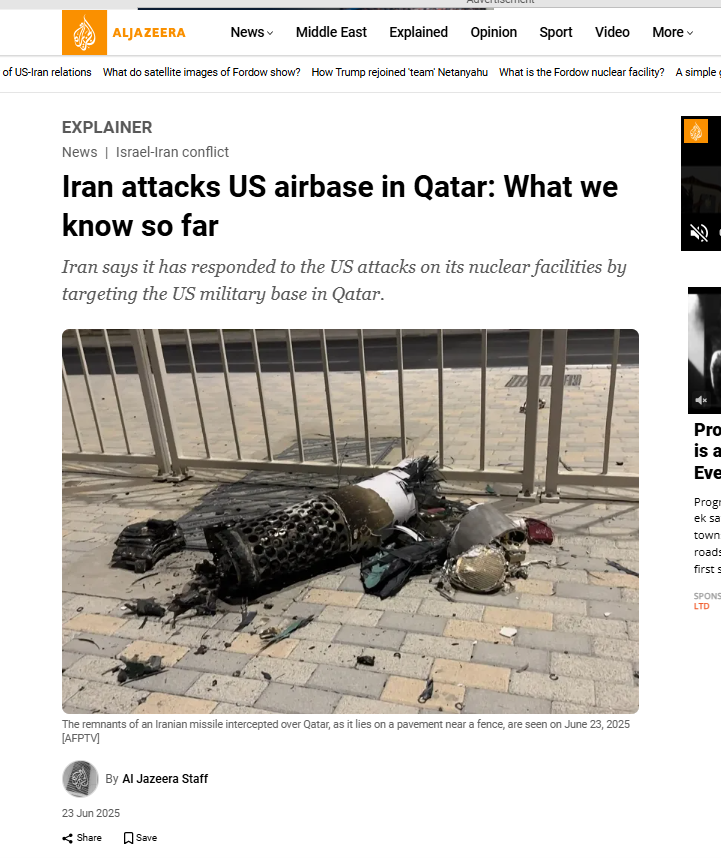

We also found an Al Jazeera report dated June 23, 2025, which confirmed that Iran had attacked US forces stationed at the Al Udeid airbase in Qatar in retaliation for US strikes on Iran’s nuclear facilities. However, the visuals used in the viral video do not correspond to this incident.

Conclusion

The viral video does not show a recent Iranian attack on a US airbase in Qatar. The clip actually dates back to the September 11, 2001 terrorist attacks on the World Trade Center in the United States. Old 9/11 footage has been falsely shared with a misleading claim linking it to Iran’s alleged missile strike on the US.

Executive Summary:

A purported media release allegedly issued in the name of the International Cricket Council (ICC) is being widely circulated on social media. The release claims that the ICC has decided to impose a one-year ban on Pakistan cricket. CyberPeace’s research found this claim to be false. The research revealed that the media release circulating on social media is fake, and no such letter or official statement has been issued by the ICC.

Claim:

On social media platform X (formerly Twitter), a user shared the viral letter on February 3, 2026, claiming that an ICC meeting was held in which board members voted on issues related to Pakistan. The post alleged that 14 out of 16 votes were cast in favour of the BCCI. The user further claimed that Pakistan’s share of ICC revenue would be reduced and that Pakistan might be asked to compensate for losses incurred by the ICC.

The viral letter, written in English, stated that matters related to Pakistan were discussed in an ICC meeting and that a 14–2 majority vote led to the decision to impose a one-year ban on Pakistan cricket. It further claimed that the Pakistan Super League (PSL) would be suspended for one year, Pakistan’s annual revenue share would be reduced from 5.75 percent to 2.25 percent, and Pakistan would not be allowed to host any ICC tournaments until 2040. The letter also claimed that these decisions were taken to safeguard the integrity and spirit of the game. Links to the viral post, archive link, and screenshots can be seen below.

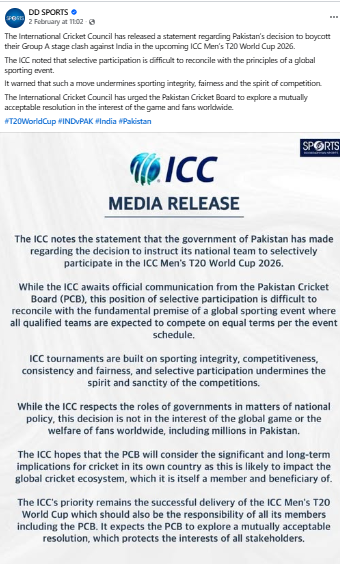

Fact Check:

To verify the viral claim, CyberPeace conducted a Google search using relevant keywords. However, no credible or reliable media reports supporting the claim were found. In the next step of the research, an official press release uploaded on DD Sports’ Facebook page on February 2, 2026, was found. The press release responded to Pakistan’s decision not to play against India in a Group A match. The DD Sports statement said that the Pakistan Cricket Board should consider the long-term and serious implications of such a decision, as it could impact the global cricket ecosystem, of which Pakistan is itself a member and beneficiary.

Notably, the official press release made no mention of any ban on Pakistan cricket, reduction in revenue share, suspension of the PSL, or restrictions on hosting ICC tournaments, contrary to the claims made in the viral letter. Further, the same official statement was found published on the ICC’s website on February 1, 2026. This release also did not mention any decision related to banning Pakistan cricket or barring the country from hosting ICC tournaments until 2040.

Conclusion

CyberPeace concludes that the media release circulating on social media is fake. The ICC has not issued any official letter or statement announcing a one-year ban on Pakistan cricket, revenue cuts, or restrictions on hosting ICC tournaments.

Executive Summary

Mumbai’s Mira–Bhayandar bridge has recently been in the news due to its unusual design. In this context, a photograph is going viral on social media showing a bus seemingly stuck on the bridge. Some users are also sharing the image while claiming that it is from Sonpur subdivision in Bihar. However, research by CyberPeace has found that the viral image is not real. The bridge shown in the image is indeed the Mira–Bhayandar bridge, which is under discussion because its design causes it to suddenly narrow from four lanes to two lanes. That said, the bridge is not yet operational, and the viral image showing a bus stuck on it has been created using Artificial Intelligence (AI).

Claim

An Instagram user shared the viral image on January 29, 2026, with the caption: “Are Indian taxpayers happy to see that this is funded by their money?” The link, archive link, and screenshot of the post can be seen below.

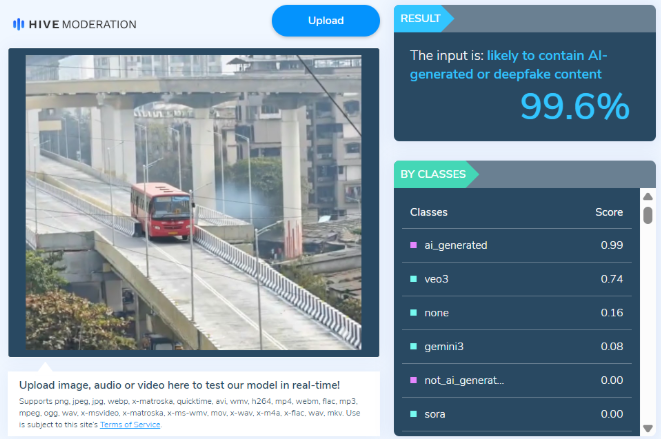

Fact Check:

To verify the claim, we first conducted a Google Lens reverse image search. This led us to a post shared by X (formerly Twitter) user Manoj Arora on January 29. While the bridge structure in that image matches the viral photo, no bus is visible in the original post. This raised suspicion that the viral image had been digitally manipulated.

We then ran the viral image through the AI detection tool Hive Moderation, which flagged it as over 99% likely to be AI-generated.

Conclusion

CyberPeace’s research confirms that while the Mira–Bhayandar bridge is real and has been in the news due to its design, the viral image showing a bus stuck on the bridge has been created using AI tools. Therefore, the image circulating on social media is misleading.

Executive Summary

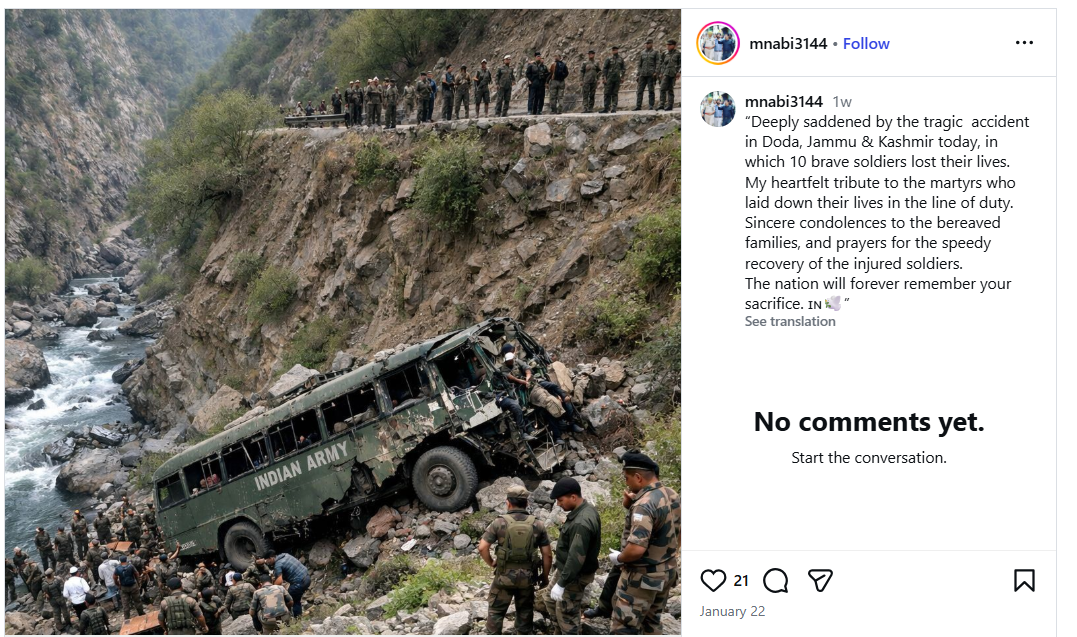

On January 22, an Indian Army vehicle met with an accident in Jammu and Kashmir’s Doda district, resulting in the death of 10 soldiers, while several others were injured. In connection with this tragic incident, a photograph is now going viral on social media. The viral image shows an Army vehicle that appears to have fallen into a deep gorge, with several soldiers visible around the site. Users sharing the image are claiming that it depicts the actual scene of the Doda accident.

However, research by CyberPeace has found that the viral image is not genuine. The photograph has been generated using Artificial Intelligence (AI) and does not represent the real accident. Hence, the viral post is misleading.

Claim

An Instagram user shared the viral image on January 22, 2026, writing: “Deeply saddened by the tragic accident in Doda, Jammu & Kashmir today, in which 10 brave soldiers lost their lives. My heartfelt tribute to the martyrs who laid down their lives in the line of duty. Sincere condolences to the bereaved families, and prayers for the speedy recovery of the injured soldiers. The nation will forever remember your sacrifice.”

The link and screenshot of the post can be seen below.

- https://www.instagram.com/p/DT0UBIRk_3k/

- https://archive.ph/submit/?url=https%3A%2F%2Fwww.instagram.com%2Fp%2FDT0UBIRk_3k%2F+

Fact Check:

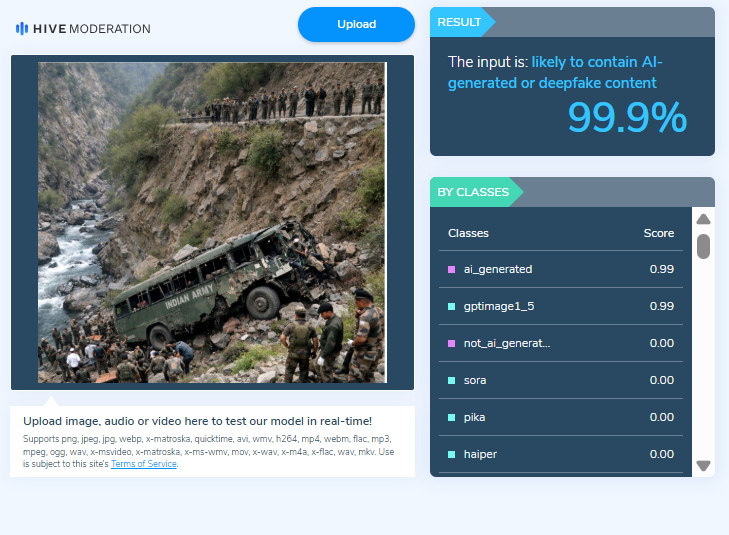

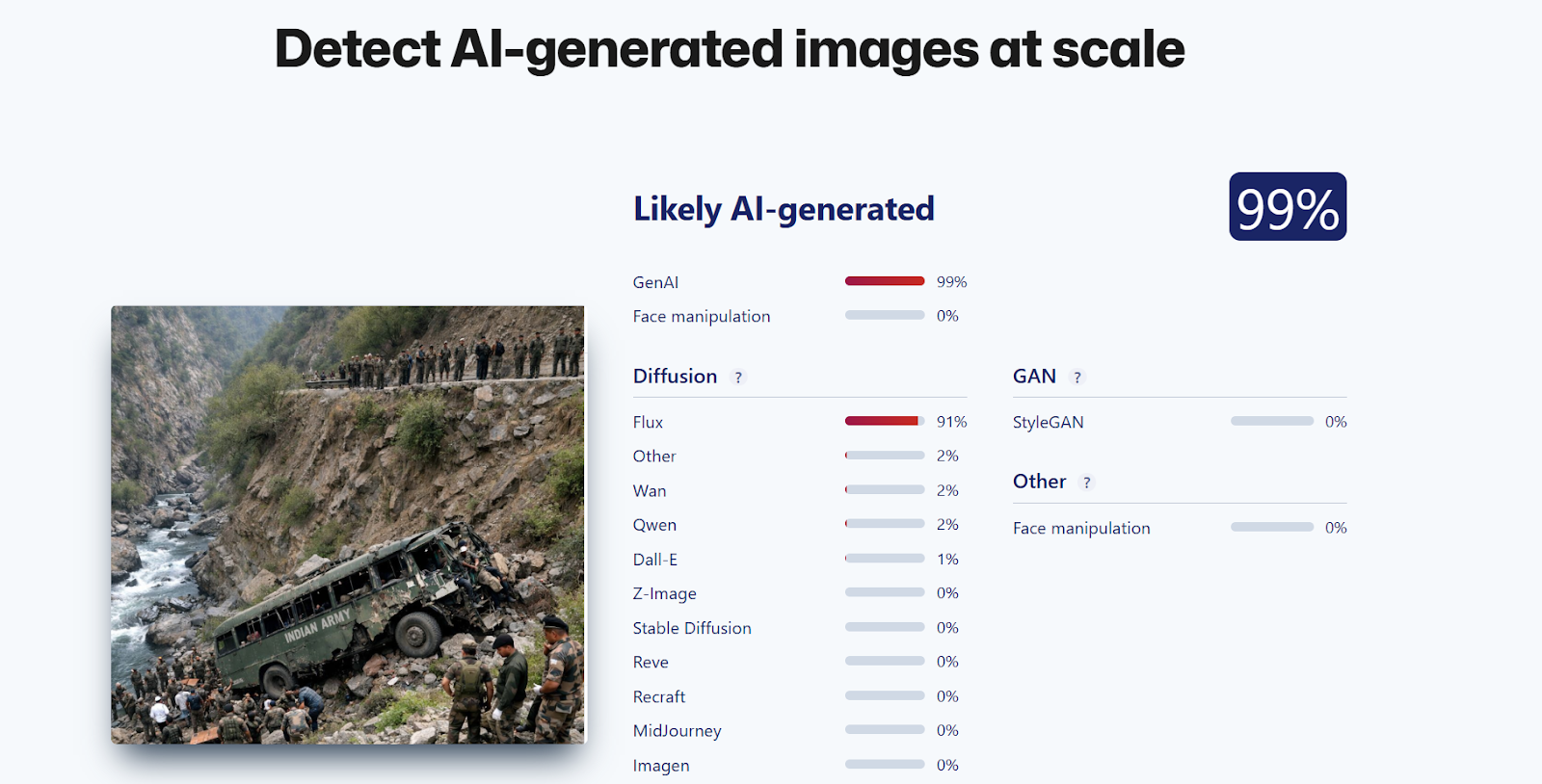

To verify the claim, we first closely examined the viral image. Several visual inconsistencies were observed. The structure of the soldier visible inside the damaged vehicle appears distorted, and the hands and limbs of people involved in the rescue operation look unnatural. These anomalies raised suspicion that the image might be AI-generated. Based on this, we ran the image through the AI detection tool Hive Moderation, which indicated that the image is over 99.9% likely to be AI-generated.

Another AI image detection tool, Sightengine, also flagged the image as 99% likely to be AI-generated.

During further research, we found a report published by Navbharat Times on January 22, 2026, which confirmed that an Indian Army vehicle had indeed fallen into a deep gorge in Doda district. According to officials, 10 soldiers were killed and 7 others were injured, and rescue operations were immediately launched.

However, it is important to note that the image circulating on social media is not an actual photograph from the incident.

Conclusion

CyberPeace research confirms that the viral image linked to the Doda Army vehicle accident has been created using Artificial Intelligence. It is not a real photograph from the incident, and therefore, the viral post is misleading.