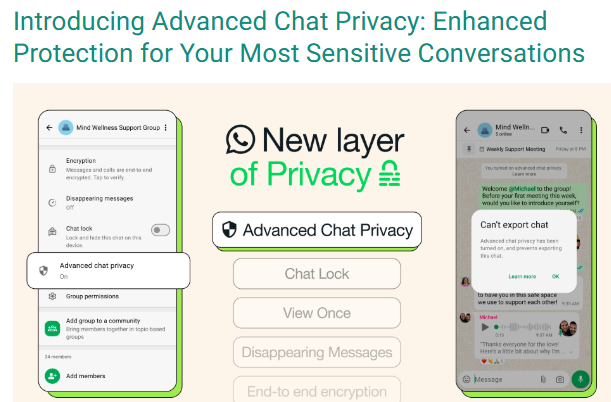

#FactCheck: A viral claim suggests that turning on Advanced Chat Privacy stops Meta AI from reading WhatsApp chats.

Executive Summary:

A viral social media video falsely claims that Meta AI reads all WhatsApp group and individual chats by default, and that enabling “Advanced Chat Privacy” can stop this. A reverse image search led us to a WhatsApp blog post from April 2025 which states that all personal and group chats remain protected with end-to-end (E2E) encryption and are accessible only to the sender and recipient. Meta AI can interact only with messages explicitly sent to it or tagged with @Meta AI. The “Advanced Chat Privacy” feature is designed to prevent chats from being shared outside WhatsApp, not to restrict Meta AI access. The viral claim is therefore misleading and factually incorrect, and is aimed at creating unnecessary fear among users.

Claim:

A viral social media video [archived link] alleges that Meta AI is actively accessing private conversations on WhatsApp, including both group and individual chats, due to the current default settings. The video further claims that users can safeguard their privacy by enabling the “Advanced Chat Privacy” feature, which purportedly prevents such access.

Fact Check:

A reverse image search of a keyframe from the viral video led us to a WhatsApp blog post from April 2025 that explains new privacy features designed to help users control their chats and data. According to the post, Meta AI can see only messages sent directly to it or tagged with @Meta AI. All personal and group chats are secured with end-to-end encryption, so only the sender and receiver can read them. The "Advanced Chat Privacy" setting helps stop chats from being shared outside WhatsApp, for example by blocking chat exports or media auto-downloads, but it does not change Meta AI's access, because Meta AI cannot read chats unless messages are shared with it. This shows that the viral claim is false and meant to confuse people.

Conclusion:

The claim that Meta AI is reading WhatsApp group chats, and that enabling the "Advanced Chat Privacy" setting can prevent this, is false and misleading. WhatsApp has officially confirmed that Meta AI accesses only messages explicitly shared with it, and that all chats remain protected by end-to-end encryption. The "Advanced Chat Privacy" setting does not govern Meta AI access, which is already restricted by default.

- Claim: A viral social media video claims that WhatsApp group chats are being read by Meta AI because of current settings, and that enabling the "Advanced Chat Privacy" setting can prevent this.

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Introduction

AI is transforming the way work is done and will redefine the nature of jobs over the next decade. For India, the question is not only which tasks machines will take over, but how millions of workers will move into other sectors, which skills will be in greater demand, and how policy will have to adapt in response. This article draws on recent labour data from India's Periodic Labour Force Survey (PLFS, 2023-24) and examines vulnerability to disruption across regions and social groups. It recommends practical measures to minimise risks and maximise economic benefits.

India’s Labour Market and Its Automation Readiness

According to India’s Periodic Labour Force Survey (PLFS), the labour market is changing and growing. The labour force participation rate rose to 60.1 per cent in 2023-24 from 57.9 per cent the year before, and the worker population ratio also improved, indicating higher employment in both rural and urban areas (PLFS, 2023-24). Female participation has also risen. However, a large share of jobs remain low-wage and informal, and most of them involve routine tasks, which makes them the most vulnerable to automation. The statistics point to a two-tiered reality in the Indian labour market: more people in work, alongside persistent structural weakness.

AI-Driven Automation’s Impact on Tasks and Emerging Opportunities

AI-driven automation mostly affects individual tasks within jobs rather than wiping out whole jobs. Routine and manual tasks are the most automatable, but recent advances in AI have extended automation to non-routine cognitive tasks such as document review, customer query handling, basic coding and first-level decision-making. Global studies point to two concurrent effects. First, some existing tasks will be automated or accelerated. Second, entirely new tasks and roles will emerge around data annotation, AI system operation, prompt engineering, algorithmic supervision and AI compliance (World Bank, 2025; McKinsey, 2017).

In India, the impact will vary by sector. Manufacturing, back-office IT services, retail and parts of financial services are likely to see the most disruption, because they combine a concentration of routine processes with relatively easy technology adoption. By contrast, healthcare, education, high-tech manufacturing and AI safety auditing are positioned to create new skilled jobs. NITI Aayog projects large GDP gains from AI adoption but stresses that India must invest simultaneously in job creation and reskilling to realise them (NITI Aayog, 2025).

Groups with Highest Vulnerability in the Transition to Automation

The PLFS shows that a large share of India's workforce has no formal employment, minimal social protection and little access to formal training. Informal workers, who make up almost 90% of India’s labour force, are likely to face the greatest risk of displacement, particularly those doing low-skilled, repetitive jobs in manufacturing and retail (PLFS, 2023-24). Women and young people in low-level service jobs also face heightened transition pressure unless reskilling and placement efforts are tailored to them. Meanwhile, most new skilled opportunities are likely to be concentrated in major cities and urban centres, widening the geographic and social divide.

The Skills and Supply Challenge

While India’s education and research ecosystem is expanding, there remain significant gaps in preparing the workforce for AI-driven change. Given the vulnerabilities highlighted earlier, AI-focused reskilling must be a priority to equip workers with practical skills that meet industry needs. Short modular programs in areas such as cloud technologies, AI operations, data annotation, human-AI interaction and cybersecurity can provide workers with employable skills. Particular attention should be given to routine-intensive sectors like manufacturing, retail and back-office services, as well as to regions with high informal employment or limited access to formal training. Public-private partnerships and localised training initiatives can help ensure that reskilling translates into concrete job opportunities rather than purely theoretical knowledge (NITI Aayog, 2025).

The Way Forward

To manage the transition, policy should focus on three interconnected goals: safeguarding the vulnerable, building skills at scale, and steering innovation so that its benefits are widely shared.

- Protect the vulnerable through social buffers. Provide informal workers with social protection in the form of portable benefits, temporary income insurance linked to reskilling, and earned training leave. The new labour codes provide essential protections such as unemployment allowances and minimum wage standards, but they could be strengthened with explicit provisions for reskilling. This would better support informal workers during job transitions and make the workforce more adaptable.

- Build skills at scale. Short modular courses in cloud computing, cybersecurity, data annotation, AI operations and human-AI interaction should be designed through collaboration between public and private training providers. Preference should be given to industry-recognised certifications and apprenticeship-based placements, and these courses and apprenticeships should be available in multiple languages to ensure inclusivity. Existing initiatives, such as NASSCOM’s FutureSkills Prime, need better outreach and marketing to reach the workforce effectively.

- Strengthen local labour market intermediaries. Close the gap between local industry demand and labour supply by improving placement services, offering government-subsidised internship programmes for displaced workers and encouraging firms to hire and train locally.

- Invest in AI literacy, AI ethics and basic education. Democratise access to research and learning by introducing AI literacy in schools, increasing STEM seats in universities and creating regional AI labs (NITI Aayog, 2025).

- Encourage AI adoption that creates jobs rather than replaces them. Fiscal and regulatory incentives should prioritise AI tools that augment worker productivity in routine roles instead of eliminating positions. Public procurement can favour firms that demonstrate responsible and inclusive AI deployment, so that technology benefits both businesses and workers.

- Monitor the transition. Use PLFS and real-time administrative data to track shrinking and expanding occupations. High-frequency labour market dashboards would enable targeted interventions in regions where displacement is accelerating.
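At its simplest, tracking shrinking and expanding occupations means comparing each occupation's employment share across two survey rounds. The sketch below is a minimal illustration under stated assumptions, not an official methodology: the `OccupationShare` record, the `flag_occupations` helper, the 0.5 percentage-point threshold and all numbers are hypothetical and are not actual PLFS figures.

```python
from dataclasses import dataclass


@dataclass
class OccupationShare:
    name: str
    previous_pct: float  # employment share (%) in the earlier survey round
    current_pct: float   # employment share (%) in the later survey round

    @property
    def change_pp(self) -> float:
        """Change in employment share, in percentage points."""
        return self.current_pct - self.previous_pct


def flag_occupations(rows, threshold_pp=0.5):
    """Sort occupations into shrinking, expanding and stable lists.

    Changes smaller than threshold_pp (a hypothetical cut-off) in absolute
    value are treated as noise, and the occupation is labelled stable.
    """
    shrinking, expanding, stable = [], [], []
    for row in rows:
        if row.change_pp <= -threshold_pp:
            shrinking.append(row.name)
        elif row.change_pp >= threshold_pp:
            expanding.append(row.name)
        else:
            stable.append(row.name)
    return shrinking, expanding, stable


# Hypothetical illustrative shares, NOT actual PLFS figures.
rows = [
    OccupationShare("occupation_a", 2.0, 1.2),
    OccupationShare("occupation_b", 0.3, 1.1),
    OccupationShare("occupation_c", 6.0, 5.8),
]
shrinking, expanding, stable = flag_occupations(rows)
print("shrinking:", shrinking)  # shrinking: ['occupation_a']
print("expanding:", expanding)  # expanding: ['occupation_b']
print("stable:", stable)        # stable: ['occupation_c']
```

The same comparison could be run per region as well as per occupation, which is what would let a dashboard direct interventions to places where displacement is accelerating.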

Conclusion

The integration of AI will significantly shape the future of the Indian workforce, but policy will determine its effect on the labour market. The PLFS shows rising employment alongside a structural weakness rooted in informal and routine work. Evidence from India and international research suggests that the right combination of social protection, skills building and responsible technology deployment can turn disruption into a path to upward mobility. The window for action is narrow. How far India will realise the productivity and GDP gains projected by national research, and how much it will invest in labour market infrastructure, remains uncertain. It is crucial that these efforts capture those gains and enable a fair and inclusive transition for workers.

References

- Annual Report, Periodic Labour Force Survey (PLFS), July 2022 - June 2023.

- Future Jobs: Robots, Artificial Intelligence, and Digital Platforms in East Asia and Pacific, World Bank.

- Jobs Lost, Jobs Gained: What the Future of Work Will Mean for Jobs, Skills, and Wages, McKinsey Global Institute.

- Roadmap for Job Creation in the AI Economy, NITI Aayog.

- India central bank chief warns of financial stability risks from growing use of AI, Reuters.

- AI Cyber Attacks Statistics 2025, SQ Magazine.

Introduction

The mysteries of the universe have fascinated humans for thousands of years. Astrophysicists are constantly working to unravel them, and advancing technology is bringing that goal within reach. Recently, with the help of Artificial Intelligence (AI), scientists have probed deeper into the cosmos: AI has revealed a previously hidden equation that more accurately “weighs” galaxy clusters. This discovery not only sheds light on how these clusters form and behave but also marks a turning point in how the cosmos is investigated.

In a separate effort, scientists and AI together have uncovered an astounding 430,000 galaxies strewn throughout the cosmos, including 30,000 ring galaxies, which are considered the most unusual of all galaxy forms. These discoveries are the first outcomes of the "GALAXY CRUISE" citizen science initiative and build on classifications from 10,000 volunteers who sifted through data from the Subaru Telescope. After training the AI on 20,000 human-classified galaxies, scientists set it loose on 700,000 galaxies from the Subaru data.

Brief Analysis

A group of astronomers from the National Astronomical Observatory of Japan (NAOJ) has successfully applied AI to ultra-wide field-of-view images captured by the Subaru Telescope. The researchers achieved high accuracy in finding and classifying spiral galaxies, and the technique is being used alongside citizen science for future discoveries.

Astronomers are increasingly using AI to analyse and clean raw astronomical images for scientific research. This involves feeding images of galaxies into neural networks, which can identify patterns in real data more quickly and with fewer errors than manual classification. These networks consist of many interconnected nodes that learn to recognise patterns, and such algorithms can now categorise galaxies with 98% accuracy.
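To make the idea of a network "learning" a classification concrete, the sketch below trains a single artificial neuron (a perceptron), the simplest building block of the larger networks described above. It is a toy, not the NAOJ team's method: the two input features, the training points and the `train_perceptron` helper are hypothetical stand-ins for what a real network would learn directly from galaxy images.

```python
def predict(weights, bias, features):
    """Return 1 ("spiral") if the weighted sum exceeds zero, else 0 ("not spiral")."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(data, learning_rate=0.1, epochs=20):
    """Classic perceptron rule: nudge weights toward each misclassified example."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in data:
            error = label - predict(weights, bias, features)  # -1, 0 or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical human-labelled examples: (feature_1, feature_2) -> 1 spiral / 0 not spiral.
training_data = [
    ((0.9, 0.8), 1), ((0.8, 0.9), 1), ((0.7, 0.6), 1),
    ((0.2, 0.1), 0), ((0.1, 0.3), 0), ((0.3, 0.2), 0),
]

weights, bias = train_perceptron(training_data)
accuracy = sum(predict(weights, bias, f) == y for f, y in training_data) / len(training_data)
print(f"training accuracy: {accuracy:.0%}")  # training accuracy: 100%
print(predict(weights, bias, (0.85, 0.75)))   # 1
```

Real galaxy classifiers stack many such units in layers and learn their own features from pixels; the single neuron only shows the core loop of predicting, measuring the error and adjusting weights.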

Another application of AI is exploring the nature of the universe, particularly dark matter and dark energy, which together make up roughly 95% of the universe's total energy content. The amounts of these components, and how they change, have significant implications for phenomena such as the arrangement of galaxies.

AI can analyse massive amounts of data. To study dark matter and dark energy, neural networks are trained on the output of complex computer simulations, learning how the observable universe changes as its underlying parameters vary. Cosmologists can then point the trained network at actual observational data.
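The simulate, train and apply workflow described above can be sketched in a few lines. This is a minimal illustration under loud assumptions: the `simulate` function is a hypothetical one-parameter toy model rather than a cosmological simulation, and ordinary least-squares regression is swapped in for the neural network so that the example stays short and dependency-free.

```python
import random


def simulate(parameter: float, rng: random.Random) -> float:
    """Hypothetical toy simulator: parameter -> noisy observable summary."""
    return 3.0 * parameter + 1.0 + rng.gauss(0.0, 0.01)


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


rng = random.Random(42)  # fixed seed so the run is reproducible

# 1. Run the simulator at known parameter values to build a training set.
true_params = [i / 100 for i in range(101)]
observables = [simulate(p, rng) for p in true_params]

# 2. "Train": learn the inverse mapping observable -> parameter.
slope, intercept = fit_line(observables, true_params)

# 3. Apply the trained model to an observed value (hypothetical here).
observed_summary = 2.5
estimate = slope * observed_summary + intercept
print(f"estimated parameter: {estimate:.3f}")  # close to 0.5
```

The key design point carries over to the real case: the model never sees the true parameter of the observed universe, only simulated pairs, so its estimates are only as good as the simulations it was trained on.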

These methods are becoming increasingly important as astronomical observatories generate enormous amounts of data. The Vera C. Rubin Observatory will produce high-resolution images of the sky from over 60 petabytes of raw data, and AI-assisted computing is being used to process it.

Data annotation techniques for training neural networks range from simple tagging to more advanced types such as image classification, which assigns a single label to an image as a whole. Still more advanced methods, such as semantic segmentation, divide an image into clusters of pixels and give each cluster a label.
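The difference between image-level and pixel-level annotation can be shown with a toy example. In the sketch below, the 4x4 brightness grid, the "spiral" label and the brightness threshold are all hypothetical; a simple threshold stands in for the mask a human annotator would draw.

```python
from collections import Counter

# Hypothetical 4x4 grayscale cutout (brightness 0-255), not real telescope data.
image = [
    [10, 12, 11, 9],
    [13, 200, 210, 12],
    [11, 205, 198, 10],
    [9, 12, 13, 11],
]

# Image classification: a single label describes the whole image.
image_label = "spiral"  # hypothetical class name

# Semantic segmentation: one label per pixel, in a mask with the image's shape.
BRIGHTNESS_THRESHOLD = 100  # hypothetical cut-off standing in for an annotator


def segment(img, threshold):
    """Label each pixel 'galaxy' if brighter than threshold, else 'background'."""
    return [["galaxy" if px > threshold else "background" for px in row] for row in img]


mask = segment(image, BRIGHTNESS_THRESHOLD)
pixel_counts = Counter(label for row in mask for label in row)

print(image_label)   # spiral
print(pixel_counts)  # Counter({'background': 12, 'galaxy': 4})
```

A classification label is one value per image, while a segmentation mask grows with the image size, which is one reason segmentation annotation is more labour-intensive.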

In these ways, AI is becoming a crucial tool for space exploration, enabling the processing and analysis of vast amounts of data and deepening our understanding of the universe. However, clear policy guidelines and the ethical use of technology should be prioritised while harnessing its full potential.

Policy Recommendation

- Real-Time Data Sharing and Collaboration - Effective policies and frameworks should be established to promote real-time data sharing among astronomers, AI developers and research institutes. Open access to astronomical data should be encouraged to facilitate better innovation and bolster the application of AI in space exploration.

- Ethical AI Use - Proper guidelines and a well-structured ethical framework can support judicious AI use in space exploration. Such a framework can play a critical role in addressing issues of data privacy, algorithmic bias and transparency in AI-driven decision-making.

- Investing in Research and Development (R&D) in the AI sector - Governments and major corporations should seize the opportunity to invest in AI R&D for space technology and exploration, for example by funding initiatives that develop AI algorithms for processing astronomical data, optimising telescope operations and detecting celestial bodies.

- Citizen Science and Public Engagement - Promoting citizen science initiatives can make better use of AI tools by involving the public in astronomical research. A prominent example is the SETI@home program (Search for Extraterrestrial Intelligence). Better outreach can educate and engage citizens in AI-enabled discovery programs, such as identifying exoplanets, classifying galaxies and searching for life beyond Earth by detecting anomalies in radio signals.

- Education and Training - Training programs should be implemented to educate astronomers in AI techniques and the intricacies of data science. There is a need to foster collaboration between AI experts, data scientists and astronomers to harness the full potential of AI in space exploration.

- Bolster Computing Infrastructure - Authorities should ensure that adequate computing infrastructure is in place to support the application of AI in astronomy. This calls for greater investment in high-performance computing systems capable of processing large volumes of data and running AI models to analyse astronomical data.

Conclusion

The use of AI in space exploration has grown rapidly. Its many use cases include discovering new galaxies and classifying celestial objects by analysing the changing parameters of outer space. Nevertheless, to fully harness its potential, robust policy and regulatory initiatives are needed to bolster real-time data sharing, not just within the scientific community but also between nations. Other policy priorities include investing in research, promoting citizen science initiatives and ensuring education and funding for astronomers. Improving computing infrastructure is especially critical, given the vast amount of data generated by astronomical observatories.

What are Deepfakes?

A deepfake is essentially a video of a person whose face or body has been digitally altered so that they appear to be someone else, typically used maliciously or to spread false information. Deepfake technology manipulates videos, images and audio using powerful computers and deep learning. It is used to generate fake news and commit financial fraud, among other wrongdoings. It overlays a digital composite onto existing video, images or audio, and cybercriminals use this Artificial Intelligence technology to do so. The term "deepfake" was first coined in 2017 by an anonymous Reddit user who went by the name "deepfakes".

Deepfakes rely on a combination of AI and machine learning (ML), which makes them hard for Web 2.0 applications to detect and almost impossible for a layperson to identify as fake. In recent times, a wave of AI-driven tools has affected industries and professions across the globe. There is a key difference between image morphing and deepfakes: image morphing is primarily used to evade facial recognition, whereas deepfakes are often created to spread misinformation and propaganda.

Issues Pertaining to Deepfakes in India

Deepfakes are a threat to any nation, as their impact can be devastating in terms of monetary losses, social and cultural unrest, and actions by anti-national elements against the sovereignty of India. Deepfake detection is difficult but not impossible. The following threats arise from deepfakes:

- Misinformation: Misinformation is one of the biggest issues with deepfakes. During the Russia-Ukraine conflict, a deepfake of Ukraine’s President, Volodymyr Zelensky, surfaced online and caused mass confusion and spread propaganda among Ukrainians.

- Instigation against the Union of India: Deepfakes pose a massive threat to the integrity of the Union of India, as they are one of the easiest ways for anti-national elements to propagate violence or instigate people against the nation and its interests. As India grows, so does the potential for anti-national attacks against it.

- Cyberbullying/Harassment: Bad actors can use deepfakes to harass and bully people online and to extort money from them.

- Exposure to Illicit Content: Deepfakes can easily be used to create illicit content, which is often found circulating on online gaming platforms where children are most active.

- Threat to Digital Privacy: Deepfakes are created from existing images and videos, so bad actors often take photos and videos from social media accounts to create them. This directly threatens the digital privacy of netizens.

- Lack of Grievance Redressal Mechanism: Most nations currently lack a concrete policy to address deepfakes. It is therefore of paramount importance to establish legal and industry-based grievance redressal mechanisms for victims.

- Lack of Digital Literacy: Despite high internet and technology penetration in India, digital literacy lags behind. This is a serious concern because many netizens do not understand the technology, which leads to under-reporting of crimes. Large-scale awareness and sensitisation campaigns are needed in India to counter misinformation and the influence of deepfakes.

How to spot deepfakes?

At first glance, deepfakes look like original videos. As our lives become increasingly digital, identifying deepfakes should become part of our everyday digital routine and netiquette, so that we stay protected and address this issue before it is too late. The following aspects can help differentiate between a real video and a deepfake:

- Look for facial expressions and irregularities: When comparing an original video with a possible deepfake, look for unnatural changes in facial expressions and other irregularities; odd eye movements or a momentary twitch on the face can be signs that a video is a deepfake.

- Listen to the audio: Deepfake audio often contains inconsistencies because it is imposed on an existing video, so check whether the sound matches the actions and gestures in the video.

- Pay attention to the background: One of the easiest ways to spot a deepfake is to examine the background. Deepfakes often show irregularities in the background because, in most cases, it is created using virtual effects, which gives it an artificial look.

- Context and Content: Most deepfakes have been aimed at creating or spreading misinformation, so the context and content of a video are integral to telling an original video from a deepfake.

- Fact-Checking: As a basic cyber safety and digital hygiene practice, always fact-check information you come across on social media, especially before sharing a post with people you know.

- AI Tools: When in doubt, check it out. Use deepfake detection tools such as Sentinel, Intel’s real-time deepfake detector FakeCatcher, WeVerify and Microsoft’s Video Authenticator to analyse videos, combating technology with technology.

Recent Instance

A deepfake video of actress Rashmika Mandanna recently went viral on social media, creating quite a stir. The video showed a woman entering an elevator who looked remarkably like Mandanna. It was later revealed that the woman in the video was not Mandanna; rather, Mandanna's face had been superimposed using AI tools. Some social media users were deceived into believing the woman was Mandanna, while others identified the video as an AI-generated deepfake. The original video was of a British-Indian woman named Zara Patel, who has a substantial following on Instagram. The incident drew criticism from social media users towards those who created and shared the video merely for views, along with calls for strict action against the uploaders. Rapid changes in the digital world pose a threat to personal privacy, so caution is advised when sharing personal content on social media.

Legal Remedies

Although deepfakes are not specifically recognised in Indian law, they are indirectly addressed by Section 66E of the IT Act, which penalises intentionally capturing, publishing or transmitting images of a person's private area without their consent in circumstances that violate their privacy. The maximum penalty for this offence is three years' imprisonment, a fine of up to ₹2 lakh, or both. Under the Digital Personal Data Protection (DPDP) Act, 2023, the creation of deepfakes directly affects an individual's right to digital privacy; it would also breach the Intermediary Guidelines under the IT Rules, which require platforms to exercise caution in disseminating and publishing misinformation through deepfakes. Beyond these, the only legal remedies available for deepfakes are indirect provisions of the Indian Penal Code covering the sale and dissemination of obscene publications, songs and acts, cheating and dishonestly inducing delivery of property, and forgery with intent to defame. Given the growing power of misinformation, deepfakes must be recognised legally. The Data Protection Board and the soon-to-be-established fact-checking body must recognise crimes related to deepfakes and provide an efficient system for filing complaints.

Conclusion

Deepfakes are a by-product of advances in Web 3.0 and are just the tip of the iceberg in terms of the threats posed by emerging technologies. It is pertinent to upskill and educate netizens about the key aspects of deepfakes so they can stay safe in the future. At the same time, developing and developed nations need to create policies and laws to regulate deepfakes efficiently and to set up redressal mechanisms for victims and industry. As we move ahead, it is important to address the threats arising from emerging technologies while building robust resilience against them.