#FactCheck: AI-Generated Audio Falsely Claims COAS Admitted to Loss of 6 Jets and 250 Soldiers

Executive Summary:

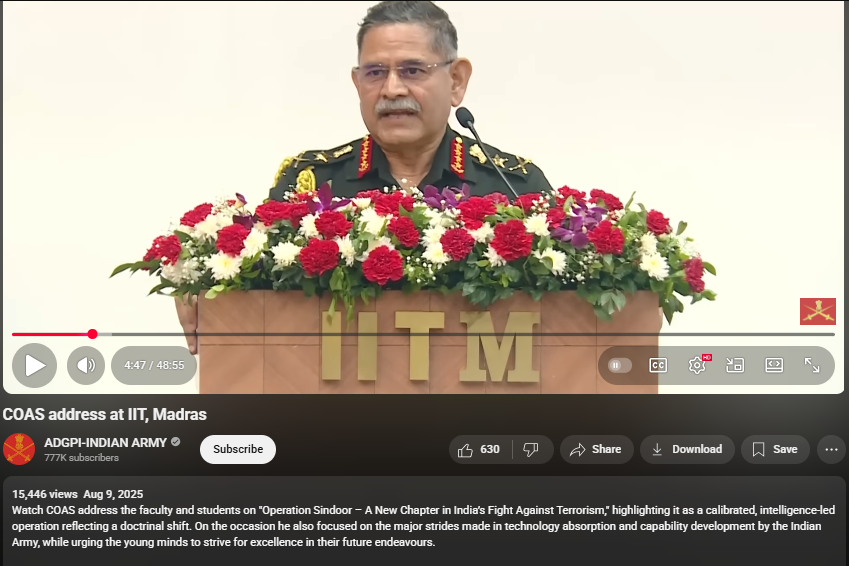

A viral video (archive link) claims General Upendra Dwivedi, Chief of Army Staff (COAS), admitted to losing six Air Force jets and 250 soldiers during clashes with Pakistan. Verification revealed the footage is from an IIT Madras speech, with no such statement made. AI detection confirmed parts of the audio were artificially generated.

Claim:

The claim in question is that General Upendra Dwivedi, Chief of Army Staff (COAS), admitted to losing six Indian Air Force jets and 250 soldiers during recent clashes with Pakistan.

Fact Check:

Upon conducting a reverse image search on key frames from the video, it was found that the original footage is from IIT Madras, where the Chief of Army Staff (COAS) was delivering a speech. The video is available on the official YouTube channel of ADGPI – Indian Army, published on 9 August 2025, with the description:

“Watch COAS address the faculty and students on ‘Operation Sindoor – A New Chapter in India’s Fight Against Terrorism,’ highlighting it as a calibrated, intelligence-led operation reflecting a doctrinal shift. On the occasion, he also focused on the major strides made in technology absorption and capability development by the Indian Army, while urging young minds to strive for excellence in their future endeavours.”

A review of the full speech revealed no reference to the destruction of six jets or the loss of 250 Army personnel. This indicates that the circulating claim is not supported by the original source and may contribute to the spread of misinformation.

Further analysis with AI detection tools such as Hive Moderation indicated that portions of the audio are AI-generated.

Conclusion:

The claim is baseless. The video is a manipulated creation that combines genuine footage of General Dwivedi’s IIT Madras address with AI-generated audio to fabricate a false narrative. No credible source corroborates the alleged military losses.

- Claim: AI-Generated Audio Falsely Claims COAS Admitted to Loss of 6 Jets and 250 Soldiers

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Introduction

In the sprawling online world, trusted relationships are frequently taken advantage of by cybercriminals seeking to penetrate guarded systems. The Watering Hole Attack is one advanced method, which focuses on a user’s ecosystem by compromising the genuine sites they often use. This attack method is different from phishing or direct attacks as it quietly exploits the everyday browsing of the target to serve malicious content. The quiet and exact nature of watering hole attacks makes them prevalent amongst Advanced Persistent Threat (APT) groups, especially in conjunction with state-sponsored cyber-espionage operations.

What Qualifies as a Watering Hole Attack?

A Watering Hole Attack targets and infects a trusted website that a particular organization or community, such as a specific industry sector, uses regularly. The name comes from predators that wait by a watering hole for prey to come and drink. Attackers inject malicious code, such as an exploit kit or malware loader, into websites that are popular with their victims, who are then infected when they unknowingly visit those sites. This opens a gateway for attackers to infiltrate corporate systems, harvest credentials, and pivot across internal networks.

How Watering Hole Attacks Unfold

The attack lifecycle usually progresses as follows:

- Reconnaissance - Attackers gather intelligence on the websites frequented by the target audience, including specialized communities, partner websites, or local news sites.

- Website Exploitation - By exploiting outdated CMS software and insecure plugins, attackers gain access to the target website and insert malicious code such as JavaScript or iframe redirections.

- Delivery and Exploitation - The visitor’s browser executes the malicious code injected into the page. The code might include a redirection payload which sends the user to an exploit kit that checks the user’s browser, plugins, operating system, and other components for vulnerabilities.

- Infection and Persistence - The infected system receives malware such as remote access trojans (RATs), keyloggers, or backdoors. These enable lateral movement and long-term persistence within the organisation for espionage.

- Command and Control (C2) - For further instructions, additional payload delivery, and stolen data retrieval, infected devices connect to servers managed by the attackers.
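From a defender's point of view, the Website Exploitation step above leaves a visible trace: a script or iframe that loads from a host the site owner never approved. The sketch below is a minimal illustration of checking a page for such sources, using only the Python standard library. The function name `unexpected_sources`, the class name, and the allowlist are hypothetical choices for this example, and a real injection may be obfuscated or inline, which this check would not catch.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ExternalSourceFinder(HTMLParser):
    """Collects the src attribute of every <script> and <iframe> tag."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "iframe"):
            src = dict(attrs).get("src")
            if src:
                self.sources.append((tag, src))


def unexpected_sources(html, allowed_hosts):
    """Return (tag, src) pairs whose host is not in allowed_hosts.

    Hypothetical helper: relative URLs have no host and are treated
    as same-site, so they are never flagged.
    """
    finder = ExternalSourceFinder()
    finder.feed(html)
    flagged = []
    for tag, src in finder.sources:
        host = urlparse(src).hostname
        if host is not None and host not in allowed_hosts:
            flagged.append((tag, src))
    return flagged


# Example page with one same-site script, one approved CDN script,
# and one iframe pointing at an unapproved (made-up) host.
page = (
    '<html><script src="/static/app.js"></script>'
    '<script src="https://cdn.example.org/lib.js"></script>'
    '<iframe src="//evil.example.net/kit"></iframe></html>'
)
flagged = unexpected_sources(page, {"cdn.example.org"})
```

Running a check like this on a schedule against an organisation's own pages, and comparing the result with the last known-good state, is one simple way to notice an injected redirect early.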

Key Features of Watering Hole Attacks

- Indirect Approach: Instead of going after the main target, attackers focus on sites that the main target trusts.

- Supply-Chain-Like Impact: An infected industry portal can affect many companies at the same time.

- Low Profile: It is difficult to identify since the traffic comes from real websites.

- Advanced Customization: Exploit kits are known to specialize in making custom payloads for specific browsers or OS versions to increase the chance of success.

Why Are These Attacks Dangerous?

Watering hole attacks shift the battlefield to new ground in cyber warfare on the web. They bypass firewalls, email filters, and other security measures because they ride on traffic to and from real, trusted websites. When the attacks work as intended, the following consequences can be expected:

- Stealing Credentials: Including privileged accounts and VPN credentials.

- Espionage: Theft of intellectual property, defense blueprints, or government confidential information.

- Supply Chain Attacks: Resulting in a series of infections among related companies.

- Zero-Day Exploits: Including automated attacks that use zero-day exploits to maximise damage.

Incidents of Primary Concern

The implications of watering hole attacks have been felt in the real world for quite some time. An example from 2019 reveals this, where a known VoIP firm’s site was compromised and used to spread data-stealing malware to its users. Likewise, in 2014, the Operation Snowman campaign—which seems to have a state-backed origin—attempted to infect users of a U.S. veterans’ portal in order to gain access to visitors from government, defense, and related fields. Rounding up the list, in 2021, cybercriminals attacked regional publications focusing on energy, using the publications to spread malware to company officials and engineers working on critical infrastructure, as well as to steal data from their systems. These attacks show the widespread and dangerous impact of watering hole attacks in the world of cybersecurity.

Detection Issues

Due to the following reasons, traditional approaches to security fail to detect watering hole attacks:

- Use of Authentic Websites: Attacks involving trusted and popular domains evade detection via blacklisting.

- Encrypted Traffic: Delivering payloads over HTTPS conceals malicious scripts from being inspected at the network level.

- Fileless Methods: Using in-memory execution is a modern campaign technique, and detection based on signatures is futile.

Mitigation Strategies

To effectively neutralize the threat of watering hole attacks, an organization should implement a defense-in-depth strategy that incorporates the following elements:

- Patch Management and Hardening -

  - Conduct routine updates on operating systems, web browsers, and extensions to eliminate exploit opportunities.

  - Either remove or reduce the use of high-risk elements such as Flash and Java, if feasible.

- Network Segmentation - Minimize lateral movement by isolating critical systems from the general user network.

- Behavioral Analytics - Implement Endpoint Detection and Response (EDR) tools to oversee unusual behaviors on processes—for example, script execution or dubious outgoing connections.

- DNS Filtering and Web Isolation - Implement DNS-layer security to deny access to known malicious domains and use browser isolation for dangerous sites.

- Threat Intelligence Integration - Track watering hole threats and campaigns for indicators of compromise (IoCs) on advisories and threat feeds.

- Multi-Layer Email and Web Security - Use web gateways integrated with dynamic content scanning, heuristic analysis, and sandboxing.

- Zero Trust Architecture - Apply least privilege access, require device attestation, and continuous authentication for accessing sensitive resources.
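The DNS filtering item above comes down to one decision per lookup: is the requested domain, or any parent of it, on a list of known malicious domains? The following is a minimal sketch of that decision only. The function name `is_blocked` and the sample blocklist are assumptions made for illustration; production DNS-layer security relies on maintained threat feeds and resolver integration rather than a hand-written set.

```python
def is_blocked(domain, blocklist):
    """Return True if domain or any of its parent domains is blocklisted.

    Hypothetical helper: matches whole labels only, so "notbad.example"
    does not match a blocklist entry for "bad.example".
    """
    labels = domain.lower().rstrip(".").split(".")
    for i in range(len(labels)):
        candidate = ".".join(labels[i:])
        if candidate in blocklist:
            return True
    return False


# Made-up blocklist entry for demonstration.
blocklist = {"bad.example"}
print(is_blocked("cdn.bad.example", blocklist))   # subdomain of a listed domain
print(is_blocked("notbad.example", blocklist))    # different registered name
```

Matching on whole labels rather than on raw string suffixes avoids blocking unrelated domains whose names merely end with the same characters.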

Incident Response Best Practices

- Forensic Analysis: Check affected endpoints for any mechanisms set up for persistence and communication with C2 servers.

- Log Review: Look through proxy, DNS, and firewall logs to detect suspicious traffic.

- Threat Hunting: Search your environment for known Indicators of Compromise (IoCs) related to recent watering hole attacks.

- User Awareness Training: Help employees understand the dangers related to visiting external industry websites and promote safe browsing practices.
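The Log Review and Threat Hunting steps above can be sketched as a simple pass over proxy logs that looks for requests to known IoC domains. The log layout here (timestamp, client IP, URL, separated by whitespace) and the name `hunt_iocs` are assumptions for this example; real proxy logs vary by vendor and need their own parsing.

```python
from urllib.parse import urlparse


def hunt_iocs(log_lines, ioc_domains):
    """Return (timestamp, client, host) for each request to an IoC domain.

    Assumes a hypothetical log format: "<timestamp> <client_ip> <url>".
    Lines that do not have at least three fields are skipped.
    """
    hits = []
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue
        timestamp, client, url = parts[0], parts[1], parts[2]
        host = urlparse(url).hostname
        if host and host in ioc_domains:
            hits.append((timestamp, client, host))
    return hits


# Made-up log lines and IoC domain for demonstration.
logs = [
    "2025-01-10T09:15:02Z 10.0.0.12 https://news.example.org/energy",
    "2025-01-10T09:15:03Z 10.0.0.12 https://c2.example.net/beacon?id=7",
    "malformed line",
]
hits = hunt_iocs(logs, {"c2.example.net"})
```

Each hit identifies an internal client that contacted a suspicious host, which gives the forensic analysis step a concrete list of endpoints to examine first.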

The Immediate Need for Action

The adoption of cloud computing and remote working models has significantly increased the attack surface for watering hole attacks. Trust and healthcare sectors are increasingly targeted by nation-state groups and cybercrime gangs using this technique. Not taking action may lead to data leaks, legal fines, and break-ins through the supply chain, which damage the trustworthiness and operational capacity of the enterprise.

Conclusion

Watering hole attacks demonstrate how attackers have evolved from broad phishing campaigns to very specific, trust-based attacks. Protecting against these advanced attacks requires a zero-trust mindset, adaptive defenses, and continuous monitoring, that is, multi-layered security. Integrating advanced response measures, proactive threat intelligence, and detection technologies enables organizations to turn this silent threat from a lurking predator into a manageable risk.

References

- https://www.fortinet.com/resources/cyberglossary/watering-hole-attack

- https://en.wikipedia.org/wiki/Watering_hole_attack

- https://www.proofpoint.com/us/threat-reference/watering-hole

- https://www.techtarget.com/searchsecurity/definition/watering-hole-attack

The recent Promotion and Regulation of Online Gaming Act, 2025, that came into force in August, has been one of the most widely anticipated regulations in the digital entertainment industry. Among provisions such as promoting esports and licensing of online gaming, the legislation notably introduces a blanket ban on real-money gaming (RMG). The rationale behind this was to reduce its addictive effects, protect minors, and limit the circulation of black-money. However, in reality, the Act has spawned apprehension about the legislative process, regulatory redundancy, and unintended consequences that can shift users and revenue to offshore operators.

From Debate to Prohibition: How the Act was Passed

The Promotion and Regulation of Online Gaming Act was passed as a central law, providing an overarching framework for the earlier fragmented state laws on online betting and gambling. Proponents argue that a unified national framework was needed to deal with the scale of online betting and its detrimental impact on young users. Unlike the previous decade of incremental experiments, which swung between self-regulation and partial restrictions, the current Act moves directly to criminalisation. Stakeholders in the industry believe that this type of sudden, blanket action creates uncertainty and erodes confidence in the system in the long run. Further, critics have pointed out that the Bill was passed without adequate Parliamentary deliberation, raising the question of whether procedural safeguards were upheld.

Prohibition of Online RMG

Within the Indian context, a distinction has long been drawn between games of skill and games of chance. The latter, like lotteries or casino games, have been strictly prohibited under state laws, whereas the former, like rummy or fantasy sports, have generally been allowed after courts recognized them as skill-based. The Online Gaming Act of 2025 abolishes this distinction online, banning all RMG activities that involve cash transactions, regardless of skill or chance. The Act also criminalises the advertising, facilitation, and hosting of such sites, thereby penalizing offshore operators that target Indian customers and exposing payment gateways, app stores, and advertisers to penalties.

The Problem of Overlap

One potential issue that the Act presents is its overlap with the existing laws. The IT Rules 2023 mandate intermediaries in the gaming sector to appoint compliance officers, submit monthly reports, and undergo due diligence. The new Act introduces a three-level classification of games, whereas the advisories of the Central Consumer Protection Authority (CCPA) under the Consumer Protection Act treat online betting as an unfair trade practice.

This multiplicity of regulations builds a maze where different Ministries and state governments have overlapping jurisdiction. Policy experts caution that such an overlap can create enforcement challenges, punish players who act within the law, and leave offshore malefactors undetected.

Unintended Consequences: Driving Users Offshore

Outright prohibition rarely removes demand; it only displaces it. Offshore sites have taken advantage of the situation as Indian operators like Dream11 shut down their money games after the ban. Foreign betting companies that are not registered in India have reportedly begun advertising aggressively, and most of them have backend infrastructure that the Act cannot regulate (Storyboard18).

This diversion of users to unregulated markets carries two main risks. First, Indian players lose the consumer protection that local regulation offers, and their data can be sent to suspicious foreign organizations. Second, the government loses control over money flows, which can be routed through informal channels, cryptocurrencies, or other opaque systems. Industry analysts warn that such developments may worsen the black-money problem instead of solving it (IGamingBusiness).

Advertising, Age Gating, and Digital Rights

The Act has also strengthened advertisement regulations, aligning with advisories issued by the Advertising Standards Council of India, which prohibit the targeting of minors. However, critics believe that enforcement remains inadequate, and children can access unregulated overseas applications with comparative ease. In the absence of complementary digital literacy programs and strong parental controls, these restrictions risk being superficial rather than effective.

Privacy advocates also warn that frequent prompts, vague messages, or invasive surveillance can weaken the digital rights of users instead of strengthening them. Overregulation has also been found to create banner blindness in global contexts where users ignore warnings without first clearly understanding them.

Enforcement Challenges

The Act puts a lot of responsibilities on many stakeholders, including the Ministry of Information and Broadcasting (MIB) and the Reserve Bank of India (RBI). Platforms like Google Play and Apple App Store are expected to verify government-approved lists of compliant gaming apps and remove non-compliant or banned ones, as directed by the MIB and the RBI. Although this pressure may motivate intermediaries to collaborate, it may also have a risk of overreach when it is applied unequally or in a political way.

According to experts, the solution should be underpinned by technology itself. Artificial intelligence can be used to identify illegal advertisements, detect underage gaming, and trace payment streams. At the same time, regulators should issue definitive lists of compliant and non-compliant applications to guide consumers and intermediaries alike. Without such practical provisions, enforcement risks remaining patchy.

Online Gaming Rules

On 1 October 2025, the government issued a draft of the Online Gaming Rules in accordance with the Promotion and Regulation of Online Gaming Act. The regulations focus on the creation of the compliance frameworks, define the classification of the allowed gaming activities, and prescribe grievance-redressal mechanisms aiming to promote the protection of the players and procedural transparency. However, the draft does not revisit or soften the existing blanket prohibition on real-money gaming (RMG) and, hence, the questions about the effectiveness of enforcement and regulatory clarity remain open (Times of India, 2025).

Protecting Consumers Without Stifling Innovation

The ban highlights a larger conflict, i.e., the protection of the vulnerable users without stifling an industry that has traditionally contributed to innovation, jobs, and the collection of tax revenue. Online gaming has significantly added to the GST collections, and the sudden shakeup brings fiscal concerns (Reuters).

Several legal objections to the Act have already been brought, asking whether the Act is constitutional, especially as to whether the restrictions are proportional to the right to trade. The outcome of such cases will define the future trajectory of the digital economy of India (Reuters).

Way Forward

Instead of outright prohibition, a more balanced approach that incorporates regulation and consumer protection is suggested by the experts. Key measures could include:

- A clear distinction between games of skill and games of chance, with proportionate regulation.

- Age verification and digital literacy campaigns to protect the underage population.

- Stronger compliance requirements for advertising and payments, with enforceable penalties for non-compliance.

- Coordinated oversight among different ministries to prevent duplication and regulatory conflict.

- Leveraging AI and fintech to track illegal financial activities (black money flows) while fostering innovation.

Conclusion

The Online Gaming Act 2025 addresses social issues, such as addiction, monetary risk, and child safety, that require governance interventions. However, the path it follows to this end, that of total prohibition, is more likely to spawn a new set of issues instead of providing solutions because it will send consumers to offshore sites, undermine consumer rights, and slow innovation.

For India, the real challenge is not whether to prohibit online money gaming but how to create a balanced, transparent, and enforceable framework that protects users while fostering a responsible gaming ecosystem. With better coordination, reasonable use of technology, and balanced protection, India can reduce the adverse consequences of online betting without pushing the industry into the shadows.

References:

- India's Dream11, top gaming apps halt money-based games after ban

- India online gambling ban could drive punters to black market

- Offshore betting firms with backend ops in India not covered by online gaming law

- The Great Gamble: India’s Online Gaming Ban, The GST Battle, And What Lies Ahead.

- Game Over for Online Money Games? An Analysis of the Online Gaming Act 2025

- Government gambles heavily on prohibiting online money gaming

- Online gaming regulation: New rules to take effect from October 1; government stresses consultative approach with industry


Introduction

The ongoing armed conflict between Israel and Hamas/Palestine is in the news all across the world. The latest conflict was triggered by unprecedented attacks against Israel by Hamas militants on October 7, killing more than a thousand people. Israel has launched a massive counter-offensive against the Islamic militant group. Amid the war, misinformation and propaganda are spreading on various social media platforms, and tech researchers have detected a network of 67 accounts that posted false content about the war and received millions of views. The European Commission has sent a letter to Elon Musk, directing X to remove illegal content and disinformation or face possible penalties. It has also formally requested information from several social media giants on their handling of content related to the Israel-Hamas war. This widespread disinformation shapes perceptions of the war and erodes goodwill among citizens around the world. Bad actors weaponise information in this way, fuelling online hate, terrorism, and extremism and deepening political polarisation. The online information environment surrounding this conflict is being flooded with fake narratives and videos, which incite violence and hate and promote propaganda-based ideologies.

Response of social media platforms

As online misinformation and violent content surrounding the war proliferate, social media companies face questions about content moderation and other policy shifts. Notably, Instagram, Facebook, and X (formerly Twitter) all have features that let users decide what content they want to view and limit potentially sensitive content in search results.

Experts say it is of paramount importance to establish control in this regard and define what is permissible online and what is not. This requires expertise to assess each situation and, most importantly, robust content moderation policies.

During wartime, people who are aggrieved or provoked are often targeted by online disinformation that blends ideological beliefs with conspiracy theories and hatred. This is not a new phenomenon: disinformation-spreading groups often emerge and become active during wars and emergencies, spreading propaganda-based ideologies and influencing society at large through misrepresented facts and planted stories. Social media has made it easier to post user-generated content without proper moderation. Fighting disinformation and misinformation is therefore a shared responsibility: tech companies, users, and governments must collectively define and follow mechanisms to counter it.

Digital Services Act (DSA)

The newly enacted EU law, the Digital Services Act (DSA), pushes larger online platforms to prevent posts containing illegal content and puts limits on targeted advertising. The DSA makes it possible to challenge illegal online content, imposes requirements to prevent misinformation and disinformation, and ensures more transparency over what users see on the platforms. Its rules cover everything from content moderation and user privacy to transparency in operations. As landmark EU legislation governing online platforms, the DSA subjects large tech platforms to content-related regulation aimed at ensuring a safer online environment overall.

Indian Scenario

The Indian government introduced the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, which were amended in 2023 to provide for the establishment of a "fact check unit" to identify false or misleading online content. The Digital Personal Data Protection Act, 2023, which aims to protect personal data, has also been enacted. The proposed Digital India Bill is expected to be tabled in Parliament and would replace the current Information Technology Act, 2000. The Bill can be seen as future-ready legislation to strengthen India’s current cybersecurity posture. It is expected to deal comprehensively with privacy, data protection, and growing cyber crimes in the evolving digital landscape, and with ensuring a safe digital environment. Other entities, including civil society organisations, are also actively engaged in fighting misinformation and spreading awareness of safe and responsible use of the Internet.

Conclusion:

The widespread disinformation and misinformation amid the Israel-Hamas war shows how user-generated content on social media can create an illusion of reality. Misleading posts are widespread on social media platforms, and the misuse of advanced AI technologies makes it even easier for bad actors to create synthetic media. At the same time, social media has connected us like never before. With billions of active users around the globe, it offers various conveniences and opportunities to individuals and businesses. Only certain aspects require collective attention to prevent its misuse. Social media platforms and regulatory authorities need to be vigilant and active in clearly defining and improving policies for content regulation and for safe and responsible use of social media, so that bad actors can be effectively curtailed. Users, too, have a responsibility to use social media responsibly. With the increasing penetration of social media and the internet, misinformation is rampant all across the world and remains a global issue that needs to be addressed by implementing strict policies and adopting best practices. Users are encouraged to flag and report misleading content on social media and to always verify it against authentic sources, thereby creating a safer Internet environment for everyone.

References:

- https://abcnews.go.com/US/experts-fear-hate-extremism-social-media-israel-hamas-war/story?id=104221215

- https://edition.cnn.com/2023/10/14/tech/social-media-misinformation-israel-hamas/index.html

- https://www.nytimes.com/2023/10/13/business/israel-hamas-misinformation-social-media-x.html

- https://www.africanews.com/2023/10/24/fact-check-misinformation-about-the-israel-hamas-war-is-flooding-social-media-here-are-the//

- https://www.theverge.com/23845672/eu-digital-services-act-explained