#FactCheck: Fake Viral Video Claims Vice Admiral AN Pramod Said India Would Complain to the US and President Trump If Pakistan Attacks Again

Executive Summary:

A viral video (archived link) circulating on social media claims that Vice Admiral AN Pramod said India would seek help from the United States and President Trump if Pakistan launched an attack, portraying India as dependent rather than self-reliant. Our research traced the extended footage to the Press Information Bureau’s official YouTube channel, where it was published on 11 May 2025. In the authentic video, the Vice Admiral makes no such remark and instead ends his statement with, “That’s all.” Further analysis with the Hive Moderation AI detection tool indicated that the viral clip had been digitally manipulated with AI-generated audio that misrepresents his actual words.

Claim:

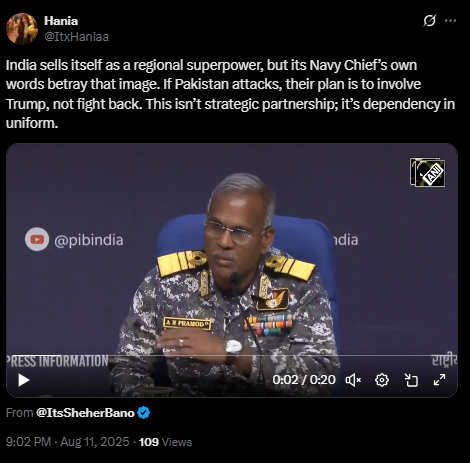

An X user posted the viral video with the caption:

“India sells itself as a regional superpower, but its Navy Chief’s own words betray that image. If Pakistan attacks, their plan is to involve Trump, not fight back. This isn’t strategic partnership; it’s dependency in uniform.”

In the video, the Vice Admiral is heard saying:

“We have worked out among three services, this time if Pakistan dares take any action, and Pakistan knows it, what we are going to do. We will complain against Pakistan to the United States of America and President Trump, like we did earlier in Operation Sindoor.”

Fact Check:

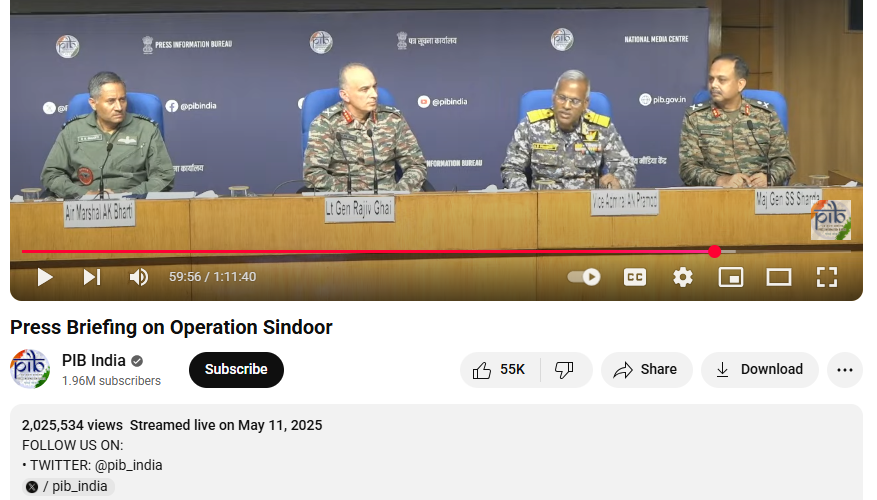

A reverse image search on key frames from the video led us to the full version on the official YouTube channel of the Press Information Bureau (PIB), published on 11 May 2025. At the 59:57 mark, the Vice Admiral can be heard saying:

“This time if Pakistan dares take any action, and Pakistan knows it, what we are going to do. That’s all.”

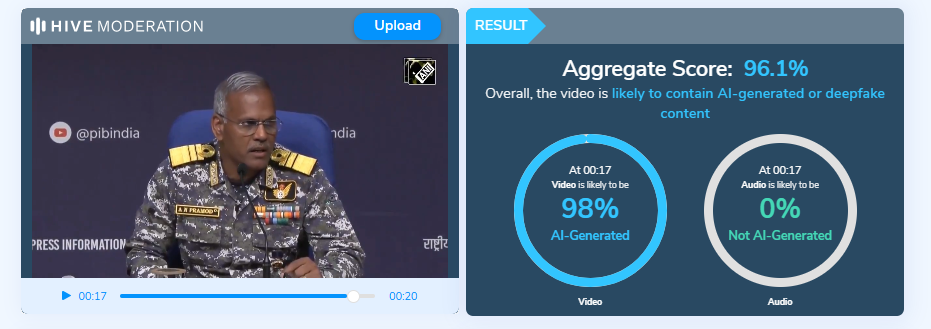

We then used the Hive Moderation tool to examine the authenticity of the circulating clip. The results indicated that the video had been artificially generated, with clear signs of AI manipulation. This suggests that the content is not genuine and was created to mislead viewers and spread misinformation.

Conclusion:

The viral video attributing remarks to Vice Admiral AN Pramod about India seeking U.S. and President Trump’s intervention against Pakistan is misleading. The extended speech, available on the Press Information Bureau’s official YouTube channel, contained no such statement. Instead of the alleged claim, the Vice Admiral concluded his comments by saying, “That’s all.” AI analysis using Hive Moderation further indicated that the viral clip had been artificially manipulated, with fabricated audio inserted to misrepresent his words. These findings confirm that the video is altered and does not reflect the Vice Admiral’s actual remarks.

Claim: A viral video shows Vice Admiral AN Pramod saying that if Pakistan attacks again, India will complain to the US and President Trump.

Claimed On: Social Media

Fact Check: False and Misleading

Related Blogs

Introduction

Lost your phone, or had it stolen? Sanchar Saathi, a portal recently launched by the government, can help you track and block it. Smartphones have become an essential part of daily life and hold much of our personal data, so losing one can be a distressing experience. The portal uses the Central Equipment Identity Register (CEIR) to help users trace a lost or stolen phone and block it across all telecom networks. Users should keep a copy of the FIR for their lost or stolen phone handy, as it is required for certain features of the portal, including tracking the device.

Preventing Data Leakage and Mobile Phone Theft

When your phone is lost or stolen, the worry goes beyond the device itself: your smartphone holds sensitive personal information such as bank account details, UPI IDs, and social media accounts like WhatsApp, which raises serious concerns about data leakage and misuse. The Sanchar Saathi portal addresses this problem by letting users block access to a lost or stolen device. This protects users against financial fraud, identity theft, and data leakage by ensuring that unauthorised parties cannot access or misuse important information.

How the Sanchar Saathi Portal Works

To file a complaint about a lost or stolen smartphone, users must provide its IMEI (International Mobile Equipment Identity) number. On the portal’s official website, https://sancharsaathi.gov.in/, users can open the “Citizen Centric Services” option on the homepage and then click “Block Your Lost/Stolen Mobile” to fill out the form. The form asks for the IMEI number, contact number, model of the smartphone, mobile purchase invoice, and the date, time, district, and state where the device was lost or stolen. Users must also keep a copy of the FIR handy and fill in personal details such as their name, email address, and residential address. Once the form is completed and submitted via the ‘Complete’ tab, access to the lost or stolen smartphone is blocked.
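Because the complaint is keyed to the IMEI, a mistyped number is an easy mistake to make. A standard 15-digit IMEI ends in a check digit computed with the Luhn algorithm, so many typos can be caught before the form is submitted. The Python sketch below is our own illustration and is not part of the Sanchar Saathi portal; the function name `is_valid_imei` is hypothetical, and passing the check only means the number is well-formed, not that it belongs to your device.

```python
def is_valid_imei(imei: str) -> bool:
    """Return True if `imei` has 15 digits and a correct Luhn check digit."""
    # Allow the spaces or hyphens people often type when copying an IMEI.
    digits = imei.replace(" ", "").replace("-", "")
    if len(digits) != 15 or not all(c in "0123456789" for c in digits):
        return False
    total = 0
    # Walk from the rightmost digit (the check digit); double every second digit
    # and subtract 9 whenever the doubled value is greater than 9.
    for position, ch in enumerate(reversed(digits)):
        value = int(ch)
        if position % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9
        total += value
    # The number is valid when the weighted sum is a multiple of 10.
    return total % 10 == 0


print(is_valid_imei("490154203237518"))  # True
print(is_valid_imei("490154203237519"))  # False: last digit mistyped
```

The check runs entirely offline, so no device or account data needs to leave the user’s computer to catch a typo.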

Enhancing Security with SIM Card Verification

Using this portal, users can view the SIM card numbers associated with their identity and block any unauthorised connections. This lets owners act immediately if a SIM card is being used or misused by someone else. Checking the active SIM cards registered under an individual’s name is an extra security feature of the portal, and this proactive approach helps users protect their personal information against abuse and identity theft.

Advantages of the Sanchar Saathi Portal

The Sanchar Saathi platform offers several benefits for reducing mobile phone theft and protecting personal data. It provides a simple, user-friendly way to file complaints, and its online complaint tracking keeps users informed of the status of their complaints, increasing transparency and accountability.

The portal allows users to block access to personal data on lost or stolen smartphones, reducing the risk of data leakage.

The portal’s SIM card verification feature adds an extra layer of security, enabling users to detect any unauthorised use of their identity. This empowers users to act immediately if they notice suspicious activity, preventing further damage to their personal data.

Conclusion

Our smartphones store large amounts of sensitive information, so it is crucial to protect them from unauthorised access, especially when a phone is lost or stolen. The Sanchar Saathi portal is a commendable step by the government: by offering a comprehensive solution to combat mobile phone theft and protect personal data, it contributes to a safer digital environment for smartphone users.

The portal lets users block access to a lost or stolen device and check the SIM cards registered in their name. These features empower users to take control of their data security and help prevent mobile phone theft and data leakage.

Executive Summary:

BrazenBamboo’s DEEPDATA malware represents a new wave of advanced cyber espionage tools. It exploits a zero-day vulnerability in Fortinet FortiClient to extract VPN credentials and other sensitive data, using fileless malware techniques and secure C2 communications. With its modular design, DEEPDATA targets browsers, messaging apps, and password stores, while leveraging reflective DLL injection and encrypted DNS to evade detection. Code shared with tools such as DEEPPOST and LightSpy points to a coordinated development effort that enhances its espionage capabilities. To mitigate such threats, organisations must enforce network segmentation, deploy advanced monitoring tools, patch vulnerabilities promptly, and implement robust endpoint protection. Vendors are urged to adopt security-by-design practices and incentivise vulnerability reporting, as vigilance and proactive planning are critical to countering this sophisticated threat landscape.

Introduction

The growing use of zero-day vulnerabilities by sophisticated threat actors underscores the need for more mature countermeasures. One such actor, BrazenBamboo, exploits a zero-day vulnerability in Fortinet FortiClient for Windows through its advanced malware framework, DEEPDATA. This research examines DEEPDATA’s technical details, the techniques used in its operations, and its broader implications.

Technical Findings

1. Vulnerability Exploitation Mechanism

The vulnerability in Fortinet’s FortiClient lies in its failure to securely handle sensitive information in memory. DEEPDATA capitalises on this flaw via a specialised plugin, which:

- Accesses the VPN client’s process memory.

- Extracts unencrypted VPN credentials from memory, bypassing typical security protections.

- Transfers credentials to a remote C2 server via encrypted communication channels.

2. Modular Architecture

DEEPDATA exhibits a highly modular design, with its core components comprising:

- Loader Module (data.dll): Decrypts and executes other payloads.

- Orchestrator Module (frame.dll): Manages the execution of multiple plugins.

- FortiClient Plugin: Specifically designed to target Fortinet’s VPN client.

Each plugin operates independently, allowing flexibility in attack strategies depending on the target system.

3. Command-and-Control (C2) Communication

DEEPDATA establishes secure channels to its C2 infrastructure using WebSocket and HTTPS protocols, enabling stealthy exfiltration of harvested data. Technical analysis of network traffic revealed:

- Dynamic IP switching for C2 servers to evade detection.

- Use of Domain Fronting, hiding C2 communication within legitimate HTTPS traffic.

- Time-based communication intervals to minimise anomalies in network behavior.
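Timer-driven traffic can nonetheless become a detection signal: connections that recur at almost exactly the same spacing are a classic sign of beaconing. The Python sketch below shows one simple defender-side heuristic, assuming you already have the timestamps (in seconds) of outbound connections from one host to one destination, for example from proxy or firewall logs. It scores regularity with the coefficient of variation (standard deviation divided by mean) of the gaps between connections. The function names and the `max_cv` and `min_events` thresholds are illustrative assumptions, not values published for DEEPDATA, and deliberate jitter by an attacker will raise the score.

```python
from statistics import mean, pstdev
from typing import List, Optional


def beacon_score(timestamps: List[float]) -> Optional[float]:
    """Coefficient of variation of the gaps between connections.

    Values near 0 mean evenly spaced (beacon-like) connections;
    larger values mean irregular, more human-looking traffic.
    """
    if len(timestamps) < 3:
        return None  # too few gaps to judge regularity
    ts = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    avg = mean(gaps)
    if avg == 0:
        return None  # every connection at the same instant
    return pstdev(gaps) / avg


def looks_like_beacon(
    timestamps: List[float], max_cv: float = 0.1, min_events: int = 6
) -> bool:
    """Flag a host/destination pair whose traffic is frequent and highly regular."""
    score = beacon_score(timestamps)
    return score is not None and len(timestamps) >= min_events and score <= max_cv


regular = [0, 59, 121, 180, 242, 299, 360]  # roughly every 60 s, small jitter
bursty = [0, 5, 70, 75, 400, 410, 900]      # irregular, user-driven pattern
print(looks_like_beacon(regular))  # True
print(looks_like_beacon(bursty))   # False
```

In practice this would run per host and destination pair over a sliding window, with hits reviewed alongside other evidence rather than blocked automatically.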

4. Advanced Credential Harvesting Techniques

Beyond VPN credentials, DEEPDATA is capable of:

- Dumping password stores from popular browsers, such as Chrome, Firefox, and Edge.

- Extracting application-level credentials from messaging apps like WhatsApp, Telegram, and Skype.

- Intercepting credentials stored in local databases used by apps like KeePass and Microsoft Outlook.

5. Persistence Mechanisms

To maintain long-term access, DEEPDATA employs sophisticated persistence techniques:

- Registry-based persistence: Modifies Windows registry keys to reload itself upon system reboot.

- DLL Hijacking: Substitutes legitimate DLLs with malicious ones to execute during normal application operations.

- Scheduled Tasks and Services: Configures scheduled tasks to periodically execute the malware, ensuring continuous operation even if detected and partially removed.
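On the defensive side, registry-based persistence can be hunted for by reviewing autorun entries. The Python sketch below reads the per-user `Run` key with the standard-library `winreg` module (Windows only) and applies two illustrative heuristics of our own: a command line that mentions the DLL names reported for DEEPDATA (`data.dll`, `frame.dll`), or one that loads a DLL from a user-writable folder. These rules and the function names are assumptions for illustration; legitimate software can trigger them, and real persistence may use other registry keys, services, or scheduled tasks.

```python
import sys
from typing import Dict, List, Tuple

# DLL names mentioned in the DEEPDATA analysis; a name match alone is only a lead.
IOC_DLL_NAMES = ("data.dll", "frame.dll")
# Illustrative user-writable locations where a loaded DLL deserves a closer look.
USER_WRITABLE_HINTS = ("\\appdata\\", "\\temp\\", "\\users\\public\\")
RUN_KEY = r"Software\Microsoft\Windows\CurrentVersion\Run"


def read_run_key() -> Dict[str, str]:
    """Return name -> command for the HKCU Run key; empty on non-Windows systems."""
    if sys.platform != "win32":
        return {}
    import winreg  # standard library, available on Windows only

    entries: Dict[str, str] = {}
    with winreg.OpenKey(winreg.HKEY_CURRENT_USER, RUN_KEY) as key:
        index = 0
        while True:
            try:
                name, value, _value_type = winreg.EnumValue(key, index)
            except OSError:  # raised once there are no more values
                break
            entries[name] = str(value)
            index += 1
    return entries


def flag_autoruns(entries: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (entry name, reason) for autorun commands matching the heuristics."""
    flagged = []
    for name, command in entries.items():
        cmd = command.lower()
        if any(dll in cmd for dll in IOC_DLL_NAMES):
            flagged.append((name, "references a reported DEEPDATA DLL name"))
        elif ".dll" in cmd and any(hint in cmd for hint in USER_WRITABLE_HINTS):
            flagged.append((name, "loads a DLL from a user-writable folder"))
    return flagged


if __name__ == "__main__":
    for entry, reason in flag_autoruns(read_run_key()):
        print(f"{entry}: {reason}")
```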

Additional Tools in BrazenBamboo’s Arsenal

1. DEEPPOST

A complementary tool used for data exfiltration, DEEPPOST facilitates the transfer of sensitive files, including system logs, captured credentials, and recorded user activities, to remote endpoints.

2. LightSpy Variants

- The Windows variant includes a lightweight installer that downloads orchestrators and plugins, expanding espionage capabilities across platforms.

- Shellcode-based execution ensures that LightSpy’s payload operates entirely in memory, minimising artifacts on the disk.

3. Cross-Platform Overlaps

BrazenBamboo’s shared codebase across DEEPDATA, DEEPPOST, and LightSpy points to a centralised development effort, possibly linked to a Digital Quartermaster framework. This shared ecosystem enhances their ability to operate efficiently across macOS, iOS, and Windows systems.

Notable Attack Techniques

1. Memory Injection and Data Extraction

Using Reflective DLL Injection, DEEPDATA injects itself into legitimate processes, avoiding detection by traditional antivirus solutions.

- Memory Scraping: Captures credentials and sensitive information in real-time.

- Volatile Data Extraction: Extracts transient data that only exists in memory during specific application states.

2. Fileless Malware Techniques

DEEPDATA leverages fileless infection methods, where its payload operates exclusively in memory, leaving minimal traces on the system. This complicates post-incident forensic investigations.

3. Network Layer Evasion

By using encrypted DNS queries and certificate pinning, DEEPDATA makes it much harder for network-level defenses such as intrusion detection systems (IDS) and firewalls to inspect or block its communications.

Recommendations

1. For Organisations

- Apply Network Segmentation: Isolate VPN servers from critical assets.

- Enhance Monitoring Tools: Deploy behavioral analysis tools that detect anomalous processes and memory scraping activities.

- Regularly Update and Patch Software: At the time of reporting, Fortinet had not yet patched this vulnerability, so organisations must remain vigilant and apply fixes as soon as they are released.

2. For Security Teams

- Harden Endpoint Protections: Implement tools like Memory Integrity Protection to prevent unauthorised memory access.

- Use Network Sandboxing: Monitor and analyse outgoing network traffic for unusual behaviors.

- Threat Hunting: Proactively search for indicators of compromise (IOCs) such as unauthorised DLLs (data.dll, frame.dll) or C2 communications over non-standard intervals.
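The file-based part of that hunt can be sketched in a few lines of Python. The sketch below assumes read access to the directories being searched; it walks a directory tree, reports any file named `data.dll` or `frame.dll` (matched case-insensitively, as Windows does), and records its SHA-256 hash so it can be compared against hashes from published threat reports. File names are weak indicators on their own, since attackers can rename payloads and legitimate programs may use the same names; the function names are hypothetical.

```python
import hashlib
import os
from typing import List, Tuple

# DLL names highlighted in the threat-hunting recommendation; hits are leads, not verdicts.
SUSPECT_NAMES = {"data.dll", "frame.dll"}


def sha256_of(path: str) -> str:
    """Hash a file in chunks so large files do not have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_suspect_dlls(root: str) -> List[Tuple[str, str]]:
    """Return sorted (path, sha256) pairs for files whose names match SUSPECT_NAMES."""
    hits = []
    # os.walk silently skips directories it cannot list (its default onerror=None).
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.lower() in SUSPECT_NAMES:
                full_path = os.path.join(dirpath, name)
                try:
                    hits.append((full_path, sha256_of(full_path)))
                except OSError:
                    continue  # locked or unreadable file: skip it
    return sorted(hits)
```

A hunter might run `find_suspect_dlls(r"C:\Users")` and check each returned hash against indicator lists before escalating.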

3. For Vendors

- Implement Security by Design: Adopt advanced memory protection mechanisms to prevent credential leakage.

- Bug Bounty Programs: Encourage researchers to report vulnerabilities, accelerating patch development.

Conclusion

DEEPDATA is a cyber espionage tool that represents the next generation of malware: more advanced and tuned for stealth, modularity and persistence. As BrazenBamboo continues to refine its tactics, organisations and vendors must stay vigilant and be ready to respond. Continuous patching, strong threat detection capabilities and a well-defined incident response plan are crucial to countering these attacks.


Introduction

The unprecedented rise of social media, challenges posed by regional languages, and the heavy use of messaging apps like WhatsApp have all contributed to an increase in misinformation in India. False stories spread quickly and can cause significant harm, from political propaganda to health-related mis/disinformation. Programs that teach responsible social media use and promote fact-checking are essential, but they do not always resonate with people. Traditional media literacy programs rely mostly on passive learning methods such as reading stories, attending lectures, and using fact-checking tools.

Gamification, the use of game-like features in non-game settings, could offer a new and engaging way to address this gap. It makes people active players rather than passive consumers of information. Research shows that interactive learning improves engagement, critical thinking, and memory. Turning fact-checking into a game lets people practise recognising fake news in a safe setting before they encounter it in real life. A study by Roozenbeek and van der Linden (2019) showed that playing misinformation games can significantly enhance people's capacity to recognise and avoid false information.

Several misinformation-related games have been successfully implemented worldwide:

- The Bad News Game – This browser-based game by Cambridge University lets players step into the shoes of a fake news creator, teaching them how misinformation is crafted and spread (Roozenbeek & van der Linden, 2019).

- Factitious – A quiz game where users swipe left or right to decide whether a news headline is real or fake (Guess et al., 2020).

- Go Viral! – A game designed to inoculate people against COVID-19 misinformation by simulating the tactics used by fake news peddlers (van der Linden et al., 2020).

For programs to effectively combat misinformation in India, they must consider factors such as the responsible use of smartphones, evolving language trends, and common misinformation patterns in the country. Here are some key aspects to keep in mind:

- Vernacular Languages

Games should be available in Hindi, Tamil, Bengali, Telugu, and other major languages, since rumours spread in these languages across different regions and cultural contexts. AI-powered voice interaction and translation could help bridge literacy gaps. Research shows that people are more likely to engage with and trust information in their native language (Pennycook & Rand, 2019).

- Games Based on WhatsApp

Since WhatsApp is a major hub for false information, interactive quizzes and chatbot-powered games could educate users directly within the app they use most. A game with a realistic WhatsApp-like interface, in which players must decide whether to ignore, fact-check, or forward viral messages, could be especially useful in India.

- Detecting False Information

In a mobile-friendly game, players could act as reporters or fact-checkers who must verify viral stories, using real-world tools such as reverse image search and reputable fact-checking websites. Research shows that interactive fake-news detection tasks make people more aware of misinformation over time (Lewandowsky et al., 2017).

- Reward-Based Participation

Rewards for completing misinformation challenges, such as badges, certificates, or even mobile data incentives, could increase participation. Partnerships with telecom companies could make this easier. Reward-based learning has made people more interested and motivated in digital literacy classes (Deterding et al., 2011).

- Universities and Schools

Educational institutions can help people spot false information by adding game-like elements to their lessons. Hamari et al. (2014) report that students are more likely to participate and retain what they learn when learning includes competitive and interactive elements. Schools and universities can use misinformation games and simulations in media studies classes to teach students how to check sources, spot bias, and recognise the psychological tricks that misinformation campaigns use.

What Artificial Intelligence Can Do for Gamification

Artificial intelligence can tailor learning experiences to each player in misinformation games. AI-powered misinformation detection bots could guide participants through scenarios matched to their learning level, keeping them consistently challenged. Recent advances in natural language processing (NLP) enable AI to identify nuanced misinformation patterns and adjust gameplay accordingly (Zellers et al., 2019). This could be especially helpful in India, where fake news spreads differently depending on language and region.
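Even without a trained model, the core idea of matching challenges to a player's level can be illustrated with a simple rule-based "staircase". This is a stand-in for AI-driven personalisation, not an implementation of it. In the hypothetical Python sketch below, a player moves up one difficulty level after two consecutive correct real-or-fake calls and drops one level after any mistake. The level range and the two-answer threshold are arbitrary assumptions; a real game could replace the update rule in `record` with a model's estimate of the player's skill.

```python
from dataclasses import dataclass


@dataclass
class DifficultyTracker:
    """Rule-based stand-in for adaptive difficulty in a misinformation game."""
    level: int = 1           # current difficulty level
    min_level: int = 1
    max_level: int = 5
    step_up_after: int = 2   # consecutive correct answers needed to move up
    streak: int = 0          # consecutive correct answers at the current level

    def record(self, correct: bool) -> int:
        """Update the level after one answer and return the new level."""
        if correct:
            self.streak += 1
            if self.streak >= self.step_up_after:
                self.level = min(self.level + 1, self.max_level)
                self.streak = 0
        else:
            # A mistake eases the challenge and resets the streak.
            self.level = max(self.level - 1, self.min_level)
            self.streak = 0
        return self.level


tracker = DifficultyTracker()
answers = [True, True, True, False, True, True]
print([tracker.record(a) for a in answers])  # [1, 2, 2, 1, 1, 2]
```

Requiring two correct answers to move up but only one mistake to move down keeps players from being pushed into harder messages by lucky guesses.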

Possible Opportunities

Augmented reality (AR) scavenger hunts, interactive misinformation events, and educational misinformation tournaments are all examples of games that could help fight misinformation. By making media literacy fun and engaging, India can help millions, especially young people, think critically and resist the spread of false information. The use of artificial intelligence (AI) in gamified interventions against misinformation is also a promising area for future study: AI-powered bots could simulate real-time misinformation scenarios and give instant feedback, helping learners learn more effectively.

Problems and Moral Consequences

While gamification is a promising way to fight false information, it also raises some issues worth considering:

- Ethical Concerns: Games that try to imitate how fake news spreads must ensure players do not learn how to spread false information by accident.

- Scalability: Although misinformation games exist worldwide, developing and scaling localised versions for India's varied languages and cultural contexts presents significant challenges.

- Assessing Impact: Rigorous research methods are needed to evaluate how well gamified interventions change misinformation-related behaviours, taking cultural and socio-economic contexts into account.

Conclusion

A gamified approach can serve as an effective tool in India's fight against misinformation. By integrating game elements into digital literacy programs, it can encourage critical thinking and help people recognize misinformation more effectively. The goal is to scale these efforts, collaborate with educators, and leverage India's rapidly evolving technology to make fact-checking a regular practice rather than an occasional concern.

As technology and misinformation evolve, so must the strategies to counter them. A coordinated and multifaceted approach, one that involves active participation from netizens, strict platform guidelines, fact-checking initiatives, and support from expert organizations that proactively prebunk and debunk misinformation can be a strong way forward.

References

- Deterding, S., Dixon, D., Khaled, R., & Nacke, L. (2011). From game design elements to gamefulness: defining "gamification". Proceedings of the 15th International Academic MindTrek Conference.

- Guess, A., Nagler, J., & Tucker, J. (2020). Less than you think: Prevalence and predictors of fake news dissemination on Facebook. Science Advances.

- Hamari, J., Koivisto, J., & Sarsa, H. (2014). Does gamification work?—A literature review of empirical studies on gamification. Proceedings of the 47th Hawaii International Conference on System Sciences.

- Lewandowsky, S., Ecker, U. K., & Cook, J. (2017). Beyond misinformation: Understanding and coping with the “post-truth” era. Journal of Applied Research in Memory and Cognition.

- Pennycook, G., & Rand, D. G. (2019). Fighting misinformation on social media using “accuracy prompts”. Nature Human Behaviour.

- Roozenbeek, J., & van der Linden, S. (2019). The fake news game: actively inoculating against the risk of misinformation. Journal of Risk Research.

- van der Linden, S., Roozenbeek, J., & Compton, J. (2020). Inoculating against fake news about COVID-19. Frontiers in Psychology.

- Zellers, R., Holtzman, A., Rashkin, H., Bisk, Y., Farhadi, A., Roesner, F., & Choi, Y. (2019). Defending against neural fake news. Advances in Neural Information Processing Systems.