# Fact Check: Viral Footage from Bangladesh Falsely Portrayed as Immigrant March for Violence in Assam

Executive Summary:

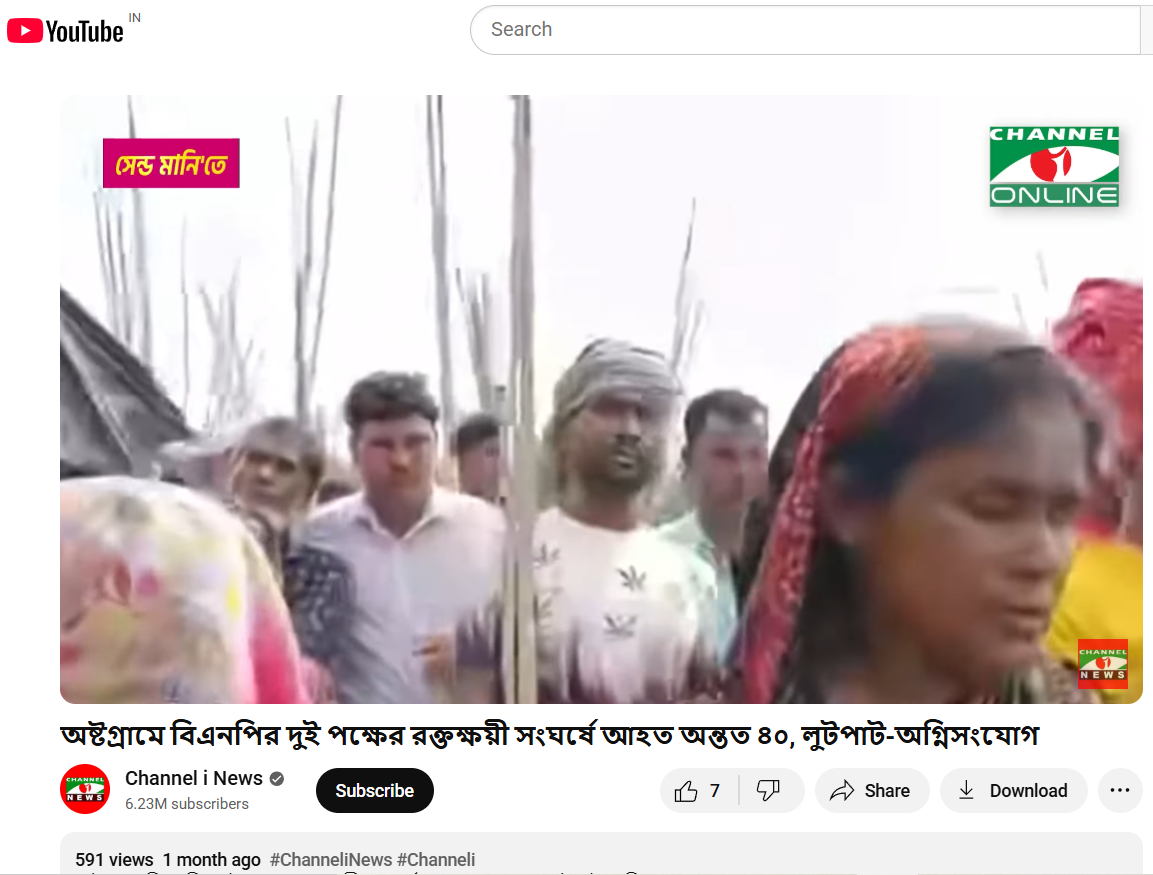

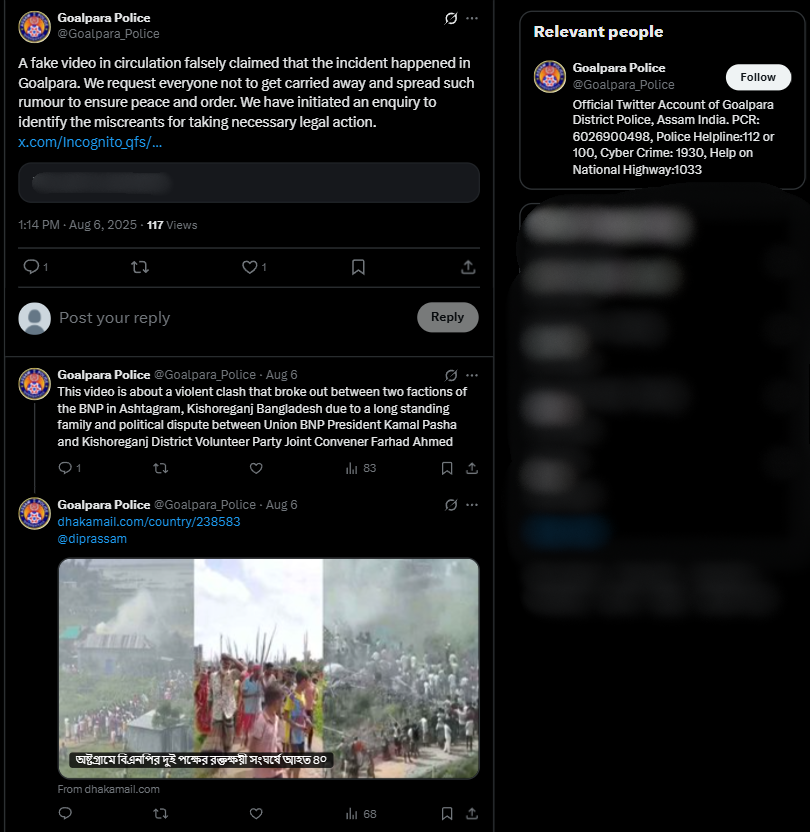

While investigating a viral social media video, we carried out a comprehensive fact check using reverse image search. The video circulated with the claim that it shows illegal Bangladeshi immigrants in Assam's Goalpara district carrying homemade spears and attacking police and/or government officials. Our findings establish that this claim is false. The video was filmed in Kishoreganj district, Bangladesh, on July 1, 2025, during a clash between two rival factions of the Bangladesh Nationalist Party (BNP). The footage has been deliberately misrepresented and recast in an Assam context to spread false information.

Claim:

The viral video shows illegal Bangladeshi immigrants armed with spears marching in Goalpara, Assam, with the intention of attacking police or officials.

Fact Check:

To establish whether the claim was valid, we ran a reverse image search on key frames from the video. This led us to a number of news articles and social media posts from Bangladeshi sources. These reports confirmed that the events took place in Ashtagram, Kishoreganj district, Bangladesh, during a violent confrontation between factions of the Bangladesh Nationalist Party (BNP) on July 1, 2025, which left about 40 people injured.
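Reverse image search engines do not publish exactly how they match frames, so what follows only illustrates the general idea. A common building block for spotting near-duplicate images is perceptual hashing. The minimal Python sketch below implements average hashing (aHash). It assumes each key frame has already been converted to grayscale and downscaled to an 8x8 grid. The function names and the distance threshold are hypothetical choices and are not part of the workflow described above.

```python
from typing import List

# Hypothetical input format: an 8x8 grid of grayscale values (0-255),
# assumed to come from an earlier frame-extraction and downscaling step.
Grid = List[List[int]]


def average_hash(pixels: Grid) -> int:
    """Average hash: one bit per pixel, set when the pixel is at least the mean brightness."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p >= mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def likely_same_frame(a: Grid, b: Grid, max_distance: int = 5) -> bool:
    """Treat two frames as near-duplicates if their hashes differ in few bits.

    The default threshold of 5 bits is an illustrative assumption.
    """
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance


# Usage: a uniformly brightened copy of a frame hashes identically,
# while an inverted (very different) frame does not.
frame = [[(r * 8 + c) * 3 for c in range(8)] for r in range(8)]
brighter = [[p + 10 for p in row] for row in frame]
inverted = [[255 - p for p in row] for row in frame]
print(likely_same_frame(frame, brighter))  # True
print(likely_same_frame(frame, inverted))  # False
```

Each bit records only whether a pixel is brighter than the frame's average. Uniform brightness changes and mild re-compression therefore tend to leave the hash largely unchanged, and that robustness is what allows re-uploaded footage to be matched to earlier posts.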

Local media also covered the incident. In particular, Channel i News published a full account and showed images matching the viral video. The individuals seen in the video were wielding makeshift spears during a political clash, not carrying out a cross-border attack. The Assam Police also issued an official response on X (formerly Twitter) denying the claim and stating that no such incident had occurred in Goalpara or in any other district of Assam.

Conclusion:

Based on our research, we conclude that the viral video does not show illegal Bangladeshi immigrants in Assam. It depicts a political clash in Kishoreganj, Bangladesh, on July 1, 2025. The claim attached to the video is completely untrue and is intended to mislead the public about where the incident took place and what it shows.

Claim: Video shows illegal migrants with spears moving in groups to assault police!

Claimed On: Social Media

Fact Check: False and Misleading

Related Blogs

Executive Summary:

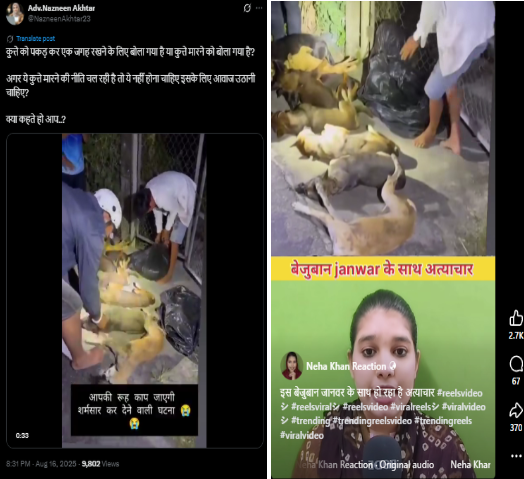

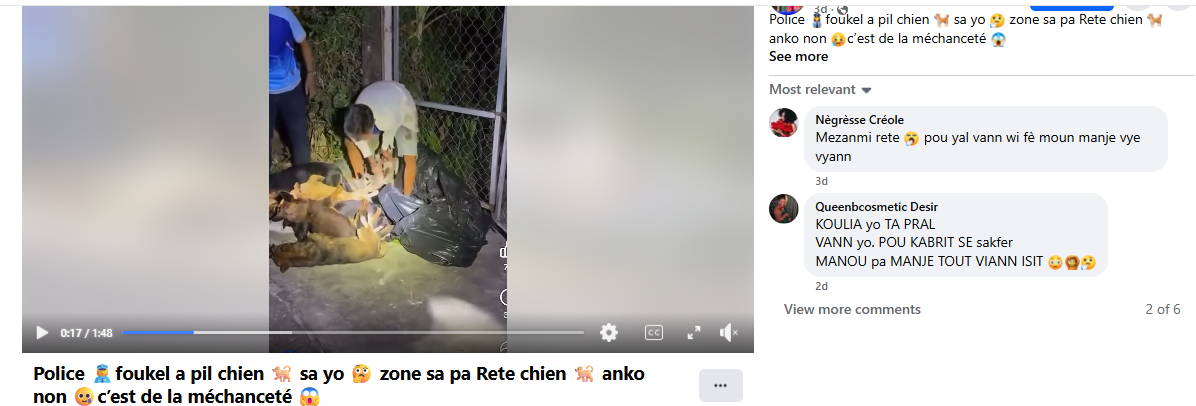

A viral claim alleges that, following the Supreme Court of India's August 11, 2025 order to relocate stray dogs, authorities in Delhi NCR have begun mass culling. Verification shows the claim is false and misleading. A reverse image search traced the viral video to older posts from outside India, probably linked to Haiti or Vietnam, as indicated by the use of Haitian Creole and Vietnamese respectively. While the exact location cannot be independently verified, the video is confirmed not to be from Delhi NCR and has no connection to the Supreme Court's directive. The claim therefore lacks authenticity and is misleading.

Claim:

Several claims have circulated since the Supreme Court of India ordered the relocation of stray dogs to shelters on 11 August 2025. The primary claim suggests that authorities, acting on the order, have begun mass killing or culling of stray dogs, particularly in Delhi and the National Capital Region. This narrative intensified after several videos purporting to show dead or mistreated dogs, allegedly linked to the Supreme Court's directive, began circulating online.

Fact Check:

After conducting a reverse image search using a keyframe from the viral video, we found similar videos circulating on Facebook. Upon analyzing the language used in one of the posts, it appears to be Haitian Creole (Kreyòl Ayisyen), which is primarily spoken in Haiti. Another similar video was also found on Facebook, where the language used is Vietnamese, suggesting that the post associates the incident with Vietnam.

However, it is important to note that while these posts point towards different locations, the exact origin of the video cannot be independently verified. What can be established with certainty is that the video is not from Delhi NCR, India, as is being claimed. Therefore, the viral claim is misleading and lacks authenticity.

Conclusion:

The viral claim linking the Supreme Court's August 11, 2025 order on stray dogs to mass culling in Delhi NCR is false and misleading. Reverse image search traced the video to posts from outside India, with captions in Haitian Creole and Vietnamese. While the exact source remains unverified, the video is clearly not from Delhi NCR and has no relation to the Court's directive. Hence, the claim lacks credibility and authenticity.

Claim: Delhi authorities are culling dogs following the Supreme Court's 11 August 2025 directive on stray dogs

Claimed On: Social Media

Fact Check: False and Misleading

Executive Summary:

QakBot is a banking trojan that can steal personal data, banking passwords, and session data from a user's computer. It has undergone substantial modifications since it was first discovered in 2009.

The C2 (command-and-control) server is essentially the brain behind Qakbot's operations: it issues commands to infected devices and receives stolen data. To accomplish its main goals, Qakbot uses malicious PE DLL files (communication files) to interact with the server. Sensitive data, such as passwords and personal information, is taken from victims and sent to the C2 server. Referrer files, such as phishing documents or malware droppers, initiate the main line of communication between Qakbot and the C2 server. The server's WHOIS data contains registration details that help identify its ownership or place of origin.

This report specifically focuses on the C2 server infrastructure located in India, shedding light on its architecture, communication patterns, and threat landscape.

Introduction:

QakBot, also known as Pinkslipbot, QuakBot, and QBot, can steal personal data, banking passwords, and session data from a user's computer. It is especially dangerous because it spreads quickly to other machines on a network, behaving like a worm. It uses techniques such as web injection to eavesdrop on customers' online banking sessions. Qakbot belongs to a class of malware with robust persistence techniques, reportedly among the most advanced, which let it keep access to compromised computers for extended periods.

Technical Analysis:

The following IP addresses have been confirmed as active C2 servers supporting Qbot malware activity:

Sample IPs

- 123.201.40[.]112

- 117.198.151[.]182

- 103.250.38[.]115

- 49.33.237[.]65

- 202.134.178[.]157

- 124.123.42[.]115

- 115.96.64[.]9

- 123.201.44[.]86

- 117.202.161[.]73

- 136.232.254[.]46
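The indicators above are "defanged" with `[.]` so that they cannot be clicked by accident. Before they can be loaded into a firewall or IDS, the brackets have to be removed. The minimal Python sketch below does this and validates each address with the standard `ipaddress` module. It assumes the indicators are exactly the ten listed above and that only the `[.]` convention is used. The function names are hypothetical.

```python
import ipaddress
from typing import Iterable, List

# The ten defanged C2 indicators listed above.
DEFANGED_IOCS = [
    "123.201.40[.]112",
    "117.198.151[.]182",
    "103.250.38[.]115",
    "49.33.237[.]65",
    "202.134.178[.]157",
    "124.123.42[.]115",
    "115.96.64[.]9",
    "123.201.44[.]86",
    "117.202.161[.]73",
    "136.232.254[.]46",
]


def refang(indicator: str) -> str:
    """Undo the '[.]' defanging convention, e.g. '1.2.3[.]4' -> '1.2.3.4'."""
    return indicator.strip().replace("[.]", ".")


def build_blocklist(indicators: Iterable[str]) -> List[ipaddress.IPv4Address]:
    """Refang, validate and de-duplicate IPv4 indicators, sorted numerically.

    ipaddress.IPv4Address raises ValueError for malformed entries,
    so a typo in the feed fails loudly instead of being silently skipped.
    """
    return sorted({ipaddress.IPv4Address(refang(item)) for item in indicators})


# Usage: print one address per line, ready to paste into a blocklist.
for address in build_blocklist(DEFANGED_IOCS):
    print(address)
```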

These servers were active during the 14 days before this report was written (in November) and are being used to carry out malicious activity worldwide.

URL/IP: 123.201.40[.]112

- inetnum: 123.201.32[.]0 - 123.201.47[.]255

- netname: YOUTELE

- descr: YOU Telecom India Pvt Ltd

- country: IN

- admin-c: HA348-AP

- tech-c: NI23-AP

- status: ASSIGNED NON-PORTABLE

- mnt-by: MAINT-IN-YOU

- last-modified: 2022-08-16T06:43:19Z

- mnt-irt: IRT-IN-YOU

- source: APNIC

- irt: IRT-IN-YOU

- address: YOU Broadband India Limited

- address: 2nd Floor, Millennium Arcade

- address: Opp. Samarth Park, Adajan-Hazira Road

- address: Surat-395009,Gujarat

- address: India

- e-mail: abuse@youbroadband.co.in

- abuse-mailbox: abuse@youbroadband.co.in

- admin-c: HA348-AP

- tech-c: NI23-AP

- auth: # Filtered

- mnt-by: MAINT-IN-YOU

- last-modified: 2022-08-08T10:30:51Z

- source: APNIC

- person: Harindra Akbari

- nic-hdl: HA348-AP

- e-mail: harindra.akbari@youbroadband.co.in

- address: YOU Broadband India Limited

- address: 2nd Floor, Millennium Arcade

- address: Opp. Samarth Park, Adajan-Hazira Road

- address: Surat-395009,Gujarat

- address: India

- phone: +91-261-7113400

- fax-no: +91-261-2789501

- country: IN

- mnt-by: MAINT-IN-YOU

- last-modified: 2022-08-10T11:01:47Z

- source: APNIC

- person: NOC IQARA

- nic-hdl: NI23-AP

- e-mail: network@youbroadband.co.in

- address: YOU Broadband India Limited

- address: 2nd Floor, Millennium Arcade

- address: Opp. Samarth Park, Adajan-Hazira Road

- address: Surat-395009,Gujarat

- address: India

- phone: +91-261-7113400

- fax-no: +91-261-2789501

- country: IN

- mnt-by: MAINT-IN-YOU

- last-modified: 2022-08-08T10:18:09Z

- source: APNIC

- route: 123.201.40.0/24

- descr: YOU Broadband & Cable India Ltd.

- origin: AS18207

- mnt-lower: MAINT-IN-YOU

- mnt-routes: MAINT-IN-YOU

- mnt-by: MAINT-IN-YOU

- last-modified: 2012-01-25T11:25:55Z

- source: APNIC
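The `inetnum` and `route` fields can also be checked programmatically. The sketch below uses Python's standard `ipaddress` module with values copied from the record above; the function names are hypothetical. It confirms that 123.201.40.112 lies inside both the allocated block and the announced /24 route, and shows that the allocated start-to-end range corresponds to a single /20.

```python
import ipaddress
from typing import List

# Values copied from the APNIC WHOIS record above.
INETNUM_START = ipaddress.IPv4Address("123.201.32.0")
INETNUM_END = ipaddress.IPv4Address("123.201.47.255")
ROUTE = ipaddress.IPv4Network("123.201.40.0/24")  # announced by origin AS18207


def in_inetnum(ip: str) -> bool:
    """True if the address falls inside the inetnum allocation."""
    return INETNUM_START <= ipaddress.IPv4Address(ip) <= INETNUM_END


def in_route(ip: str) -> bool:
    """True if the address falls inside the announced route prefix."""
    return ipaddress.IPv4Address(ip) in ROUTE


def inetnum_as_cidrs() -> List[ipaddress.IPv4Network]:
    """Express the inetnum start-end range as CIDR blocks."""
    return list(ipaddress.summarize_address_range(INETNUM_START, INETNUM_END))


print(inetnum_as_cidrs())  # [IPv4Network('123.201.32.0/20')]
print(in_inetnum("123.201.40.112"), in_route("123.201.40.112"))  # True True
print(in_inetnum("123.201.44.86"), in_route("123.201.44.86"))    # True False
```

The last line shows that 123.201.44[.]86, another address on the list above, sits in the same YOUTELE inetnum allocation. Its own route object would need a separate WHOIS lookup.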

A GET request for the requested URL path was sent to IP address 123.201.40[.]112 on port 80. The request was started by the process "C:\PROGRAM FILES\GOOGLE\CHROME\APPLICATION\CHROME.EXE", and its response status was "NOT RESPONDED".

GET requests are an ordinary way for programs to retrieve data from a server, so the request itself is not inherently suspicious. It was made by Google Chrome, a legitimate and widely used web browser, asking the server at 123.201.40[.]112 for its data and other resources.

However, malware also uses GET requests to retrieve further commands from, or send data back to, its command-and-control servers. In this instance, the request may have been made on the malware's behalf to a known C2 IP address on a known port. Because the server did not reply, the "NOT RESPONDED" status may indicate that the activity was carried out with malicious intent.
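The reasoning above combines several weak signals rather than relying on any single one. Below is a hypothetical sketch of that triage in Python. The `HttpEvent` record is a simplified stand-in and does not reproduce ANY.RUN's actual report format. Each signal on its own is ambiguous (browsers legitimately make GET requests), which is why the function returns a list of reasons instead of a verdict.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Set


@dataclass
class HttpEvent:
    """Hypothetical, simplified HTTP request entry from a sandbox report."""
    process: str
    method: str
    host: str
    port: int
    status: str


def is_ip_literal(host: str) -> bool:
    """True when the request targets a bare IP address rather than a domain name."""
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def triage(event: HttpEvent, known_c2: Set[str]) -> List[str]:
    """Collect the suspicious signals present in one request."""
    reasons = []
    if event.method.upper() == "GET" and is_ip_literal(event.host):
        reasons.append("GET request sent directly to an IP address")
    if event.host in known_c2:
        reasons.append("destination is a listed C2 server")
    if event.status.upper() == "NOT RESPONDED":
        reasons.append("server did not respond")
    return reasons


# Usage with the request described above (port 80, no response).
event = HttpEvent(
    process=r"C:\PROGRAM FILES\GOOGLE\CHROME\APPLICATION\CHROME.EXE",
    method="GET",
    host="123.201.40.112",
    port=80,
    status="NOT RESPONDED",
)
for reason in triage(event, {"123.201.40.112"}):
    print(reason)
```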

This graph illustrates how the Qakbot malware operates and interacts with its C2 server at IP address 123.201.40[.]112, located in India.

Impact

Qbot is typically distributed through compromised websites, malicious email attachments, and phishing campaigns. Its main impacts are:

- Credential theft: It targets private user information, including corporate logins and banking passwords.

- Ransomware deployment: Qbot acts as a precursor, delivering payloads such as ProLock and Egregor ransomware.

- Network vulnerability: Within corporate networks, compromised systems act as gateways for further lateral movement.

Proposed Recommendations for Mitigation

- Immediate action: Add the discovered IP addresses to intrusion detection/prevention systems and firewalls to block all incoming and outgoing traffic to them.

- Network monitoring: Review network logs for any attempts to contact these IPs.

- Email security: Enable anti-phishing tools.

- Endpoint protection: Update antivirus definitions to detect and stop Qbot infections, and install endpoint detection and response (EDR) tools.

- Patch management: Update all operating systems and software regularly to reduce the vulnerabilities that Qbot exploits.

- Incident response: Immediately isolate compromised computers.

- Awareness: Share this information so that the IP addresses of active C2 servers supporting Qbot activity can be blocked.
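The network monitoring step can be sketched as a short Python script. The sketch assumes plain-text firewall or proxy logs in which addresses appear as dotted IPv4 strings. The log lines in the usage example are invented for illustration (203.0.113.10 is a documentation address), and the function names are hypothetical.

```python
import ipaddress
import re
from typing import Iterable, Iterator, Set, Tuple

# Loose IPv4 pattern; candidates are validated with ipaddress afterwards.
IPV4_CANDIDATE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def load_iocs(defanged: Iterable[str]) -> Set[str]:
    """Refang '[.]' indicators and normalise them to canonical strings."""
    return {str(ipaddress.IPv4Address(i.strip().replace("[.]", "."))) for i in defanged}


def scan_log(lines: Iterable[str], iocs: Set[str]) -> Iterator[Tuple[int, str, str]]:
    """Yield (line number, matched IP, log line) for every IOC hit."""
    for lineno, line in enumerate(lines, start=1):
        for candidate in IPV4_CANDIDATE.findall(line):
            try:
                ip = str(ipaddress.IPv4Address(candidate))
            except ValueError:
                continue  # not a valid IPv4 address, e.g. 999.1.1.1
            if ip in iocs:
                yield lineno, ip, line.rstrip()


# Usage with two invented log lines; only the first contacts a listed C2 IP.
iocs = load_iocs(["123.201.40[.]112", "136.232.254[.]46"])
log = [
    "2024-11-02 10:00:01 ALLOW TCP 10.0.0.5:51544 -> 123.201.40.112:80",
    "2024-11-02 10:00:02 ALLOW TCP 10.0.0.5:51545 -> 203.0.113.10:443",
]
for hit in scan_log(log, iocs):
    print(hit)
```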

Conclusion:

The discovery of these C2 servers highlights the growing threat that Indian networks face. To protect their infrastructure from further abuse, organizations are urged to act quickly and put the precautions above into place.

Reference:

- Threat Intelligence - ANY.RUN

- https://www.virustotal.com/gui

- https://www.virustotal.com/gui/ip-address/123.201.40.112/relations

Executive Summary:

In August 2024, Microsoft rolled out a major set of security updates that fixed 90 vulnerabilities in its operating systems and Office suite. Ten of these were zero-days, six of which had already been exploited in real-world attacks. This blog first outlines these vulnerabilities and then offers a broader analysis of today's cybersecurity threats. It aims to explain to technical and non-technical readers alike what these updates address, the threat the exploits pose, and the measures that can prevent such attacks.

1. Introduction

As technology develops and more activity moves online, cybersecurity has become a pressing problem for individuals and organisations alike. Cyber threats keep evolving, so safeguarding an organisation's digital assets requires a proactive stance. This report focuses on the 90 security weaknesses that Microsoft fixed in August 2024, including 10 zero-days, six of which were being actively exploited. Together they pose a serious risk, so understanding them is important for protecting digital assets.

2. Overview of Microsoft’s August 2024 Security Updates

Microsoft's August 2024 security update addressed 90 vulnerabilities in Windows, Office, and other widely used programs and applications. It was released under Patch Tuesday, Microsoft's regular monthly release of security updates on the second Tuesday of each month.

- Critical flaws: Seven of the 90 vulnerabilities were rated Critical, meaning attackers could leverage them to compromise targeted systems or bring operations to a halt.

- Zero-day exploits: A zero-day is a vulnerability that is publicly disclosed or actively exploited before the vendor has released a fix. The August update addressed 10 zero-days, underlining that Microsoft products and their ecosystems remain at risk.

- Broader impact: These vulnerabilities are not confined to Microsoft products. Other vendors, including Adobe, Cisco, and Google, also released security advisories fixing a variety of issues, which shows how interconnected today's security landscape is.

3. Detailed Analysis of Key Vulnerabilities

This section provides an in-depth analysis of some of the most critical vulnerabilities patched in August 2024. Each vulnerability is explained in layman’s terms to ensure accessibility for all readers.
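Each heading below gives the vulnerability's CVSS base score. The CVSS v3.1 specification maps base scores to qualitative ratings: 0.0 is None, 0.1 to 3.9 Low, 4.0 to 6.9 Medium, 7.0 to 8.9 High, and 9.0 to 10.0 Critical. The short Python sketch below applies that mapping to the five CVEs discussed in this section. Microsoft's own Critical and Important labels come from its separate severity rating system, so they need not match the CVSS rating.

```python
from typing import Dict, List, Tuple

# CVSS scores as listed in this section.
AUGUST_2024_CVES: Dict[str, float] = {
    "CVE-2024-38189": 8.8,
    "CVE-2024-38178": 7.5,
    "CVE-2024-38193": 7.8,
    "CVE-2024-38106": 7.0,
    "CVE-2024-38213": 6.5,
}


def cvss_v3_severity(score: float) -> str:
    """Map a CVSS v3.x base score (one decimal place) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def ranked(cves: Dict[str, float]) -> List[Tuple[str, float, str]]:
    """Sort CVEs from highest to lowest score, attaching the rating."""
    return [
        (cve, score, cvss_v3_severity(score))
        for cve, score in sorted(cves.items(), key=lambda kv: kv[1], reverse=True)
    ]


for cve, score, rating in ranked(AUGUST_2024_CVES):
    print(f"{cve}  {score:>4}  {rating}")
```

On this scale, four of the five vulnerabilities rate High and CVE-2024-38213 rates Medium.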

3.1 CVE-2024-38189: Microsoft Project Remote Code Execution Vulnerability (CVSS score: 8.8)

The flaw affects Microsoft Project, a popular project management application. An attacker can craft a malicious file and entice a user into opening it, which executes the attacker's code on the affected system. This could give the attacker full control of the user's system.

Explanation for Non-Technical Readers: Imagine you receive a file that appears to be an ordinary document. When you open it, it secretly installs a harmful program on your computer without your noticing. That is what this vulnerability could allow, which is why it is so dangerous.

3.2 CVE-2024-38178: Windows Scripting Engine Memory Corruption Vulnerability (CVSS score: 7.5)

This flaw lies in the Windows Scripting Engine, an important component that lets browsers and applications run scripts. The weakness can corrupt memory, allowing an attacker to perform remote code execution that may affect the entire system.

Explanation for Non-Technical Readers: Imagine your computer's memory as a library. This vulnerability disrupts how the library is organised, so an intruder can slip in malicious books (programs) that your computer then reads (executes), causing havoc.

3.3 CVE-2024-38193: WinSock Elevation of Privilege Vulnerability (CVSS score: 7.8)

This flaw is in the Windows Ancillary Function Driver for WinSock, an essential component that handles network socket communication. It lets an attacker who has already gained access to a system elevate their privileges on it, giving them access to higher-level functions and data.

Explanation for Non-Technical Readers: This flaw is like an intruder who is already inside your house getting hold of the key to the master bedroom. They can now take valuables that were locked away and accessible only to you. In the same way, it lets an attacker cause more damage once inside your computer.

3.4 CVE-2024-38106: Windows Kernel Elevation of Privilege Vulnerability (CVSS score: 7.0)

This vulnerability targets the Windows kernel, the core of the operating system that controls and oversees the computer's components. An attacker who exploits it can gain high-level access to, and control of, the system.

Explanation for Non-Technical Readers: The kernel can be compared to the brain of your computer. Whoever controls the brain controls everything else, which makes this a severe weakness.

3.5 CVE-2024-38213: Windows Mark of the Web Security Feature Bypass Vulnerability (CVSS score: 6.5)

This vulnerability lets attackers bypass Windows SmartScreen, the component that protects users from opening unsafe files. Attackers can exploit it to trick users into opening malicious files without the usual warning.

Explanation for Non-Technical Readers: Normally, before you open a file downloaded from the internet, your computer warns you that it may be harmful. This flaw makes your computer treat the dangerous file as safe, so no warning appears.
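The "mark" in Mark of the Web is concrete metadata. On NTFS volumes, Windows records a downloaded file's origin in an alternate data stream named Zone.Identifier, where ZoneId=3 denotes the Internet zone. The Python sketch below parses that stream. It only reads the metadata and does not detect or reproduce CVE-2024-38213. The function names are hypothetical, the text encoding is an assumption, and reading the stream only works on Windows with NTFS.

```python
import configparser
from typing import Optional

# Standard Windows security zone numbers used in the ZoneId field.
ZONE_NAMES = {
    0: "Local machine",
    1: "Local intranet",
    2: "Trusted sites",
    3: "Internet",
    4: "Restricted sites",
}


def parse_zone_identifier(text: str) -> Optional[int]:
    """Return the ZoneId from Zone.Identifier content, or None if absent."""
    # interpolation=None so '%' characters in stored URLs are not treated as variables
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    if not parser.has_option("ZoneTransfer", "ZoneId"):
        return None
    return parser.getint("ZoneTransfer", "ZoneId")


def read_mark_of_the_web(path: str) -> Optional[int]:
    """Read a file's Zone.Identifier stream (Windows/NTFS only).

    Returns None when the stream is missing or unreadable.
    The UTF-8 (with optional BOM) decoding is an assumption.
    """
    try:
        with open(path + ":Zone.Identifier", encoding="utf-8-sig", errors="replace") as stream:
            return parse_zone_identifier(stream.read())
    except (OSError, configparser.Error):
        return None


sample = "[ZoneTransfer]\nZoneId=3\nHostUrl=https://example.com/report.docx\n"
zone = parse_zone_identifier(sample)
print(zone, ZONE_NAMES.get(zone, "Unknown"))  # 3 Internet
```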

4. Implications of the Vulnerabilities

These vulnerabilities, especially the zero-day exploits, have significant implications for all users.

- Data breaches: These weaknesses can be exploited to expose data, causing leaks that put personal and corporate information and assets at risk.

- System compromise: Attackers could fully compromise the affected systems, allowing them to install malware, steal data, or shut down programs.

- Financial loss: Organisations that do not patch these vulnerabilities promptly may suffer significant losses from system downtime, the cost of remediating breached systems, and legal repercussions.

- Reputation damage: Security breaches and compromised IT systems can erode customers' and partners' confidence in an organisation's ability to protect their information, harming its reputation and market position.

5. Recommendations for Mitigating Risks

These weaknesses pose a high threat, so the associated risks should be addressed immediately. The following recommendations apply to both technical and non-technical users.

5.1 Regular Software Updates

Ensure that all software, particularly operating systems and Microsoft applications, is kept up to date. Every system should receive Microsoft's updates, and applying each Patch Tuesday release promptly is crucial.

For Non-Technical Users: Whenever possible, accept updates when your computer or smartphone prompts you. These updates fix security problems and keep your devices secure.

5.2 Awareness of Phishing Attacks

Many of these risks are realised through phishing. People should be taught to recognise suspicious emails and to avoid risky actions such as clicking their links or opening their attachments.

For Non-Technical Users: Be cautious with emails from unknown senders, and do not follow links or download files they ask you to. If an email looks like spam, do not click anything in it.

5.3 Security Software

Strong, reliable antivirus and anti-malware software can help detect and block attacks that attempt to exploit these vulnerabilities.

For Non-Technical Users: Install a reputable antivirus program and keep it updated. It acts like a security guard for your computer, keeping harmful programs out.

5.4 Introduce Multi-Factor Authentication (MFA)

MFA requires a second authentication factor before an account can be accessed. For instance, the user may be asked to enter a code sent by text message or generated by an authentication app.

For Non-Technical Users: Turn on two-factor authentication for your accounts. It is like adding an extra lock to your door: a burglar who gets past the first lock still cannot get in.
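Authentication apps usually generate time-based one-time passwords (TOTP, RFC 6238), which build on the HOTP algorithm from RFC 4226. The standard-library Python sketch below shows the idea under the common defaults of HMAC-SHA1, 30-second time steps, and six digits. The function names are hypothetical, and production systems should rely on a vetted library and protected secret storage.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over the counter, then dynamic truncation."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP with the counter derived from Unix time."""
    now = time.time() if for_time is None else for_time
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, for_time: Optional[float] = None,
           step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes from the current step and +/- `window` steps (clock drift),
    skipping negative times and comparing in constant time."""
    now = time.time() if for_time is None else for_time
    return any(
        hmac.compare_digest(totp(secret, now + k * step, step, digits), submitted)
        for k in range(-window, window + 1)
        if now + k * step >= 0
    )


# RFC 6238 test secret (ASCII "12345678901234567890").
secret = b"12345678901234567890"
print(totp(secret, for_time=59, digits=8))  # 94287082 (RFC 6238 test vector)
```

Both the server and the app derive the code from a shared secret and the current time. A stolen password alone is therefore not enough to log in, which is the extra lock described above.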

5.5 Network Segmentation and Privilege Management

Organisations should adopt network segmentation to limit the spread of attacks, and users should be granted only the privileges they need for their work.

For Non-Technical Users: Review user privileges and network access regularly, and adjust them to limit how far an attack can spread.

6. Global Cybersecurity Landscape and Vendor Patches

Other major vendors have also released patches for security vulnerabilities in their products. Because technology is interdependent, these flaws affect the entire digital ecosystem.

- Adobe, Cisco, Google, and others: These companies released updates addressing weaknesses in products used across many sectors. These patches should be applied promptly to strengthen cybersecurity.

- Collaboration and information sharing: Security vendors, researchers, and cybersecurity experts need to stay vigilant and keep sharing information about emerging threats.

7. Conclusion

Security updates from Microsoft and other vendors illustrate the ongoing battle between cybersecurity professionals and cybercriminals. The vulnerabilities addressed in the August 2024 update cycle call for caution and continuous protection of digital platforms. They underline the importance of keeping systems up to date, staying aware of potential threats, and implementing robust security practices. In an ever-expanding threat landscape, we must strengthen our defences to stay safe from attackers who exploit such weaknesses for malicious purposes.