#FactCheck: Viral Video Claiming IAF Air Chief Marshal Acknowledged Loss of Jets Found Manipulated

Executive Summary:

A video circulating on social media falsely claims to show Indian Air Chief Marshal AP Singh admitting that India lost six jets and a Heron drone during Operation Sindoor in May 2025. The footage was digitally manipulated by inserting an AI-generated voice clone of Air Chief Marshal Singh into his recent speech, which was streamed live on August 9, 2025.

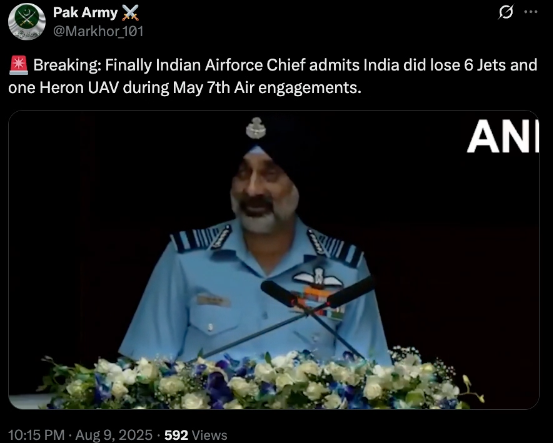

Claim:

A viral video (archived video) (another link) shared by an X user carries the caption “Breaking: Finally Indian Airforce Chief admits India did lose 6 Jets and one Heron UAV during May 7th Air engagements.” The video purports to show the Air Chief Marshal admitting these losses during Operation Sindoor.

Fact Check:

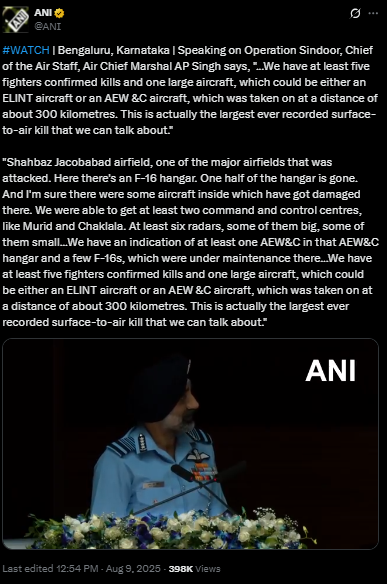

A reverse image search on key frames from the video led us to a clip posted by ANI's official X handle. After watching the full clip, we found no mention of the alleged losses.

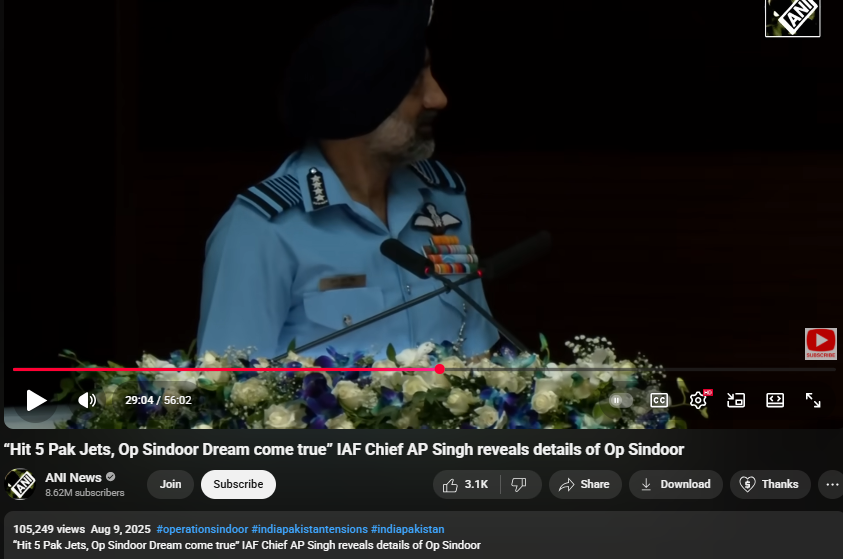

On further research, we found an extended version of the video on ANI's official YouTube channel, published on August 9, 2025. Speaking at the 16th Air Chief Marshal L.M. Katre Memorial Lecture in Marathahalli, Bengaluru, Air Chief Marshal AP Singh did not mention any loss of six jets or a drone in relation to the conflict with Pakistan. The discrepancies observed in the viral clip suggest that portions of the audio may have been digitally manipulated.

The audio in the viral video, particularly the segment at the 29:05 mark alleging the loss of six Indian jets, appeared to be manipulated and displayed noticeable inconsistencies in tone and clarity.

Conclusion:

The viral video claiming that Air Chief Marshal AP Singh admitted to the loss of six jets and a Heron UAV during Operation Sindoor is misleading. A reverse image search traced the footage to a clip posted by ANI’s official X handle, in which no such remarks were made. Further, an extended version on ANI’s official YouTube channel confirmed that, during the 16th Air Chief Marshal L.M. Katre Memorial Lecture, no reference was made to the alleged losses. Additionally, the viral video’s audio, particularly around the 29:05 mark, showed signs of manipulation, with noticeable inconsistencies in tone and clarity.

- Claim: A viral video shows Air Chief Marshal AP Singh admitting that India lost six jets and a Heron UAV during Operation Sindoor

- Claimed On: Social Media

- Fact Check: False and Misleading