#FactCheck - Viral Video Falsely Linked to India; Actually from Bangladesh

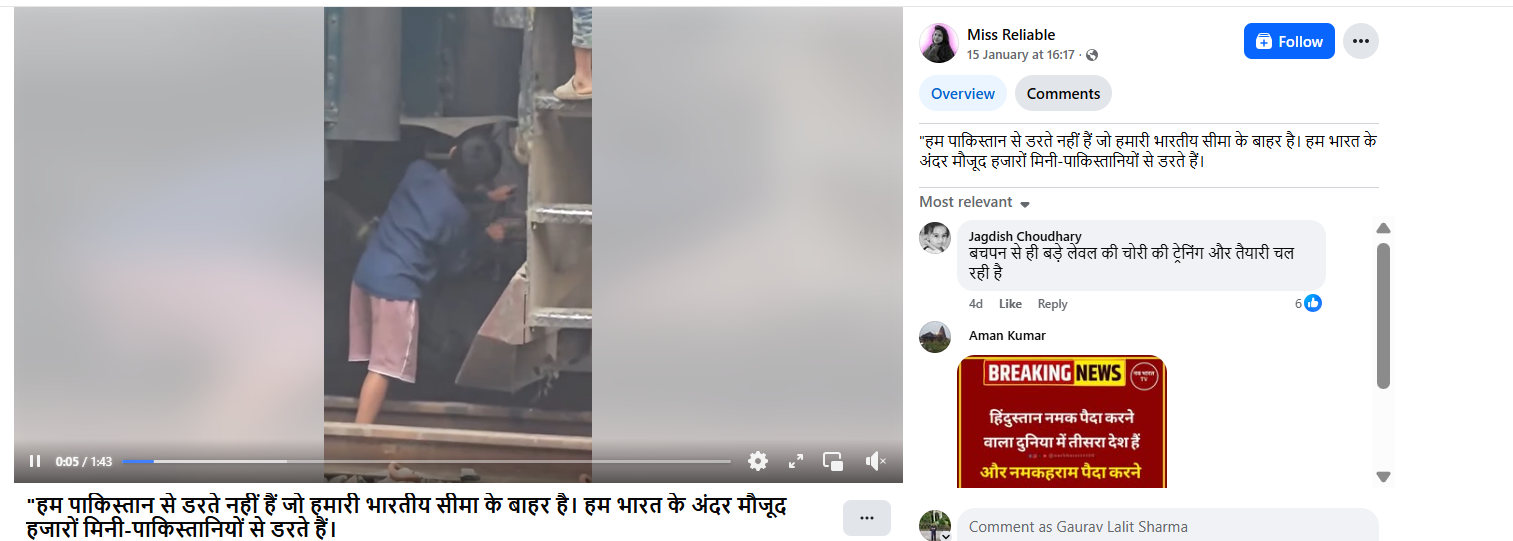

A video circulating widely on social media shows a child throwing stones at a moving train, while a few other children can also be seen climbing onto the engine. The video is being shared with a communal narrative, with claims that the incident took place in India.

Cyber Peace Foundation’s research found the viral claim to be misleading. Our research revealed that the video is not from India, but from Bangladesh, and is being falsely linked to India on social media.

Claim:

On January 15, 2026, a Facebook user shared the viral video claiming it depicted an incident from India. The post carried a provocative caption stating, “We are not afraid of Pakistan outside our borders. We are afraid of the thousands of mini-Pakistans within India.” The post has been widely circulated, amplifying communal sentiments.

Fact Check:

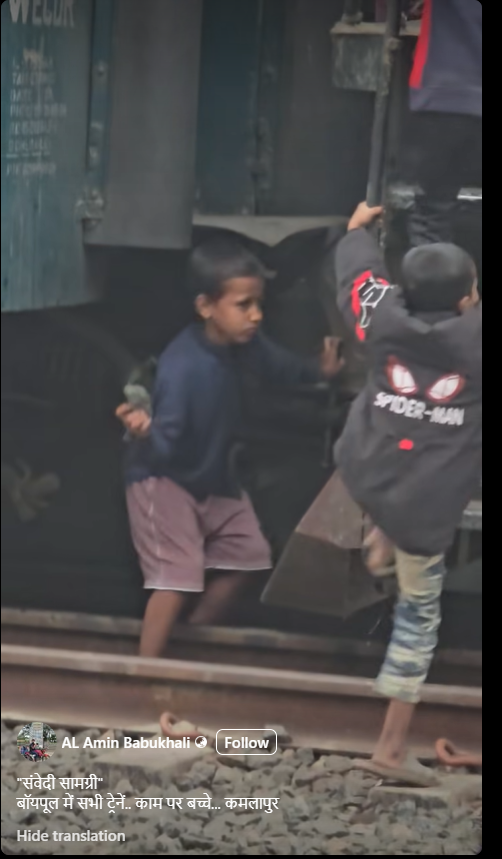

To verify the authenticity of the video, we extracted keyframes from the viral clip and ran a reverse image search on them using Google Lens. The search led us to the same video, uploaded on December 28, 2025 by a Bangladeshi Facebook account named AL Amin Babukhali. The caption of the original post mentions Kamalapur, a well-known railway station in Bangladesh. This strongly indicates that the incident did not occur in India.
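The keyframe step described above can be sketched in code. This is a minimal illustration, not the tooling used in this fact check: it assumes the clip has already been decoded into grayscale frames (each a flat list of 0-255 pixel values), and the names `mean_abs_diff`, `select_keyframes`, and the `threshold` value are hypothetical. A frame is kept only when it differs noticeably from the last kept frame, so a handful of distinct stills can be sent to a reverse image search instead of every frame.

```python
from typing import List, Sequence

# Assumption: a frame is a flat sequence of grayscale pixel values (0-255).
Frame = Sequence[int]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel absolute difference between two equal-sized frames."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def select_keyframes(frames: Sequence[Frame], threshold: float = 20.0) -> List[int]:
    """Return indices of frames that differ noticeably from the last kept frame.

    The threshold is an illustrative value, not a recommended setting.
    """
    if not frames:
        return []
    kept = [0]  # always keep the first frame
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[kept[-1]], frames[i]) >= threshold:
            kept.append(i)
    return kept


if __name__ == "__main__":
    # Synthetic 4-pixel "frames": two near-identical pairs and one new scene.
    clip = [
        [10, 10, 10, 10],
        [12, 11, 10, 9],
        [200, 200, 200, 200],
        [198, 201, 200, 199],
        [50, 60, 50, 60],
    ]
    print(select_keyframes(clip))  # [0, 2, 4]
```

In practice the frames would come from a video decoding library, and the selected stills would then be uploaded manually to a tool such as Google Lens.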

Further analysis of the video shows that the train engine carries the marking “BR”, along with text written in Bengali. “BR” stands for Bangladesh Railway, which points to the origin of the train. To corroborate this, we ran a Google search for images of Bangladesh Railway trains and found multiple images on Getty Images showing train engines with the same design and markings as the one in the viral video. The visual match establishes that the train belongs to Bangladesh Railway.

Conclusion

Our research confirms that the viral video is from Bangladesh, not India. It is being shared on social media with a false and misleading claim to give it a communal angle and link it to India.

Related Blogs

Introduction

As experiments with Generative Artificial Intelligence (AI) continue, companies and individuals are looking for new ways to incorporate and capitalise on it, and big tech companies are betting on its potential through investments. This process also sheds light on how such innovations are carried out and used, and how they affect other stakeholders. Google’s AI Overview feature has raised concerns among website publishers and regulators. Recently, Chegg, a US-based education technology company that provides online resources for high school and college students, filed a lawsuit against Google alleging abuse of its monopoly over search.

Legal Background

Google’s AI Overview/Search Generative Experience (SGE) is a feature that incorporates AI into its standard search tool and helps summarise search results. The summary is presented at the top of the results page, above the published websites. Although the sources of the information are linked, they are partly hidden, and it is hard to tell which of the AI’s claims comes from which link. Finding out requires an additional step: the searcher has to click on a drop-down box. Individual publishers and companies like Chegg have argued that such summaries divert their potential traffic and lead to losses, as they continue to bid higher for the advertising services that Google offers, only to have their target audience discouraged from visiting their websites.

What is unique about the lawsuit filed by Chegg is that it is based on antitrust law rather than copyright law, which Google has had to deal with previously. In August 2024, a US federal judge ruled that Google held an illegal monopoly over the internet search and search text advertising markets, and by November, the US Department of Justice (DOJ) had filed its proposed remedies. These included giving advertisers and publishers more control of their data flowing through Google’s products, opening Google’s search index to the rest of the market, and imposing public oversight over Google’s AI investments. The DOJ has since emphasised its stand on dismantling the search monopoly through structural separation, i.e., requiring Google to divest Chrome. The company is slated to defend itself before DC District Court Judge Amit Mehta starting April 20, 2025.

CyberPeace Insights

As per a report by Statista (Global market share of leading search engines 2015-2025), Google, the market leader, held a search traffic share of around 89.62 per cent. Statista also reports that advertising services account for the majority of Google’s revenue, which totalled 305.63 billion U.S. dollars in 2023. The inclusion of the AI feature is undoubtedly changing how we search for things online. For users, the benefit is an immediate, convenient overview of general information on the subject they looked up. For website publishers, it raises concerns about lost ad revenue owing to fewer impressions and clicks. Even though links to sources are included, they are usually buried. Such a search mechanism calls into question the incentives on both ends: the user’s incentive to explore various viewpoints, as people are now satisfied with the first few results that pop up, and the creator’s or publisher’s incentive to create new content and earn an income from it. There might be a shift from active searching, where one genuinely looks for information, to more passive consumption.

Conclusion

AI might make life more convenient, but in this case, it might also take away from small businesses, their finances, and the results of their hard work. Regulators, publishers, and users need to keep asking such critical questions to hold big tech giants accountable, without compromising publishers’ own creations and publications.

References

- https://www.washingtonpost.com/technology/2024/05/13/google-ai-search-io-sge/

- https://www.theverge.com/news/619051/chegg-google-ai-overviews-monopoly

- https://economictimes.indiatimes.com/tech/technology/google-leans-further-into-ai-generated-overviews-for-its-search-engine/articleshow/118742139.cms?from=mdr

- https://www.nytimes.com/2024/12/03/technology/google-search-antitrust-judge.html

- https://www.odinhalvorson.com/monopoly-and-misuse-googles-strategic-ai-narrative/

- https://cio.economictimes.indiatimes.com/news/artificial-intelligence/google-leans-further-into-ai-generated-overviews-for-its-search-engine/118748621

- https://www.techpolicy.press/the-elephant-in-the-room-in-the-google-search-case-generative-ai/

- https://www.karooya.com/blog/proposed-remedies-break-googles-monopoly-antitrust/

- https://getellipsis.com/blog/googles-monopoly-and-the-hidden-brake-on-ai-innovation/

- https://www.statista.com/statistics/266249/advertising-revenue-of-google/#:~:text=Google:%20annual%20advertising%20revenue%202001,local%20products%20are%20more%20preferred.

- https://www.statista.com/statistics/1381664/worldwide-all-devices-market-share-of-search-engines/

- https://www.techpolicy.press/doj-sets-record-straight-of-whats-needed-to-dismantle-googles-search-monopoly/

Executive Summary:

Old footage of Indian cricketer Virat Kohli celebrating Ganesh Chaturthi in September 2023 is being shared with the false claim that it shows him at the Ram Mandir consecration ceremony in Ayodhya on January 22. The Hindi newspaper Dainik Bhaskar and the Gujarati newspaper Divya Bhaskar also carried the now-viral video in their respective editions on January 23, 2024, amplifying the false claim. Our investigation found that the video is old and shows the cricketer attending a Ganesh Chaturthi celebration.

Claims:

Many social media posts, as well as news outlets such as the Hindi newspaper Dainik Bhaskar and the Gujarati newspaper Divya Bhaskar, claim that the video shows Virat Kohli attending the Ram Mandir consecration ceremony in Ayodhya on January 22. Our investigation found that the video actually shows him attending a Ganesh Chaturthi celebration in September 2023.

The caption in the Dainik Bhaskar e-paper reads, “क्रिकेटर विराट कोहली भी नजर आए” (“Cricketer Virat Kohli was also seen”).

Fact Check:

The CyberPeace Research Team ran a reverse image search on the video and found several earlier posts showing Virat Kohli in the same black outfit. Among them, a Bollywood entertainment Instagram profile named Bollywood Society had shared the same video on its page on 20 September 2023, with the caption, “Virat Kohli snapped for Ganapaati Darshan”.
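The timeline reasoning here is simple date arithmetic: if the earliest known upload predates the event a video is said to show, the video cannot be a recording of that event. A small sketch using the two dates mentioned in this article follows; the function name `days_before` is hypothetical and only serves the illustration.

```python
from datetime import date


def days_before(earliest_upload: date, claimed_event: date) -> int:
    """Days by which the earliest known upload precedes the claimed event.

    A positive result means the footage already existed before the event
    it is said to show, so it cannot be a recording of that event.
    """
    return (claimed_event - earliest_upload).days


if __name__ == "__main__":
    instagram_post = date(2023, 9, 20)  # date of the Bollywood Society post
    consecration = date(2024, 1, 22)    # date of the claimed event
    gap = days_before(instagram_post, consecration)
    print(gap > 0, gap)  # True 124
```

The footage was online roughly four months before the ceremony it is claimed to show.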

Taking a cue from this, we ran a keyword search with the information we had and found an article by the Free Press Journal. According to the article, Virat Kohli visited the residence of Shiv Sena leader Rahul Kanal to seek the blessings of Lord Ganpati. The claim made with the viral video, including by the news outlets, is therefore false and misleading.

Conclusion:

The footage shared in the viral posts and by the news outlets is old: it shows Virat Kohli attending a Ganesh Chaturthi celebration in 2023, not the recent Ram Mandir Pran Pratishtha. We also found no confirmation that Virat Kohli attended the ceremony in Ayodhya on 22 January. Hence, we found this claim to be fake.

- Claim: Virat Kohli attending the Ram Mandir consecration ceremony in Ayodhya on January 22

- Claimed on: YouTube, X

- Fact Check: Fake

Introduction

With the development of technology, voice cloning schemes are one issue that has recently come to light. Scammers are adopting AI, and their methods for deceiving and defrauding people have changed accordingly. Deepfake technology creates realistic imitations of a person’s voice that can be used to commit fraud, dupe a person into giving up crucial information, or impersonate a person for illegal purposes. We will look at the dangers and risks associated with AI voice cloning frauds, how scammers operate, and how one might protect oneself from them.

What is Deepfake?

A “deepfake” is fake or altered audio, video, or film produced with artificial intelligence (AI) that can pass for the real thing. The name combines the words “deep learning” and “fake”. Deepfake technology creates content with a realistic appearance or sound by analysing and synthesising large volumes of data using machine learning algorithms. Con artists use the technology to portray someone saying or doing something that never happened, in audio or visual form. A frequently cited example is deepfake voice impersonation of the American President. Deep voice impersonation technology can be used maliciously, such as in deepfake voice fraud or the dissemination of false information. As a result, there is growing concern about the potential influence of deepfake technology on society and the need for effective tools to detect and mitigate the hazards it may pose.

What exactly are deepfake voice scams?

In deepfake voice scams, artificial intelligence (AI) is used to create synthetic audio recordings that sound like real people. Using this technology, con artists can impersonate someone else over the phone and pressure their victims into providing personal information or paying money. A con artist may pose as a bank employee, a government official, or a friend or relative by using a deepfake voice. The aim is to earn the victim’s trust and raise the likelihood that they will fall for the hoax by conveying a false sense of familiarity and urgency. Deepfake voice scams are increasing in frequency as the technology becomes more widely available, more sophisticated, and harder to detect. To avoid becoming a victim of such fraud, it is necessary to be aware of the risks and take appropriate measures.

Why do cybercriminals use AI voice deepfakes?

Cybercriminals use AI voice deepfake technology to pose as other people or entities and mislead users into providing private information, money, or system access. With this technology, they can create audio recordings that mimic real people or entities, such as CEOs, government officials, or bank employees, and use them to trick victims into taking actions that benefit the criminals. This can involve asking victims to send money, disclose login credentials, or reveal sensitive information. Deepfake AI voice technology can also be employed in phishing attacks, where fraudsters create audio recordings that impersonate genuine messages from organisations or people that victims trust. These recordings can trick people into downloading malware, clicking on dangerous links, or giving out personal information. Additionally, AI voice deepfake technology can be used to produce false audio evidence in support of false claims or accusations. This is particularly risky in legal proceedings, because falsified audio evidence may lead to wrongful convictions or acquittals. AI voice deepfake technology gives con artists a potent tool for tricking and manipulating victims. Organisations and the general public must be informed of this technology’s risks and adopt appropriate safety measures.

How to spot voice deepfakes and avoid them?

Deep fake technology has made it simpler for con artists to edit audio recordings and create phoney voices that exactly mimic real people. As a result, a brand-new scam called the “deep fake voice scam” has surfaced. In order to trick the victim into handing over money or private information, the con artist assumes another person’s identity and uses a fake voice. What are some ways to protect oneself from deepfake voice scams? Here are some guidelines to help you spot them and keep away from them:

- Steer clear of telemarketing calls
  - Making unsolicited phone calls is one of the most common tactics of deepfake voice con artists, who pretend to be bank personnel or government officials.

- Listen closely to the voice
  - Pay special attention to the voice of anyone who phones you claiming to be someone else. Are there any peculiar pauses or inflexions in their speech? Something that doesn’t sound right can be a sign of deepfake voice fraud.

- Verify the caller’s identity
  - It’s crucial to verify the caller’s identity in order to avoid falling for a deepfake voice scam. When in doubt, ask for their name, job title, and employer. You can then do some research to be sure they are who they say they are.

- Never divulge confidential information
  - No matter who calls, never give out personal information like your Aadhaar number, bank account details, or passwords over the phone. Legitimate companies and organisations will never request personal or financial information over the phone; if a caller does, it’s a warning sign that they’re a scammer.

- Report any suspicious activities
  - Inform the appropriate authorities if you think you’ve fallen victim to deepfake voice fraud. This may include your bank, credit card company, local police station, or the nearest cyber cell. By reporting the fraud, you could prevent others from becoming victims.

Conclusion

In conclusion, the field of AI voice deepfake technology is expanding fast and has huge potential for both beneficial and detrimental effects. While deepfake voice technology can be used for good, such as improving speech recognition systems or making voice assistants sound more realistic, it may also be used for harm, such as deepfake voice frauds and impersonation to fabricate stories. As the technology develops and deepfake schemes become harder to detect and prevent, users must be aware of the hazard and take the necessary precautions to protect themselves. It is also necessary to conduct ongoing research and develop efficient techniques to identify and control the risks related to this technology. We must deploy AI appropriately and ethically to ensure that AI voice deepfake technology benefits society rather than harming or deceiving it.