#FactCheck: Viral Fake Post Claims Central Government Offers Unemployment Allowance Under ‘PM Berojgari Bhatta Yojana’

Executive Summary:

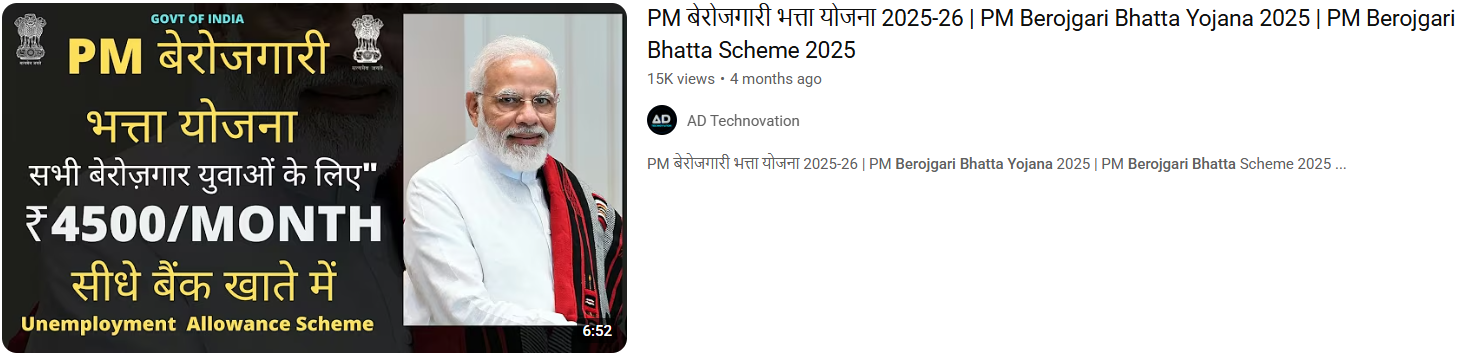

A viral thumbnail and numerous social media posts state that the Government of India is giving unemployed youth ₹4,500 a month under a program labelled "PM Berojgari Bhatta Yojana." The claim has been shared on multiple online platforms and has given many job seekers hope. However, when we independently researched it, we found no verified source or government notification for any such scheme.

Claim:

The viral post states: "The Central Government is conducting a scheme called PM Berojgari Bhatta Yojana in which any unemployed youth would be given ₹4,500 each month. Eligible candidates can apply online and get benefits." Several videos and posts share suspicious, unverified registration links that attempt to get members of the public to hand over their personal information.

Fact check:

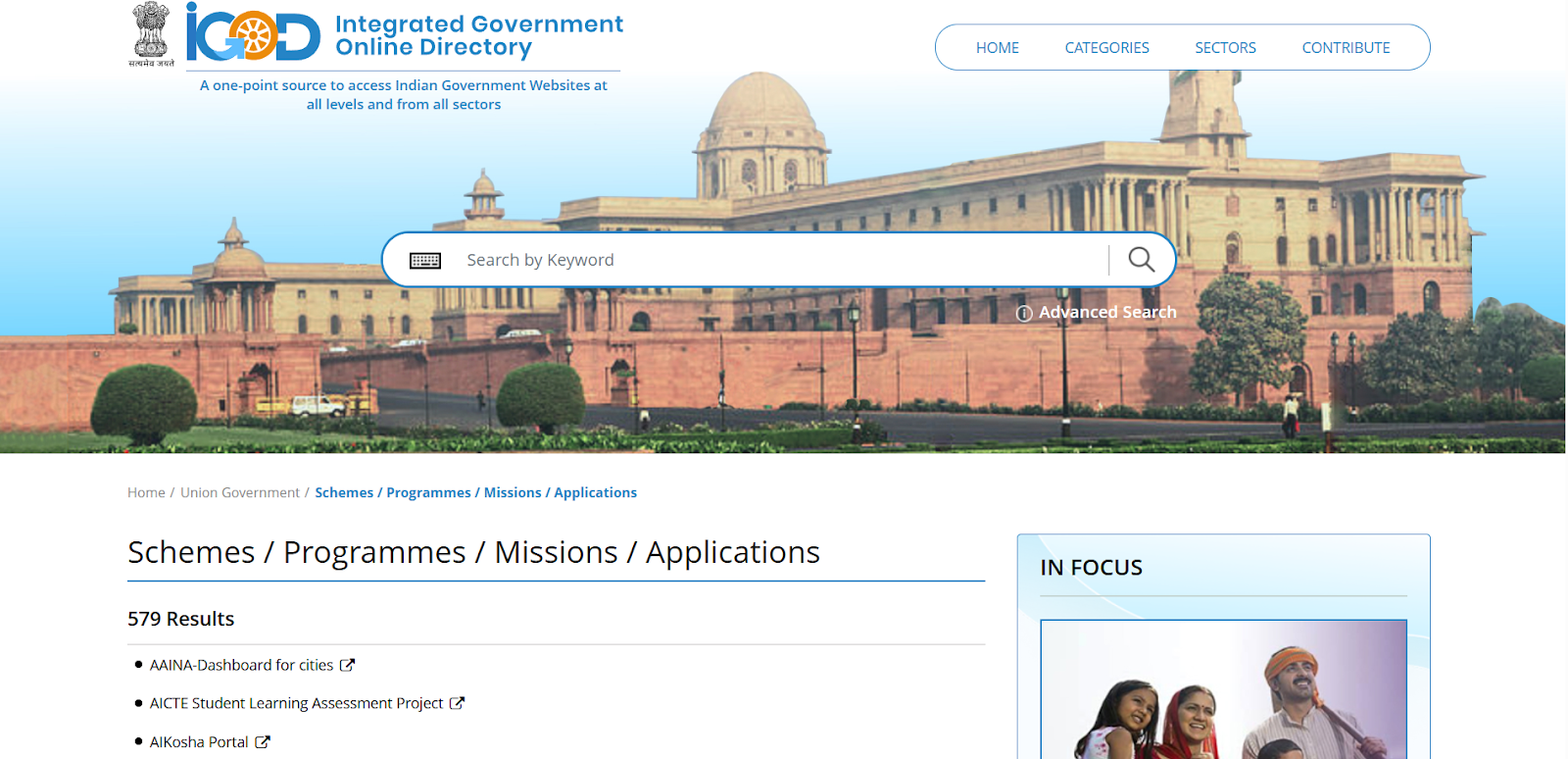

In the course of our verification, we searched the official government portals, including those of the Ministry of Labour and Employment, PMO India, MyScheme, MyGov, and the Integrated Government Online Directory, which lists all legitimate schemes, programmes, missions, and applications run by the Government of India. None of them lists any scheme called PM Berojgari Bhatta Yojana.

Numerous YouTube channels appear to be monetizing such false narratives by playing on viewers' hopes and directing them to misleading websites. These scams typically aim either to harvest personal data or to earn pay-per-click advertising revenue from people drawn in by sensational claims.

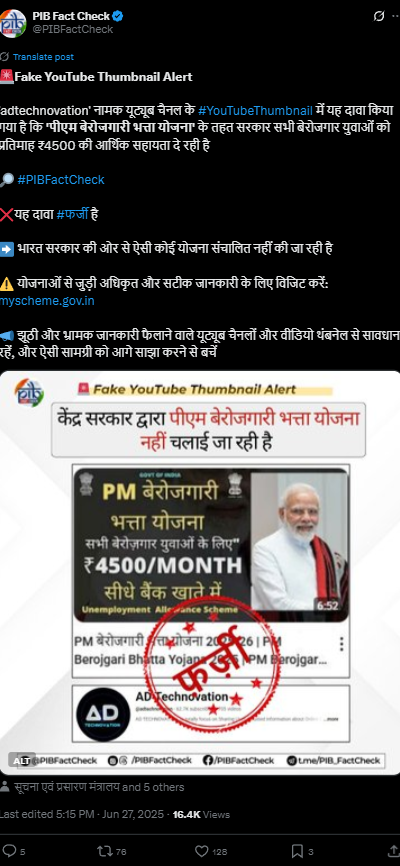

Our findings were later corroborated by PIB Fact Check, which posted a clarification on social media stating: “No such scheme called ‘PM Berojgari Bhatta Yojana’ is in existence. The claim that has gone viral is fake”.

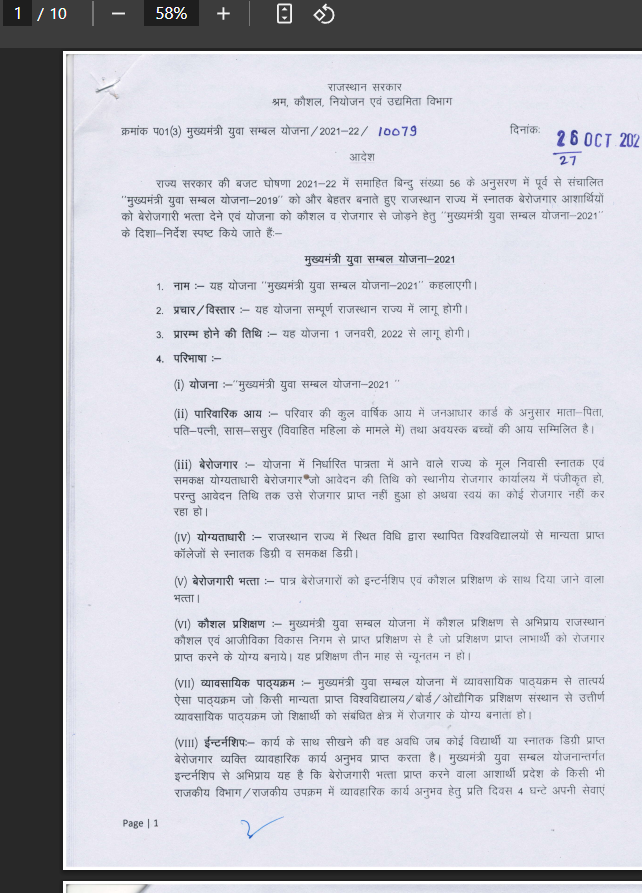

For context, in 2021-22 the Rajasthan government ran a state-level program, the Mukhyamantri Udyog Sambal Yojana (MUSY), that provided ₹4,500/month to unemployed women and transgender persons and ₹4,000/month to unemployed men. This was not a Central Government program, and the current viral claim misrepresents a past, state-level initiative as a nationwide policy.

Conclusion:

The claim of a ₹4,500 monthly unemployment benefit under the PM Berojgari Bhatta Yojana is false. Neither the Central Government nor any government department has launched such a scheme. Our finding aligns with PIB Fact Check, which classifies this as misinformation. We encourage everyone to stay vigilant and not react to viral fake news. Verify claims through official sources before sharing them or taking action. Let's work together to curb misinformation and protect citizens from false hopes and data fraud.

- Claim: A central policy offers jobless individuals ₹4,500 monthly financial relief

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Executive Summary:

A viral social media message claims that the Indian government is offering a ₹5,000 gift to citizens in celebration of Prime Minister Narendra Modi’s birthday. However, this claim is false. The message is part of a deceptive scam that tricks users into transferring money via UPI, rather than receiving any benefit. Fact-checkers have confirmed that this is a fraud using misleading graphics and fake links to lure people into authorizing payments to scammers.

Claim:

The post, circulating widely on platforms such as WhatsApp and Facebook, states that every Indian citizen is eligible to receive ₹5,000 as a gift from the Union Government on the Prime Minister’s birthday. The post includes visuals of PM Modi, BJP party symbols, and UPI app interfaces such as PhonePe or Google Pay, and urges users to click on the BJP election symbol (the lotus) or on the provided link to receive the gift directly into their bank account.

Fact Check:

Our research indicates that there is no official announcement or credible report supporting the claim that the government is offering ₹5,000 under the Pradhan Mantri Jan Dhan Yojana (PMJDY). The claim does not appear on any official government website or verified scheme listing.

While the message was crafted to appear legitimate, it was in fact misleading. The intent was to deceive users into initiating a UPI payment rather than receiving one, thereby putting them at financial risk.

On proceeding through the link, a screen appeared requesting a payment of ₹686 to an unfamiliar UPI ID. Tapping the ‘Pay ₹686’ button prompted for the UPI PIN, which clearly indicates that the transaction would have sent money from the user’s bank account to the scammer’s. A UPI PIN is needed only to send money, never to receive it.

We advise the public to verify such claims through official sources before taking any action.

Our research indicated that the claim in the viral post is false and part of a fraudulent UPI money scam.

Clicking the link that accompanied the viral Facebook post took us to a website, https://wh1449479[.]ispot[.]cc/, with the somewhat odd domain name 'ispot.cc', which is clearly not a government or commonly known domain. On the website, we observed a number of unauthorised visuals, including images of Prime Minister Narendra Modi and of Union Minister and BJP President J.P. Nadda, the national symbol, the BJP symbol, and the Pradhan Mantri Jan Dhan Yojana logo. These visuals appear to have been used deliberately to convince users that the website was legitimate.
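The domain check described above can be roughly sketched in code. This is a minimal illustration, not a complete safety test: it assumes a hypothetical, incomplete allow-list of official suffixes (`gov.in`, `nic.in`, `mygov.in`), and the helper names `refang` and `is_official_domain` are our own. A matching suffix is only one signal and does not by itself prove that a page is genuine.

```python
from urllib.parse import urlparse

# Hypothetical, incomplete allow-list of official domain suffixes (an assumption for illustration only).
OFFICIAL_SUFFIXES = ("gov.in", "nic.in", "mygov.in")


def refang(url: str) -> str:
    """Undo the '[.]' defanging used when quoting suspicious links safely."""
    return url.replace("[.]", ".")


def is_official_domain(url: str) -> bool:
    """True only if the hostname equals an allow-listed suffix or is a subdomain of one."""
    host = (urlparse(refang(url)).hostname or "").lower()
    # Match on a dot boundary so look-alikes such as 'fakegov.in' or 'gov.in.ispot.cc' fail.
    return any(host == suffix or host.endswith("." + suffix) for suffix in OFFICIAL_SUFFIXES)


if __name__ == "__main__":
    for link in ("https://wh1449479[.]ispot[.]cc/", "https://cybercrime.gov.in", "https://gov.in.ispot.cc"):
        print(link, "->", "official" if is_official_domain(link) else "NOT official")
```

Checking on a dot boundary, rather than searching for "gov.in" anywhere in the link, matters because scam sites often embed official-looking words in a subdomain or path.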

Conclusion:

The assertion that the Indian government is handing out ₹5,000 to all citizens is false and should be reported as a scam. The message exploits public trust in government schemes to trick users into sending money to criminals through UPI. Do not click on links in, or respond to, any such message about a government gift without first verifying it. If you or someone you know has fallen victim to this fraud, report it immediately to your bank and through the National Cyber Crime Reporting Portal (https://cybercrime.gov.in), or call the cyber helpline at 1930. Always check such messages against the official government website first.

- Claim: The Modi Government is distributing ₹5,000 to citizens through UPI apps

- Claimed On: Social Media

- Fact Check: False and Misleading


Introduction

Misinformation is a major issue in the AI age, exacerbated by the broad adoption of AI technologies. The misuse of deepfakes, bots, and content-generating algorithms has made it simpler for bad actors to spread misinformation on a large scale. These technologies can be used to create manipulative audio and video content, propagate political propaganda, defame individuals, or incite societal unrest. AI-powered bots can flood internet platforms with false information, swaying public opinion in subtle ways. The spread of misinformation endangers democracy, public health, and social order: it can affect voter sentiment, erode faith in the election process, and even spark violence. Addressing misinformation requires expanding digital literacy, strengthening platforms' detection capabilities, introducing regulatory checks, and removing false information.

AI's Role in Misinformation Creation

AI's capabilities to generate content have grown exponentially in recent years. Legitimate uses of AI often take a backseat to the exploitation of content that already exists on the internet. A prime example is AI-powered bots flooding social media platforms with fake news at a scale and speed that make it impossible for humans to track it and determine whether it is true or false.

Netizens in India are greatly influenced by viral content on social media, so AI-generated misinformation can have particularly negative consequences. Being literate in the traditional sense does not automatically give one the ability to judge the authenticity and impact of social media content. Literacy, be it social media literacy or internet literacy, is under attack, and one of the main contributors is the rampant rise of AI-generated misinformation. Some of the most common topics of misinformation are elections, public health, and communal issues. What connects them is that they evoke strong emotions, so they can go viral very quickly and influence social behaviour to the extent of causing social unrest, political instability, and even violence. Such developments breed public mistrust in authorities and institutions, which is dangerous in any country, but even more so in India, which is home to a very large population comprising a diverse range of identity groups.

Misinformation and Gen AI

Generative AI (GAI) is a powerful tool that allows individuals to create massive amounts of realistic-seeming content, including imitating real people's voices and creating photos and videos that are indistinguishable from reality. Advanced deepfake technology blurs the line between authentic and fake. However, when used smartly, GAI is also capable of providing a greater number of content consumers with trustworthy information, counteracting misinformation.

Generative AI (GAI) is a technology that has entered the realm of autonomous content production and language creation, which is linked to the issue of misinformation. It is often difficult to determine if content originates from humans or machines and if we can trust what we read, see, or hear. This has led to media users becoming more confused about their relationship with media platforms and content and highlighted the need for a change in traditional journalistic principles.

We have seen a number of examples of GAI in action in recent times, from fully AI-generated fake news websites to fake Joe Biden robocalls telling Democratic voters in the U.S. not to vote. The consequences of such content, and its potential impact on life as we know it, are almost too vast to comprehend at present. If our ability to identify reality is quickly fading, how will we make critical decisions or navigate the digital landscape safely? The safe and ethical use of this technology therefore needs to be a top global priority.

Challenges for Policymakers

AI's ability to generate anonymous content in massive volumes makes it difficult to hold perpetrators accountable. The decentralised nature of the internet further complicates regulation, as misinformation can spread across multiple platforms and jurisdictions. Balancing the protection of freedom of speech and expression against the need to combat misinformation is a challenge: over-regulation could stifle legitimate discourse, while under-regulation could let misinformation spread unchecked. India's multilingual population adds further layers to an already complex issue, as AI-generated misinformation can be tailored to different languages and cultural contexts, making it harder to detect and counter. Strategies that cater to this multilingual population are therefore necessary.

Potential Solutions

To effectively combat AI-generated misinformation in India, a multi-faceted approach is essential. Some potential solutions are as follows:

- Developing a framework specifically designed to address AI-generated content. It should include stricter penalties for those who originate and disseminate fake content, proportionate to its consequences, and should establish clear and concise guidelines requiring social media platforms to take proactive measures to detect and remove AI-generated misinformation.

- Investing in AI-driven tools for customised, real-time detection and flagging of misinformation. These can help identify deepfakes, manipulated images, and other forms of AI-generated content.

- Encouraging collaboration between tech companies, cybersecurity organisations, academic institutions, and government agencies to develop solutions for combating misinformation.

- Digital literacy programs will empower individuals by training them to evaluate online content. Educational programs in schools and communities teach critical thinking and media literacy skills, enabling individuals to better discern between real and fake content.

Conclusion

AI-generated misinformation presents a significant threat to India, and the risks grow in step with the rapid pace of the nation's technological development. As the country moves towards greater digital literacy and unprecedented mobile technology adoption, we must be aware that even a single piece of misinformation can quickly reach and deeply influence a large portion of the population. Indian policymakers need to rise to this challenge by developing comprehensive strategies that focus not only on regulation and technological innovation but also on public education. Bad actors misuse AI technologies to create hyper-realistic fake content, including deepfakes and fabricated news stories, which can be extremely hard to distinguish from the truth. The battle against misinformation is complex and ongoing, but by developing and deploying the right policies, tools, digital defence frameworks, and other mechanisms, we can navigate these challenges and safeguard the online information landscape.


Introduction

A disturbing trend of courier-related cyber scams has emerged, targeting unsuspecting individuals across India. In these scams, fraudsters pose as officials from reputable organisations, such as courier companies or government departments like the narcotics bureau. Using sophisticated social engineering tactics, they deceive victims into divulging personal information and transferring money under false pretences. Recently, a woman IT professional from Mumbai fell victim to such a scam, losing Rs 1.97 lakh.

Instances of courier-related cyber scams

Recently, two significant cases of courier-related cyber scams have surfaced, illustrating the alarming prevalence of such fraudulent activities.

- Case in Delhi: A doctor in Delhi fell victim to an online scam, losing a staggering amount of approximately Rs 4.47 crore. The fraudsters posed as representatives of a courier company, told the doctor that a package had been seized, and demanded large sums of money for "verification". The doctor trusted the callers and lost a substantial amount.

- Case in Mumbai: In a strikingly similar incident, an IT professional from Mumbai, Maharashtra, lost Rs 1.97 lakh to cyber fraudsters pretending to be officials from the narcotics department. The fraudsters contacted the victim, claiming her Aadhaar number was linked to the criminals’ bank accounts. They coerced the victim into transferring money for verification through deceptive tactics and false evidence, resulting in a significant financial loss.

These recent cases highlight the growing threat of courier-related cyber scams and the devastating impact they can have on unsuspecting individuals. They underscore the urgent need for greater awareness, vigilance, and preventive measures to avoid falling victim to such fraudulent schemes.

Nature of the Attack

The scam typically begins with a fraudulent call from someone claiming to be associated with a courier company. The caller tells the victim that their package is stuck or has been seized, then escalates the situation by bringing in supposed law enforcement agencies, such as the narcotics department. The fraudsters manipulate victims by creating a sense of urgency and fear, and persuade them to download communication apps like Skype to make the interaction seem credible. Fabricated evidence and false claims then trick victims into sharing personal information, including Aadhaar numbers, and coerce them into making financial transactions for "verification" purposes.

Best Practices to Stay Safe

To protect oneself from courier-related cyber scams and similar frauds, individuals should follow these best practices:

- Verify Calls and Identity: Be cautious when receiving calls from unknown numbers. Verify the caller’s identity by cross-checking with relevant authorities or organisations before sharing personal information.

- Exercise Caution with Personal Information: Avoid sharing sensitive personal information, such as Aadhaar numbers, bank account details, or passwords, over the phone or through messaging apps unless necessary and with trusted sources.

- Beware of Urgency and Threats: Scammers often create a sense of urgency or threaten legal consequences to manipulate victims. Remain vigilant and question any unexpected demands for money or personal information.

- Double-Check Suspicious Claims: If contacted by someone claiming to be from a government department or law enforcement agency, independently verify their credentials by contacting the official helpline or visiting the department’s official website.

- Educate and Spread Awareness: Share information about these scams with friends, family, and colleagues to raise awareness and collectively prevent others from falling victim to such frauds.

Legal Remedies

Individuals who fall victim to a courier-related cyber scam can take the following legal actions:

- File a First Information Report (FIR): One of the primary legal actions available to victims seeking justice and a chance to recover their losses is to file an FIR with the local police. The following provisions of Indian law may apply in such cases:

- Section 419 of the Indian Penal Code (IPC): This section deals with the offence of cheating by impersonation. It states that whoever cheats by impersonating another person shall be punished with imprisonment of either description for a term which may extend to three years, or with a fine, or both.

- Section 420 of the IPC: This section covers the offence of cheating and dishonestly inducing delivery of property. It states that whoever cheats and thereby dishonestly induces the person deceived to deliver any property shall be punished with imprisonment of either description for a term which may extend to seven years and shall also be liable to pay a fine.

- Section 66(C) of the Information Technology (IT) Act, 2000: This section deals with the offence of identity theft. It states that whoever, fraudulently or dishonestly, makes use of the electronic signature, password, or any other unique identification feature of any other person shall be punished with imprisonment of either description for a term which may extend to three years and shall also be liable to pay a fine.

- Section 66(D) of the IT Act, 2000 pertains to the offence of cheating by personation by using a computer resource. It states that whoever, by means of any communication device or computer resource, cheats by personating shall be punished with imprisonment of either description for a term which may extend to three years and shall also be liable to pay a fine.

- National Cyber Crime Reporting Portal: Another powerful resource for victims is the National Cyber Crime Reporting Portal (https://cybercrime.gov.in), supported by a 24×7 helpline number, 1930. The portal serves as a centralised platform for reporting cybercrimes, including financial fraud.

Conclusion:

The rise of courier-related cyber scams demands increased vigilance from individuals to protect themselves against fraud. Heightened awareness, caution, and scepticism when dealing with unknown callers or suspicious requests are crucial. By following best practices, such as verifying identities, avoiding sharing sensitive information, and staying updated on emerging scams, individuals can minimise the risk of falling victim to these fraudulent schemes. Furthermore, spreading awareness about such scams and promoting cybersecurity education will play a vital role in creating a safer digital environment for everyone.