#FactCheck: Viral AI Video Showing Finance Minister of India endorsing an investment platform offering high returns.

Executive Summary:

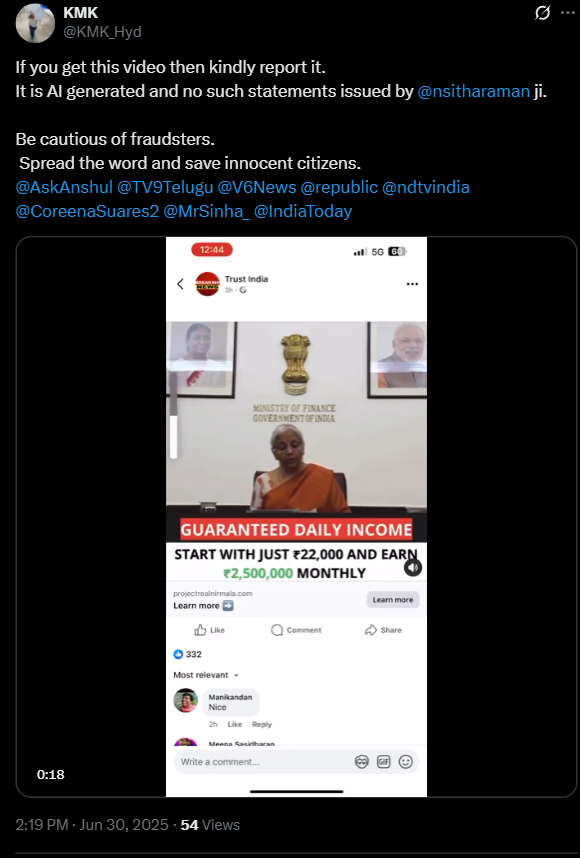

A video circulating on social media falsely claims that India’s Finance Minister, Smt. Nirmala Sitharaman, has endorsed an investment platform promising unusually high returns. Upon investigation, it was confirmed that the video is a deepfake—digitally manipulated using artificial intelligence. The Finance Minister has made no such endorsement through any official platform. This incident highlights a concerning trend of scammers using AI-generated videos to create misleading and seemingly legitimate advertisements to deceive the public.

Claim:

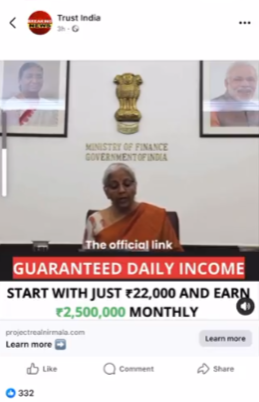

A viral video falsely claims that the Finance Minister of India, Smt. Nirmala Sitharaman, is endorsing an investment platform, promoting it as a secure and highly profitable scheme for Indian citizens. The video alleges that individuals can start with an investment of ₹22,000 and earn up to ₹25 lakh per month as guaranteed daily income.
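A quick arithmetic check shows how implausible the promised figures are. The sketch below uses only the two amounts quoted in the video; the variable and function names are illustrative and not part of the original investigation.

```python
# Figures as quoted in the viral video's claim.
initial_investment_inr = 22_000
claimed_monthly_income_inr = 25 * 100_000  # 1 lakh = 100,000 rupees


def monthly_return_multiple(investment: float, monthly_income: float) -> float:
    """Return how many times the initial investment is promised back each month."""
    return monthly_income / investment


multiple = monthly_return_multiple(initial_investment_inr, claimed_monthly_income_inr)
print(f"Claimed monthly payout is about {multiple:.0f}x the initial investment")
```

A "guaranteed" payout of more than a hundred times the principal every month is a common hallmark of fraudulent schemes.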

Fact check:

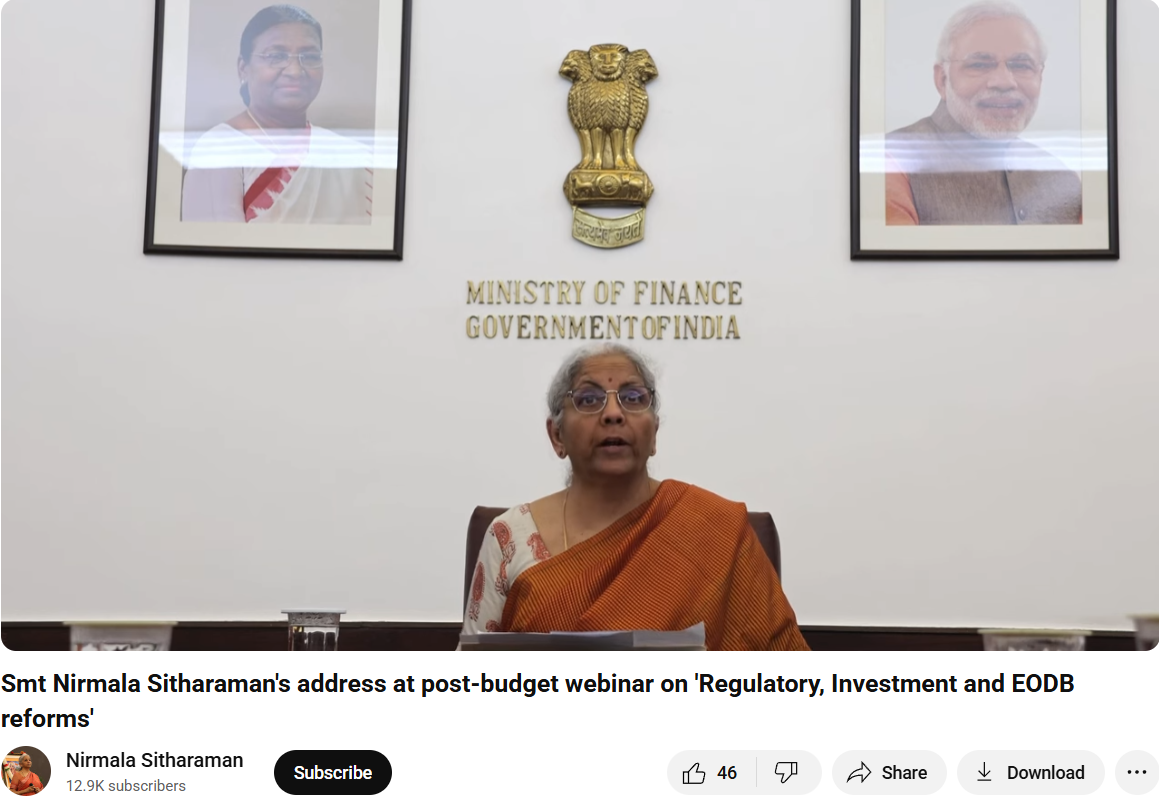

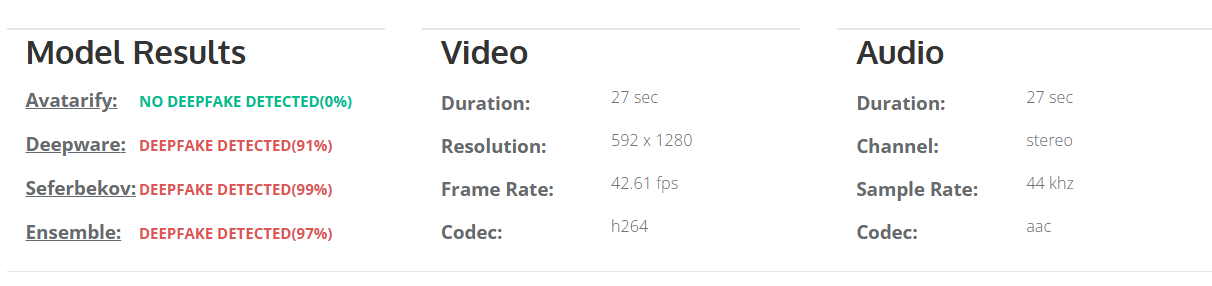

A reverse image search on key frames from the viral video led us to an original YouTube clip of the Finance Minister of India delivering a speech at a webinar on 'Regulatory, Investment and EODB reforms'. We reviewed the full original video and found no mention of the investment scheme promoted in the viral clip.

The original footage was manipulated by adding an AI-generated voice track and scripted text to make it appear as if she had approved an investment platform.

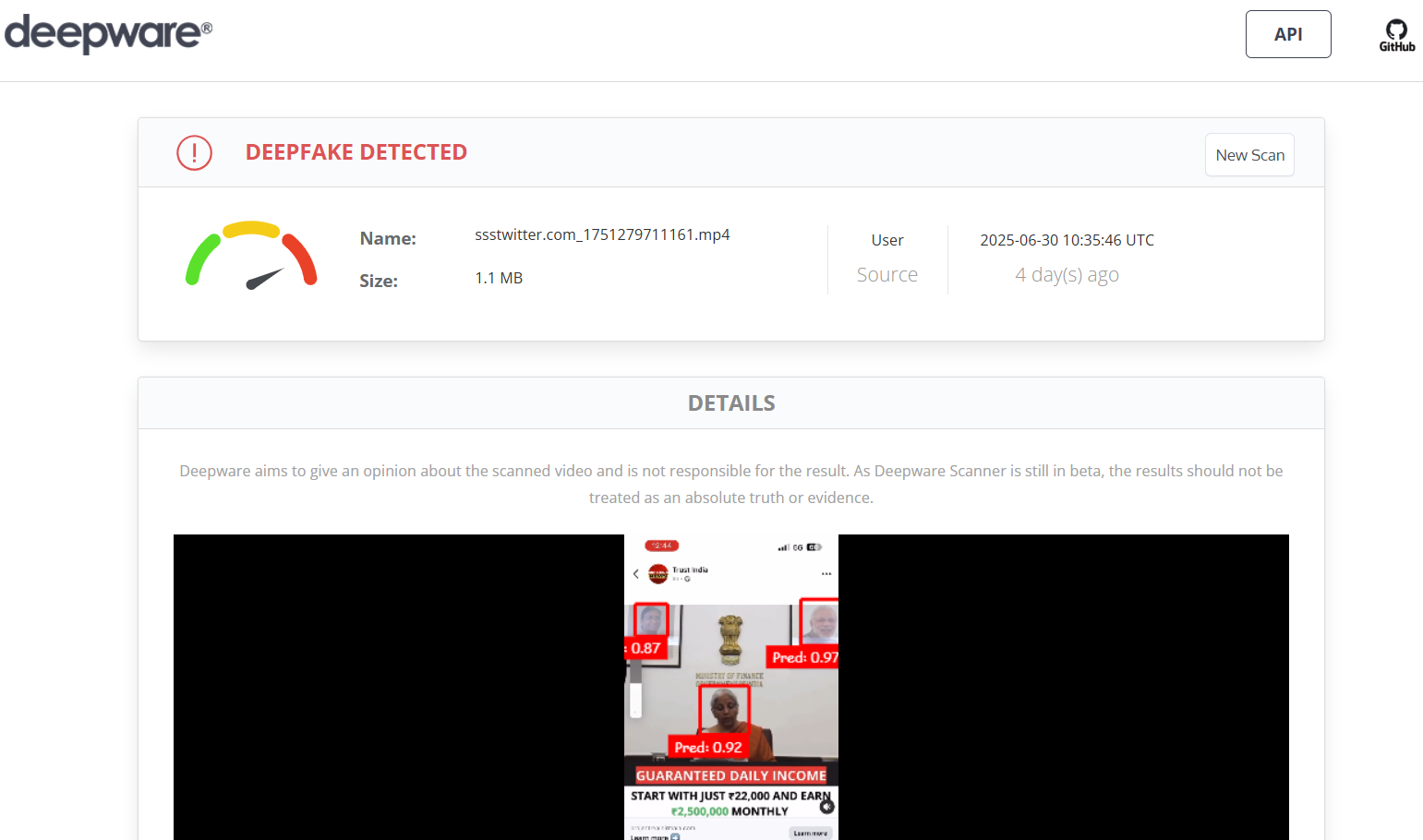

Deepfakes can look fairly realistic in their facial movement; however, a close look at this video reveals mismatched lip-syncing and unusual visual transitions, and the analysis results support our finding that the video was manipulated.

In addition, we found no acknowledgment of any such endorsement on any official government website or from any credible news outlet. The video is a fabricated piece of misinformation that attempts to scam viewers by exploiting the image of a trusted public figure.

Conclusion:

The viral video showing the Finance Minister of India, Smt. Nirmala Sitharaman, promoting an investment platform is fake and AI-generated. This is a clear case of deepfake misuse aimed at misleading the public and luring individuals into fraudulent schemes. Citizens are advised to exercise caution, verify any such claims through official government channels, and refrain from clicking on unknown investment links circulating on social media.

- Claim: Nirmala Sitharaman promoted an investment app in a viral video.

- Claimed On: Social Media

- Fact Check: False and Misleading
