#FactCheck - Viral Videos of Mutated Animals Debunked as AI-Generated

Executive Summary:

Several videos claiming to show bizarre, mutated animals with features such as a seal's body and a cow's head have gone viral on social media. Upon investigation, these claims were found to be false. No credible source reporting such creatures was found, and closer examination revealed anomalies typical of AI-generated content, such as unnatural leg movements, unnatural head movements and spectators' shoes fused together. AI-content detectors confirmed the artificial nature of these videos. Further, digital creators were found posting similar fabricated videos. Thus, these viral videos are identified as AI-generated and not real depictions of mutated animals.

Claims:

Viral videos show sea creatures with the head of a cow or the head of a tiger.

Fact Check:

On receiving several videos of bizarre mutated animals, we searched for credible news coverage of such creatures but found none. We then watched the videos closely and found anomalies that are commonly seen in AI-manipulated imagery.

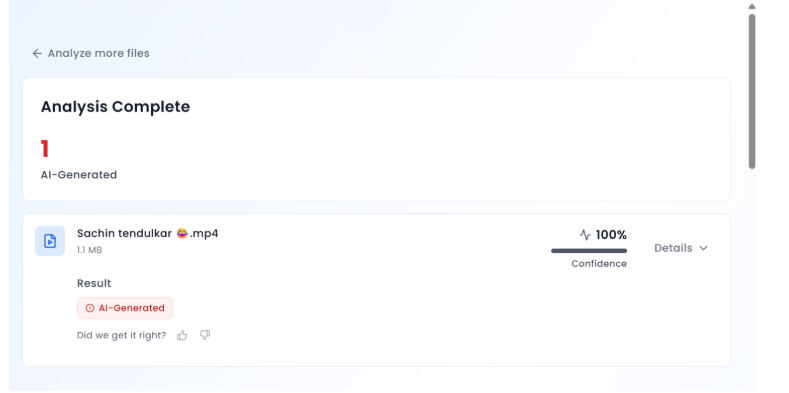

Taking a cue from this, we checked all the videos with an AI video detection tool named TrueMedia. For the first video, the tool found the audio to be AI-generated. We then divided the video into keyframes, and the tool found the imagery in those frames to be AI-generated as well.
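The keyframe step can be illustrated with a minimal Python sketch. It is an illustration only: the article does not say how the keyframes were chosen or how TrueMedia is operated, so the code does not call TrueMedia at all. It assumes fixed-interval sampling as a stand-in for true keyframe extraction, and the function names, the per-frame scores and the 0.5 threshold are all hypothetical.

```python
def keyframe_indices(total_frames: int, fps: float, every_seconds: float = 1.0) -> list[int]:
    """Pick frame indices roughly once per `every_seconds`.

    Assumption: fixed-interval sampling stands in for real keyframe
    extraction; the article does not describe the method actually used.
    """
    if total_frames <= 0 or fps <= 0 or every_seconds <= 0:
        return []
    step = max(1, round(fps * every_seconds))
    return list(range(0, total_frames, step))


def summarize(frame_scores: list[float], threshold: float = 0.5) -> dict:
    """Summarize hypothetical per-frame 'AI-generated' scores in [0, 1].

    Assumption: the scores come from some detector run on each sampled
    frame; the threshold is an arbitrary illustrative cut-off.
    """
    flagged = [s for s in frame_scores if s >= threshold]
    share = len(flagged) / len(frame_scores) if frame_scores else 0.0
    return {
        "frames": len(frame_scores),
        "flagged": len(flagged),
        "flagged_share": share,
    }


if __name__ == "__main__":
    # A 3-second clip at 30 frames per second, sampled once per second.
    print(keyframe_indices(total_frames=90, fps=30.0))
    # Made-up scores for four sampled frames.
    print(summarize([0.9, 0.2, 0.7, 0.8]))
```

A summary like this is only a starting point for review. In the investigation described here, the detector results were read alongside the visual anomalies and the absence of credible news coverage.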

We investigated the second video in the same way. We divided the video into keyframes and analyzed them with the same AI detection tool, TrueMedia.

The tool flagged the video as suspicious, so we analyzed a frame from it.

The detection tool found that frame to be AI-generated, which indicates that the video is AI-manipulated. We then analyzed the third and final video, which the detection tool also flagged as suspicious.

The detection tool found a frame of that video to be AI-manipulated, which indicates that the video itself is AI-manipulated. Hence, the claims made in all three videos are misleading and fake.

Conclusion:

The viral videos claiming to show mutated animals with features like a seal's body and a cow's head are AI-generated and not real. An investigation by the CyberPeace Research Team found multiple anomalies typical of AI-generated content, and AI-content detectors confirmed that the videos were AI-fabricated. Therefore, the claims made in these videos are false.

- Claim: Viral videos show sea creatures with the head of a cow, the head of a tiger, or the head of a bull.

- Claimed on: YouTube

- Fact Check: Fake & Misleading