Introduction

When tragedy strikes, moments are fragile, people are vulnerable, emotions run high, and every second counts. In such critical situations, information becomes as crucial as food, water, shelter, and medication. Yet any information received in these moments, verified or not, can trigger stampedes and chaos. Alongside the tragedy, whether natural or man-made, emerges another threat: misinformation. People, desperate for answers, cling to whatever they can find.

Tragedies can take many forms. These may include natural disasters, mass accidents, terrorist activities, or other emergencies. During the 2023 earthquakes in Turkey, misinformation spread on social media claiming that the Yarseli Dam had cracked and was about to burst. People believed it and began migrating from the area. Panic followed, and search and rescue teams stopped operations in that zone. Precious hours were lost. Later, it was confirmed to be a rumour. By then, the damage was already done.

Similarly, after the recent plane crash in Ahmedabad, India, numerous rumours and WhatsApp messages spread rapidly. One message claimed to contain the investigation report on the crash of Air India flight AI-171. The Press Information Bureau (PIB) later flagged the message and declared it fake.

These examples show how misinformation can take control of already painful moments. During emergencies, when emotions are intense and fear is widespread, false information spreads faster and hits harder. Some people share it unknowingly, while others do so to gain attention or push a certain agenda. But for those already in distress, the effect is often the same. It brings more confusion, heightens anxiety, and adds to their suffering.

Understanding Disasters and the Role of Media in Crisis

A disaster can be defined as a natural or human-caused situation that transforms the usual life of a society into a crisis far beyond its existing response capacity. Its effects can range from a mere disruption of daily routines to an inability to meet basic needs such as food, water and shelter. Hence, a disaster is not just a sudden event. An event becomes a disaster when it overwhelms a community’s ability to cope with it.

To cope with such situations, there is an organised approach called Disaster Management. It includes taking preventive measures, minimising damage and helping communities recover. Earlier, public institutions such as governments were the main actors in disaster management. Today, responsibility is shared far more widely: academic institutions, media outlets and even ordinary people all have a role to play.

Communication is an important element in disaster management. It saves lives when done correctly. People who are vulnerable need to know what’s happening, what they should do and where to seek help. But in today’s era of instantaneous communication, it also carries risk, because false information can travel as quickly as accurate information.

Research shows that the media often fails to focus on disaster preparedness. For example, studies found that during the 2019 Istanbul earthquake, the media focused more on dramatic scenes than on educating people. Similar trends were seen during the 2023 Turkey earthquakes. Rather than helping people prepare or stay calm, much of the media coverage amplified fear and sensationalised suffering. This shows a shift from preventive, helpful reporting to reactive, emotional storytelling. In doing so, the media sometimes fails in its duty to support resilience and, worse, can become a channel for spreading misinformation during already traumatic events. However, fighting misinformation is not left to individual responsibility alone. In India, spreading false alarms is penalised under disaster management law. Section 54 of the Disaster Management Act, 2005 mentions that "Whoever makes or circulates a false alarm or warning as to disaster or its severity or magnitude, leading to panic, shall, on conviction, be punishable with imprisonment which may extend to one year or with a fine."

AI as a Tool in Countering Misinformation

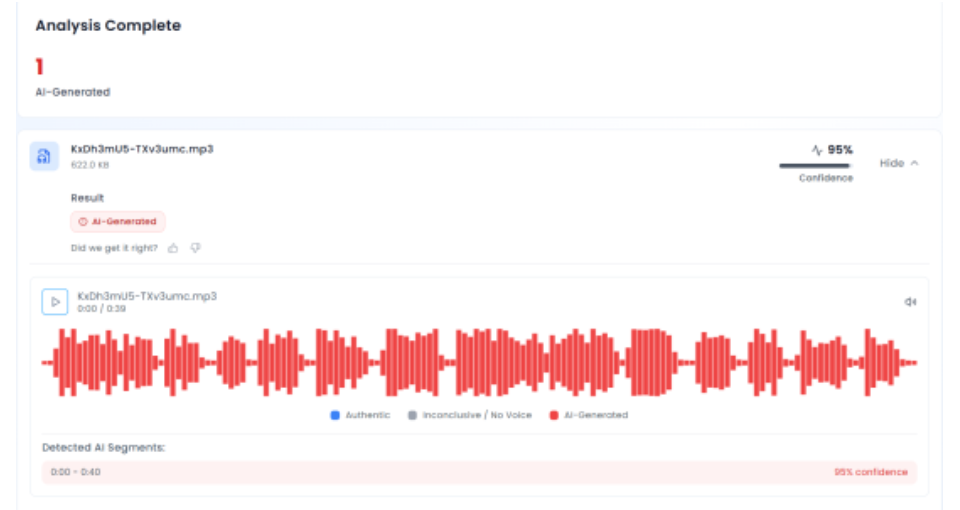

AI has emerged as a powerful tool for fighting misinformation. AI technologies like Natural Language Processing (NLP) and Machine Learning (ML) have been reported to spot and classify misinformation with up to 97% accuracy. When AI flags unverified content on platforms like TikTok, shares decrease by 24% and likes drop by 7%. On Facebook, up to 95% fewer people view content that carries a fact-checking label. Facebook AI also eliminates 86% of graphic violence, 96% of adult nudity, 98.5% of fake accounts and 99.5% of content related to terrorism. These tools help rebuild public trust in addition to limiting the dissemination of harmful content. In 2023, support for tech companies acting to combat misinformation rose to 65%, indicating a positive change in public expectations and awareness.

How to Counter Misinformation

Experts should step up in such situations. Social media has allowed many so-called experts to spread fake information without any real knowledge, research, or qualification. In such conditions, real experts such as authorities, doctors, scientists, public health officials and researchers need to take charge. By directly addressing myths and false claims, they can reduce confusion and stop misinformation before it spreads further.

Responsible journalism is crucial during crises. In times of panic, people look to the media for guidance. Hence, it is important to fact-check every detail before publishing. Reporting that is based on unclear tips, social media posts, or rumours can cause major harm by inciting mistrust, fear, or even dangerous behaviour. Cross-checking information, relying on credible sources and promptly correcting errors are all components of responsible journalism. Protecting the public is more important than merely disseminating the news.

Focus on accuracy rather than speed. News spreads in the blink of an eye in today's world, and media outlets and influencers often come under pressure to publish first. But in tragic situations like natural disasters and disease outbreaks, being first matters far less than being accurate. A single piece of misinformation can spark mass panic, slow down emergency efforts and lead people to make rash decisions. Taking a little more time to check the facts ensures that the information being shared is helpful, not harmful. Accuracy may save numerous lives during tragedies.

Misinformation spreads quickly, and it can only be curbed if people learn to critically evaluate what they hear and see. This entails being able to spot biased or deceptive headlines, cross-check claims and identify reliable sources. Digital literacy is of utmost importance; it makes people less susceptible to fear-based rumours, conspiracy theories and hoaxes.

Disaster preparedness programs should include awareness about the risks of spreading unverified information. Communities, schools and media platforms must educate people on how to respond responsibly during emergencies by staying calm, checking facts and sharing only credible updates. Spreading fake alerts or panic-inducing messages during a crisis is not only dangerous, but it can also have legal consequences. Public communication must focus on promoting trust, calm and clarity. When people understand the weight their words can carry during a crisis, they become part of the solution, not the problem.

References:

- https://dergipark.org.tr/en/download/article-file/3556152

- https://www.dhs.gov/sites/default/files/publications/SMWG_Countering-False-Info-Social-Media-Disasters-Emergencies_Mar2018-508.pdf

- https://english.mathrubhumi.com/news/india/fake-whatsapp-message-air-india-crash-pib-fact-check-fcwmvuyc