#FactCheck - Viral Photos Falsely Linked to Iranian President Ebrahim Raisi's Helicopter Crash

Executive Summary:

On 20th May, 2024, Iranian authorities confirmed that President Ebrahim Raisi and several others had died in a helicopter crash in northwestern Iran. Images circulating on social media that claim to show the crash site are misattributed. The CyberPeace Research Team’s investigation found that these images show the wreckage of a training plane crash in Iran's Mazandaran province in 2019 or 2020. Reverse image searches, together with reports from Tehran-based Rokna Press and Ten News, confirmed that the viral images originated from the crash of a police force's two-seater training plane, not the recent helicopter crash.

Claims:

The images circulating on social media claim to show the site of Iranian President Ebrahim Raisi's helicopter crash.

Fact Check:

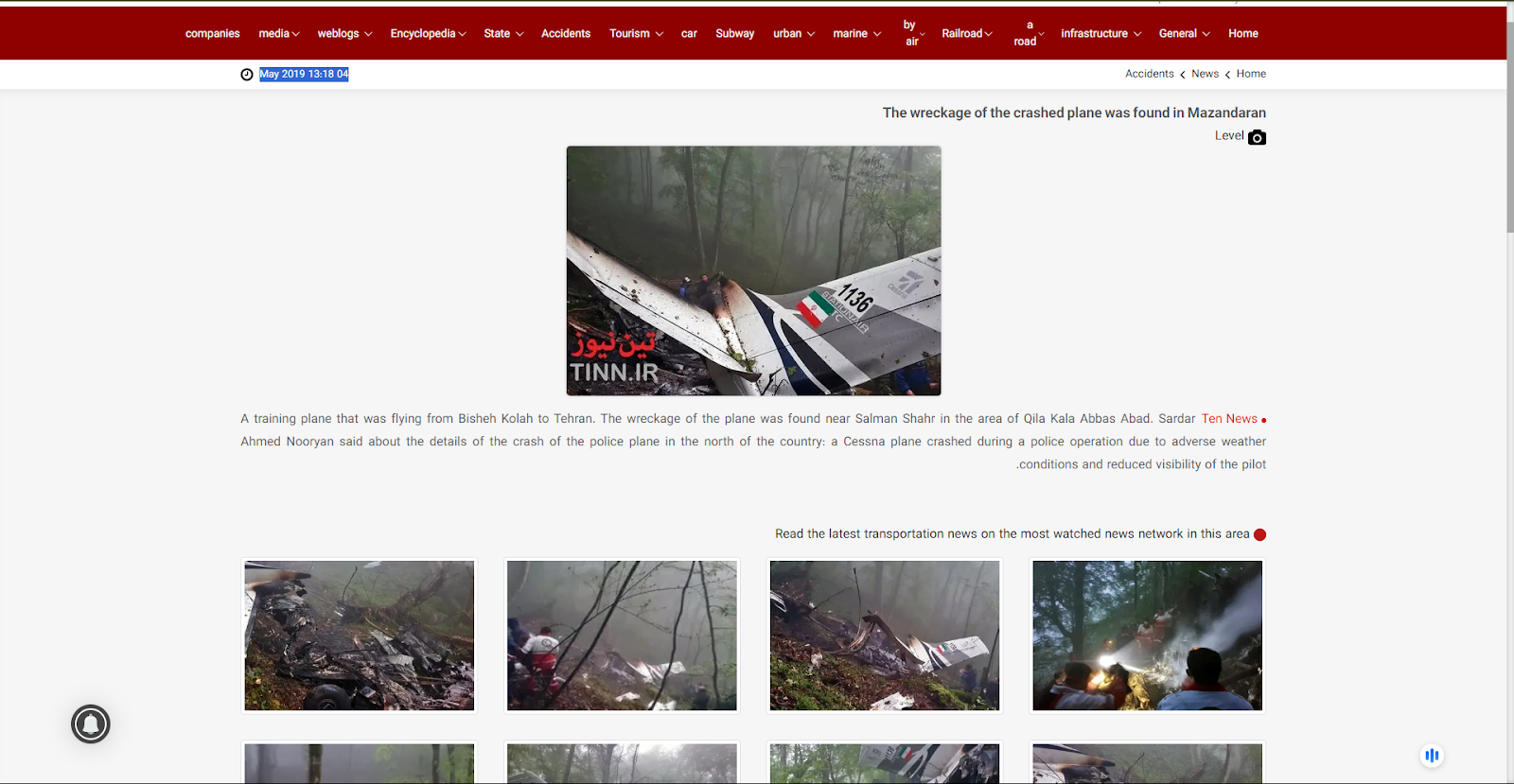

After receiving the posts, we ran a reverse image search on each of the images and found that all of them, except the image showing a blue plane, were linked to a 2020 air crash. We found a website that had uploaded the viral plane crash images on April 22, 2020.

According to the website, a police training plane crashed in the forests of Mazandaran, near Swan Motel. We also found the images on another Iranian news outlet, ‘Ten News’.

The photos on this website were posted in May 2019. The news reads, “A training plane that was flying from Bisheh Kolah to Tehran. The wreckage of the plane was found near Salman Shahr in the area of Qila Kala Abbas Abad.”

Hence, we conclude that the viral photos do not show Iranian President Ebrahim Raisi's helicopter crash; the claim is false and misleading.

Conclusion:

The images being shared on social media as evidence of the helicopter crash involving Iranian President Ebrahim Raisi are misattributed. They actually show the aftermath of a training plane crash that occurred in Mazandaran province in 2019 or 2020 (the exact year is uncertain). This has been confirmed through reverse image searches that traced the images back to their original publication by Rokna Press and Ten News. Consequently, the claim that these images are from the site of President Ebrahim Raisi's helicopter crash is false and misleading.

- Claim: Viral images of Iranian President Raisi's fatal chopper crash.

- Claimed on: X (formerly Twitter), YouTube, Instagram

- Fact Check: Fake & Misleading

Related Blogs

Introduction

With the increasing reliance on digital technologies in the banking industry, cyber threats have become a significant concern. Cyberlaw plays a crucial role in safeguarding the banking sector from cybercrimes and ensuring the security and integrity of financial systems.

The banking industry has witnessed a rapid digital transformation, enabling convenient services and greater access to financial resources. However, this digitalisation also exposes the industry to cyber threats, necessitating the formulation and implementation of effective cyber law frameworks.

Recent Trends in the Banking Industry

Digital Transformation: The banking industry has embraced digital technologies, such as mobile banking, internet banking, and financial apps, to enhance customer experience and operational efficiency.

Open Banking: The concept of open banking has gained prominence, enabling data sharing between banks and third-party service providers, which introduces new cyber risks.

How Cyber Law Helps the Banking Sector

The banking sector and cybercrime are closely intertwined because of the mass digitisation of banking services. Thanks to QR codes, UPI and online banking payments, India now accounts for about 40% of the world's real-time digital payment transactions. Key ways in which cyber law supports the banking sector are as follows:

Data Protection: Cyberlaw requires banks to implement robust data protection measures, including encryption, access controls, and regular security audits, to safeguard customer data.

Incident Response and Reporting: Cyberlaw requires banks to establish incident response plans, promptly report cyber incidents to regulatory authorities, and cooperate in investigations.

Customer Protection: Cyberlaw enforces regulations related to online banking fraud, identity theft, and unauthorised transactions, ensuring that customers are protected from cybercrimes.

Legal Framework: Cyberlaw provides a legal foundation for digitalisation in the banking sector, assuring customers that regulations protect their digital transactions and data.

Cybersecurity Training and Awareness: Cyberlaw encourages banks to conduct regular training programs and create awareness among employees and customers about cyber threats, safe digital practices, and reporting procedures.

RBI Guidelines

The RBI, as India’s central banking institution, has issued comprehensive guidelines to enhance cyber resilience in the banking industry. These guidelines address various aspects, including:

Technology Risk Management

Cyber Security Framework

IT Governance

Cyber Crisis Management Plan

Incident Reporting and Response

Recent Trends in Banking Sector Frauds and the Role of Cyber Law

Phishing Attacks: Cyberlaw helps banks combat phishing attacks by imposing penalties on perpetrators and mandating preventive measures like two-factor authentication.

Insider Threats: Cyberlaw regulations emphasise the need for stringent access controls, employee background checks, and legal consequences for insiders involved in fraudulent activities.

Ransomware Attacks: Cyberlaw frameworks assist banks in dealing with ransomware attacks by enabling legal actions against hackers and promoting preventive measures, such as regular software updates and data backups.

Master Directions on Cyber Resilience and Digital Payment Security Controls for Payment System Operators (PSOs)

The Reserve Bank of India (RBI) has issued draft Master Directions on Cyber Resilience and Digital Payment Security Controls for Payment System Operators (PSOs). The directions set out guidelines and requirements for PSOs to improve the safety and security of their payment systems, with a focus on cyber resilience. PSOs covered by these guidelines include mobile payment service providers such as Paytm and digital wallet platforms.

Here are the highlights:

The Directions aim to improve the safety and security of payment systems operated by PSOs by providing a framework for overall information security preparedness, with an emphasis on cyber resilience.

The Directions apply to all authorised non-bank PSOs.

PSOs must ensure adherence to these Directions by unregulated entities in their digital payments ecosystem, such as payment gateways, third-party service providers, vendors, and merchants.

The PSO’s Board of Directors is responsible for ensuring adequate oversight over information security risks, including cyber risk and cyber resilience. A sub-committee of the Board may be delegated with primary oversight responsibilities.

PSOs must formulate a Board-approved Information Security (IS) policy that covers roles and responsibilities, measures to identify and manage cyber security risks, training and awareness programs, and more.

PSOs should have a distinct Board-approved Cyber Crisis Management Plan (CCMP) to detect, contain, respond to, and recover from cyber threats and attacks.

A senior-level executive, such as a Chief Information Security Officer (CISO), should be responsible for implementing the IS policy and the cyber resilience framework and assessing the overall information security posture of the PSO.

PSOs need to define Key Risk Indicators (KRIs) and Key Performance Indicators (KPIs) to identify potential risk events and assess the effectiveness of security controls. The sub-committee of the Board is responsible for monitoring these indicators.

PSOs should conduct a cyber risk assessment when launching new products, services or technologies, or when making significant changes to existing infrastructure or processes.

PSOs should implement various baseline information security measures and controls, covering inventory management, identity and access management, network security, the application security life cycle, security testing, vendor risk management, data security, the patch and change management life cycle, incident response, business continuity planning, API security, employee awareness and training, and other security measures.

PSOs should ensure that payment transactions involving debit to accounts conducted electronically are permitted only through multi-factor authentication, except where explicitly permitted/relaxed.

Conclusion

The relationship between cyber law and the banking industry is crucial in ensuring a secure and trusted digital environment. Recent trends indicate that cyber threats are evolving and becoming more sophisticated. Compliance with cyber law provisions and adherence to guidelines such as those provided by the RBI is essential for banks to protect themselves and their customers from cybercrimes. By embracing robust cyber law frameworks, the banking industry can foster a resilient ecosystem that enables innovation while safeguarding the interests of all stakeholders, including customers.

Introduction

The world has been riding the wave of technological advancements, and the fruits they have borne have changed our lives. Technology by itself cannot be classified as safe or unsafe; it is the application and use of technology that create threats. It is at times like these that the importance of policy frameworks in cyberspace becomes clear, since any technology can be governed only by means of policies and laws. In this blog, we explore the issues the EU has raised with tech giants and why the Indian Government is probing WhatsApp.

EU on Big Techs

The EU has long been regarded as a strong policymaker for cyberspace, as the scope, extent and compliance requirements of the GDPR show. This data protection regulation is the benchmark for data protection laws worldwide. Apart from the GDPR, the EU has always imposed strict compliance requirements on big tech, since most of these companies originated outside Europe and the EU places the rights of its citizens above anything else.

New Draft Notification

According to the draft of the new notification, Amazon, Google, Microsoft and other non-European Union cloud service providers looking to secure an EU cybersecurity label to handle sensitive data can only do so via a joint venture with an EU-based company. The document adds that the cloud service must be operated and maintained from the EU, all customer data must be stored and processed in the EU, and EU laws take precedence over non-EU laws regarding the cloud service provider. Certified cloud services must be operated only by companies based in the EU, with no entity from outside the EU having effective control over the CSP (cloud service provider), to mitigate the risk of non-EU powers interfering with or undermining EU regulations, norms and values.

This move from the EU is still at the draft stage; however, it is expected to come into force soon, as data breaches affecting EU citizens have been reported on numerous occasions. The document said the tougher rules would apply to personal and non-personal data of particular sensitivity where a breach may have a negative impact on public order, public safety, human life or health, or the protection of intellectual property.

How will it secure the netizens?

Since the EU has been the leading policymaker in cyberspace, its rules and policies are often replicated around the world. Hence this move comes at a critical time, as the EU seeks to safeguard EU netizens and its own cybersecurity industry by allowing EU companies to collaborate with big tech while maintaining compliance. This mechanism can help protect cloud services, which should mean fewer data breaches and, in turn, fewer cybercrimes and attacks.

The Indian Govt on WhatsApp

The Indian Government has decided to probe WhatsApp and its privacy settings. A WhatsApp user tweeted a screenshot showing WhatsApp accessing the phone’s microphone while the phone was not in use and the app was not open, even in the background. The Meta-owned messaging platform has nearly 487 million users in India, making it the platform's biggest market. The 2018 judgement on WhatsApp and its privacy issues was a landmark ruling, but the platform is allegedly in violation of it.

The Minister of State for Electronics and Information Technology, Rajeev Chandrasekhar, has already tweeted that the issue will be looked into and that the platform will face action if it is found to be violating the guidelines. The Digital Personal Data Protection Bill is yet to be tabled in Parliament. Even so, since the draft bill is public, big platforms should already follow its code of conduct so that they remain compliant when the bill becomes an Act.

Threats for Indian Users

Indian WhatsApp users make up the platform's largest user base in the world, yet they remain vulnerable to attacks carried out through WhatsApp and now, potentially, by WhatsApp itself. These users face the following potential threats:

- Data breaches

- Identity theft

- Phishing scams

- Unconsented data utilisation

- Violation of Right to Privacy

- Unauthorised flow of data outside India

- Selling of data to a third party without consent

Indian netizens need to stay wary of these and other issues by practising basic cyber safety and security protocols and by checking the permissions granted to apps, so that they can keep track of their digital footprint.

Conclusion

Whether it’s the EU or the Indian Government, it is important to understand that world powers are all working towards creating a safe and secure cyberspace for their netizens. The EU’s move will act as a catalyst for change at a global level: once the EU enforces the policy, other countries are likely to replicate it to safeguard their cyber interests, assets and netizens. The proactive stance of the Indian Government is a clear sign that things will not remain the same in the Indian cyber ecosystem, and it is up to platforms and companies to ensure compliance, even in the absence of strong legislation for cyberspace. The government is taking all steps to safeguard Indian netizens, a goal at the heart of the new Digital India Bill, which will govern cyberspace in the near future. Until then, to maintain balance, it is important for platforms to comply with the principles of natural justice.

Introduction

In recent years, India has witnessed a significant rise in the popularity and recognition of esports, which refers to competitive video gaming. Esports has emerged as a mainstream phenomenon, influencing players and young people worldwide. In India, with internet penetration at 52%, young people have been increasingly drawn to esports. In this blog post, we look at how the government is boosting players, establishing professional leagues, and supporting gaming companies and sponsors, as the ecosystem continues to rise in prominence and establish itself as a mainstream sporting phenomenon in India.

Factors Shaping Esports in India: Several factors are shaping esports in India and growing its fan base. Let’s have a look.

Technological Advances: The availability and affordability of high-speed internet connections and smart gaming equipment have played an important part in making esports more accessible to a broader audience in India. With the spread of smartphones and low-cost gaming PCs, many people can now easily participate in and watch esports tournaments.

Youth Demographic: India has a large population of young people who are enthusiastic gamers and tech-savvy. The youth demographic’s enthusiasm for gaming has spurred the expansion of esports in the country, as they actively participate in competitive gaming and watch major esports competitions.

Growing Gaming Community: Gaming has become deeply established in Indian society, with many people using it for enjoyment and social contact. As the competitive side of gaming, esports has naturally gained popularity among gamers looking for a more competitive and immersive experience.

Esports Infrastructure and Events: The creation of specialised esports infrastructure, such as esports arenas, gaming cafés, and tournament venues, has considerably aided esports growth in India. Major national and international esports competitions and leagues have also been staged in India, offering exposure and opportunities for prospective esports players. Streaming platforms such as YouTube, Twitch, and Facebook Gaming have also played a vital role in showcasing and popularising esports in India.

Government and Corporate Support: Corporate and government sectors in India have recognised the potential of esports and are actively supporting its growth. Major corporate investments, sponsorships, and collaborations with esports organisations have supplied the financial backing and resources required for the country’s esports development. The government has also taken steps to promote esports, such as forming esports governing bodies and including esports in official sporting events.

Growing Popularity and Recognition: Esports in India has seen a significant surge in viewership and fan base, largely thanks to online streaming platforms such as Twitch and YouTube, which let fans watch live esports events at home in high definition. Social media platforms, meanwhile, let fans interact with their favourite players and stay updated on the latest esports news and events.

Esports Leagues in India

The number of esports tournaments and leagues organised in India has increased, with the IGL being one of the largest and most popular. The ESL India Premiership is a major esports event organised by the Electronic Sports League (ESL) in collaboration with NODWIN Gaming. Viacom18, a well-known Indian media business, established UCypher, an esports league featuring a range of games such as CS:GO, Dota 2, and Tekken, in order to promote esports as a professional sport in India. All of these platforms give professional players a venue to compete and build their profile in the esports industry.

India’s Performance in Esports to Date

Indian esports players have achieved remarkable global success, including outstanding results in prominent events and leagues. The success stories of individual Indian players illustrate their talent, their determination, and India’s ability to flourish in the esports sphere. These accomplishments raise awareness of Indian esports in the worldwide esports landscape and contribute to its growth. Players who have brought pride to India include Tirth Mehta, known as “Ritr”, a Hearthstone player; Abhijeet “Ghatak”; Ankit “V3nom”; and Saloni “Meow16K”. In addition, the Indian women’s team has performed exceptionally well in CS:GO and has reached the final.

Government and Corporate Sector Support: The Indian esports business has received backing from the government and corporate sectors, contributing to its growth and acceptance as a genuine sport.

Government Initiatives: The Indian government has expressed increased support for esports through different initiatives. These involve recognising esports as an official sport, establishing esports regulatory bodies, and incorporating esports into national sports federations. The government has also announced steps to provide financial assistance, subsidies, and infrastructure development for esports, creating a favourable environment for the industry’s growth. Recently, Kalyan Chaubey, joint secretary and acting CEO of the Indian Olympic Association (IOA), personally presented the players with kits of cutting-edge training gear. The kit includes the following:

An advanced gaming mouse

A keyboard built for quick responses

A smooth mousepad

Headphones for crystal-clear communication

An esports bag to carry the equipment

Corporate Sponsorship and Partnerships

Indian corporations have recognised esports’ promise and actively sponsored and collaborated with esports organisations, tournaments, and individual players. Companies from various industries, including technology, telecommunications, and entertainment, have invested in esports to capitalise on its success and connect with the esports community. These sponsorships and collaborations give financial support, resources, and visibility to esports in India. The leagues and championships provide opportunities for young players to showcase their talent.

Challenges and Future

While esports provides great job opportunities, several obstacles must be overcome in order for the industry to expand and gain recognition:

Infrastructure & Training Facilities: Ensuring the availability of high-quality training facilities and infrastructure is critical for developing talent and allowing players to realise their maximum potential. Continued investment in esports venues, training facilities, and academies is critical for the industry’s long-term success.

Skill Development and Education: Fostering a culture of skill development and providing avenues for formal education in esports would improve the professionalism and competitiveness of Indian esports players. Collaborations between educational institutions and esports organisations can result in specialised programs in areas such as game analysis, team management, and sports psychology.

Legal Framework and Governance: Establishing a thorough legal framework and governance structure for esports will help it gain legitimacy as a professional sport. Clear standards on player contracts, player rights, anti-doping procedures, and fair competition policies are all part of this.

Conclusion

Esports in India provides massive professional opportunities and growth possibilities for aspiring esports athletes. The sector’s prospects depend on overcoming infrastructure, perception, talent development, and regulatory barriers. With continued support, investment, and stakeholder collaboration, esports could establish itself as a viable and accepted career option in India.