#FactCheck - AI-Generated Image of Abhishek Bachchan and Aishwarya Rai Falsely Linked to Kedarnath Visit

A photo featuring Bollywood actor Abhishek Bachchan and actress Aishwarya Rai is being widely shared on social media. In the image, the Kedarnath Temple is clearly visible in the background. Users are claiming that the couple recently visited the Kedarnath shrine for darshan.

Cyber Peace Foundation’s research found the viral claim to be false. Our research revealed that the image of Abhishek Bachchan and Aishwarya Rai is not real, but AI-generated, and is being misleadingly shared as a genuine photograph.

Claim

On January 14, 2026, a user on X (formerly Twitter) shared the viral image with a caption suggesting that all rumours had ended and that the couple had restarted their life together. The post further claimed that both actors were seen smiling after a long time, implying that the image was taken during their visit to Kedarnath Temple.

The post has since been widely circulated on social media platforms.

Fact Check

To verify the claim, we first conducted a keyword search on Google related to Abhishek Bachchan, Aishwarya Rai, and a Kedarnath visit. However, we did not find any credible media reports confirming such a visit.

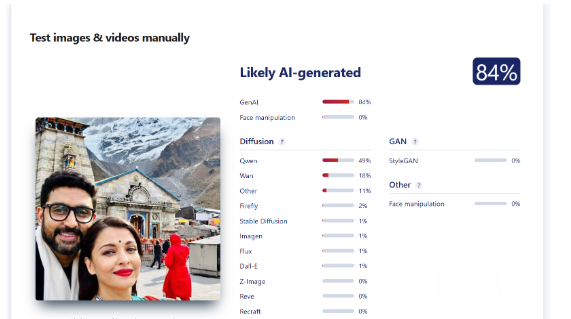

On closely examining the viral image, we noticed several visual inconsistencies that suggested it had been artificially generated. To confirm this, we scanned the image using the AI detection tool Sightengine, which assessed an 84 percent likelihood that the image was AI-generated.

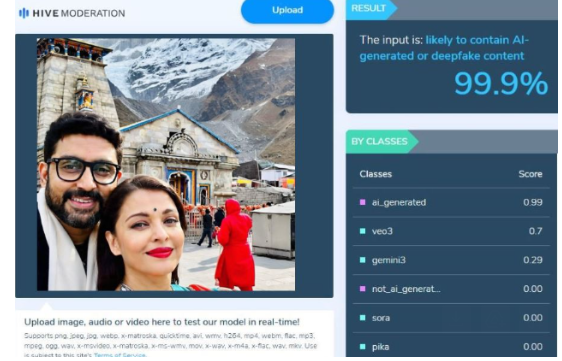

Additionally, we scanned the same image using another AI detection tool, Hive Moderation. Its results gave an even stronger indication, rating the image as 99 percent likely to be AI-generated.

Conclusion

Our research confirms that the viral image showing Abhishek Bachchan and Aishwarya Rai at Kedarnath Temple is not authentic. The picture is AI-generated and is being falsely shared on social media to mislead users.

Related Blogs

Introduction

Data breaches have emerged as one of the most pressing issues in cyberspace. They result in personal data reaching cybercriminals, who misuse it for malicious ends. As netizens, it is our digital responsibility to be cognizant of our own data and that of our organisations. Rising internet and technology penetration has moved people into cyberspace at a rapid pace, and awareness must keep up if netizens' data is to be kept safe. The recent AIIMS cyber breach has left many organisations worried about their cyber safety and security. According to the HIPAA Journal, 66% of healthcare organisations reported being hit by ransomware attacks. Data management and security is a prime concern for clients across industries, and that concern continues to grow. Data is broadly classified into three categories:

- Personally Identifiable Information (PII) - Any representation of information that permits the identity of an individual to whom the information applies to be reasonably inferred by either direct or indirect means.

- Non-Public Personal Information (NPI) - The personal information of an individual that is not, and should not be, available to the public. This includes Social Security Numbers, bank information, other personally identifiable financial information, and certain transactions with financial institutions.

- Material Non-Public Information (MNPI) - Data relating to a company that has not been made public but could affect its share price. It is illegal for holders of material non-public information to use it to their advantage when trading stocks.

This classification allows industry to manage and secure data effectively and efficiently, and it helps users understand how their data is used and how severe a breach of it could be. Organisations process data that combines the above classifications, which makes this distinction critical when a breach occurs. Coming back to the AIIMS data breach, AIIMS is also an educational and research institution. One might therefore assume that an attack on AIIMS could aim either to exfiltrate patient data or to obtain research and development (R&D) data, including research-related intellectual property. If we postulate the latter, other institutes of higher learning such as the IITs, IISc, ISI, IISERs, IIITs, NITs, and major state universities could also be targeted. In 2021, the Ministry of Home Affairs, through the Ministry of Education, sent a directive to the IITs and many other institutes to adopt certain cyber security measures and to create SOPs for efficient data management practices. The following sectors are critical in terms of data protection:

- Health sector

- Financial sector

- Education sector

- Automobile sector

These sectors are frequently targeted by bad actors, and data breached from them often fuels cyber crime, as it soon surfaces on the dark web. These institutions need to practise compliance like any corporate house, because the end user here is the netizen, whose data deserves the utmost protection. Organisations today need to keep pace with advances in cyberspace, identify the shortcomings and vulnerabilities they may face, and create safeguards accordingly. The AIIMS breach is an example to learn from so that other organisations can be protected from similar cyber attacks. To demonstrate a strong cyber security posture, every organisation should be able to answer these questions:

- Do you have a centralized cyber asset inventory?

- Do you have human resources trained in cyber threat modelling and cyber risk assessment?

- Have you ever undertaken a business continuity and resilience study of your institutional digitalized business processes?

- Do you have a formal vulnerability management system that enumerates vulnerabilities in your cyber assets and a patch management system that patches freshly discovered vulnerabilities?

- Do you have a formal configuration assessment and management system that checks the configuration of all your cyber assets and security tools (firewalls, antivirus management, proxy services) regularly to ensure they are most securely configured?

- Do you have a segmented network, such that your most critical assets (servers, databases, HPC resources, etc.) sit on a separate, access-controlled network that only people with proper permission can access?

- Do you have a cyber security policy that spells out the policies regarding the usage of cyber assets, protection of cyber assets, monitoring of cyber assets, authentication and access control policies, and asset lifecycle management strategies?

- Do you have a business continuity and cyber crisis management plan in place which is regularly exercised like fire drills so that in cases of exigencies such plans can easily be followed, and all stakeholders are properly trained to do their part during such emergencies?

- Do you have multi-factor authentication for all users implemented?

- Do you have a supply chain security policy for applications that are supplied by vendors? Do you have a vendor access policy that disallows providing network access to vendors for configuration, updates, etc?

- Do you have regular penetration testing of the cyberinfrastructure of the organization with proper red-teaming?

- Do you have a bug-bounty program for students who could report vulnerabilities they discover in your cyber infrastructure and get rewarded?

- Do you have an endpoint security monitoring tool mandatory for all critical endpoints such as database servers, application servers, and other important cyber assets?

- Do you have a continuous network monitoring and alert generation tool installed?

- Do you have a comprehensive cyber security strategy that is reflected in your cyber security policy document?

- Do you regularly receive updates on cyber security incidents (including low-, medium-, or high-severity incidents, network scanning, etc.) from your cyber security team, so that top management is aware of the situation on the ground?

- Do you have regular cyber security skills training for your cyber security team and your IT/OT engineers and employees?

- Does your top management show adequate support and hold the cyber security team accountable on a regular basis?

- Do you have a proper and vetted backup and restoration policy and practice?

If an organisation has definite answers to these questions, it is safe to say that it has strong cyber security. These questions should not be taken as a basis for comparison but as a checklist that organisations can use to stay up to date with the technical measures and policies related to cyber security. A strong cyber security posture does not drive cyber security risk to zero, but it reduces the risk and improves the organisation's chances of withstanding an attack. Further, if a proper risk assessment is carried out regularly and high-risk cyber assets are properly protected, the damage resulting from cyber attacks can be contained to a large extent.

Introduction

“an intermediary, on whose computer resource the information is stored, hosted or published, upon receiving actual knowledge in the form of an order by a court of competent jurisdiction or on being notified by the Appropriate Government or its agency under clause (b) of sub-section (3) of section 79 of the Act, shall not host, store or publish any unlawful information, which is prohibited under any law for the time being in force in relation to the interest of the sovereignty and integrity of India; security of the State; friendly relations with foreign States; public order; decency or morality; in relation to contempt of court; defamation; incitement to an offence relating to the above, or any information which is prohibited under any law for the time being in force”

Law grows by confronting its absences; it heals itself through its own gaps. The most recent notification from MeitY, G.S.R. 775(E) dated October 22, 2025, illustrates that self-correction. The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Amendment Rules, 2025, will come into effect on November 15, 2025. They accomplish two crucial things: they restrict who can trigger “actual knowledge” to initiate takedowns, and they require senior-level scrutiny of those directives. In doing so, they preserve genuine security requirements while steering India’s content governance system towards more transparent due process.

When Regulation Learns Restraint

To understand the jurisprudence of revision, one must first recognise that regulation, in its truest form, must know when to pause. The 2025 amendment marks that rare moment when the government chooses precision over power, when regulation learns restraint. The amendment revises Rule 3(1)(d) of the 2021 Rules. Social media sites, hosting companies, and other digital intermediaries are still required to act within 36 hours of receiving “actual knowledge” that a piece of content is illegal (e.g., that it poses a threat to public order, sovereignty, decency, or morality). However, “actual knowledge” now arises only in the following situations:

(i) a court order from a court of competent jurisdiction, or

(ii) a reasoned written intimation from a duly authorised government officer not below the rank of Joint Secretary (or equivalent).

Where the intimation comes from the police, the authorised officer “must not be below the rank of Deputy Inspector General of Police (DIG)”. This creates a well-defined, senior-accountable channel in place of a diffuse trigger.

The amendment adds two further structural guardrails. First, the Rules establish a monthly review of all takedown notifications by a Secretary-level officer of the relevant government, to test their necessity, proportionality, and consistency with Section 79(3)(b) of the IT Act, the provision that governs when intermediaries lose safe harbour. Second, so that platforms act precisely rather than expansively, takedown requests must be accompanied by a legal justification, a description of the illegal act, and the precise URLs or identifiers of the content. Together, these guardrails ensure that each removal carries a proportionality check and a paper trail.

Due Process as the Law’s Conscience

Indian jurisprudence has been debating what constitutes “actual knowledge” for over a decade. The Supreme Court in Shreya Singhal v. Union of India (2015) tied an intermediary’s removal obligation to court orders or notifications from official channels rather than vague notice. Over time, however, that line blurred because of enforcement practices and some court rulings, raising concerns about over-removal and loss of safe harbour under Section 79(3). Even as more recent decisions scrutinised the “reasonable efforts” expected of intermediaries, the 2025 amendment institutionally pays homage to Shreya Singhal’s ethos by refocusing “actual knowledge” on formal, reviewable communications from senior state actors or judges.

The amendment also introduces an internal constitutionalism into executive orders by mandating monthly audits at the Secretary level. The state must re-justify its own orders on a rolling basis, evaluating them against necessity and proportionality, criteria that Indian courts increasingly demand for speech restrictions. For intermediaries, the results are clearer triggers, better logs, and fewer of the vague “please remove” communications that previously left compliance teams in legal limbo.

The Court’s Echo in the Amendment

The essence of this amendment is echoed in the Karnataka High Court’s ruling that the Sahyog Portal, a government portal used to coordinate takedown orders under Section 79(3)(b), is constitutional. In September, the High Court dismissed X’s (formerly Twitter’s) petition contesting the legitimacy of the portal. X had claimed that, by giving nodal officers the authority to issue takedown orders without court review, the portal permitted arbitrary content removals. The court disagreed, holding that the officers’ acts were in accordance with Section 79(3)(b) and were “not dropping from the air but emanating from statutes.” By conforming to the Sahyog Portal verdict, the amendment turns compliance into conscience, reiterating that due process is the moral grammar of governance rather than a mere formality.

Conclusion: The Necessary Restlessness of Law

Law cannot afford stillness; it survives through self-doubt and reinvention. The 2025 amendment, too, is not a destination; it is a pause before the next question, a reminder that justice breathes through revision. As befits a constitutional democracy, India’s path to content governance has been combative and iterative. The stays, split judgments, and strike-downs produced by strategic litigation over the IT Rules, safe harbour, government fact-checking, and blocking orders have sharpened the next rule-making cycle. The 2025 amendment reflects the lessons learnt: review triumphs over opacity, specificity triumphs over vagueness, and due process triumphs over discretion. This is how a digital republic balances freedom and force.

Sources

- https://pressnews.in/law-and-justice/government-notifies-amendments-to-it-rules-2025-strengthening-intermediary-obligations/

- https://www.meity.gov.in/static/uploads/2025/10/90dedea70a3fdfe6d58efb55b95b4109.pdf

- https://www.pib.gov.in/PressReleasePage.aspx?PRID=2181719

- https://www.scobserver.in/journal/x-relies-on-shreya-singhal-in-arbitrary-content-blocking-case-in-karnataka-hc/

- https://www.medianama.com/2025/10/223-content-takedown-rules-online-platforms-36-hr-deadline-officer-rank/

Introduction

Prebunking is a technique that shifts the focus away from directly challenging falsehoods, or telling people what to believe, towards understanding how people are manipulated and misled online in the first place. It is a growing field of research that aims to help people resist persuasion by misinformation. Prebunking, or "attitudinal inoculation," teaches people to spot and resist manipulative messages before they encounter them. The crux of the approach is to take a step back and nip the problem in the bud by deepening our understanding of it, instead of designing redressal mechanisms to tackle it after the fact. Studies have found it effective in helping a wide range of people build resilience to misleading information.

Prebunking is a psychological strategy for countering the effect of misinformation with the goal of assisting individuals in identifying and resisting deceptive content, hence increasing resilience against future misinformation. Online manipulation is a complex issue, and multiple approaches are needed to curb its worst effects. Prebunking provides an opportunity to get ahead of online manipulation, providing a layer of protection before individuals encounter malicious content. Prebunking aids individuals in discerning and refuting misleading arguments, thus enabling them to resist a variety of online manipulations.

Prebunking builds mental defenses against misinformation by providing warnings and counterarguments before people encounter malicious content. Inoculating people against false or misleading information is a powerful and effective way to build trust and understanding, along with a personal capacity for discernment and fact-checking. Prebunking helps people separate facts from myths by encouraging them to think in terms of ‘how you know what you know’ and to value consensus-building. It uses examples and case studies to explain the types and risks of misinformation, so that individuals can apply these lessons to reject false claims and manipulation in the future.

How Prebunking Helps Individuals Spot Manipulative Messages

Prebunking helps individuals identify manipulative messages by giving them the tools and knowledge to recognise common techniques used to spread misinformation. Successful prebunking strategies include:

- Warnings: alerting people in advance that they may encounter attempts to manipulate them.

- Preemptive Refutation: explaining the narrative or technique and how a particular piece of information is manipulative in structure. Inoculation messages typically include two or three counterarguments and their refutations. An effective rebuttal equips the viewer with the skills to counter erroneous or misleading information they may encounter in the future.

- Micro-dosing: exposure to a weakened, harmless example of misinformation.

All of these alert individuals to potential manipulation attempts. Prebunking also offers weakened examples of misinformation, allowing individuals to practise identifying deceptive content. It activates mental defenses, preparing individuals to resist persuasion attempts. Misinformation can exploit cognitive biases: people tend to put a lot of faith in things they have heard repeatedly, a tendency that malicious actors exploit by flooding the internet with their claims so that familiarity lends them legitimacy. The prebunking technique helps create resilience against misinformation and protects our minds from its harmful effects.

Prebunking essentially helps people control the information they consume by teaching them how to discern between accurate and deceptive content. It enables one to develop critical thinking skills, evaluate sources adequately and identify red flags. By incorporating these components and strategies, prebunking enhances the ability to spot manipulative messages, resist deceptive narratives, and make informed decisions when navigating the very dynamic and complex information landscape online.

CyberPeace Policy Recommendations

- Preventing and fighting misinformation requires joint efforts among different stakeholders. The government and policymakers should sponsor prebunking initiatives and information literacy programmes to counter misinformation and adopt systematic approaches. Regulatory frameworks should encourage accountability in the dissemination of online information across platforms. Collaboration with educational institutions, technology companies, and civil society organisations can help implement prebunking techniques in a variety of areas.

- Higher education institutions should support prebunking and media literacy, offer professional development opportunities for educators and scholars, and work with academics and professionals on the subject of misinformation, producing research studies on its grey areas and challenges.

- Technology companies and social media platforms should improve algorithmic transparency, create user-friendly tools and resources, and work with fact-checking organisations to incorporate fact-check labels and tools.

- Civil society organisations and NGOs should promote digital literacy campaigns to spread awareness on misinformation and teach prebunking strategies and critical information evaluation. Training programmes should be available to help people recognise and resist deceptive information using prebunking tactics. Advocacy efforts should support legislation or guidelines that support and encourage prebunking efforts and promote media literacy as a basic skill in the digital landscape.

- Media outlets and journalists, in print and on social media, should uphold high journalistic standards and fact-check information to ensure its accuracy before release. Collaboration with prebunking professionals, cyber security experts, researchers, and advocacy analysts can produce instructional content and initiatives that promote media literacy, prebunking strategies, and misinformation awareness.

Final Words

The World Economic Forum's Global Risks Report 2024 identifies misinformation and disinformation as the most severe global risk over the next two years. Misinformation and disinformation are rampant in today’s digital-first reality, and the ever-growing popularity of social media will only compound the challenge. It is imperative for all netizens and stakeholders to adopt proactive approaches to counter the growing problem of misinformation. Prebunking is a powerful problem-solving tool in this regard because it aims at ‘protection through prevention’ instead of limiting the strategy to harm reduction and redressal. We can draw parallels with the concept of vaccination or inoculation, which reduces the probability of infection. Prebunking exposes us to a weakened form of misinformation and provides ways to identify it, reducing the chance that false information takes root in our psyches.

The most compelling attribute of this approach is that the focus is not only on preventing damage but also creating widespread ownership and citizen participation in the problem-solving process. Every empowered individual creates an additional layer of protection against the scourge of misinformation, not only making safer choices for themselves but also lowering the risk of spreading false claims to others.

References

- [1] https://www3.weforum.org/docs/WEF_The_Global_Risks_Report_2024.pdf

- [2] https://prebunking.withgoogle.com/docs/A_Practical_Guide_to_Prebunking_Misinformation.pdf

- [3] https://ijoc.org/index.php/ijoc/article/viewFile/17634/3565