#FactCheck - Viral Video Claiming to Show Kashmir Avalanche Is AI-Generated

Executive Summary

A video is being shared on social media claiming to show an avalanche in Kashmir. The caption of the post alleges that the incident occurred on February 6. Several users sharing the video are also urging people to avoid unnecessary travel to hilly regions. CyberPeace’s research found that the video being shared as footage of a Kashmir avalanche is not real. The research revealed that the viral video is AI-generated.

Claim

The video is circulating widely on social media platforms, particularly Instagram, with users claiming it shows an avalanche in Kashmir on February 6. The archived version of the post can be accessed here. Similar posts were also found online. (Links and archived links provided)

Fact Check:

To verify the claim, we searched relevant keywords on Google. During this process, we found a video posted on the official Instagram account of the BBC. The BBC post reported that an avalanche occurred near a resort in Sonamarg, Kashmir, on January 27. However, the BBC post does not contain the viral video that is being shared on social media, indicating that the circulating clip is unrelated to the real incident.

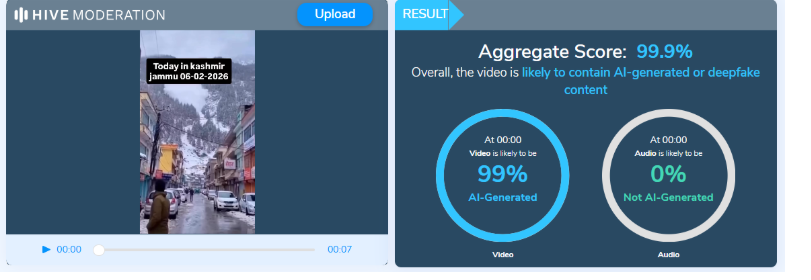

A close examination of the viral video revealed several inconsistencies. For instance, during the alleged avalanche, people present at the site are not seen panicking, running for cover, or moving toward safer locations. Additionally, the movement and flow of the falling snow appear unnatural. Such visual anomalies are commonly observed in videos generated using artificial intelligence. As part of the research, the video was analyzed using the AI detection tool Hive Moderation. The tool indicated a 99.9% probability that the video was AI-generated.

Conclusion

Based on the evidence gathered during our research, it is clear that the video being shared as footage of a Kashmir avalanche is not genuine. The clip is AI-generated and misleading. The viral claim is therefore false.

Related Blogs

Executive Summary:

A video circulating on social media falsely claims to show Indian Air Chief Marshal AP Singh admitting that India lost six jets and a Heron drone during Operation Sindoor in May 2025. Our research found that the footage was digitally manipulated by inserting an AI-generated voice clone of Air Chief Marshal Singh into his recent speech, which was streamed live on August 9, 2025.

Claim:

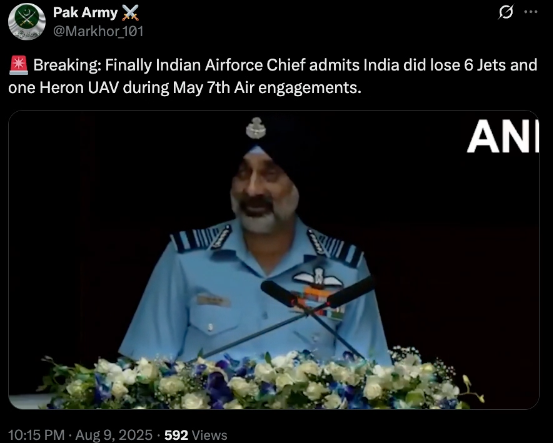

A viral video (archived video) (another link) was shared by an X user with the caption “Breaking: Finally Indian Airforce Chief admits India did lose 6 Jets and one Heron UAV during May 7th Air engagements.” The post claims that the video shows the Air Chief Marshal admitting these losses during Operation Sindoor.

Fact Check:

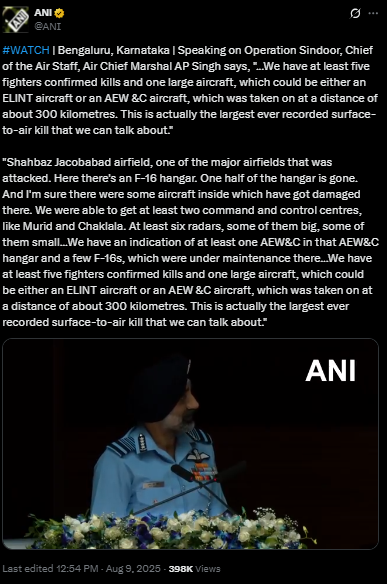

By conducting a reverse image search on key frames from the video, we found a clip posted by the official ANI X handle. After watching the full clip, we did not find any mention of the alleged claim.

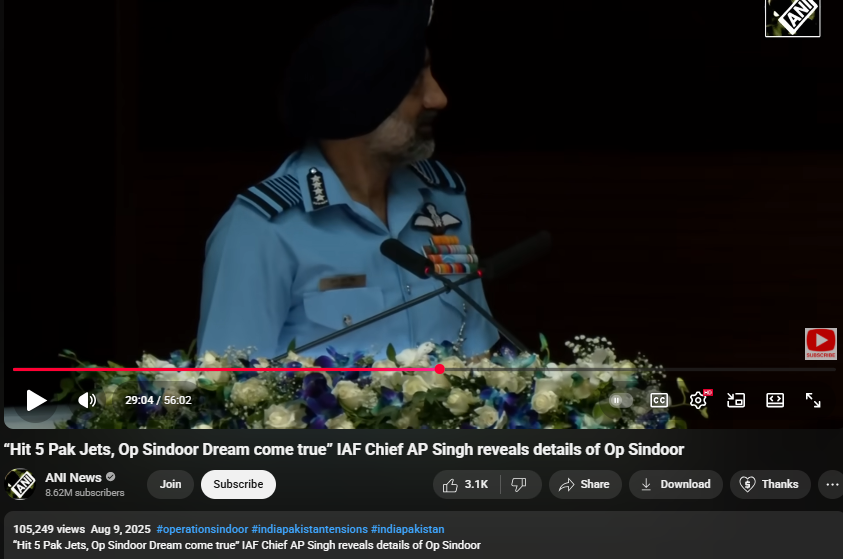

On further research, we found an extended version of the video on the official YouTube channel of ANI, published on 9th August 2025. At the 16th Air Chief Marshal L.M. Katre Memorial Lecture in Marathahalli, Bengaluru, Air Chief Marshal AP Singh did not mention any loss of six jets or a drone in relation to the conflict with Pakistan. The discrepancies observed in the viral clip suggest that portions of the audio may have been digitally manipulated.

The audio in the viral video, particularly the segment at the 29:05 minute mark alleging the loss of six Indian jets, appeared to be manipulated and displayed noticeable inconsistencies in tone and clarity.

Conclusion:

The viral video claiming that Air Chief Marshal AP Singh admitted to the loss of six jets and a Heron UAV during Operation Sindoor is misleading. A reverse image search traced the footage to a clip posted by ANI, in which no such remarks were made. Further, an extended version on ANI’s official YouTube channel confirmed that, during the 16th Air Chief Marshal L.M. Katre Memorial Lecture, no reference was made to the alleged losses. Additionally, the viral video’s audio, particularly around the 29:05 mark, showed signs of manipulation with noticeable inconsistencies in tone and clarity.

- Claim: Viral Video Claiming IAF Chief Acknowledged Loss of Jets Found Manipulated

- Claimed On: Social Media

- Fact Check: False and Misleading

Artificial Intelligence (AI) provides a wide range of services and continues to attract interest and experimentation. It has altered how we create and consume content. Specific prompts can now be used to create desired images, enhancing storytelling and even education. However, as this content can influence public perception, its potential to spread misinformation must be noted as well. The realistic nature of the images can make it hard for the untrained eye to identify them as artificially generated. As AI produces output by drawing on the data it was previously trained on, its lack of contextual knowledge and the human biases introduced while framing prompts also come into play. The stakes are higher when dealing with subjects such as history, as there is a fine line between creating content purely for entertainment and spreading misinformation through unchecked biases and a lack of veracity. For instance, an AI-generated image of London during the Black Death might include inaccurate details, misleading viewers about the past.

The Rise of AI-Generated Historical Images as Entertainment

Recently, AI-generated images and videos of various historical events, shown from the point of view of the people present, have been circulating all over the internet. Some of them depict the streets of London during the Black Death in the 1300s and the eruption of Mount Vesuvius at Pompeii. Hogne and Dan, two creators who operate accounts named POV Lab and Time Traveller POV on TikTok, state that they create such videos because they feel that seeing the past through a first-person perspective is an interesting way to bring history back to life while highlighting the cool parts, helping the audience learn something new. Mostly sensationalised for visual impact and storytelling, such content has been called out by historians for inconsistencies in details particular to the time. Presently, artists admit that their creations are inaccurate, describing them as more of an artistic interpretation than fact-checked documentaries.

It is important to note that AI models may inaccurately depict objects (issues with lateral inversion), people (anatomical implausibilities), or scenes due to "present-ist" bias. As noted by Lauren Tilton, an associate professor of digital humanities at the University of Richmond, many AI models primarily rely on data from the last 15 years, making them prone to modern-day distortions, especially when analysing and creating historical content. The idea is to spark interest rather than replace genuine historical facts, and it is assumed that engagement with these images and videos is partly a product of the fascination with upcoming AI tools. Apart from this, there are also chatbots like Hello History and Character.ai, which simulate interactions with historical figures and have piqued curiosity.

Although it makes for an interesting perspective, one cannot ignore that our inherent biases play a role in how we perceive the information presented. Dangerous consequences include feeding into conspiracy theories and the erasure of facts, as information is geared particularly toward garnering attention and providing entertainment. Furthermore, the exposure of an impressionable audience with a shorter attention span to such content increases the gravity of the matter. In such cases, information regarding the sources used for creation becomes an important factor.

Acknowledging the risks posed by AI-generated images and their potential to create misinformation, the Government of Spain has taken a step toward regulating AI-generated content. It has passed a bill that mandates the labelling of AI-generated images; failure to comply would expose companies to massive fines (up to $38 million or 7% of turnover). The idea is to ensure that content creators label their content, which would help people distinguish artificially created images from those that are not.

The Way Forward: Navigating AI and Misinformation

While AI-generated images make for exciting possibilities for storytelling and enabling intrigue, their potential to spread misinformation should not be overlooked. To address these challenges, certain measures should be encouraged.

- Media Literacy and Awareness – In this day and age critical thinking and media literacy among consumers of content is imperative. Awareness, understanding, and access to tools that aid in detecting AI-generated content can prove to be helpful.

- AI Transparency and Labeling – Implementing regulations similar to Spain’s bill on labelling content could be a guiding crutch for people who have yet to learn to tell apart AI-generated content from others.

- Ethical AI Development – AI developers must prioritize ethical considerations in training using diverse and historically accurate datasets and sources which would minimise biases.

As AI continues to evolve, balancing innovation with responsibility is essential. By taking proactive measures in the early stages, we can harness AI's potential while safeguarding the integrity and trust of the sources while generating images.

References:

- https://www.npr.org/2023/06/07/1180768459/how-to-identify-ai-generated-deepfake-images

- https://www.nbcnews.com/tech/tech-news/ai-image-misinformation-surged-google-research-finds-rcna154333

- https://www.bbc.com/news/articles/cy87076pdw3o

- https://newskarnataka.com/technology/government-releases-guide-to-help-citizens-identify-ai-generated-images/21052024/

- https://www.technologyreview.com/2023/04/11/1071104/ai-helping-historians-analyze-past/

- https://www.psypost.org/ai-models-struggle-with-expert-level-global-history-knowledge/

- https://www.youtube.com/watch?v=M65IYIWlqes&t=2597s

- https://www.vice.com/en/article/people-are-creating-records-of-fake-historical-events-using-ai/

- https://www.reuters.com/technology/artificial-intelligence/spain-impose-massive-fines-not-labelling-ai-generated-content-2025-03-11/

- https://www.theguardian.com/film/2024/sep/13/documentary-ai-guidelines

Introduction

Cyber attacks are becoming increasingly common and more sophisticated around the world. India's telecom operator BSNL has allegedly suffered a data breach. Reportedly, hackers managed to steal sensitive information of BSNL customers, and the same is now available for sale on the dark web. The leaked information includes names, email addresses, billing details, contact numbers, and outgoing call records of BSNL customers. Victims include both BSNL fibre and landline users. The threat actor, using the alias 'Perell', has released a sample data set on a dark web forum. The data set contains 32,000 lines of leaked information, and the threat actor has claimed that the total number of lines across all databases amounts to approximately 2.9 million.

The Persistent Threat to Digital Fortresses

As we plunge into the abyssal planes of the internet, where the shadowy tendrils of cyberspace stretch out like the countless arms of some digital leviathan, we find ourselves facing a stark and chilling revelation. At its murky depths lurks the dark web, a term that brings forth images of a clandestine digital netherworld where anonymity reigns supreme and the conventional rules of law struggle to cast their net. It is here, in this murky digital landscape, where the latest trophy of cyber larceny has been flagrantly displayed — the plundered data of Bharat Sanchar Nigam Ltd (BSNL), India's state-owned telecommunications colossus.

This latest breach serves not simply as a singular incident in the tapestry of cyber incursions but as a profound reminder of the enduring fragility of our digital bastions against the onslaught wielded by the ever-belligerent adversaries in cyberspace.

The Breach

Tracing the genesis of this worrisome event, we find a disconcerting story unfold. It began to surface when a threat actor, shrouded in the mystique of the digital shadows and brandishing the enigmatic alias 'Perell,' announced their triumph on the dark web. This self-styled cyber gladiator took to the encrypted recesses of this hidden domain with bravado, professing to have extracted 'critical information' from the inner sanctum of BSNL's voluminous databases. It is from these very vaults that the most sensitive details of the company's fibre network and landline customers originate.

A portion of the looted data, a mere fragment of a more extensive and damning corpus, was brandished like a nefariously obtained banner for all to see on the dark web. It was an ostentatious display, a teaser intended to tantalize and terrify — approximately 32,000 lines of data, a hint of the reportedly vast 2.9 million lines of data that 'Perell' claimed to have sequestered in their digital domain. The significance of this compromised information cannot be overstated; it is not mere bytes and bits strewn about in the cyber-wind. It constitutes the very essence of countless individuals, an amalgamation of email addresses, billing histories, contact numbers, and a myriad of other intimate details that, if weaponized, could set the stage for heinous acts of identity theft, insidious financial fraud, and precisely sculpted phishing schemes.

Ramifications

The ramifications of such a breach extend far beyond individual concerns of privacy invasion. This event signifies an alarming clarion call highlighting the susceptibility of our digital identities. In an era where the strands of our daily lives are ever more entwined with the World Wide Web, such penetrations are not merely an affront to corporate entities; they are a direct assault on the individual's inherent right to security and the implicit trust placed in the institutions that profess to shield their most private information.

Ripples of concern have emanated throughout the cybersecurity community, prompting urgent action from CERT-In, India's cyber security sentinel. Upon notification of this digital transgression, alarms were sounded, and yet, in a disconcerting turn, BSNL has remained enigmatic, adopting a silence that seems to belie the gravity of the situation. This reticence stands in contrast to the urgent need for open dialogue and transparency; it is on the anvil of these principles that the foundations of trust are laid and sustained.

Conclusion

The narrative of the BSNL data breach transcends a singular tale of digital larceny or vulnerability; it unfolds as an insistent call to action, demanding a unified and proactive response to the perpetually morphing threat landscape that haunts our technologically dependent world. It is an uncomfortable reminder that in the intricately woven web of our online existence, we each stand as potential targets with our personal data held precariously as the coveted prize for those shadow-walkers and data marauders who dwell in the secretive realms of the internet's darkest corners.