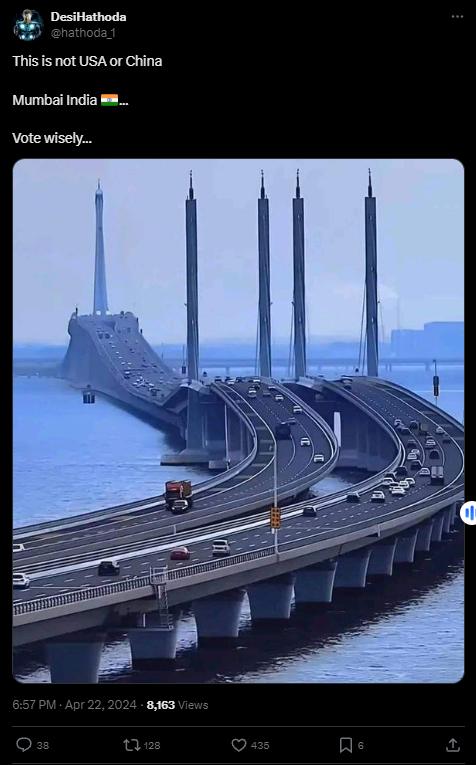

#FactCheck - Viral Image of Bridge Claimed to Be in Mumbai Is Actually Located in Qingdao, China

Executive Summary:

A photograph circulating on social media, allegedly showing a bridge in Mumbai, India, has been found to be falsely attributed. We ran reverse image searches, examined similar videos, and compared the image with reputable news sources and Google Images. These checks showed that the bridge in the viral photo is the Qingdao Jiaozhou Bay Bridge in Qingdao, China. Multiple pieces of evidence, including matching architectural features and corroborating videos, confirm that the bridge is not in Mumbai. No credible reports or sources indicate that a similar bridge exists in Mumbai.

Claims:

Social media users claim that the viral image shows a bridge in Mumbai.

Fact Check:

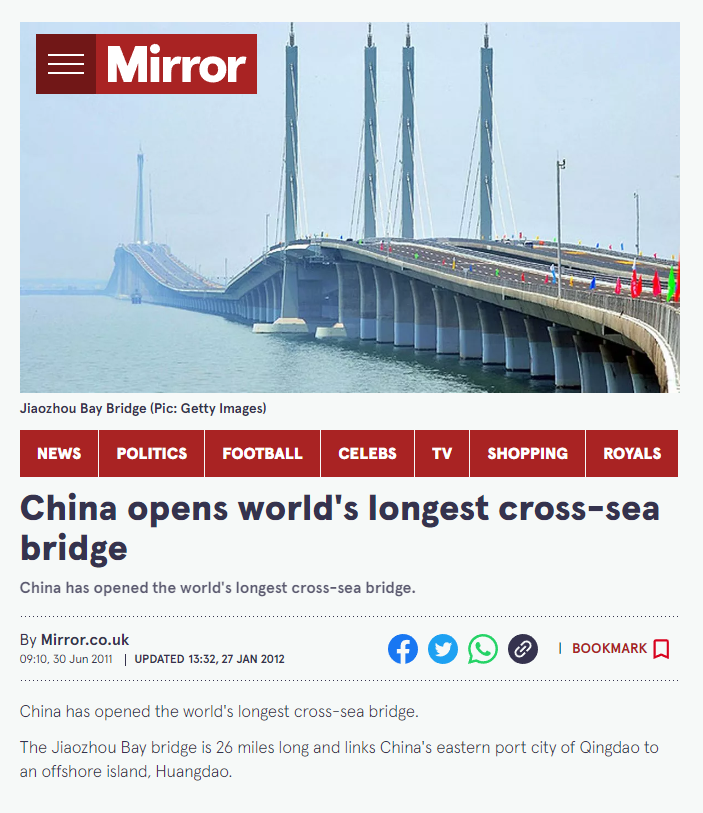

After receiving the image, we ran a reverse image search to find any leads or related information. We found an image published by the media outlet Mirror News. That match was not conclusive on its own. However, it shows the same upper pillars and foundation pillars as the viral image, in the same white colour.

The bridge is the Jiaozhou Bay Bridge in China, which connects the eastern port city of Qingdao to the offshore island of Huangdao.

Taking a cue from this, we searched for the bridge to find other related images or videos. We found a YouTube video uploaded by a channel named xuxiaopang that shows similar structures, such as the pillars and the road design.

The reverse image search also surfaced another news article about the same bridge in China. The bridge in that article closely resembles the one in the viral image.

We found no evidence or credible sources reporting the opening of a similar bridge in Mumbai. After a thorough investigation, we concluded that the claim made with the viral image is false and misleading. The bridge is located in China, not in Mumbai.

Conclusion:

Our fact-check found that the claim that the viral image shows a bridge in Mumbai, India, is false. The bridge in the picture is the Qingdao Jiaozhou Bay Bridge, located in Qingdao, China. Several sources confirm this, including reverse image searches, videos and reliable news outlets. No evidence suggests that a similar bridge exists in Mumbai. The claim is therefore false: the bridge is in China, not in Mumbai.

- Claim: The bridge seen in the popular social media posts is in Mumbai.

- Claimed on: X (formerly Twitter), Facebook

- Fact Check: Fake & Misleading

Related Blogs


Digitisation in Agriculture

Agriculture has undergone massive digitisation in recent years, and many agricultural processes are now linked to the Internet. This global transformation is driven by smart technology. It uses sensors, IoT devices and data analytics to optimise and automate labour-intensive farming practices. Farmers in India and abroad now use real-time data to monitor soil conditions, weather patterns and crop health, which enables precise resource management and improved yields. Smart technology in agriculture not only enhances productivity but also promotes sustainable practices by reducing waste and conserving resources. As a result, the agricultural sector is becoming more efficient, more resilient and better able to meet the growing global demand for food.

Digitisation of Food Supply Chains

Food supply chains across the globe are also increasingly being digitised. Digitisation lets both suppliers and consumers track each stage of food processing from farm to table, and it helps ensure the authenticity of food products. The latest generation of agricultural robots is being tested to minimise human intervention. AI-run processes are thought to be able to mitigate labour shortages, improve warehousing and storage, and make transportation more efficient. They would do this by running continuous evaluations and adjusting conditions in real time, while also increasing yield. The company Muddy Machines is currently trialling an autonomous asparagus-harvesting robot called Sprout. Sprout addresses labour shortages and also harvests green asparagus selectively, a crop that traditionally requires careful picking. However, Chris Chavasse, co-founder of Muddy Machines, warns that hackers and malicious actors could break into the robot's servers and disable it, for example by driving it into a ditch or a hedge. This would impede core crop activities like seeding and harvesting. Hacking agricultural machinery can also damage a farmer's produce and, in turn, their profitability for the season.

Case Study: Muddy Machines and Cybersecurity Risks

A cyber attack on digitised agricultural processes has a cascading impact on online food supply chains. The risks spill over into poor protection of cargo in transit, increased manufacturing of counterfeit products, manipulation of data, poor warehousing facilities and product-specific fraud, among others. Suppliers are also affected when they deliver food products but do not receive payment. These cyber-threats include malware (primarily ransomware), which accounts for 38% of attacks, and Internet of Things (IoT) attacks, which account for 29%. Other threats include Distributed Denial of Service (DDoS) attacks, SQL injections and phishing attacks.

Prominent Cyber Attacks and Their Impacts

Ransomware attacks are the most common form of cyber threat to food supply chains. They can involve malicious contamination and the deliberate damage or destruction of tangible assets (like infrastructure) or intangible assets (like reputation and brand). In 2017, NotPetya malware disrupted the logistics giant Maersk and destroyed its end-user devices across more than 60 countries. NotPetya was also linked to the malfunction of freezers connected to control systems. The attack compromised these control systems, causing freezer failures and potential food spoilage, which highlights how vulnerable industrial control systems are to cyber threats.

Further Case Studies

NotPetya also impacted Mondelez, the maker of Oreos, by disrupting its email systems, file access and logistics for weeks. Mondelez's insurance claim was also denied because the NotPetya attack was described as a “war-like” action, which fell outside the purview of its insurance coverage.

In April 2021, over the Easter weekend, Bakker Logistiek experienced a ransomware attack. Bakker Logistiek is a Netherlands-based logistics company that offers air-conditioned warehousing and food transportation for Dutch supermarkets. The incident disrupted its supply chain for several days and left shelves empty at Albert Heijn supermarkets, particularly for products such as packed and grated cheese. Despite the severity of the attack, the company restored its operations within a week using backups.

In the same year, JBS, one of the world's biggest meat processing companies, paid $11 million in ransom in Bitcoin to resolve a cyber attack. Its computer networks had been hacked, which temporarily shut down operations and endangered consumer data. The disruption threatened food supplies and risked higher food prices for consumers. Further cascading impacts include reduced food security and difficulties in processing payments at retail stores.

Credible Threat Agents and Their Targets

Cyber-attacks are usually carried out by credible threat agents, who can be classified as either internal or external. Internal threat agents may include contractors, visitors to business sites, former or current employees, and individuals who work for suppliers. External threat agents may include activists, cyber-criminals and terror cells. These threat agents target large organisations because of their greater capacity to pay ransoms. They may also target small companies because of their vulnerability and limited experience, especially when such companies are migrating from analogue methods to digitised processes.

The Federal Bureau of Investigation warns that food and agricultural systems are most vulnerable to cyber-security threats during critical planting and harvesting seasons. It noted an increase in cyber-attacks, including attacks on six agricultural co-operatives in 2021 that affected core functions such as food supply and distribution. As a result, cyber-attacks may lead to mass shortages of food meant not only for human consumption but also for animals.

Policy Recommendations

Food defence is one of the top countermeasures for safeguarding digital food supply chains. It aims to prevent and mitigate the effects of intentional incidents and threats to the food chain. Food defence vulnerability assessments previously focused on product adulteration and food fraud. It is now more relevant to also include vulnerability assessments of agricultural technology.

Food supply organisations must prioritise regular data backups, using air-gapped, password-protected offline copies. They should also ensure that critical data copies cannot be modified or deleted from the main system. Blockchain-based food supply chain solutions may be deployed for this. They resist tampering and also allow suppliers and even consumers to track produce. Companies like Ripe.io, Walmart Global Tech, Nestle and Wholechain use blockchain for food supply management for several reasons. It provides overall process transparency, improves trust in transactions, enables traceable and tamper-resistant records, and makes data provenance accessible and visible.

Organisations should adopt extensive recovery plans, with multiple copies of essential data and servers kept in secure, physically separated locations such as hard drives, storage devices, the cloud or distributed ledgers. They should also prepare operations plans for critical functions in case of system outages. For core processes that are not labour-intensive, manual operation methods may be retained to reduce digital dependence. Network segmentation and updates or patches for operating systems, software and firmware are further steps that can help secure smart agricultural technologies.
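The tamper-evident records mentioned above rely on one idea: each record is linked to the hash of the record before it. The following is a minimal, single-machine sketch of that idea in Python. It assumes one trusted writer and has no consensus or replication. It is not how Ripe.io, Walmart Global Tech, Nestle or Wholechain implement their systems, and the batch ID and event names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


@dataclass
class Record:
    """One supply-chain event for a batch of produce (hypothetical fields)."""
    batch_id: str
    event: str
    prev_hash: str
    digest: str = ""

    def compute_digest(self) -> str:
        # Hash this record's contents together with the previous record's digest,
        # so editing any earlier record breaks every link after it.
        payload = json.dumps(
            {"batch_id": self.batch_id, "event": self.event, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class TraceLog:
    """Append-only, hash-linked event log: a toy stand-in for a blockchain ledger."""

    def __init__(self) -> None:
        self.records: list[Record] = []

    def append(self, batch_id: str, event: str) -> Record:
        prev = self.records[-1].digest if self.records else GENESIS
        record = Record(batch_id, event, prev)
        record.digest = record.compute_digest()
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Return True only if every record's digest and back-link are intact."""
        prev = GENESIS
        for record in self.records:
            if record.prev_hash != prev or record.digest != record.compute_digest():
                return False
            prev = record.digest
        return True


if __name__ == "__main__":
    log = TraceLog()
    for step in ("harvested", "packed", "shipped"):
        log.append("BATCH-001", step)
    print(log.verify())                 # True
    log.records[1].event = "discarded"  # tamper with a middle record
    print(log.verify())                 # False
```

On its own, a hash chain only makes tampering detectable. Someone with write access could still recompute every digest. Real blockchain platforms add replicated copies and consensus so that no single party can quietly rewrite the history.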

References

- Muddy Machines website, Accessed 26 July 2024. https://www.muddymachines.com/

- “Meat giant JBS pays $11m in ransom to resolve cyber-attack”, BBC, 10 June 2021. https://www.bbc.com/news/business-57423008

- Marshall, Claire & Prior, Malcolm, “Cyber security: Global food supply chain at risk from malicious hackers”, BBC, 20 May 2022. https://www.bbc.com/news/science-environment-61336659

- “Ransomware Attacks on Agricultural Cooperatives Potentially Timed to Critical Seasons”, Private Industry Notification, Federal Bureau of Investigation, 20 April 2022. https://www.ic3.gov/Media/News/2022/220420-2.pdf

- Manning, Louise & Kowalska, Aleksandra. (2023). “The threat of ransomware in the food supply chain: a challenge for food defence”, Trends in Organized Crime. https://doi.org/10.1007/s12117-023-09516-y

- “NotPetya: the cyberattack that shook the world”, Economic Times, 5 March 2022. https://economictimes.indiatimes.com/tech/newsletters/ettech-unwrapped/notpetya-the-cyberattack-that-shook-the-world/articleshow/89997076.cms?from=mdr

- Abrams, Lawrence, “Dutch supermarkets run out of cheese after ransomware attack”, Bleeping Computer, 12 April 2021. https://www.bleepingcomputer.com/news/security/dutch-supermarkets-run-out-of-cheese-after-ransomware-attack/

- Pandey, Shipra; Gunasekaran, Angappa; Kumar Singh, Rajesh & Kaushik, Anjali, “Cyber security risks in globalised supply chains: conceptual framework”, Journal of Global Operations and Strategic Sourcing, January 2020. https://www.researchgate.net/profile/Shipra-Pandey/publication/338668641_Cyber_security_risks_in_globalized_supply_chains_conceptual_framework/links/5e2678ae92851c89c9b5ac66/Cyber-security-risks-in-globalized-supply-chains-conceptual-framework.pdf

- Daley, Sam, “Blockchain for Food: 10 examples to know”, Built In, 22 March 2023. https://builtin.com/blockchain/food-safety-supply-chain

Introduction:

Apple is known for its distinctive innovations and designs. With the iPhone 15 series, Apple is adopting USB-C to comply with European Union (EU) regulations, which set a common charging standard for mobile devices. The EU has approved new rules that make a universal charging port compulsory on electronic gadgets by the end of 2024. These include mobile phones, tablets, cameras, e-readers, earbuds and other devices. As a result, you will be able to use any USB-C cable to charge your iPhone or transfer data from it, though with a small caveat.

However, Apple, being Apple, will limit the performance of third-party USB-C cables. This means that Apple's own MFi-certified cables will offer optimised charging speeds and faster data transfer. MFi stands for 'Made for iPhone/iPad'. It is a quality mark and testing programme from Apple for Lightning cables and other products, and MFi certification is meant to ensure safety and improved performance.

European Union's regulations on common charging port:

The new iPhone will switch from the Lightning port to a USB-C port. EU rules make it mandatory for all phones and laptops to have a USB-C charging port. The EU has set a deadline of the end of 2024 for all new phones to use USB-C for wired charging. The rules also apply to other devices that offer wired charging, such as tablets, digital cameras, headphones and handheld video game consoles. They require manufacturers to adopt a common charging connection. The rules are intended to save consumers money and cut waste. According to the EU, they will spare consumers unnecessary charger purchases and cut tonnes of waste per year. Once the rules are in force, customers will be able to use a single charger for their different devices. The switch is also expected to improve data transfer speeds on new iPhone models. The iPhone will also be compatible with the USB-C chargers used by non-Apple users.

Indian Standards on USB-C Charging Ports

The Bureau of Indian Standards (BIS) has also issued standards for USB-C chargers. The standards aim to provide a common charger that works across different devices. Consumers will then not need to buy multiple chargers, which ultimately reduces the number of chargers per consumer. This would contribute to the Government of India's goal of reducing e-waste and moving toward sustainable development.

Conclusion:

New EU rules require all mobile phones, including iPhones, to have a USB-C charging port. Notably, the USB-C port now appears on the upcoming iPhone 15. These rules will enable customers to use a single charger for their different Apple devices, such as iPads, Macs and iPhones. The EU common-charger rule covers small and medium-sized portable electronics, such as:

- mobile phones and tablets
- e-readers
- mice and keyboards
- digital cameras
- handheld video game consoles
- portable speakers

Such devices must have USB-C charging ports if they offer wired charging. Laptops are also covered by these rules but have been given more time to comply. Overall, this step will help reduce e-waste and support sustainable development.

References:

https://www.bbc.com/news/technology-66708571


Introduction

Raksha Bandhan is a cherished festival celebrated every year on the full moon day of the Hindu month of Shravan. It represents the love, care and protection that siblings share. This year, Raksha Bandhan falls on 9 August 2025. On this day, sisters tie a sacred thread known as a Rakhi on their brothers' wrists as a symbol of love and protection. In return, brothers promise to safeguard them in all walks of life. The origin of this festival is traced back to the Mahabharata, when Lord Krishna injured his finger. To bandage the wound, Draupadi, also known as Panchali, tore a piece of her saree and tied it around Krishna's finger. Touched by her selfless gesture, Krishna promised to always protect her, a promise he fulfilled in Draupadi's time of greatest need.

Today, as technology drives every aspect of life, the nature of threats has changed. In this digital age, physical safety alone is no longer enough. Alongside the traditional vow, there is now a growing need for another promise: the promise of Cyber Raksha (cyber safety). As we celebrate the spirit of Raksha Bandhan this year, let us also pledge to take care of our siblings' Cyber Suraksha (cyber security).

Ek Vaada Cyber Raksha ka (A Promise of Cyber Safety)

Brothers and sisters share a bond of mutual care and responsibility. Cybercrime threats keep evolving, so siblings must understand the vulnerabilities they might face and the cyber safety tips that can protect them. Promise to guide each other, protect each other from online dangers, and help each other understand the importance of digital safety. This Raksha Bandhan, let's also tie a knot of cyber awareness, responsibility and digital protection. True raksha today means protection in both the offline and the online world.

CyberPeace has curated the following best practices for you to consider in your life and also to share with your sisters and brothers.

Password Security

Cybercrooks often target your passwords to gain access to your accounts or information. Scammers try to obtain passwords through many methods, such as OTP fraud, fake login pages (spoofing), social engineering, credential stuffing, brute-force attacks and phishing.

Quick Tips

- Use strong passwords.

- Regularly update passwords.

- Use separate passwords for different accounts.

- Use secure & trusted password managers.

- Use two-factor authentication for an extra layer of security.

- Make sure not to save passwords on shared or unfamiliar devices.
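Strong, unique passwords are easiest to get from a random generator, which is what a trusted password manager does for you. Below is a minimal sketch using Python's standard `secrets` module. The 16-character default length and the symbol set are assumptions for illustration, not an official CyberPeace recommendation.

```python
import secrets
import string

# Character pools; the symbol set is an arbitrary choice for this sketch.
LOWER = string.ascii_lowercase
UPPER = string.ascii_uppercase
DIGITS = string.digits
SYMBOLS = "!@#$%^&*-_=+?"


def generate_password(length: int = 16) -> str:
    """Return a random password containing at least one character from each pool."""
    if length < 4:
        raise ValueError("length must be at least 4 to include every character class")
    pools = [LOWER, UPPER, DIGITS, SYMBOLS]
    alphabet = "".join(pools)
    # One guaranteed character from each pool, the rest from the full alphabet.
    chars = [secrets.choice(pool) for pool in pools]
    chars += [secrets.choice(alphabet) for _ in range(length - len(pools))]
    # Shuffle with a cryptographically secure RNG so the guaranteed
    # characters do not always sit at the start.
    secrets.SystemRandom().shuffle(chars)
    return "".join(chars)


if __name__ == "__main__":
    # A separate password for each of three hypothetical accounts.
    for account in ("email", "bank", "social"):
        print(account, generate_password())
```

`secrets` draws from the operating system's cryptographically secure random source. The `random` module does not, so `random` should not be used for passwords.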

Social Media Security

Many cyber threats arise through social media. They include identity theft, cyberbullying, cyberstalking, online harassment, data leaks, suspicious links leading to phishing and malware, and exposure to inappropriate content. Netizens need to protect their accounts, data and online presence on social media platforms from these growing threats.

Quick Tips

- Review app permissions and do not give any unnecessary app permissions.

- Keep your account private or customise your privacy settings as per your needs.

- Be cautious while interacting with strangers.

- Do not click on any suspicious or unknown links.

- If you have to log in to social media on an unfamiliar device, make sure to log out afterwards and update your password to prevent unauthorised access.

- Always use two-factor authentication for your social media accounts.

- Avoid sharing too much personal information in public stories or posts. Cybercriminals can use it for social engineering.

- Use the report & block function to protect yourself from spam accounts and unwanted interactions.

- If you encounter any issue, report it through the platform’s reporting mechanism in its Help Centre.

- You can also contact the platform’s grievance officer.
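Many authenticator apps used for two-factor authentication generate time-based one-time passwords (TOTP, RFC 6238). The following minimal standard-library sketch shows why such a code changes every 30 seconds and soon becomes useless to anyone who steals it. The shared secret is the RFC's published test key, not a real account secret. Apps and services may use different settings.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    # The counter is encoded as an 8-byte big-endian integer.
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick a 4-byte window.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time window."""
    if at is None:
        at = time.time()
    return hotp(secret, int(at // step), digits)


if __name__ == "__main__":
    # RFC 6238 test secret (ASCII "12345678901234567890"); never hard-code a real one.
    secret = b"12345678901234567890"
    print(totp(secret, at=59, digits=8))  # 94287082 (RFC 6238 test vector)
    print(totp(secret))                   # changes every 30 seconds
```

The phone and the server share the secret once, during setup, and afterwards only short-lived codes travel. This is also why an OTP must never be shared: anyone who has it within its time window can use it.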

Device Security

In today’s interconnected world, your devices, whether smartphones, tablets or laptops, are not just tools. They are digital extensions of yourself, holding your conversations, memories and private information, and often your financial and professional information too. Safeguarding your devices in the digital world is like safeguarding your physical possessions against unwanted intrusion. A sibling would never let anyone invade your privacy. In the same way, promise to keep your devices secure against malicious threats like malware, spyware, ransomware and unauthorised access.

Quick Tips

- Update your apps, browsers and operating systems frequently, because these updates often include fixes for security vulnerabilities.

- Install reliable anti-virus and anti-malware software, then perform routine device scans.

- Do not download files or apps from unidentified sources.

- Avoid using open or unprotected public Wi-Fi for private activities like email or banking.

- Employ screen locks (passwords, biometrics, or PINs) to stop unwanted physical access.

- Enable remote wipe or ‘Find My Device’ features in case your device is lost or stolen.

Digital Payments Security

Raksha Bandhan is all about giving, but let’s not make it easy for cyber fraudsters to take! Convenience can come at a great cost. The rise in digital payments and UPI transactions has been accompanied by a rise in fraud, phishing and money-stealing schemes. By being cautious, however, you can avoid being defrauded. Whether you’re gifting a sibling online or shopping for festive deals, promise yourself and your loved ones that you’ll transact wisely and safely.

Quick Tips

- Never share your bank credentials, CVV, OTP or UPI PIN with anyone, even someone who seems trustworthy.

- Before completing a transaction, confirm the account information or UPI ID.

- Do not click refund or payment links sent by unknown WhatsApp accounts or numbers.

- Use only trusted apps (like BHIM, PhonePe, Google Pay, etc.) downloaded from official app stores.

Email Security

Your email is more than a communication tool. It is the key to your digital kingdom and often connects everything from banking to social networking. That is why scammers target it first, using phishing attacks, malware attachments and impersonation fraud. Just like a sibling watches your back, watch your inbox. Make a vow not to fall for the digital bait.

Quick Tips

- Never open attachments or links in emails that seem strange or suspicious.

- Be wary of subject lines that evoke fear, such as “Account Suspended” or “Urgent Action Required”.

- Always verify the sender’s email address, because scammers often use small misspellings to deceive you.

- Set up two-factor authentication and create a strong, unique password for your email accounts.

- Avoid using unprotected Wi-Fi networks or public computers to check your email.

- Avoid responding to spam emails or unsubscribing through dubious links as this could give the attacker your address.
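The advice to check the sender's address for small misspellings can be illustrated with edit distance. A domain that is only one or two characters away from a domain you trust is a classic phishing trick. The sketch below is a hypothetical helper, not a feature of any email client. The trusted domain list and the threshold of 2 are assumptions. It will also miss some tricks, such as look-alike characters from other scripts or entirely different domains.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character inserts, deletes or substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def check_sender(address: str, trusted_domains: list[str], max_distance: int = 2) -> str:
    """Classify a sender's domain as 'trusted', 'lookalike' or 'unknown'."""
    domain = address.rsplit("@", 1)[-1].strip().lower()
    if domain in trusted_domains:
        return "trusted"
    # A domain that is close to, but not the same as, a trusted one is a red flag.
    if any(edit_distance(domain, t) <= max_distance for t in trusted_domains):
        return "lookalike"
    return "unknown"


if __name__ == "__main__":
    TRUSTED = ["examplebank.com"]  # hypothetical bank domain
    print(check_sender("alerts@examplebank.com", TRUSTED))   # trusted
    print(check_sender("alerts@examp1ebank.com", TRUSTED))   # lookalike ("1" for "l")
    print(check_sender("alerts@exarnplebank.com", TRUSTED))  # lookalike ("rn" for "m")
```

A "lookalike" result is exactly the case the tip above warns about. The display name may look right, but the domain is off by a character or two.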

Common scams to watch out for

Festive deals scams

As festive season sales surge in India, so does the risk of cyber scams. Cyber crooks urge victims to share OTPs under the guise of preventing fraudulent activity. They also share malicious links to obtain sensitive information.

Misinformation and disinformation

Misinformation and disinformation are spreading like wildfire across social media platforms. In a very short period, the need for effective strategies to counter them has grown rapidly. ‘Prebunking’ and ‘debunking’ are two approaches to countering the spread of misinformation online. Prebunking warns people about false claims or manipulation techniques before they encounter them. Debunking corrects false information after it has spread.

Deepfake and Voice cloning scams

Cybercriminals use deepfake technology to manipulate audio and video content so that it looks very realistic but is actually fake. Voice cloning is also a form of deepfake. A person's voice can be synthesised to closely resemble the real one, even though it is fake.

Juice Jacking

Cybercriminals can compromise your phone through tampered public charging stations, such as those at airports, malls and hotels. When you plug your phone into a public USB charging port, you may be plugging into a hacker's device. Juice jacking is a threat wherever free charging stations for mobile devices are provided.

Suspicious links & downloads

Suspicious links & downloads can lead you to a phishing site or install malware on your system. This can compromise your device, expose sensitive data and cause financial losses.

Conclusion

This Rakhi, stay cyber-safe and smart to protect both your own and your sibling’s online safety and security. You can seek assistance from the CyberPeace Helpline at helpline@cyberpeace.net.