#FactCheck - AI Artwork Misattributed: Mahendra Singh Dhoni Sand Sculptures Exposed as AI-Generated

Executive Summary:

A claim circulating on social media that a child created sand sculptures of cricket legend Mahendra Singh Dhoni has been proven false by the CyberPeace Research Team. The team found that the images were produced using an AI tool. Unusual details in the sculptures, such as extra fingers and other unnatural characteristics, pointed to artificial creation, and AI detection tools further substantiated this suspicion. The incident underscores the need to fact-check information before posting, as misinformation can quickly go viral on social media. Everyone is advised to assess content carefully to stop the spread of false information.

Claims:

The claim is that the photographs published on social media show sand sculptures of cricketer Mahendra Singh Dhoni made by a child.

Fact Check:

Upon receiving the posts, we carefully examined the images. The collage of 4 pictures contains many anomalies that are clear signs of AI-generated images.

In the first image, the left hand of the sand sculpture has 6 fingers, and in the word INDIA the ‘A’ is not properly aligned, i.e. it does not sit on the same line as the other letters. In the second image, one of the boy's fingers is missing, and the sand sculpture has 4 fingers on its front foot and has 3 legs. In the third image, the boy's slipper is only partly visible, and in the fourth image the boy's hand does not look like a hand. These are some of the major discrepancies clearly visible in the images.

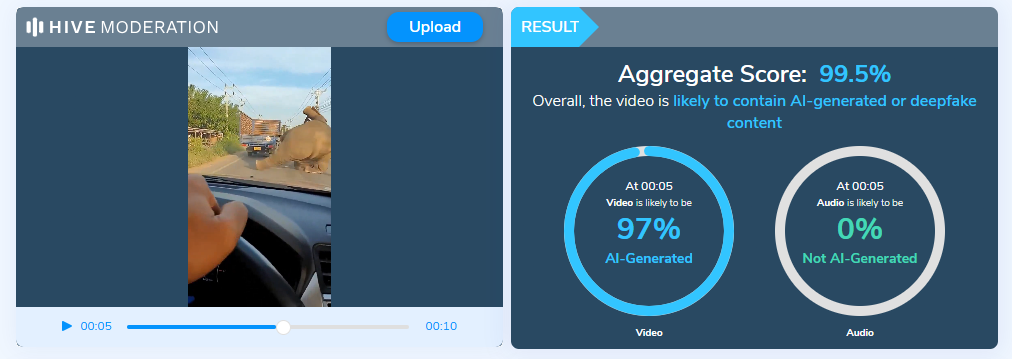

We then checked the image using an AI image detection tool named ‘Hive’, which detected it as 100.0% AI-generated.

We also checked it with another AI image detection tool, ContentAtScale, which found it to be 98% AI-generated.

From this we concluded that the image is AI-generated and has no connection with the claim made in the viral social media posts. We have also previously debunked AI-generated artwork of a sand sculpture of Indian cricketer Virat Kohli, which had the same types of anomalies as those seen in this case.
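The reasoning above, visual anomalies corroborated by two detector scores, can be sketched as a simple decision rule. This is only an illustrative sketch, not the Research Team's actual tooling: the `DetectorResult` class, the `assess` function, the 0.9 threshold, and the verdict labels are all assumptions introduced here, and the scores are entered by hand rather than fetched from Hive or ContentAtScale.

```python
from dataclasses import dataclass


@dataclass
class DetectorResult:
    tool: str              # name of the detection tool
    ai_probability: float  # reported likelihood of AI generation, 0.0 to 1.0


def assess(results, visual_anomalies, threshold=0.9):
    """Combine detector scores with a count of manually spotted anomalies.

    The threshold and the verdict labels are hypothetical choices.
    """
    if not results:
        return "inconclusive"
    # True only when every detector score is at or above the threshold.
    all_flag = all(r.ai_probability >= threshold for r in results)
    if all_flag and visual_anomalies > 0:
        return "likely AI-generated"
    if all_flag or visual_anomalies > 0:
        return "needs further review"
    return "no evidence of AI generation"


# Scores reported in this fact check, entered manually.
results = [
    DetectorResult("Hive", 1.00),
    DetectorResult("ContentAtScale", 0.98),
]
# Seven discrepancies are listed in the visual examination above.
verdict = assess(results, visual_anomalies=7)
print(verdict)
```

Requiring both kinds of evidence before reaching the strongest verdict mirrors the approach taken here: detector output is treated as corroboration of what a careful visual inspection already suggests, not as proof on its own.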

Conclusion:

Taking into consideration the distortions spotted in the images and the results of the AI detection tools, it can be concluded that the claim that the pictures show a child's sand sculptures of cricketer Mahendra Singh Dhoni is false. The pictures were created with artificial intelligence. It is important to check and authenticate content before posting it on social media.

- Claim: The collage of pictures shared on social media shows a child's sand sculptures of cricket player Mahendra Singh Dhoni.

- Claimed on: X (formerly known as Twitter), Instagram, Facebook, YouTube

- Fact Check: Fake & Misleading