#FactCheck - AI-Generated Image Falsely Shared as ‘Border 2’ Shooting Photo Goes Viral

Executive Summary

Border 2 is set to hit theatres today, January 23. Meanwhile, a photograph is going viral on social media showing actors Sunny Deol, Suniel Shetty, Akshaye Khanna and Jackie Shroff sitting together and having a meal, while a woman is seen serving food to them. Social media users are sharing this image claiming that it was taken during the shooting of Border 2. It is being alleged that the photograph shows a moment from the film’s set, where the actors were having food during a break in shooting. However, CyberPeace research has found the viral claim to be false. Our investigation revealed that users are sharing an AI-generated image with a misleading claim.

Claim

On Instagram, a user shared the viral image on January 9, 2026, with the caption: “During the shooting of Border 2.” The link to the post, its archive link and screenshots can be seen below.

Fact Check:

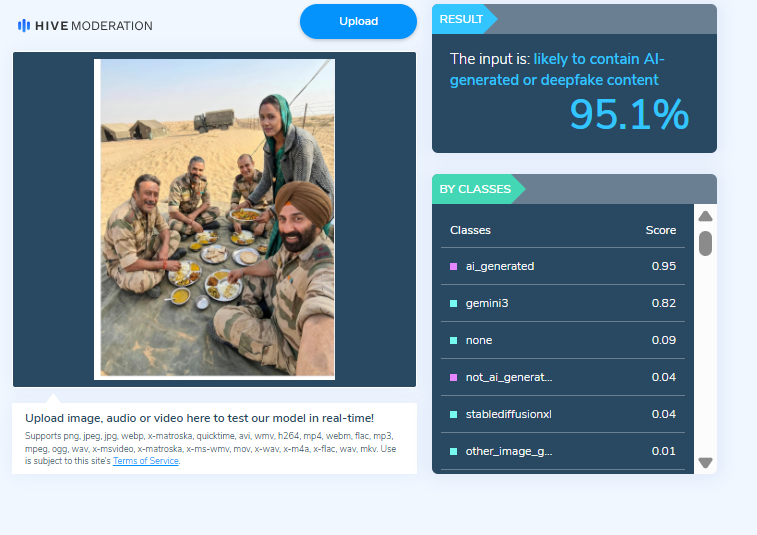

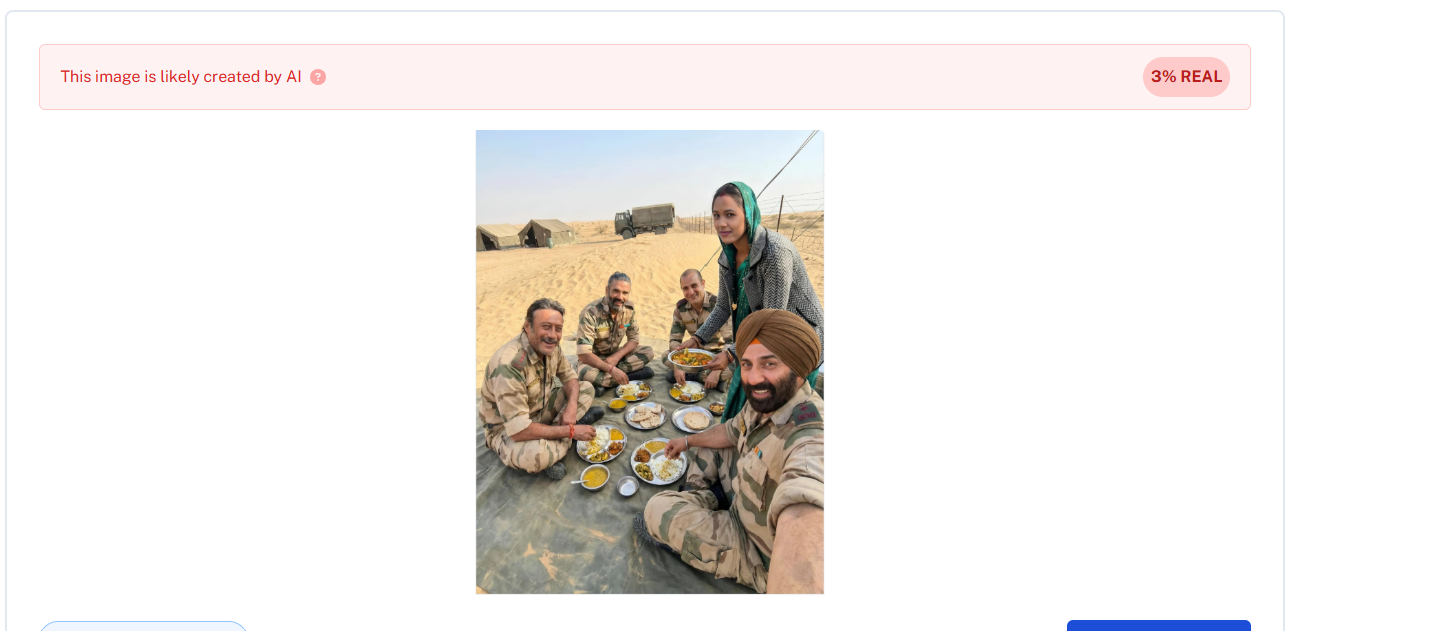

To verify the claim, we first checked Google for the official star cast of the film Border 2. Our search showed that the names of the actors seen in the viral image are not part of the film’s officially announced cast. Next, upon closely examining the image, we noticed that the facial structure and expressions of the actors appeared unnatural and distorted. The facial features did not look realistic, raising suspicion that the image might have been created using Artificial Intelligence (AI). We then scanned the viral image using the AI-generated content detection tool HIVE Moderation. The results indicated that the image is 95 per cent AI-generated.

In the final step of our investigation, we analysed the image using another AI-detection tool, Undetectable AI. According to the results, the viral image was confirmed to be AI-generated.

Conclusion:

Our research confirms that social media users are sharing an AI-generated image while falsely claiming that it is from the shooting of Border 2. The viral claim is misleading and false.


Related Blogs

Introduction

The digital realm is evolving at a breakneck pace. However, this dynamic growth has left several operational and regulatory lacunae in the fabric of cyberspace, which cybercriminals exploit for their own ends. One of the threats that emerged rapidly in 2024 is proxyjacking, in which cybercriminals exploit vulnerable systems to sell their bandwidth to third-party proxy servers. It poses a significant threat to organisations and individual servers.

Proxyjacking is a kind of cyber attack that leverages legitimate bandwidth-sharing services such as Peer2Profit and HoneyGain. These services give users the opportunity to monetize their surplus internet bandwidth by sharing it with other users: participants install net-sharing software that adds their system to a proxy network, enabling other users to route their traffic through it. This setup is intended to enhance privacy and provide access to geo-locked content. The model itself is harmless, but proxyjacking occurs when such services are run on a system without its owner’s consent, which opens an avenue for numerous cyber hostilities.

The Modus Operandi

Cybercriminals hijack systems and sell the bandwidth of the infected devices. This is achieved by establishing Secure Shell (SSH) connections to vulnerable servers. While hackers rarely use honeypots to run elaborate scams, the technical possibility of them doing so cannot be discounted. Cowrie honeypots, for instance, are engineered to emulate UNIX systems. Attackers can use similar tactics to gain unauthorized access to poorly secured systems. Once inside the system, attackers use legitimate tools such as public Docker images to run proxy monetization services. Because these tools are genuine software in and of themselves, anti-malware software generally does not flag them, and endpoint detection and response (EDR) tools struggle with them for the same reason.

The Major Challenges

Limitation of Current Safeguards – Current malware detection software is unable to distinguish between malicious and genuine use of bandwidth services, as the software involved is not inherently malicious.

Bigger Threat Than Cryptojacking – Proxyjacking poses a bigger threat than cryptojacking, where systems are compromised to mine cryptocurrency. Proxyjacking is a resource-light technique, which gives perpetrators a higher degree of stealth and makes it more challenging to identify, whereas cryptojacking can leave CPU and GPU usage footprints.

Role of Technology in the Fight Against Proxyjacking

Advanced Safety Measures – Implementing advanced safety measures is crucial in combating proxyjacking. Network monitoring tools can help detect unusual traffic patterns indicative of proxyjacking. Key-based authentication for SSH can significantly reduce the risk of unauthorized access, ensuring that only trusted devices can establish connections. Intrusion Detection Systems and Intrusion Prevention Systems can go a long way towards monitoring unusual outbound traffic.

Robust Verification Processes – Bandwidth-sharing services must adopt robust verification processes to ensure that only legitimate users are sharing bandwidth. This could include stricter identity verification methods and continuous monitoring of user activities to identify and block suspicious behaviour.
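As a rough illustration of the key-based SSH authentication point above, the following Python sketch checks the text of an OpenSSH server configuration for settings that still permit password logins. The function name and the set of checked keywords are assumptions made for this example, and the parsing is deliberately simplified: it ignores `Match` blocks and `Include` directives, so it is a starting point rather than a complete audit.

```python
def audit_sshd_config(config_text: str) -> list[str]:
    """Return findings for settings that weaken key-based SSH authentication.

    Hypothetical helper for illustration; it only inspects two keywords.
    """
    # Desired values, keyed by lowercased sshd_config keyword.
    expected = {
        "passwordauthentication": "no",
        "pubkeyauthentication": "yes",
    }

    seen = {}
    for raw_line in config_text.splitlines():
        # Drop comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if not line:
            continue
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue
        key, value = parts[0].lower(), parts[1].strip().lower()
        # sshd keeps the first value it reads for each keyword.
        seen.setdefault(key, value)

    findings = []
    for key, wanted in expected.items():
        actual = seen.get(key)
        if actual is None:
            findings.append(f"{key}: not set explicitly (wanted {wanted})")
        elif actual != wanted:
            findings.append(f"{key}: {actual} (wanted {wanted})")
    return findings


if __name__ == "__main__":
    sample = "# example config\nPasswordAuthentication yes\nPubkeyAuthentication yes\n"
    for finding in audit_sshd_config(sample):
        print(finding)
```

An empty result means both keywords are set explicitly to the desired values. Unset keywords are reported too, since relying on defaults makes an audit harder to reason about.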

Policy Recommendations

Verification for Bandwidth Sharing Services – Mandatory verification standards should be enforced for bandwidth-sharing services, including stringent Know Your Customer (KYC) protocols to verify the identity of users. A strong regulatory body would ensure proper compliance with verification standards and impose penalties for violations. Transparency reports should document the user base, verification processes and incidents.

Robust SSH Security Protocols – Key-based authentication for SSH should be mandated across organisations to neutralize the risk of brute force attacks. Mandatory security audits of SSH configurations within organisations will help ensure that best practices are followed and vulnerabilities are identified. Detailed logging of SSH attempts will streamline the identification and investigation of suspicious behaviour.

Effective Anomaly Detection System – A standard, industry-wide anomaly detection system should be designed to monitor networks, with a focus on detecting inconsistencies in traffic patterns that indicate proxyjacking. Mandatory protocols for reporting incidents to a centralised authority should be established. The system should incorporate machine learning in order to stay abreast of evolving attack methodologies.

Framework for Incident Response – A national framework should include guidelines for investigation, response and remediation to be followed by organisations. A centralized database can be used for logging and tracking all proxyjacking incidents, allowing for information sharing on a real-time basis. This mechanism will aid in identifying emerging trends and common attack vectors.

Whistleblower Incentives – Enacting whistleblower protection laws will ensure the proper safety of individuals reporting proxyjacking activities. Monetary rewards provide extra incentives and motivate individuals to join whistleblowing programs. To provide further protection to whistleblowers, secure communication channels can be established which will ensure full anonymity to individuals.
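To make the anomaly detection recommendation above more concrete, here is a minimal Python sketch that flags hosts whose latest outbound traffic volume is far above their own historical baseline. It uses a plain z-score rather than machine learning, so it is a much simpler stand-in for the kind of system described. The function name, data layout and threshold of 3.0 are all assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def flag_outbound_anomalies(history, latest, threshold=3.0):
    """Return hosts whose latest outbound byte count is unusually high.

    history: dict mapping host -> list of past outbound byte counts
    latest:  dict mapping host -> most recent outbound byte count
    threshold: z-score above which a host is flagged (assumed value)
    """
    flagged = []
    for host, current in latest.items():
        past = history.get(host, [])
        if len(past) < 2:
            continue  # not enough data to form a baseline
        baseline = mean(past)
        spread = pstdev(past)
        if spread == 0:
            # Perfectly flat history: any increase stands out.
            if current > baseline:
                flagged.append(host)
            continue
        z_score = (current - baseline) / spread
        if z_score > threshold:
            flagged.append(host)
    return flagged


if __name__ == "__main__":
    history = {
        "web-1": [100, 110, 90, 105, 95],
        "db-1": [200, 210, 190, 205, 195],
    }
    latest = {"web-1": 102, "db-1": 900}
    print(flag_outbound_anomalies(history, latest))
```

Because proxyjacking is resource-light, a per-host baseline of outbound traffic is likely a more useful signal than CPU usage, though a real deployment would need to tune the threshold and account for legitimate traffic spikes.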

Conclusion

Proxyjacking represents an insidious and complicated threat in cyberspace. By exploiting legitimate bandwidth-sharing services, cybercriminals can profit while remaining largely anonymous. Addressing this issue requires a multifaceted approach, including advanced anomaly detection systems, effective verification systems, and comprehensive incident response frameworks. These measures, together with strong cyber awareness among netizens, will help ensure a healthy and robust cyberspace.


A video of Bollywood actor and Kolkata Knight Riders (KKR) owner Shah Rukh Khan is going viral on social media. The video claims that Shah Rukh Khan is reacting to opposition against Bangladeshi bowler Mustafizur Rahman playing for KKR and is allegedly calling industrialist Gautam Adani a “traitor,” while appealing to stop Hindu–Muslim politics.

Research by the CyberPeace Foundation found that the voice heard in the video is not Shah Rukh Khan’s but is AI-generated. Shah Rukh Khan has not made any official statement regarding Mustafizur Rahman’s removal from KKR. The claim made in the video concerning industrialist Gautam Adani is also completely misleading and baseless.

Claim

In the viral video, Shah Rukh Khan is allegedly heard saying: “People barking about Mustafizur Rahman playing for KKR should stop it. Adani is earning money by betraying the country by supplying electricity from India to Bangladesh. Leave Hindu–Muslim politics and raise your voice against traitors like Adani for the welfare of the country. Mustafizur Rahman will continue to play for the team.”

The post link, archive link, and screenshots can be seen below:

- Archive link: https://archive.is/XsQXp

- Facebook reel link: https://www.facebook.com/reel/1220246633365097

Research

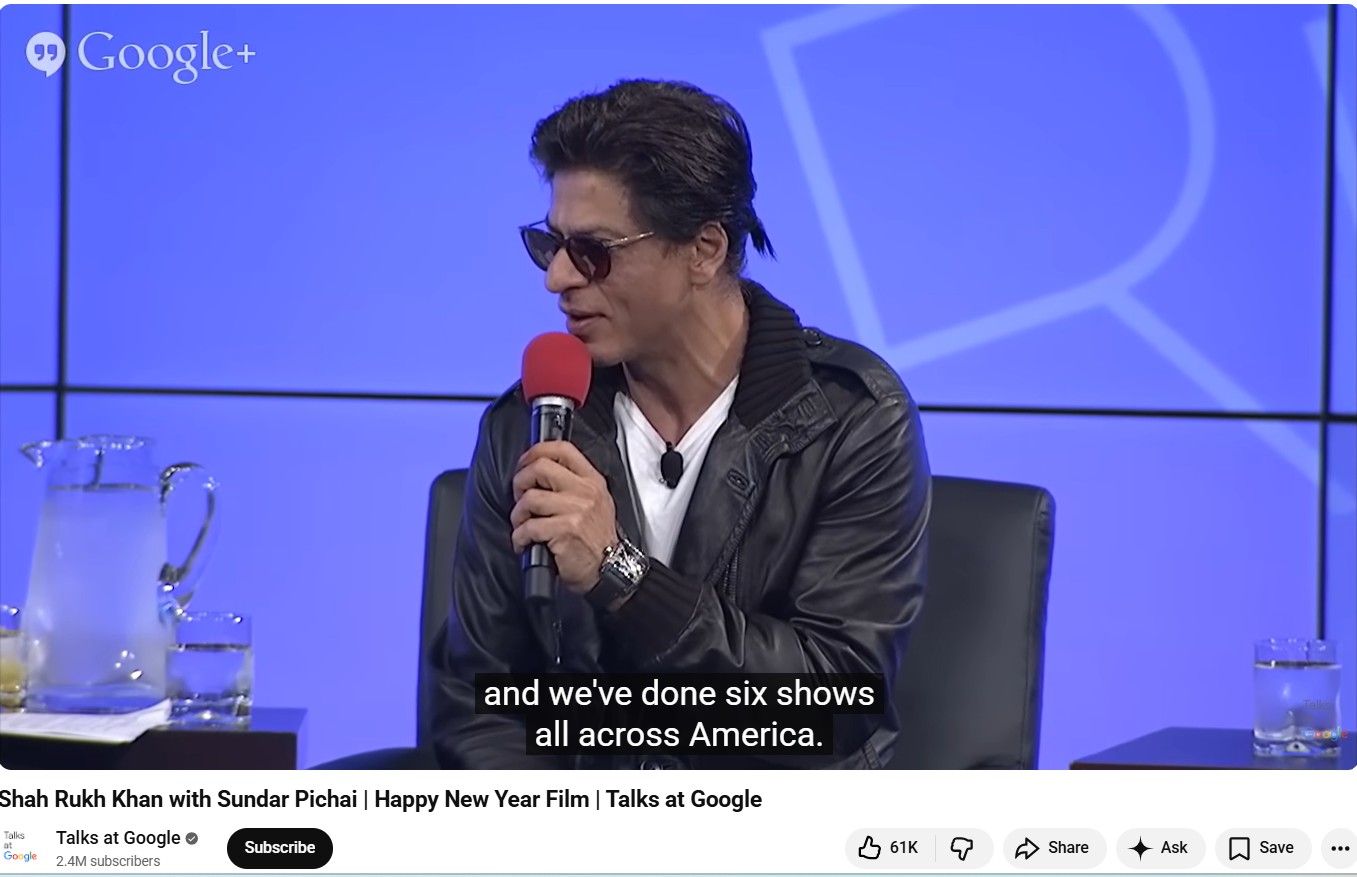

We examined the key frames of Shah Rukh Khan’s viral video using Google Lens. During this process, we found the original video on the official YouTube channel Talks at Google, which was uploaded on 2 October 2014.

In this video, Shah Rukh Khan is seen wearing the same outfit as in the viral clip. He is seen responding to questions from Google CEO Sundar Pichai. The YouTube video description mentions that Shah Rukh Khan participated in a fireside chat held at the Googleplex, where he answered Pichai’s questions and also promoted his upcoming film “Happy New Year.”

Link to the video: https://www.youtube.com/watch?v=H_8UBv5bZo0

Upon closely analyzing the viral video of Shah Rukh Khan, we noticed a clear mismatch between his voice and lip movements (lip sync). Such inconsistencies usually appear when the original video or its audio has been tampered with.

We then examined the audio present in the video using the AI detection tool Aurigin. According to the tool’s results, the audio in the viral video was found to be approximately 99 percent AI-generated.

Conclusion

Our research confirmed that the voice heard in the video is not Shah Rukh Khan’s but is AI-generated. Shah Rukh Khan has not made any official comment regarding Mustafizur Rahman’s removal from KKR. Additionally, the claims made in the video about industrialist Gautam Adani are completely misleading and baseless.

CAPTCHA, or the Completely Automated Public Turing Test to Tell Computers and Humans Apart, is a test, typically an image or distorted text, that users have to identify or interpret to prove they are human. reCAPTCHA, launched in 2007 and later acquired by Google, is a free service and one of the most commonly used technologies to tell computers apart from humans. CAPTCHA protects websites from spam and abuse by using tests that are considered easy for humans but were supposed to be difficult for bots to solve.

But this has now changed. As AI becomes more and more sophisticated, it can solve CAPTCHA tests more accurately than humans, rendering them increasingly ineffective. This raises the question of whether CAPTCHA is still effective as a detection tool given the advancements in AI.

CAPTCHA Evolution: From 2007 Till Now

CAPTCHA has evolved through various versions to keep bots at bay. reCAPTCHA v1 relied on distorted text recognition, v2 introduced image-based tasks and behavioural analysis, and v3 operated invisibly, assigning risk scores based on user interactions. While these advancements improved user experience and security, AI now solves CAPTCHA with 96% accuracy, surpassing humans (50-86%). Bots can mimic human behaviour, undermining CAPTCHA’s effectiveness and raising the question: is it still a reliable tool for distinguishing real people from bots?
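The risk-score approach of reCAPTCHA v3 can be sketched in Python as a decision on an already parsed verification response. The `success`, `score` and `action` fields follow the shape of the v3 verification response as commonly documented. The function name, the 0.5 threshold and the three outcome labels are assumptions for this example, and the sketch performs no network call.

```python
def classify_recaptcha_v3(result: dict, expected_action: str, threshold: float = 0.5) -> str:
    """Map a parsed reCAPTCHA v3 verification result to a decision.

    Returns "allow", "challenge" or "reject" (labels are hypothetical).
    """
    # A failed verification or an unexpected action is rejected outright.
    if not result.get("success"):
        return "reject"
    if result.get("action") != expected_action:
        return "reject"

    score = result.get("score")
    if not isinstance(score, (int, float)):
        return "reject"

    # Higher scores indicate a more human-like interaction.
    if score >= threshold:
        return "allow"
    # Low scores are escalated to an extra check rather than blocked.
    return "challenge"


if __name__ == "__main__":
    response = {"success": True, "score": 0.9, "action": "login"}
    print(classify_recaptcha_v3(response, expected_action="login"))
```

Sending low scores to a secondary check instead of blocking them reflects the point made above: since bots can mimic human behaviour, a score alone is a weak basis for a final decision.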

Smarter Bots and Their Rise

AI advancements like machine learning, deep learning and neural networks have developed at a very fast pace in the past decade, making it easier for bots to bypass CAPTCHA. They allow bots to process and interpret CAPTCHA types such as text and images with almost human-like behaviour. One example of an AI development that helps bots is Optical Character Recognition (OCR): the earlier versions of CAPTCHA relied on distorted text, and OCR enables AI to recognise and decipher that text, making such CAPTCHAs useless. Another is Image Recognition: AI trained on huge datasets can identify the objects that are specific to the question asked. Through Behavioural Analysis, bots can also mimic human habits and patterns and thereby fool the CAPTCHA.

To defeat CAPTCHA, attackers have been known to use Adversarial Machine Learning, which refers to AI models trained specifically to defeat CAPTCHA. They collect CAPTCHA datasets and answers and create an AI that can predict correct answers. The implications that CAPTCHA failures have on platforms can range from fraud to spam to even cybersecurity breaches or cyberattacks.

CAPTCHA vs Privacy: GDPR and DPDP

GDPR and the DPDP Act emphasise protecting personal data, including online identifiers like IP addresses and cookies. Both frameworks mandate transparency when data is transferred internationally, raising compliance concerns for reCAPTCHA, which processes data on Google’s US servers. Additionally, reCAPTCHA's use of cookies and tracking technologies for risk scoring may conflict with the DPDP Act's broad definition of data. The lack of standardisation in CAPTCHA systems highlights the urgent need for policymakers to reevaluate regulatory approaches.

CyberPeace Analysis: The Future of Human Verification

CAPTCHA, once a cornerstone of online security, is losing ground as AI outperforms humans in solving these challenges with near-perfect accuracy. Innovations like invisible CAPTCHA and behavioural analysis provided temporary relief, but bots have adapted, exploiting vulnerabilities and undermining their effectiveness. This decline demands a shift in focus.

Emerging alternatives like AI-based anomaly detection, biometric authentication, and blockchain verification hold promise but raise ethical concerns around privacy, inclusivity, and surveillance. The battle against bots isn’t just about tools; it’s about reimagining trust and security in a rapidly evolving digital world.

AI is clearly winning the CAPTCHA war, but the real victory will be designing solutions that balance security, user experience and ethical responsibility. It’s time to embrace smarter, collaborative innovations to secure a human-centric internet.
