#FactCheck - AI-Generated Image Falsely Linked to Doda Army Vehicle Accident

Executive Summary

On January 22, an Indian Army vehicle met with an accident in Jammu and Kashmir’s Doda district, resulting in the death of 10 soldiers, while several others were injured. In connection with this tragic incident, a photograph is now going viral on social media. The viral image shows an Army vehicle that appears to have fallen into a deep gorge, with several soldiers visible around the site. Users sharing the image are claiming that it depicts the actual scene of the Doda accident.

However, research by CyberPeace has found that the viral image is not genuine. The photograph was generated using Artificial Intelligence (AI) and does not represent the real accident. Hence, the viral post is misleading.

Claim

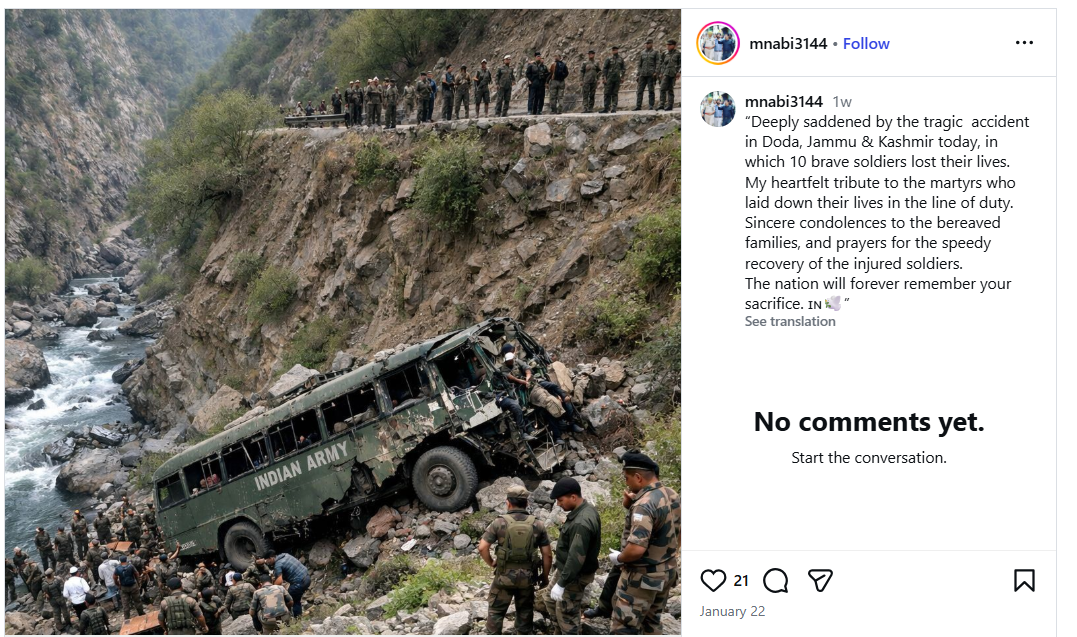

An Instagram user shared the viral image on January 22, 2026, writing: “Deeply saddened by the tragic accident in Doda, Jammu & Kashmir today, in which 10 brave soldiers lost their lives. My heartfelt tribute to the martyrs who laid down their lives in the line of duty. Sincere condolences to the bereaved families, and prayers for the speedy recovery of the injured soldiers. The nation will forever remember your sacrifice.”

The link and screenshot of the post can be seen below.

- https://www.instagram.com/p/DT0UBIRk_3k/

- https://archive.ph/submit/?url=https%3A%2F%2Fwww.instagram.com%2Fp%2FDT0UBIRk_3k%2F+

Fact Check:

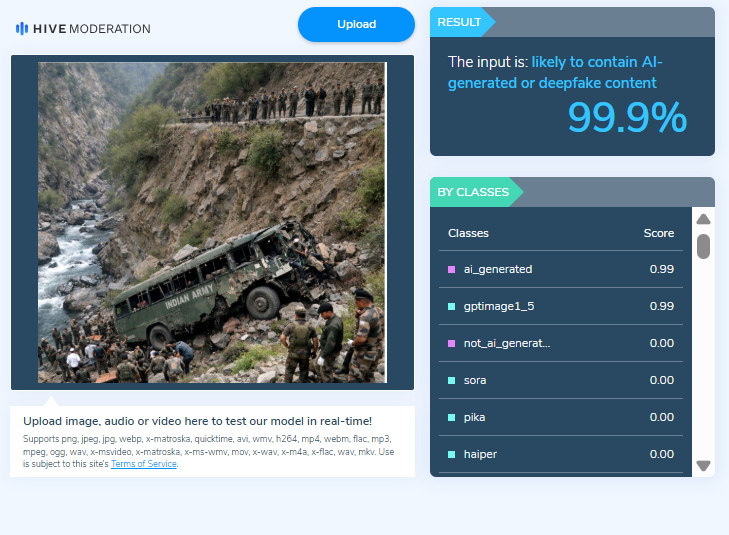

To verify the claim, we first closely examined the viral image. Several visual inconsistencies were observed. The structure of the soldier visible inside the damaged vehicle appears distorted, and the hands and limbs of people involved in the rescue operation look unnatural. These anomalies raised suspicion that the image might be AI-generated. Based on this, we ran the image through the AI detection tool Hive Moderation, which indicated that the image is over 99.9% likely to be AI-generated.

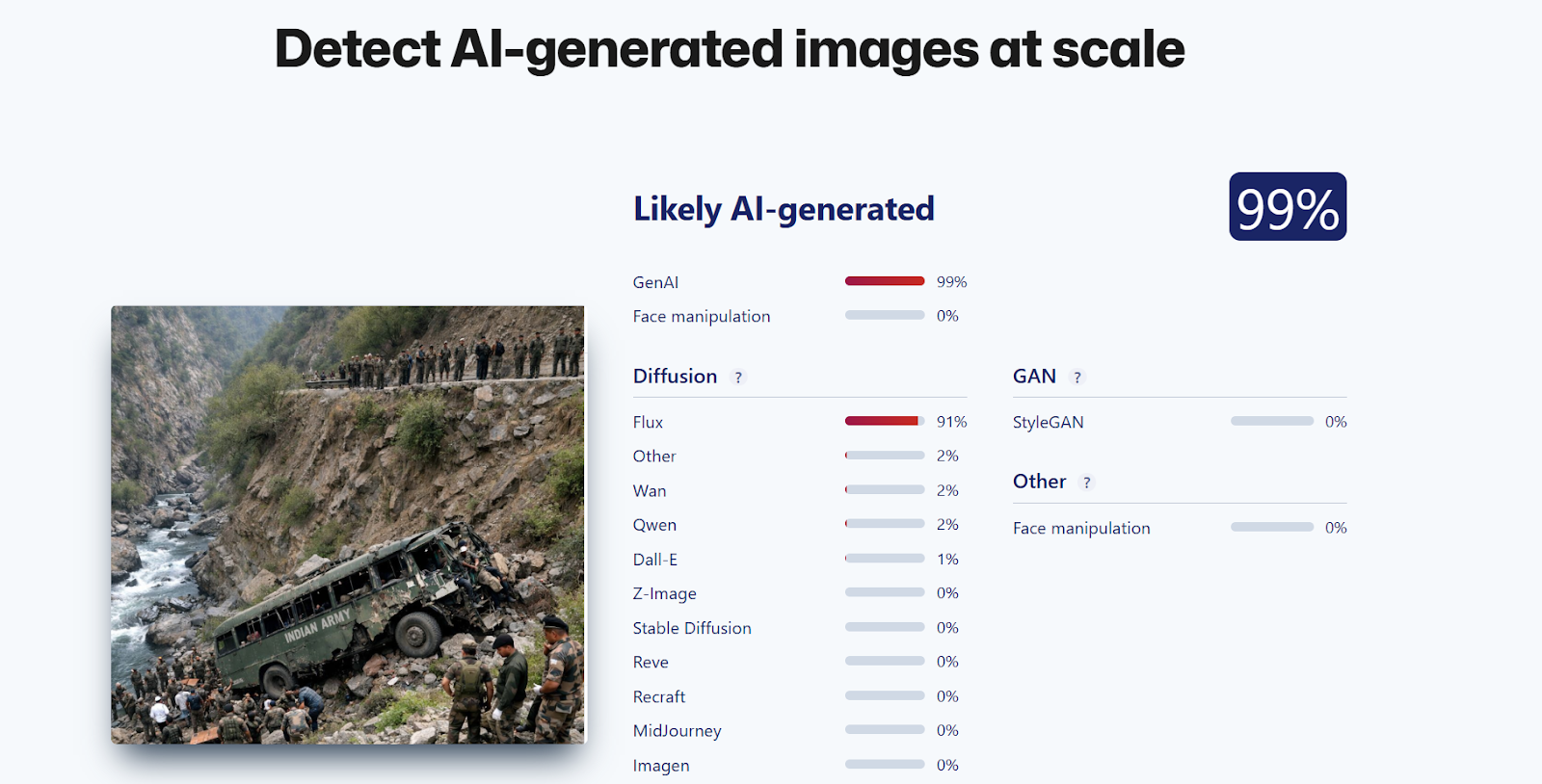

Another AI image detection tool, Sightengine, also flagged the image as 99% likely to be AI-generated.

During further research, we found a report published by Navbharat Times on January 22, 2026, which confirmed that an Indian Army vehicle had indeed fallen into a deep gorge in Doda district. According to officials, 10 soldiers were killed and 7 others were injured, and rescue operations were immediately launched.

However, it is important to note that the image circulating on social media is not an actual photograph from the incident.

Conclusion

CyberPeace research confirms that the viral image linked to the Doda Army vehicle accident has been created using Artificial Intelligence. It is not a real photograph from the incident, and therefore, the viral post is misleading.

Related Blogs

Introduction

In this ever-evolving world of technology, cybercriminals continue to explore new and innovative methods to exploit and intimidate their victims. One recent and shocking incident was reported from Bharatpur, Rajasthan, where cyber crooks organised a mock court session to frighten their targets. This complex operation, meant to induce fear and force obedience, exemplifies the daring and ingenuity of modern cybercriminals. In this blog article, we look more closely at this concerning incident to shed light on the strategies used and the ramifications for cybersecurity.

The Setup

The case was reported from Gopalgarh village in Bharatpur, Rajasthan, and unfolded with a shocking twist: a father-son duo, Tahir Khan and his son Talim Khan, had been defrauding people by staging a mock court and recording the proceedings to intimidate their victims into paying hefty sums. In the recent case, they obtained Rs 2.69 crore through sextortion. The duo would trace their targets on social media platforms, blackmail them, and extract large amounts of money.

An official complaint was filed by a 69-year-old victim who was singled out through his social media accounts, his friends, and his posts. Initially, the accused contacted the victim with a pre-recorded video featuring a nude woman, coaxing him into a compromising situation. Posing as officials from the Delhi Crime Branch and the CBI, they threatened the victim, claiming that a girl had approached them intending to file a complaint against him. Later, masquerading as YouTubers, they threatened to release the incriminating video online. Adding to the charade, they impersonated a local MLA and presented the victim with a forged stamp paper alleging molestation charges. Eventually, posing as Delhi Crime Branch officials again, they demanded money to settle the case after falsely stating that they had apprehended the girl. To further manipulate the victim, the accused staged a court proceeding, recorded it, and sent it to him, creating the illusion that the matter had been concluded. This case of sextortion stands out as the only instance in which the culprits went to such lengths, staging and recording a mock court to extort money. Furthermore, it was discovered that the accused had fabricated a letter from the Delhi High Court, adding another layer of deception to their scheme.

The Investigation

The complaint was filed with a cyber cell. The investigation that followed found that this case stands as one of the most significant sextortion incidents in the country. The father-son pair skilfully assumed five different roles, meticulously executing their plan, which included creating a simulated court environment. Investigators also said they had recovered Rs 25 lakh from the accused duo, some from their residence in Gopalgarh and the rest from the bank account where it was deposited.

The Tricks used by the duo

The father-son duo's fake court setup was a meticulously built web of deception meant to instil fear and a sense of vulnerability in the victim. Let’s look at the tricks the two used to fool people.

- Social Engineering strategies: Cyber criminals are skilled at using social engineering strategies to acquire the trust of their victims. In this situation, they may have employed phishing emails or phone calls to get personal information about the victim. By appearing as respectable persons or organisations, the crooks tricked the victim into disclosing vital information, giving them weapons they needed to create a sense of trustworthiness.

- Making a False Narrative: To make the fictitious court scenario more credible, the cybercriminals concocted a convincing story based on the victim’s purported legal problems. They might have created plausible papers to lend their plan authority, such as forged court summonses, legal notices, or warrants. By deploying persuasive language and legal jargon, they attempted to create a sense of impending danger and an urgent need for the victim to comply with their demands.

- Psychological Manipulation: The perpetrators of the fictitious court scenario were well aware of the power of psychological manipulation in coercing their victims. They hoped to emotionally overwhelm the victim by using fear, uncertainty, and the possible implications of legal action. The offenders probably used threats of incarceration, fines, or public exposure to increase the victim’s fear and hinder their capacity to think critically. The idea was to use desperation and anxiety to force the victim to comply.

- Use of Technology to Strengthen Deception: Technological advancements have given cyber thieves tremendous tools to strengthen their misleading methods. The simulated court scenario might have included speech modulation software or deep fake technology to impersonate the voices or appearances of legal experts, judges, or law enforcement personnel. This technology made the deception even more believable, blurring the border between fact and fiction for the victim.

The use of technology in cybercriminals’ misleading techniques has considerably increased their capacity to fool and influence victims. Cybercriminals may develop incredibly realistic and persuasive simulations of judicial processes using speech modulation software, deep fake technology, digital evidence alteration, and real-time communication tools. Individuals must be attentive, gain digital literacy skills, and practice critical thinking when confronting potentially misleading circumstances online as technology advances. Individuals can better protect themselves against the expanding risks posed by cyber thieves by comprehending these technological breakthroughs.

What to do?

Seeking Help and Reporting Incidents: If you or anyone you know falls victim to cybercrime or is confronted with a disturbing scenario such as the imitation court scene staged by these cybercrooks, seek help and act quickly by reporting the incident. Prompt reporting serves several purposes, including increasing awareness, assisting investigations, and preventing similar crimes from occurring again. Victims should take the following steps:

- Contact your local law enforcement: Inform local law enforcement about the cybercrime incident. Provide them with the pertinent facts and evidence, since they have the experience and resources to investigate cybercrime and catch the offenders involved.

- Seek Assistance from a Cybersecurity specialist: Consult a cybersecurity specialist or respected cybersecurity business to analyse the degree of the breach, safeguard your digital assets, and obtain advice on minimising future risks. Their knowledge and forensic analysis can assist in gathering evidence and mitigating the consequences of the occurrence.

- Preserve Evidence: Keep any evidence relating to the event, including emails, texts, and suspicious actions. Avoid erasing digital evidence, and consider capturing screenshots or creating copies of pertinent exchanges. Evidence preservation is critical for investigations and possible legal procedures.
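One way to make preserved evidence easier to verify later is to record a cryptographic hash of each saved file, so that any later change to a screenshot or chat export can be detected. The following is a minimal sketch under stated assumptions, not an official forensic or reporting procedure: the helper name `build_evidence_manifest` and the idea of keeping all evidence in a single folder are hypothetical choices made for illustration.

```python
import hashlib
from pathlib import Path


def build_evidence_manifest(folder):
    """Return {relative_path: sha256_hex} for every file under `folder`.

    Hypothetical helper: the name and the flat manifest layout are
    illustrative assumptions, not a prescribed forensic procedure.
    """
    root = Path(folder)
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256()
            # Read in chunks so large videos or archives do not exhaust memory.
            with path.open("rb") as handle:
                for chunk in iter(lambda: handle.read(65536), b""):
                    digest.update(chunk)
            manifest[path.relative_to(root).as_posix()] = digest.hexdigest()
    return manifest
```

Storing the resulting manifest separately from the evidence folder (for example, in an email to yourself) gives a simple reference point if the files are later handed to investigators.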

Conclusion

The fake court scene incident shows how far cybercriminals will go to deceive and abuse their victims. These criminals tried to exploit the victim's fear and vulnerability through social engineering, the fabrication of a false narrative, the manipulation of personal information, psychological manipulation, and the use of technology. Individuals can better defend themselves against cybercrooks by remaining watchful and sceptical.

Introduction

The CID of Jharkhand Police has uncovered a network of around 8000 bank accounts engaged in cyber fraud across the state, with a focus on Deoghar district, revealing a surprising 25% concentration of fraudulent accounts. In a recent meeting with bank officials, the CID shared compiled data, with 20% of the identified accounts traced to State Bank of India branches. This revelation, surpassing even Jamtara's cyber fraud reputation, prompts questions about the extent of cybercrime in Jharkhand. Under Director General Anurag Gupta's leadership, the CID has registered 90 cases, apprehended 468 individuals, and seized 1635 SIM cards and 1107 mobile phones through the Prakharna portal to combat cybercrime.

Jharkhand Police's Criminal Investigation Department (CID) has built a comprehensive database comprising information on about 8000 bank accounts tied to cyber fraud operations in the state. This vital information has aided in the launch of investigations to identify the account holders implicated in these illegal activities. Furthermore, the CID shared this information with bank officials at a meeting on January 12 to speed up the identification process.

Background of the Investigation

The CID shared the collated material with bank officials in a meeting on 12 January 2024 to expedite the identification process. A stunning 2000 of the 8000 bank accounts under investigation are in the Deoghar district alone, with 20 per cent of these accounts connected to various State Bank of India branches. The discovery of 8000 bank accounts related to cybercrime in Jharkhand is shocking and disturbing. Surprisingly, Deoghar district has exceeded even Jamtara, which was famous for cybercrime, accounting for around 25% of the discovered bogus accounts in the state.

As per the information provided by the CID Crime Branch, most of the identified bank accounts are currently under investigation, and around 2000 have been blocked by the investigating agencies.

Recovery Process

During the investigation, it was found that most of these accounts were being run on rent. The cybercriminals opened them using fake phone numbers together with Aadhaar cards and identity cards obtained from people, and in return these people (the account holders) received a fixed amount every month.

The CID has been unrelenting in its pursuit of cybercriminals. Police have recorded 90 cases and captured 468 people involved in cyber fraud using the Prakharna site. 1635 SIM Cards and 1107 mobile phones were confiscated by police officials during raids in various cities.

The Crime Branch has revealed the cities where the accounts were opened, along with the number of accounts in each:

- Deoghar 2500

- Dhanbad 1183

- Ranchi 959

- Bokaro 716

- Giridih 707

- Jamshedpur 584

- Hazaribagh 526

- Dumka 475

- Jamtara 443
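The city-wise figures can be turned into shares of the total with a few lines of arithmetic. The sketch below uses only the counts listed above, taken as given; the function name `share_by_city` is a hypothetical helper. Note that the listed counts add up to 8093 and put Deoghar at roughly 31% of that total, which does not exactly match the rounded "around 8000" and "25%" quoted elsewhere in this post, so the percentages here should be read as derived from this list alone.

```python
# Account counts exactly as listed above (city -> number of accounts).
ACCOUNTS_BY_CITY = {
    "Deoghar": 2500,
    "Dhanbad": 1183,
    "Ranchi": 959,
    "Bokaro": 716,
    "Giridih": 707,
    "Jamshedpur": 584,
    "Hazaribagh": 526,
    "Dumka": 475,
    "Jamtara": 443,
}


def share_by_city(counts):
    """Return (total, {city: percentage of total}) for the given counts."""
    total = sum(counts.values())
    shares = {city: 100 * n / total for city, n in counts.items()}
    return total, shares


total, shares = share_by_city(ACCOUNTS_BY_CITY)
# Print cities from largest to smallest share.
for city, pct in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{city:<12}{ACCOUNTS_BY_CITY[city]:>6}{pct:>7.1f}%")
print(f"{'Total':<12}{total:>6}")
```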

Impact on the Financial Institutions and Individuals

These cyber scams significantly influence financial organisations and individuals; let us investigate the implications.

- Victims: Cybercrime victims suffer significant financial setbacks, which can lead to long-term financial insecurity. In addition, people frequently suffer mental distress as a result of the breach of personal information, which causes worry, fear, and a loss of faith in the digital financial system. One of the most difficult problems for victims is the recovery process, which includes retrieving lost cash and repairing the harm caused by the cyberattack. Individuals often find this process time-consuming and difficult, and in many cases people do not know where or when to seek help. Hence, awareness about cybercrimes and a reporting mechanism are necessary to guide victims through the recovery process.

- Financial Institutions: Financial institutions face direct consequences when they incur significant losses due to cyber financial fraud. Unauthorised account access, fraudulent transactions, and the compromise of client data result in immediate cash losses and costs associated with investigating and mitigating the breach's impact. Such assaults degrade the reputation of financial organisations, undermine trust, erode customer confidence, and result in the loss of potential clients.

- Future Implications and Solutions: Recently, the CID discovered a sophisticated cyber fraud network in Jharkhand. As a result, it is critical to assess the possible long-term repercussions of such discoveries and propose proactive ways to improve cybersecurity. The CID's findings are expected to increase awareness of the ongoing threat of cyber fraud to both people and organisations. Given the current state of cyber dangers, it is critical to implement rigorous safeguards and impose heavy punishments on cyber offenders. Government organisations and regulatory bodies should also adapt their present cybersecurity strategies to address the problems posed by modern cybercrime.

Solution and Preventive Measures

Several solutions can help combat the growing nature of cybercrime. The first and foremost step is to enhance cybersecurity education at all levels, including:

- Individual Level: To improve cybersecurity for individuals, raising awareness across all age groups is crucial. This means understanding the potential threats, following best online practices and good cyber hygiene, and educating people to safeguard themselves against financial frauds such as phishing and smishing.

- Multi-Layered Authentication: Encouraging individuals to enable MFA for their online accounts adds an extra layer of security by requiring additional verification beyond passwords.

- Continuous Monitoring and Incident Response: Continuously monitor your financial transactions and regularly review online statements and transaction history to ensure that everyday transactions match your expenditures. Set up account alerts for transactions exceeding a specified amount or for unusual activity.

- Report Suspicious Activity: If you see any fraudulent transactions or activity, contact your bank or financial institution immediately; they will guide you through investigating and resolving the problem. You must supply the necessary paperwork to support your claim.
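The monitoring advice above, alerting on transactions over a set amount or on unusual activity, can be illustrated with a small sketch. Everything here is a hypothetical simplification: the `Transaction` record, the `flag_transactions` helper, the rupee threshold, and the rule that "unusual" means an unfamiliar payee are all assumptions for illustration, not how any particular bank's alert system works.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    payee: str
    amount: float  # in rupees


def flag_transactions(transactions, amount_limit, known_payees):
    """Return the transactions that deserve a manual review.

    A transaction is flagged if it exceeds `amount_limit` or goes to a payee
    not in `known_payees`. Both rules are illustrative assumptions.
    """
    flagged = []
    for tx in transactions:
        if tx.amount > amount_limit or tx.payee not in known_payees:
            flagged.append(tx)
    return flagged


# Made-up sample history, for demonstration only.
history = [
    Transaction("Electricity Board", 1800.0),
    Transaction("Unknown Wallet", 500.0),
    Transaction("Landlord", 45000.0),
]
flagged = flag_transactions(
    history,
    amount_limit=20000.0,
    known_payees={"Electricity Board", "Landlord"},
)
for tx in flagged:
    print(f"Review: {tx.payee} Rs {tx.amount:.2f}")
```

In practice, most banks offer this kind of threshold alert as a built-in SMS or email setting, which is simpler than tracking transactions by hand.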

How to reduce the risks

- Freeze compromised accounts: If you think that some of your accounts have been compromised, call the bank immediately and request that the account be frozen or temporarily suspended, preventing further unauthorised transactions.

- Update passwords: Regularly change the passwords for all your financial, email, and online banking accounts. If you suspect any unauthorised access, report it immediately, and always enable MFA, which adds an extra layer of protection to your accounts.

Conclusion

The CID's finding of a cyber fraud network in Jharkhand is a stark reminder of the ever-changing nature of cybersecurity threats. Cybersecurity measures are necessary to prevent such activities and to protect individuals and institutions from being targeted by cyber fraud. As the digital ecosystem continues to grow, it is important to stay vigilant and alert, both as individuals and as a society. We should actively participate in more awareness activities to keep our knowledge up to date.

References

- https://avenuemail.in/cid-uncovers-alarming-cyber-fraud-network-8000-bank-accounts-in-jharkhand-involved/

- https://www.the420.in/jharkhand-cid-cyber-fraud-crackdown-8000-bank-accounts-involved/

- https://www.livehindustan.com/jharkhand/story-cyber-fraudsters-in-jharkhand-opened-more-than-8000-bank-accounts-cid-freezes-2000-accounts-investigating-9203292.html

Introduction

The unprecedented cyber espionage attempt on the Indian Air Force has shocked the military fraternity in an internet age where innovation is vital to national security. The attackers showed a high degree of expertise, using a variant of the infamous Go Stealer malware and recent military procurement announcements as cover to obtain sensitive information belonging to the Indian Air Force. The timing, coinciding with the Su-30 MKI fighter jets' procurement announcement, raises serious questions about possible espionage against national security.

A sophisticated attack using the Go Stealer malware exploits defense procurement details, notably the approval of 12 Su-30 MKI fighter jets. Attackers employ a cunningly named ZIP file, "SU-30_Aircraft_Procurement," distributed through an anonymous platform, Oshi, taking advantage of heightened tension surrounding defense procurement.

Advanced Go Stealer Variant:

The malware, coded in Go language, introduces enhancements, including expanded browser targeting and a unique data exfiltration method using Slack, showcasing a higher level of sophistication.

Strategic Targeting of Indian Air Force Professionals:

The attack strategically focuses on extracting login credentials and cookies from specific browsers, revealing the threat actor's intent to gather precise and sensitive information.

Timing Raises Espionage Concerns:

The cyber attack coincides with the Indian Government's Su-30 MKI fighter jets procurement announcement, raising suspicions of targeted attacks or espionage activities.

The Deceitful ZIP Archive: SU-30 Aircraft Acquisition

The cyberattack unfolded as a sequence of painstakingly planned actions. Using the cleverly disguised ZIP file "SU-30_Aircraft_Procurement," the perpetrators took advantage of the Indian Defense Ministry's approval of 12 Su-30 MKI fighter jets in September 2023. Distributed via the anonymous file storage platform Oshi, the fraudulent file most likely circulated through spam emails or other forms of correspondence.

The Spread of Infection and Go Stealer Payload:

The infection chain progressed from a ZIP file to an ISO file, then to a .lnk file, which finally launched the Go Stealer payload. This Go Stealer version, written in the Go programming language, adds more sophisticated capabilities, such as a wider range of targeted browsers and a novel technique for exfiltrating the collected data through the popular chat app Slack.
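From a defender's point of view, the ZIP to ISO to .lnk chain suggests a simple pre-screening step: look inside an archive's file listing for disk images or shortcut files before anyone opens it. The following is a minimal sketch of that idea using Python's standard `zipfile` module. The `suspicious_members` helper and the extension set are assumptions chosen to match the chain described above, not a complete detection rule, and the check only sees the outer archive (a .lnk hidden inside an ISO would not appear in the ZIP listing).

```python
import zipfile
from pathlib import PurePosixPath

# Extensions matching the delivery chain described above (ISO image, shortcut).
# The set is an illustrative assumption, not a complete blocklist.
SUSPICIOUS_EXTENSIONS = {".iso", ".lnk"}


def suspicious_members(zip_path):
    """List archive member names whose extension is in SUSPICIOUS_EXTENSIONS.

    Only the archive's file listing is read; nothing is extracted or executed.
    """
    with zipfile.ZipFile(zip_path) as archive:
        return [
            name
            for name in archive.namelist()
            if PurePosixPath(name.lower()).suffix in SUSPICIOUS_EXTENSIONS
        ]
```

A non-empty result does not prove an archive is malicious; it only marks it for closer inspection in an isolated environment.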

Superior Characteristics of the Go Stealer Version

Unlike its GitHub equivalent, this Go Stealer version exhibits a higher degree of complexity. When executed, it creates a log file on the victim's machine. Go analysis tooling such as GoReSym was used for in-depth examination of the binary. The malware focuses on cookies, usernames, and passwords stored in web browsers, with a particular emphasis on Edge, Brave, and Google Chrome.

What sets this variant apart is its sophistication. The threat actors behind its deployment have refined its capabilities, increasing its potency and its resistance to detection, which points to careful planning and a calculated approach.

Go Stealer: Evolution of Threat

Go Stealer, written in the Go programming language, first appeared as an open-source project on GitHub and quickly became well known for its capacity to stealthily obtain private data from unsuspecting users. Its effectiveness and stealthy design rapidly attracted the attention of cyber attackers looking for a sophisticated tool for clandestine data exfiltration.

Several characteristics distinguish Go Stealer from other conventional data stealers. From the beginning, it showed a strong emphasis on browser targeting, seeking to obtain passwords and login information from particular browsers, including Edge, Brave, and Google Chrome. The malware's initial version was hosted in a GitHub repository. Although the basic code is freely accessible, threat actors have improved and altered it to serve their malicious goals.

The Go Stealer version identified in the current cyber espionage attempt against the Indian Air Force is not limited to its GitHub roots. It adds features that make it more dangerous, such as a wider range of targeted browsers and a new way to exfiltrate data via Slack, a popular messaging app.

Covert Communication and Data Exfiltration

This variant is distinguished by its deliberate use of the Slack API for covert communication. Slack was chosen because it is widely used in corporate networks, which allows malicious activity to blend in with normal business traffic. The function "main_Vulpx" exists specifically to upload stolen information to the attacker's Slack channel, allowing for covert data theft and communication.
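Because this kind of exfiltration hides inside legitimate-looking Slack traffic, one defensive angle is to look for Slack connections from machines that have no business reason to use Slack. The sketch below illustrates that idea only; the `(source_host, destination_hostname)` record format, the helper names, and the notion of an approved-source list are hypothetical simplifications of whatever proxy or DNS logs an organisation actually keeps.

```python
def is_slack_host(hostname):
    """True for slack.com and any of its subdomains."""
    host = hostname.lower().rstrip(".")
    return host == "slack.com" or host.endswith(".slack.com")


def unexpected_slack_traffic(connections, approved_sources):
    """Return (source, destination) pairs where a non-approved machine
    contacted a Slack hostname.

    `connections` is an iterable of (source_host, destination_hostname)
    tuples, a hypothetical simplification of proxy or DNS logs.
    """
    return [
        (source, destination)
        for source, destination in connections
        if is_slack_host(destination) and source not in approved_sources
    ]
```

On networks where Slack is not used at all, the approved list is simply empty and every match is worth investigating.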

Timing and Strategic Objective

The precise timing of the cyberattack, which coincides with the Indian government's announcement of its acquisition of Su-30 MKI fighter jets, raises concerns about targeted attacks or espionage activities. The deliberate emphasis on gathering cookies and login credentials from web browsers highlights the threat actor's goal of obtaining precise and sensitive data from Indian Air Force personnel.

Using Caution: Preventing Possible Cyber Espionage

- Alertness Against Misleading Techniques: Current events highlight the necessity of being on the lookout for files that appear harmless but actually carry malicious intent. The Su-30 Acquisition ZIP file is a stark illustration of how such files can form part of larger-scale cyberespionage campaigns.

- Potentially Wider Impact: Cybercriminals frequently plan coordinated operations to target not just individuals but potentially many users and government officials. Compromised files increase the likelihood of a serious cyber-attack by opening the door for larger attack vectors.

- Important Position in National Security: Recognize the crucial role people play in the backdrop of national security in the age of digitalisation. Organised assaults carry the risk of jeopardising vital systems and compromising private data.

- Establish Strict Download Guidelines: Implement a strict rule requiring file downloads to only come from reputable and confirmed providers. Be sceptical, particularly when you come across unusual files, and make sure the sender is legitimate before downloading any attachments.

- Literacy among Government Employees: Acknowledge that government employees are prime targets because they hold sensitive data. Empower them with extensive cybersecurity training and awareness programmes that improve their vigilance and resilience.

Conclusion

The cyber espionage attack on the Indian Air Force highlights how sophisticated online threats have become in the digital era. The threat actors' deliberate and focused approach is demonstrated by the deceptive use of a disguised ZIP archive paired with a sophisticated variant of the Go Stealer malware. The use of Slack for covert communication adds a further layer of complexity. Increased awareness, strict download guidelines, and thorough cybersecurity education for government employees are necessary to reduce these threats. In the digital age, protecting national security requires ongoing adaptation and safeguards against ever more potent and cunning cyber threats.

References

- https://www.overtoperator.com/p/indianairforcemalwaretargetpotential

- https://cyberunfolded.in/blog/indian-air-force-targeted-in-sophisticated-cyber-attack-with-su-30-procurement-zip-file#go-stealer-a-closer-look-at-its-malicious-history

- https://thecyberexpress.com/cyberattack-on-the-indian-air-force/

- https://therecord.media/indian-air-force-infostealing-malware