#FactCheck - AI-Generated Flyover Collapse Video Shared With Misleading Claims

Executive Summary

A video showing a flyover collapse is going viral on social media. The clip shows a flyover and a road passing beneath it, with vehicles moving normally. Suddenly, a portion of the flyover appears to collapse onto the road below, with some vehicles seemingly caught in its impact. The video has been widely shared by users online. However, research by CyberPeace found the viral claim to be false: the video is not real but was created using artificial intelligence.

Claim:

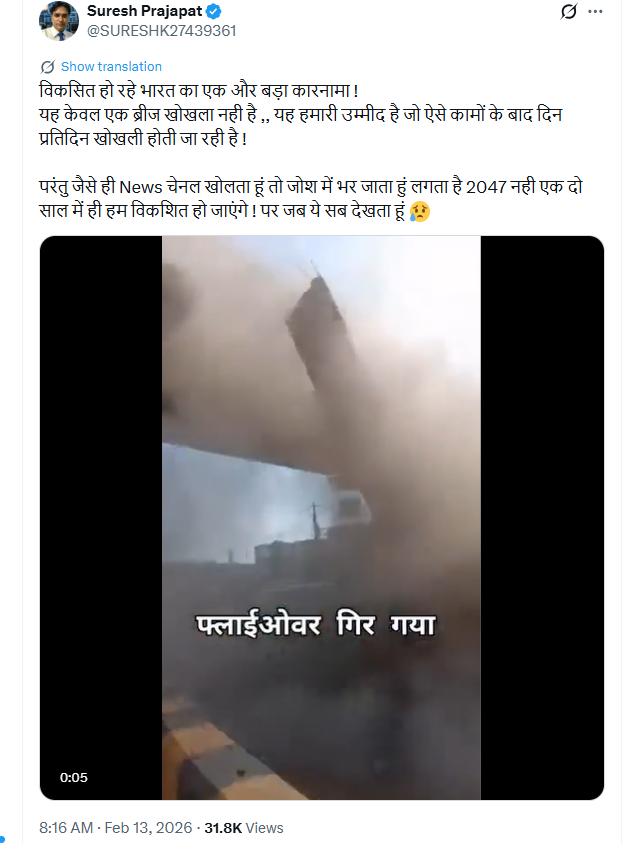

On X (formerly Twitter), a user shared the viral video on February 13, 2026, claiming it showed the reality of India’s infrastructure development and criticizing ongoing projects. The post quickly gained traction, with several users sharing it as a real incident. Similarly, another user shared the same video on Facebook on February 13, 2026, making a similar claim.

Fact Check:

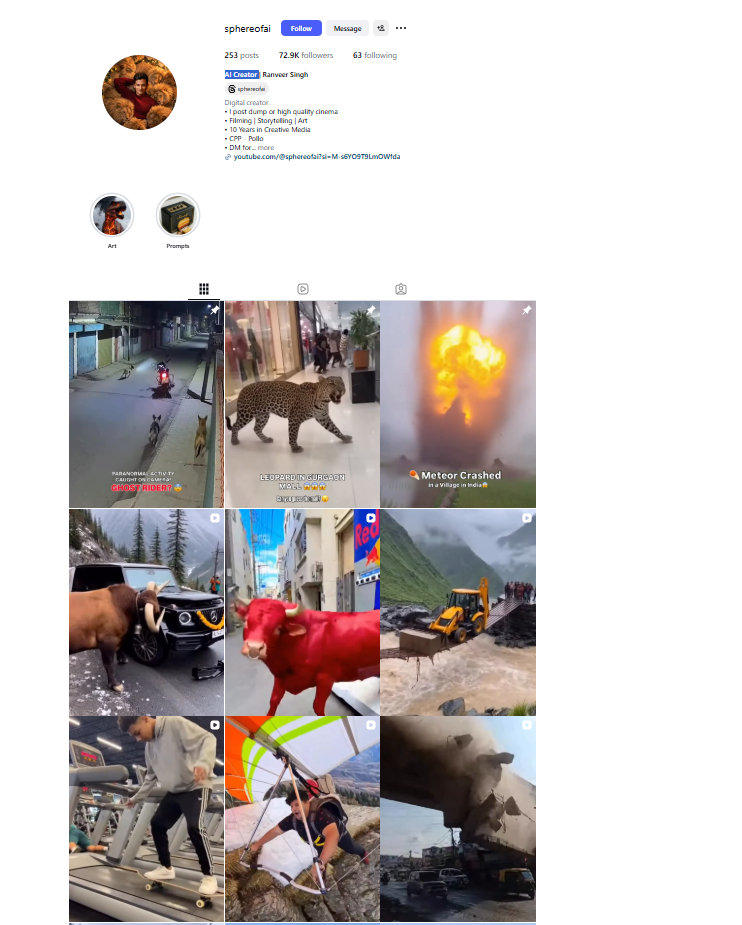

To verify the claim, key frames from the viral video were extracted and searched using Google Lens. During the search, the video was traced to an account named “sphereofai” on Instagram, where it had been posted on February 9. The post included hashtags such as “AI Creator” and “AI Generated,” clearly indicating that the video was created using AI. Further examination of the account showed that the user identifies themselves as an AI content creator.

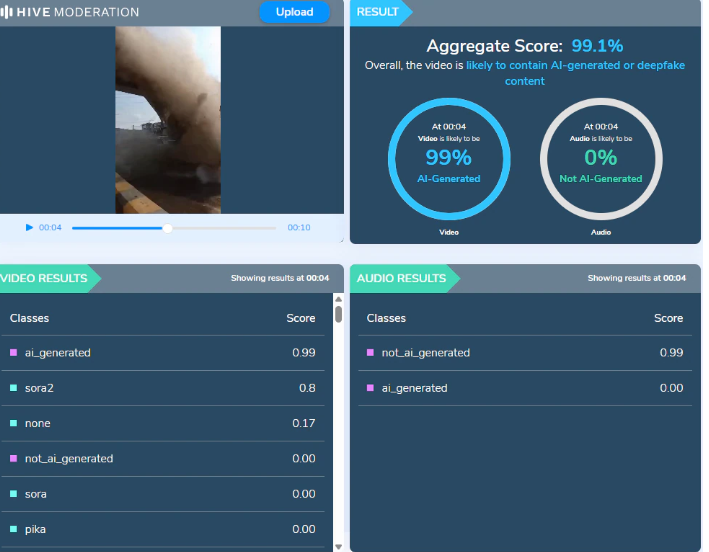

To confirm the findings, the viral video was also analysed using Hive Moderation. The tool’s analysis suggested a 99 percent probability that the video was AI-generated.

Conclusion:

The research established that the viral flyover collapse video is not authentic. It is an AI-generated clip being circulated online with misleading claims.

Related Blogs

Introduction

Misinformation is rampant worldwide and affects people at large. In 2023, UNESCO commissioned a survey on the impact of fake news, conducted by IPSOS in 16 countries due to hold national elections in 2024, with a combined total of 2.5 billion voters. The survey found that 85% of people are apprehensive about the repercussions of online disinformation or misinformation, showing how pressing the need for effective regulation had become. In light of these concerns, UNESCO has introduced an action plan to regulate social media platforms, which have become major sources of misinformation and hate speech online. The worldwide opinion survey supports this action plan and highlights the urgent need for strong action. The plan outlines the fundamental principles that must be respected and the concrete measures to be implemented by all stakeholders involved, i.e., governments, regulators, civil society and the platforms themselves.

The Key Areas in Focus of the Action Plan

The action plan focuses on protecting freedom of expression while also safeguarding access to information and other human rights in digital platform governance. It works on the basic premise that impact on human rights should be the compass for all decision-making, at every stage and by every stakeholder. Independent regulators are to work in close coordination as part of a wider network, to prevent digital companies from taking advantage of disparities between national regulations. Content moderation must also be feasible and effective at the required scale, in all regions and in all languages.

The algorithms of online platforms, particularly social media platforms, are too often geared towards maximizing engagement rather than the reliability of information. Platforms are required to take more initiative to educate and train users to be critical thinkers. Regulators and platforms should be in a position to take strong measures during particularly sensitive periods, from elections to crises, especially amid the current information overload.

Key Principles of the Action Plan

- Human Rights Due Diligence: Platforms are required to assess their impact on human rights, including gender and cultural dimensions, and to implement risk mitigation measures. This would ensure that the platforms are responsible for educating users about their rights.

- Adherence to International Human Rights Standards: Platforms must align their design, content moderation, and curation with international human rights standards. This includes ensuring non-discrimination, supporting cultural diversity, and protecting human moderators.

- Transparency and Openness: Platforms are expected to operate transparently, with clear, understandable, and auditable policies. This includes being open about the tools and algorithms used for content moderation and the results they produce.

- User Access to Information: Platforms should provide accessible information that enables users to make informed decisions.

- Accountability: Platforms must be accountable to their stakeholders, including users and the public, so that redress for content-related decisions is not compromised. This accountability extends to the implementation of their terms of service and content policies.

Enabling Environment for the Application of the UNESCO Plan

The UNESCO Action Plan to counter misinformation is designed to create an environment where freedom of expression and access to information flourish, while ensuring safety and security for both users and non-users of digital platforms. This endeavour calls for collective action: societies as a whole must work together. Relevant stakeholders, from vulnerable groups to journalists and artists, enable the right to expression.

Conclusion

The UNESCO Action Plan is a response to the dilemma created by information overload, particularly as the distinction between information and misinformation has become so clouded. The IPSOS survey revealed the urgency of addressing these challenges for users who fear the repercussions of misinformation.

The UNESCO action plan provides a comprehensive framework that prioritises the protection of human rights, particularly freedom of expression, while also stressing the importance of transparency, accountability, and education in the governance of digital platforms. By advocating for independent regulators and encouraging platforms to align with international human rights standards, UNESCO is setting the stage for a more responsible and ethical digital ecosystem.

The recommendations include integrating regulators through collaboration and promoting global cooperation to harmonize regulations; expanding digital literacy campaigns to educate users about misinformation risks and online rights; ensuring inclusive access to diverse content in multiple languages and contexts; and monitoring and refining technological advancements and regulatory strategies as challenges evolve. Together, these measures aim to promote a trustworthy online information landscape.

References

- https://www.unesco.org/en/articles/online-disinformation-unesco-unveils-action-plan-regulate-social-media-platforms

- https://www.unesco.org/sites/default/files/medias/fichiers/2023/11/unesco_ipsos_survey.pdf

- https://dig.watch/updates/unesco-sets-out-strategy-to-tackle-misinformation-after-ipsos-survey

Introduction

In July 2025, the Digital Trust & Safety Partnership (DTSP) achieved a significant milestone with the formal acceptance of its Safe Framework Specification as an international standard, ISO/IEC 25389. This is the first globally recognised standard that is exclusively concerned with guaranteeing a secure online experience for the general public's use of digital goods and services.

Significance of the New Framework

Fundamentally, ISO/IEC 25389 provides organisations with an organised framework for recognising, controlling, and reducing risks associated with conduct or content. This standard, which was created under the direction of ISO/IEC's Joint Technical Committee 1 (JTC 1), integrates the best practices of DTSP and offers a precise way to evaluate organisational maturity in terms of safety and trust. Crucially, it offers the first unified international benchmark, allowing organisations globally to coordinate on common safety pledges and regularly assess progress.

Other Noteworthy Standards and Frameworks

While ISO/IEC 25389 is pioneering, it’s not the only framework shaping digital trust and safety:

- The UN's Global Digital Compact, one of the main outcomes of the United Nations' 2024 Summit of the Future, describes cross-border cooperation on secure and reliable digital environments, with an emphasis on countering harmful content, upholding human rights online, and creating accountability standards.

- The World Economic Forum’s Digital Trust Framework defines the goals and values implicit in the concept of digital trust, such as cybersecurity, privacy, transparency, redressability, auditability, fairness, interoperability and safety. It also provides a roadmap to digital trustworthiness that incorporates these dimensions.

- The Framework for Integrity, Security and Trust (FIST), launched at the CyberPeace Summit 2023 at the USI of India in New Delhi, calls for a multistakeholder approach to co-creating solutions and best practices for digital trust and safety.

- While still being finalised for implementation, India's Digital Personal Data Protection Act, 2023 (DPDP Act) and its Rules (2025) aim to strike a balance between individual rights and data processing needs by laying the groundwork for data security and privacy.

- India is also developing frameworks for cutting-edge technologies such as artificial intelligence. The AI Safety Institute, established in early 2025 under the IndiaAI Mission using a hub-and-spoke model, aims to create standards for trustworthy, ethical, and safe AI systems. Furthermore, the Bureau of Indian Standards (BIS) is drafting AI standards with an emphasis on safety and dependability.

- Google's DigiKavach program (2023) and the Google Safety Engineering Centre (GSEC) in Hyderabad are concrete efforts to support digital safety and fraud prevention in India's tech sector.

What It Means for India

India is already claiming its place in discussions about safety and trust around the world. Google's June 2025 safety charter for India, for example, highlights how India's distinct digital scale, diversity, and vast threat landscape provide insights that inform global cybersecurity strategies.

For India's digital ecosystem, ISO/IEC 25389 comes at a critical juncture. The rapid adoption of digital technologies, including the growth of digital payments, e-governance, and artificial intelligence, together with a concomitant rise in digital harms, has made global best practices in safety and trust urgently necessary. Through its guidelines, ISO/IEC 25389 provides a reference benchmark that Indian startups, government agencies, and tech companies can use to improve their safety standards.

Conclusion

A global trust-and-safety standard like ISO/IEC 25389 is essential for making technology safer for people, even as we discuss the broader adoption of security and safety-by-design principles integrated into the processes of technological product development. India can improve user protection, build its reputation globally, and solidify its position as a key player in the creation of a safer, more resilient digital future by implementing this framework in tandem with its growing domestic regulatory framework (such as the DPDP Act and AI Safety policies).

References

- https://dtspartnership.org/the-safe-framework-specification/

- https://dtspartnership.org/press-releases/dtsps-safe-framework-published-as-an-international-standard/

- https://www.weforum.org/stories/2024/04/united-nations-global-digital-compact-trust-security/

- https://economictimes.indiatimes.com/tech/technology/google-releases-safety-charter-for-india-senior-exec-details-top-cyber-threat-actors-in-the-country/articleshow/121903651.cms

- https://initiatives.weforum.org/digital-trust/framework

- https://government.economictimes.indiatimes.com/news/secure-india/the-launch-of-fist-framework-for-integrity-security-and-trust/103302090

Introduction

Assisted Reproductive Technology (“ART”) refers to a diverse set of medical procedures designed to aid individuals or couples in achieving pregnancy when conventional methods are unsuccessful. This umbrella term encompasses various fertility treatments, including in vitro fertilization (IVF), intrauterine insemination (IUI), and gamete and embryo manipulation. ART procedures involve the manipulation of both male and female reproductive components to facilitate conception.

The dynamic landscape of data flows within the healthcare sector, notably in the realm of ART, demands a nuanced understanding of the complex interplay between privacy regulations and medical practices. In this context, the Information Technology (Reasonable Security Practices And Procedures And Sensitive Personal Data Or Information) Rules, 2011, play a pivotal role, designating health information as "sensitive personal data or information" and underscoring the importance of safeguarding individuals' privacy. This sensitivity is particularly pronounced in the ART sector, where an array of personal data, ranging from medical records to genetic information, is collected and processed. The recent Assisted Reproductive Technology (Regulation) Act, 2021, in conjunction with the Digital Personal Data Protection Act, 2023, establishes a framework for the regulation of ART clinics and banks, presenting a layered approach to data protection.

A note on data generated by ART

Data flows in any sector are scarcely uniform and are often not easily classified into strait-jacket categories. Consequently, mapping and identifying data and its types becomes pivotal. Most data flows in the healthcare sector are believed to be highly sensitive and personal in nature, and a breach may severely compromise an individual's privacy and safety. The Information Technology (Reasonable Security Practices And Procedures And Sensitive Personal Data Or Information) Rules, 2011 (“SPDI Rules”) categorize any information pertaining to physical, physiological or mental health conditions, or to medical records and history, as “sensitive personal data or information”; this definition is broad enough to encompass any data collected by an ART facility or its equipment. This includes information collected during patient screening, such as data on ovulation and menstrual cycles, follicle and sperm counts, ultrasound results and blood work. It also includes the results of pre-implantation genetic testing of embryos for genetic abnormalities.

But data flows extend beyond medical procedures and technology alone. Health data also covers the medical procedures undertaken, the quantity of medicines and drugs administered during a procedure, the resulting side effects, recovery, etc. Processing this information may, in turn, generate further personal data points relating to an individual’s political affiliations, race, ethnicity, and genetic data such as biometrics and DNA. For instance, different ethnicities and races are seen to react differently to the same or similar medication and to have different propensities to genetic diseases. Further, data is collected not only by professionals but also by AI-enabled equipment that a facility may employ to render its services. Additionally, the dissemination of information under exceptional circumstances (e.g. a medical emergency) affects how data may be classified. Considerations are further nuanced when the fundamental right to identity of a child conceived and born via ART may conflict with a donor's fundamental right to privacy and anonymity.

Intersection of Privacy laws and ART laws:

In India, ART is regulated by the Assisted Reproductive Technology (Regulation) Act, 2021 (“ART Act”). Through this Act, the Union aims to regulate and supervise ART clinics and ART banks, prevent misuse, and ensure the safe and ethical practice of assisted reproductive technology services. Read with the Digital Personal Data Protection Act, 2023 (“DPDP Act”) and other ancillary guidelines, the two statutes provide a basic regulatory framework for the digital privacy of health-based apps.

The ART Act establishes a National Assisted Reproductive Technology and Surrogacy Registry (“National Registry”), which acts as a central database of all clinics and banks and the nature of their services. The Act also designates the National Assisted Reproductive Technology and Surrogacy Board (“National Board”) constituted under the Surrogacy (Regulation) Act, 2021 to monitor the implementation of the Act and advise the Central Government on policy matters. The National Board also supervises the functioning of the National Registry, liaises with State Boards and lays down a code of conduct for professionals working in ART clinics and banks. Under the DPDP Act, these bodies (i.e. the National Board, State Boards, ART clinics and banks) are most likely to be classified as data fiduciaries (primarily clinics and banks), data processors (possibly the National Board and State Boards), or a combination of both (such as any appropriate authority established under the ART Act to investigate complaints or to suspend or cancel the registration of clinics), depending on the nature of their work. If so classified, the duties and liabilities of data fiduciaries and processors would apply to these bodies. As a result, all of them would have to adopt Privacy Enhancing Technologies (PETs) and other organizational measures to comply with the privacy laws in place. This is arguably one of the most critical considerations for any ART facility, since any data it collects would be sensitive personal data pertaining to health, regulated by the SPDI Rules. These rules govern how sensitive personal data or information is to be collected, handled and processed.

The ART Act also independently sets out the duties of ART clinics and banks in the country. ART clinics and banks are required to inform the commissioning couple or woman of all procedures undertaken and of the costs, risks, advantages and side effects of the selected procedure. The Act also mandates that information collected by such clinics and banks not be disclosed to anyone except the National Registry database, in cases of medical emergency, or on the order of a court. Data collected by clinics and banks (including details of donor oocytes, sperm or embryos, used or unused) must be detailed and submitted to the National Registry online. ART banks are also required to collect donors' personal information, including name, Aadhaar number, address and other details. By mandating online submission, the ART Act is harmonized with the DPDP Act, which regulates all digital personal data and emphasises free and informed consent.

Conclusion

With more people actively opting for ART, data privacy becomes a vital consideration for all healthcare facilities and professionals. Safeguards are required not only at the corporate level but also at the governmental level. Notably, during the 262nd Session of the Rajya Sabha, the Ministry of Electronics and Information Technology reported 165 data breach incidents involving citizen data from the Central Identities Data Repository between January 2018 and October 2023, despite earlier public denials. This revelation calls into question the safety and integrity of data that may be submitted to the National Registry database, especially given the personal and sensitive nature of the information it aims to collate. At present, the ART Act is well supported by the DPDP Act. However, further judicial and legislative deliberation is required to effectively regulate and balance the interests of all stakeholders.

References

- The Information Technology (Reasonable Security Practices And Procedures And Sensitive Personal Data Or Information) Rules, 2011

- Caring for Intimate Data in Fertility Technologies https://dl.acm.org/doi/pdf/10.1145/3411764.3445132

- Digital Personal Data Protection Act, 2023

- https://www.wolterskluwer.com/en/expert-insights/pharmacogenomics-and-race-can-heritage-affect-drug-disposition