#FactCheck: A digitally altered video of actor Sebastian Stan shows him changing a ‘Tell Modi’ poster to one that reads ‘I Told Modi’ on a display panel.

Executive Summary:

A widely circulated video showing a poster with the words "I Told Modi" has gone viral and is being falsely linked to the April 2025 Pahalgam attack, in which terrorists killed 26 civilians. The altered Marvel Studios clip is allegedly meant to mock Operation Sindoor, the counterterrorism operation India launched in response to the attack. Because such misinformation spreads misleading propaganda and draws attention away from real events, it underscores how crucial it is to verify information before sharing it online.

Claim:

A man can be seen changing a poster that says "Tell Modi" to one that says "I Told Modi" in a widely shared viral video. This video allegedly makes reference to Operation Sindoor in India, which was started in reaction to the Pahalgam terrorist attack on April 22, 2025, in which militants connected to The Resistance Front (TRF) killed 26 civilians.

Fact check:

On further research, we found the original post on Marvel Studios' official X handle, which confirms that the circulating video has been altered using AI and does not reflect the authentic content.

We also ran the video through Hive Moderation, an AI-detection tool. Its analysis indicated that the video has been modified with AI-generated content and presents false or misleading information that does not reflect real events.

Furthermore, we found a Hindustan Times article discussing the mysterious reveal involving Hollywood actor Sebastian Stan.

Conclusion:

It is untrue to say that the "I Told Modi" poster is a component of a public demonstration. The text has been digitally changed to deceive viewers, and the video is manipulated footage from a Marvel film. The content should be ignored as it has been identified as false information.

- Claim: A viral video shows a man changing a "Tell Modi" poster to one that reads "I Told Modi", in reference to Operation Sindoor.

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Introduction:

The Ministry of Civil Aviation, GOI, established the initiative ‘DigiYatra’ to ensure hassle-free and health-risk-free journeys for travellers/passengers. The initiative uses a single token of face biometrics to digitally validate identity, travel, and health along with any other data needed to enable air travel.

Cybersecurity is a top priority for the DigiYatra platform administrators, with measures implemented to mitigate risks of data loss, theft, or leakage. With over 6.5 million users, DigiYatra is an important step forward for India, in the direction of secure digital travel with seamless integration of proactive cybersecurity protocols. This blog focuses on examining the development, challenges and implications that stand in the way of securing digital travel.

What is DigiYatra? A Quick Overview

DigiYatra is a flagship initiative by the Government of India to enable paperless travel, reducing identity checks for a seamless airport experience. This technology allows the entry of passengers to be automatically processed based on a facial recognition system at all the checkpoints at the airports, including main entry, security check areas, aircraft boarding, and more.

This technology makes the boarding process quick and seamless as each passenger needs less than three seconds to pass through every touchpoint. Passengers’ faces essentially serve as their documents (ID proof and if required, Vaccine Proof) and their boarding passes.

DigiYatra has also enhanced airport security as passenger data is validated by the Airlines Departure Control System. It allows only the designated passengers to enter the terminal. Additionally, the entire DigiYatra Process is non-intrusive and automatic. In improving long-standing security and operational airport protocols, the platform has also significantly improved efficiency and output for all airport professionals, from CISF personnel to airline staff members.

Policy Origins and Framework

Rooted in the Government of India's Digital India campaign and enabled by the National Civil Aviation Policy (NCAP) 2016, DigiYatra aims to modernise air travel by integrating Aadhaar-based passenger identification. While Aadhaar is currently the primary ID, efforts are underway to include other identification methods. The platform, supported by stakeholders like the Airports Authority of India (26%) and private airports (14.8% each), must navigate stringent cybersecurity demands. Compliance with the Digital Personal Data Protection Act, 2023, ensures the secure use of sensitive facial recognition data, while the Aircraft (Security) Rules, 2023, mandate robust interoperability and data protection mechanisms across stakeholders. DigiYatra also aspires to democratise digital travel, extending its reach to underserved airports and non-tech-savvy travellers. As India refines its cybersecurity and privacy frameworks, learning from global best practices is essential to safeguarding data and ensuring seamless, secure air travel operations.

International Practices

Global practices offer crucial lessons to strengthen DigiYatra's cybersecurity and streamline the seamless travel experience. Initiatives such as CLEAR in the USA and Seamless Traveller initiatives in Singapore offer actionable insights into further expanding the system to its full potential. CLEAR is operational in 58 airports and has more than 17 million users. Singapore has made Seamless Traveller active since the beginning of 2024 and aims to have a 95% shift to automated lanes by 2026.

Some additional measures that India can adopt from international initiatives are regular audits and updates to its cybersecurity policies. Further, India can aim for a cross-border policy for international travel. By implementing these recommendations, DigiYatra can not only improve data security and operational efficiency but also establish India as a leader in global aviation security standards, ensuring trust and reliability for millions of travellers.

CyberPeace Recommendations

Some recommendations for further improving upon our efforts for seamless and secure digital travel are:

- Strengthen the legislation on biometric data usage and storage.

- Collaborate with global aviation bodies to develop standardised operations.

- Adopt cybersecurity technologies, such as blockchain for immutable data records, alongside encryption standards, data minimisation practices, and anonymisation techniques.

- Foster a cybersecurity-first culture across aviation stakeholders.
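
To make the data minimisation recommendation concrete, here is a minimal Python sketch. It is not how DigiYatra works: the field names, the `ALLOWED_FIELDS` set, and the key are all hypothetical. It shows pseudonymisation with a keyed hash, which is a weaker relative of full anonymisation, combined with dropping every field a checkpoint does not need.

```python
import hashlib
import hmac

# Hypothetical: the only fields a checkpoint is assumed to need.
ALLOWED_FIELDS = {"flight_number", "travel_date"}


def pseudonymise(passenger_id: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest so the raw ID is never stored."""
    return hmac.new(secret_key, passenger_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimise(record: dict, secret_key: bytes) -> dict:
    """Keep only the allowed fields and replace the raw ID with a pseudonym."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["passenger_ref"] = pseudonymise(record["passenger_id"], secret_key)
    return reduced


# Illustrative record; every value here is made up.
record = {
    "passenger_id": "ID-1234",
    "name": "Example Traveller",
    "flight_number": "XX101",
    "travel_date": "2025-01-01",
}
key = b"demo-key-not-for-production"
stored = minimise(record, key)
```

In this sketch the stored record carries no name and no raw identifier, yet the same passenger always maps to the same reference as long as the key is unchanged. In practice the key itself would need to be stored and rotated securely.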

Conclusion

DigiYatra represents a transformative step in modernising India’s aviation sector by combining seamless travel with robust cybersecurity. Leveraging facial recognition and secure data validation enhances efficiency while complying with the Digital Personal Data Protection Act, 2023, and Aircraft (Security) Rules, 2023.

DigiYatra must address challenges like secure biometric data storage, adopt advanced technologies like blockchain, and foster a cybersecurity-first culture to reach its full potential. Expanding to underserved regions and aligning with global best practices will further solidify its impact. With continuous innovation and vigilance, DigiYatra can position India as a global leader in secure, digital travel.

References

- https://government.economictimes.indiatimes.com/news/governance/digi-yatra-operates-on-principle-of-privacy-by-design-brings-convenience-security-ceo-digi-yatra-foundation/114926799

- https://www.livemint.com/news/india/explained-what-is-digiyatra-how-it-will-work-and-other-questions-answered-11660701094885.html

- https://www.civilaviation.gov.in/sites/default/files/2023-09/ASR%20Notification_published%20in%20Gazette.pdf

Introduction

Cyber-attacks are a threat in this digital world that is not exclusive to any single country and that can significantly disrupt global movement, commerce, and international relations. All of this was experienced first-hand when a cyber-attack hit Heathrow, the busiest airport in Europe, and threw its electronic check-in and baggage systems into chaos. The chaos and delays were not confined to Heathrow: airports across Europe, including Brussels, Berlin, and Dublin, experienced delays and had to conduct manual check-ins for some flights, further indicating just how interconnected aviation is today. Though Heathrow assured passengers that the "vast majority of flights" would operate, hundreds were delayed or postponed for hours while passengers stood in queues, and the flight schedules of nearly every European airport were also negatively affected.

The Anatomy of the Attack

The attack specifically targeted Muse, software by Collins Aerospace built to allow various airlines to share check-in desks and boarding gates. The disruption, initially perceived as a technical issue, soon turned into a logistical nightmare, with airlines relying on Muse forced into laborious manual steps: hand-tagging luggage, verifying boarding passes over the phone, and manually boarding passengers. While British Airways managed to revert to a backup system, most other carriers at Heathrow and at partner airports elsewhere in Europe had to resort to improvised manual solutions.

The brunt was largely borne by the passengers. Stories emerged of travelers stranded on the tarmac, of elderly passengers who could barely walk being left without assistance, and of families missing important connections. It served to remind everyone that in the aviation world, with its schedules interlocked tightly across borders, even a localized system failure can snowball into a continental-level crisis.

Cybersecurity Meets Aviation Infrastructure

In the last two decades, aviation has become one of the most digitally dependent industries in the world. From booking systems and baggage handling to navigation and air traffic control, digital systems are the invisible scaffold on which flight operations are supported. Though this digitalization has increased the scale of operations and enhanced efficiency, it has also created many avenues for cyber threats. Cyber attackers increasingly realize that targeting aviation is not just about money but about leverage. Interfering with the check-in system of a major hub like Heathrow causes more than financial disruption; it causes panic and hits the headlines, which makes it all the more attractive to criminal gangs and state-sponsored threat actors.

The Heathrow incident resembles the worldwide IT outage of July 2024, when a botched CrowdStrike update disrupted flight operations. Both prove the brittleness of digital dependencies in aviation, where a single point of failure can trigger uncontrollable ripple effects spanning multiple countries. Unlike conventional cyber incidents contained within corporate networks, cyber-attacks in aviation spill over into the public sphere in real time, disturbing millions of lives.

Response and Coordination

Heathrow Airport first added extra employees to assist with manual check-in and told passengers to check flight statuses before traveling. The UK's National Cyber Security Centre (NCSC) collaborated with Collins Aerospace, the Department for Transport, and law enforcement agencies to investigate the extent and source of the breach. Meanwhile, the European Commission said in a statement that it was "closely following the development" of the cyber incident, while assuring passengers that no evidence of a "widespread or serious" breach had been observed.

According to passengers, the reality was quite different. Massive queues, bewildering announcements, and unclear departure time confirmations created an atmosphere of chaos. The dissonance between the reassurances from official channels and what passengers actually experienced needs to be resolved. During such incidents, both technical restoration and a clear flow of communication are essential to retaining public trust.

Attribution and the Shadow of Ransomware

As with many cyber-attacks, questions about attribution arose quite promptly. Rumours of hackers allegedly working for the Kremlin began circulating almost as soon as the attack was recognized, but cybersecurity experts justifiably advise against drawing conclusions hastily. Ransomware gangs seeking extortion are the most likely culprits, though state actors cannot be ruled out, especially considering Russian military activity in European airspace. Meanwhile, Collins Aerospace has refused to comment on the attack, its precise nature, or where it originated, underscoring an inherent difficulty in cyber attribution.

What is clear is the way these attacks give criminals leverage and money. In previous ransomware attacks against critical infrastructure, cybercriminal gangs have extorted millions of dollars from their victims. In aviation, the stakes grow exponentially, not only in terms of money but also in terms of national security, diplomatic relations, and human safety.

Broader Implications for Aviation Cybersecurity

This incident raises several core resilience issues within aviation systems. Traditionally, airports and airlines placed a premium on physical security, but today digital resilience has become equally important. Systems such as Muse, which bind multiple airlines into shared infrastructure, offer efficiency but at the same time concentrate risk. A cyber disruption in one place can cascade across dozens of carriers and multiple airports, amplifying the scale of that disruption.

The case also brings forth redundancy and contingency planning as an urgent concern. While British Airways was able to fall back on backup systems, most other airlines could not claim that advantage. It is high time that digital redundancies, whether in the form of parallel systems, isolated backups, or even AI-driven incident response frameworks, are built into aviation as standard practice.
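
The idea of falling back from a shared platform to an isolated backup can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the three system functions and the passenger reference are hypothetical stand-ins, not a description of how Muse, British Airways, or any airport actually handles failover.

```python
from typing import Callable, Sequence


class AllSystemsDown(Exception):
    """Raised when every configured check-in system has failed."""


def check_in_with_fallback(passenger: str, systems: Sequence[Callable[[str], str]]) -> str:
    """Try each check-in system in priority order and return the first success."""
    failures = 0
    for system in systems:
        try:
            return system(passenger)
        except ConnectionError:
            failures += 1  # this system is unavailable, move on to the next one
    raise AllSystemsDown(f"{failures} systems failed for {passenger}")


# Hypothetical stand-ins for a shared platform, an isolated backup, and a manual desk.
def shared_platform(passenger: str) -> str:
    raise ConnectionError("shared platform unreachable")


def isolated_backup(passenger: str) -> str:
    return f"{passenger}: checked in via backup"


def manual_desk(passenger: str) -> str:
    return f"{passenger}: checked in manually"


result = check_in_with_fallback("PAX-001", [shared_platform, isolated_backup, manual_desk])
```

Here the shared platform fails, so the call is served by the isolated backup and the manual desk is never reached. The design point is that the fallback order is decided in advance rather than improvised during an incident.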

On the policy plane, this incident draws attention to the necessity for international collaboration. Aviation is inherently transnational, and cyber incidents in this domain cannot be handled by national agencies alone. Eurocontrol, the European Commission, and cross-border cybersecurity task forces must spearhead this effort to ensure aviation-wide resilience.

Human Stories Amid a Digital Crisis

Beyond technical jargon and policy responses, the human stories coming out of Heathrow had perhaps the greatest impact. Passengers spoke of hours spent queuing, of trying to reach funerals, and of being hungry and exhausted as they waited for their flights. For many, the cyber-attack was no mere headline; it was a living reality of disruption.

These stories reflect the fact that cybersecurity is no abstraction; it touches people's lives. In critical sectors such as aviation, one hour of disruption means missed connections for passengers, lost revenue for airlines, and immense emotional stress. Crisis management must therefore entail not only technical recovery but also passenger care, communication, and support on the ground.

Conclusion

The cybersecurity crisis at Heathrow and other European airports underscores the threat that cyber disruption poses to modern aviation. Because airport processes are increasingly interconnected, any cyber disruption, no matter how small, can affect schedules regionally or on other continents, and can even threaten lives. The events confirm a few things: a resilient system should prioritize redundancy, not just efficiency; international networking and collaboration are paramount; and communicating with the traveling public is just as important as the technical recovery process, if not more so.

As governments, airlines, and technology providers analyse the disruption, the question is no longer whether aviation can withstand cyber threats, but how well prepared it will be to defend itself against such attacks. The Heathrow crisis is a reminder that the stakes of cybersecurity are not just about data breaches or the outright theft of money but also about attackers taking down the very systems that keep global mobility in motion. The aviation industry is now being tested on whether it can turn this disruption into an opportunity to fortify its digital defences and start preparing for the next inevitable disruption.

References

- https://www.bbc.com/news/articles/c3drpgv33pxo

- https://www.theguardian.com/business/2025/sep/21/delays-continue-at-heathrow-brussels-and-berlin-airports-after-alleged-cyber-attack

- https://www.reuters.com/business/aerospace-defense/eu-agency-says-third-party-ransomware-behind-airport-disruptions-2025-09-22/

A photo featuring Bollywood actor Abhishek Bachchan and actress Aishwarya Rai is being widely shared on social media. In the image, the Kedarnath Temple is clearly visible in the background. Users are claiming that the couple recently visited the Kedarnath shrine for darshan.

Cyber Peace Foundation’s research found the viral claim to be false. Our research revealed that the image of Abhishek Bachchan and Aishwarya Rai is not real, but AI-generated, and is being misleadingly shared as a genuine photograph.

Claim

On January 14, 2026, a user on X (formerly Twitter) shared the viral image with a caption suggesting that all rumours had ended and that the couple had restarted their life together. The post further claimed that both actors were seen smiling after a long time, implying that the image was taken during their visit to Kedarnath Temple.

The post has since been widely circulated on social media platforms.

Fact Check:

To verify the claim, we first conducted a keyword search on Google related to Abhishek Bachchan, Aishwarya Rai, and a Kedarnath visit. However, we did not find any credible media reports confirming such a visit.

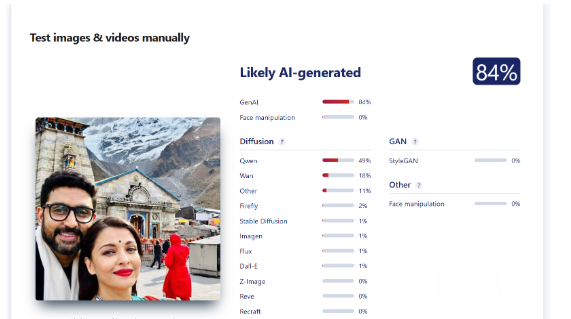

On closely examining the viral image, we noticed several visual inconsistencies that raised suspicion about it being artificially generated. To confirm this, we scanned the image using the AI detection tool Sightengine. According to the tool's analysis, the image is 84 percent likely to be AI-generated.

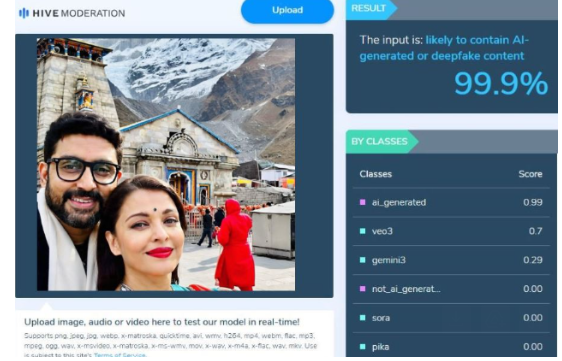

Additionally, we scanned the same image using another AI detection tool, HIVE Moderation. The results showed an even stronger indication, rating the image as 99 percent likely to be AI-generated.

Conclusion

Our research confirms that the viral image showing Abhishek Bachchan and Aishwarya Rai at Kedarnath Temple is not authentic. The picture is AI-generated and is being falsely shared on social media to mislead users.