#FactCheck - Viral Video Falsely Attributes Statements on ‘Operation Sindoor’ to Army Chief

Executive Summary

A video widely circulated on social media claims to show a confrontation between a Zee News journalist and Chief of Army Staff (COAS) General Upendra Dwivedi during the Indian Army’s Annual Press Briefing 2026. In the video, General Dwivedi appears to make sensitive remarks about ‘Operation Sindoor’, including claims that the operation was still ongoing and that diplomatic intervention by US President Donald Trump had restricted India’s military response. Several social media users shared the clip while questioning the Indian Army’s operational decisions and demanding accountability over the alleged remarks.

CyberPeace found the video to be misleading and digitally manipulated. The visuals are taken from the Indian Army’s Annual Press Briefing 2026, but the audio was artificially generated and added later to misinform viewers. The Army Chief made no remarks about diplomatic interference or limitations on military action during the briefing.

Claim:

An X (formerly Twitter) user, Abbas Chandio (@AbbasChandio__), shared the video on January 14, asserting that it showed a Zee News journalist questioning the Army Chief about the status and outcomes of ‘Operation Sindoor’ during a recent press conference. In the clip, the journalist is purportedly heard challenging the Army Chief over his earlier statement that the operation was “still ongoing,” while the COAS is allegedly heard responding that diplomatic intervention during the conflict limited the Army’s ability to pursue further military action. Here is the link and archive link to the post, along with a screenshot.

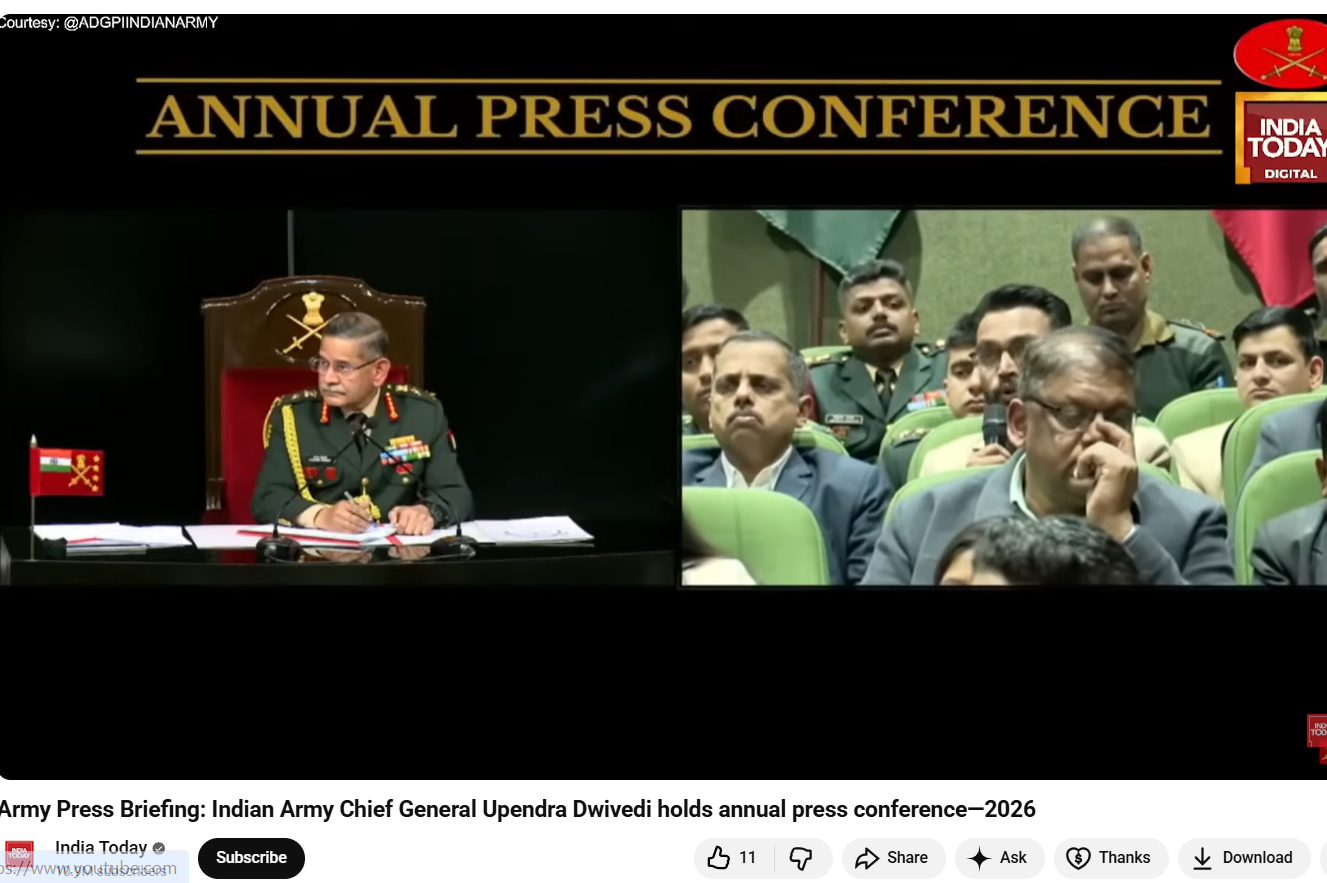

Fact Check:

A reverse image search of the viral clip led to an extended version of the footage uploaded on the official YouTube channel of India Today. The original video was identified as coverage of the Indian Army’s Annual Press Conference 2026, held on January 13 in New Delhi and addressed by COAS General Upendra Dwivedi. Upon reviewing the original press briefing footage, CyberPeace found no instance of a Zee News journalist questioning the Army Chief about ‘Operation Sindoor’. None of the statements attributed to General Dwivedi in the viral clip appear in the footage either.

In the authentic footage, journalist Anuvesh Rath was seen raising questions related to defence procurement and modernization, not military operations or diplomatic interventions. Here is the link to the original video, along with a screenshot.

To further verify the claim, CyberPeace extracted the audio track from the viral video and analysed it using the AI-based voice detection tool Aurigin. The analysis indicated that the voice heard in the clip was artificially generated. This confirmed that, while genuine visuals from the Army’s official press briefing were used, a fabricated audio track had been overlaid to falsely attribute controversial statements to the Army Chief and a Zee News journalist.
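
For readers who want to reproduce the audio-extraction step, the following is a minimal sketch in Python, assuming the ffmpeg command-line tool is installed. It is not CyberPeace’s actual workflow: the file names, the helper functions `build_extract_command` and `extract_audio`, and the choice of 16 kHz mono WAV output are all illustrative assumptions. The detection step itself is not shown, since the article does not describe how Aurigin was invoked.

```python
import subprocess
from pathlib import Path


def build_extract_command(video_path: str, audio_path: str) -> list[str]:
    """Build an ffmpeg command that drops the video stream and writes WAV audio.

    Hypothetical helper: the output settings below are assumptions, not
    requirements of any particular detection tool.
    """
    return [
        "ffmpeg",
        "-y",                     # overwrite the output file if it already exists
        "-i", video_path,         # input: the downloaded clip (hypothetical path)
        "-vn",                    # discard the video stream, keep audio only
        "-acodec", "pcm_s16le",   # uncompressed 16-bit PCM
        "-ar", "16000",           # 16 kHz sample rate (assumed choice)
        "-ac", "1",               # downmix to mono
        audio_path,               # output: the extracted audio track
    ]


def extract_audio(video_path: str, audio_path: str) -> Path:
    """Run ffmpeg and return the path of the extracted audio file."""
    subprocess.run(build_extract_command(video_path, audio_path), check=True)
    return Path(audio_path)
```

Calling `extract_audio("viral_clip.mp4", "viral_clip.wav")` would write the audio track to a separate file, which could then be submitted to a voice-detection tool of the analyst’s choice.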

Conclusion

CyberPeace concludes that the viral video claiming to show a discussion between a Zee News journalist and Chief of Army Staff General Upendra Dwivedi on ‘Operation Sindoor’ is misleading and digitally manipulated. Although the visuals were sourced from the Indian Army’s Annual Press Briefing 2026, the audio was artificially created and added later to misinform viewers. The Army Chief did not make any remarks regarding diplomatic interference or limitations on military action during the briefing. The video is a clear case of digital manipulation and misinformation, aimed at creating confusion and casting doubt on the Indian Army’s official position.