#FactCheck - Viral Photo of Dilapidated Bridge Misattributed to Kerala, Originally from Bangladesh

Executive Summary:

A viral photo on social media claims to show a ruined bridge in Kerala, India. However, a fact check shows that the bridge is in Amtali, Barguna district, Bangladesh. A reverse image search of the picture led to a Bengali news article detailing the bridge's critical condition. The bridge was built between 2002 and 2006 over Jugia Khal in Arpangashia Union. It has never been repaired, sees recurrent accidents, and is at risk of collapse, which would disrupt local connectivity. Thus, the social media claims are false and misleading.

Claims:

Social media users are sharing a photo that they claim shows a ruined bridge in Kerala, India.

Fact Check:

On receiving the posts, we ran a reverse image search, which led to a Bengali news website named Manavjamin, where an article is titled “19 dangerous bridges in Amtali, lakhs of people in fear”. The picture on this website is similar to the viral image. On reading the whole article, we found that the bridge is located in the Amtali sub-district of Barguna district, Bangladesh.

Taking a cue from this, we then searched for the bridge in that region. We found a similar bridge at the same location in Amtali, Bangladesh.

According to the article, the 40-meter bridge over Jugia Khal in Arpangashia Union, Amtali, was built between 2002 and 2006 and has never been properly repaired. It is in a critical condition, causing frequent accidents and risking collapse. If the bridge collapses, it will disrupt communication between multiple villages and the upazila town. Residents have made temporary repairs.

Hence, the claims made by social media users are fake and misleading.

Conclusion:

In conclusion, the viral photo claiming to show a ruined bridge in Kerala is actually from Amtali, Barguna district, Bangladesh. The bridge is in a critical state, with frequent accidents and the risk of collapse threatening local connectivity. Therefore, the claims made by social media users are false and misleading.

- Claim: A viral image shows a ruined bridge in Kerala, India.

- Claimed on: Facebook

- Fact Check: Fake & Misleading

Related Blogs

Introduction

A hacking operation has corrupted data on Madhya Pradesh's e-Nagarpalika portal, a vital online platform for paying civic taxes that serves 413 towns and cities in the state. Due to this serious security violation, the portal has been shut down. The incident occurred in December 2023. It affects citizens' access to vital online services such as property, water, and municipal tax payments, as well as the issuing of death certificates and certain other documents offered via the online portal. Ransomware is a type of malware that encrypts a victim's files and data, making them inaccessible unless the attacker is paid a ransom. When ransomware first appeared, encryption was its main method of denying victims access to their data.

The Intrusion and Database Corruption: Exposing the Breach's Scope

The extent of the assault on the e-Nagarpalika portal was revealed by the Principal Secretary of the Urban Administration and Housing Department of Madhya Pradesh. Cybercriminals carried out a highly skilled assault that corrupted the data infrastructure covering all 413 towns served by the website.

This breach represents a thorough infiltration into the core of the electronic civic taxation system, not just a passing disruption. The attackers compromised the integrity of the data, raising questions about the safeguarding of private citizen data. The penetration reaches vital city services and is prompting a reassessment of the cybersecurity safeguards currently in place.

In addition to raising concerns about the privacy of personal information, the compromised system casts doubt on the availability of crucial municipal services. Among the vital services affected by this cyberattack are marriage certificates, birth and death certificates, and the handling of property, water, and municipal taxes.

This incident highlights the weaknesses of the electronic systems that form the foundation of contemporary civic services. Beyond the attack's immediate interruption, citizens now have to deal with concerns about the security of their information and the availability of essential services. The incident is a clear reminder of the urgent need for robust security safeguards as authorities work to contain the consequences and begin the process of restoration.

Offline Protections in Place

The concerned authority informed the public that the offline data, which is backed up to tape every three days, is secure despite the online attack. This preventive measure underlines how crucial offline backups are to lessening the effects of these kinds of cyberattacks. The decision to keep the e-Nagarpalika platform offline until further notice highlights how serious the matter is and how urgently extensive reconstruction must be done to restore the online services on offer.
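To make the three-day cadence concrete, the following is a minimal sketch of how one might estimate the gap between the last offline backup and an incident, that is, the window of records that may need to be re-entered after a restore. The function names and the dates in the usage note are hypothetical; only the three-day interval comes from the authority's statement.

```python
from datetime import datetime, timedelta

# Assumption: backups run on a fixed three-day cadence, as stated by the authority.
BACKUP_INTERVAL = timedelta(days=3)


def latest_backup_before(first_backup: datetime, incident: datetime) -> datetime:
    """Return the most recent scheduled backup at or before the incident."""
    if incident < first_backup:
        raise ValueError("incident predates the first backup")
    # Dividing one timedelta by another with // gives a whole number of cycles.
    completed_cycles = (incident - first_backup) // BACKUP_INTERVAL
    return first_backup + completed_cycles * BACKUP_INTERVAL


def data_loss_window(first_backup: datetime, incident: datetime) -> timedelta:
    """Time between the last backup and the incident."""
    return incident - latest_backup_before(first_backup, incident)
```

For example, with a hypothetical first backup on 1 December 2023 and an incident at noon on 21 December 2023, `latest_backup_before` returns 19 December 2023 and `data_loss_window` returns two and a half days. With this cadence the window can never reach three full days.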

Effect on Civic Services

The e-Nagarpalika website is crucial to providing online municipal services, serving as an invaluable resource for citizens to obtain necessary paperwork and carry out a range of transactions. Civic bodies have been told to function offline, and the portal will remain unavailable until the infrastructure is fully operational. This interruption raises concerns about the delays and obstacles citizens may face in accessing basic services during this time.

Examination and Quality Control

Information technology specialists are working to investigate the malware and recover the website, in coordination with the Madhya Pradesh State Electronics Development Corporation Limited, the state's cyber police, and the Indian Computer Emergency Response Team (CERT-In). Reassuringly for affected citizens, authorities note that there is currently no evidence of data leaks arising from the hack.

Conclusion

The cyberattack on the e-Nagarpalika portal in Madhya Pradesh exposes the weakness of computer networks. It has disrupted essential public services offered via the online portal. The hack, which corrupted data and interfered with vital services, emphasises how urgently strong security precautions are needed. The incident is a clear reminder of the need to strengthen technology as authorities investigate and attempt to restore the system. One bright spot is that the offline backups in place highlight the significance of backup plans in reducing the impact of cyberattacks. The ongoing reconstruction activities demonstrate the commitment to protecting public data and maintaining the continuity of essential city operations.

References

- https://government.economictimes.indiatimes.com/tag/cyber+attack

- https://www.techtarget.com/searchsecurity/definition/ransomware#:~:text=Ransomware%20is%20a%20type%20of,accessing%20their%20files%20and%20systems.

- https://www.business-standard.com/india-news/mp-s-e-nagarpalika-portal-suffers-cyber-attack-data-corrupted-officials-123122300519_1.html

- https://www.freepressjournal.in/bhopal/mp-govts-e-nagar-palika-portal-hacked-data-of-over-400-cities-leaked

Overview:

In today’s digital landscape, safeguarding personal data and communications is more crucial than ever. WhatsApp, as one of the world’s leading messaging platforms, consistently enhances its security features to protect user interactions, offering a seamless and private messaging experience.

App Lock: Secure Access with Biometric Authentication

To fortify security at the device level, WhatsApp offers an app lock feature, enabling users to protect their app with biometric authentication such as fingerprint or Face ID. This feature ensures that only authorized users can access the app, adding an additional layer of protection to private conversations.

How to Enable App Lock:

- Open WhatsApp and navigate to Settings.

- Select Privacy.

- Scroll down and tap App Lock.

- Activate Fingerprint Lock or Face ID and follow the on-screen instructions.

Chat Lock: Restrict Access to Private Conversations

WhatsApp allows users to lock specific chats, moving them to a secured folder that requires biometric authentication or a passcode for access. This feature is ideal for safeguarding sensitive conversations from unauthorized viewing.

How to Lock a Chat:

- Open WhatsApp and select the chat to be locked.

- Tap on the three dots (Android) or More Options (iPhone).

- Select Lock Chat.

- Enable the lock using Fingerprint or Face ID.

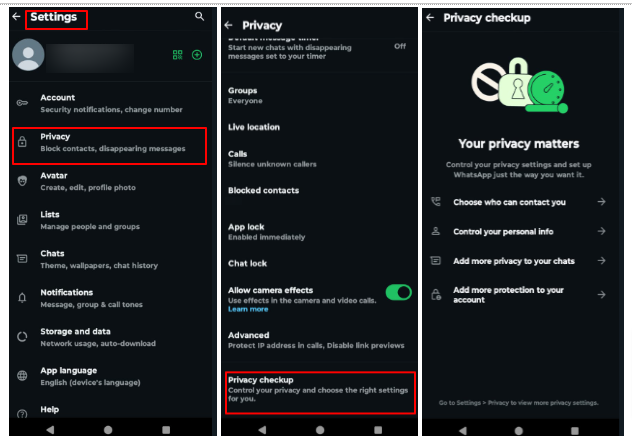

Privacy Checkup: Strengthening Security Preferences

The privacy checkup tool assists users in reviewing and customizing essential security settings. It provides guidance on adjusting visibility preferences, call security, and blocked contacts, ensuring a personalized and secure communication experience.

How to Run Privacy Checkup:

- Open WhatsApp and navigate to Settings.

- Tap Privacy.

- Select Privacy Checkup and follow the prompts to adjust settings.

Automatic Blocking of Unknown Accounts and Messages

To combat spam and potential security threats, WhatsApp automatically restricts unknown accounts that send excessive messages. Users can also manually block or report suspicious contacts to further enhance security.

How to Manage Blocking of Unknown Accounts:

- Open WhatsApp and go to Settings.

- Select Privacy.

- Tap Advanced.

- Enable Block unknown account messages.

IP Address Protection in Calls

To prevent tracking and enhance privacy, WhatsApp provides an option to hide IP addresses during calls. When enabled, calls are routed through WhatsApp’s servers, preventing location exposure via direct connections.

How to Enable IP Address Protection in Calls:

- Open WhatsApp and go to Settings.

- Select Privacy, then tap Advanced.

- Enable Protect IP Address in Calls.

Disappearing Messages: Auto-Deleting Conversations

Disappearing messages help maintain confidentiality by automatically deleting sent messages after a predefined period—24 hours, 7 days, or 90 days. This feature is particularly beneficial for reducing digital footprints.

How to Enable Disappearing Messages:

- Open the chat and tap the Chat Name.

- Select Disappearing Messages.

- Choose the preferred duration before messages disappear.
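As an illustration of what a disappearing-message timer means in practice, here is a minimal sketch that computes when a message would expire under each of the three durations named above. It is not WhatsApp's implementation; the option labels and function names are hypothetical and chosen only for this example.

```python
from datetime import datetime, timedelta, timezone

# The three durations come from the text above; the dictionary keys are
# hypothetical labels, not identifiers used by WhatsApp.
TIMER_OPTIONS = {
    "24_hours": timedelta(hours=24),
    "7_days": timedelta(days=7),
    "90_days": timedelta(days=90),
}


def expires_at(sent_at: datetime, timer: str) -> datetime:
    """Return the moment a message sent at `sent_at` would disappear."""
    if timer not in TIMER_OPTIONS:
        raise ValueError(f"unknown timer option: {timer}")
    return sent_at + TIMER_OPTIONS[timer]


def is_expired(sent_at: datetime, timer: str, now: datetime) -> bool:
    """True once the chosen duration has fully elapsed."""
    return now >= expires_at(sent_at, timer)
```

For a message sent at 09:00 UTC on 1 January 2024 with the 7-day timer, `expires_at` returns 09:00 UTC on 8 January 2024. A shorter timer reduces how long a message stays on the devices in the chat, which is the "digital footprint" benefit described above.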

View Once: One-Time Access to Media Files

The ‘View Once’ feature ensures that shared photos and videos can only be viewed a single time before being automatically deleted, reducing the risk of unauthorized storage or redistribution.

How to Send View Once Media:

- Open a chat and tap the attachment icon.

- Choose Camera or Gallery to select media.

- Tap the ‘1’ icon before sending the media file.

Group Privacy Controls: Manage Who Can Add You

WhatsApp provides users with the ability to control group invitations, preventing unwanted additions by unknown individuals. Users can restrict group invitations to ‘Everyone,’ ‘My Contacts,’ or ‘My Contacts Except…’ for enhanced privacy.

How to Adjust Group Privacy Settings:

- Open WhatsApp and go to Settings.

- Select Privacy and tap Groups.

- Choose from the available options: Everyone, My Contacts, or My Contacts Except.

Conclusion

WhatsApp continuously enhances its security features to protect user privacy and ensure safe communication. With tools like App Lock, Chat Lock, Privacy Checkup, IP Address Protection, and Disappearing Messages, users can safeguard their data and interactions. Features like View Once and Group Privacy Controls further enhance confidentiality. By enabling these settings, users can maintain a secure and private messaging experience, effectively reducing risks associated with unauthorized access, tracking, and digital footprints. Stay updated and leverage these features for enhanced security.

Introduction

The world has been riding the wave of technological advancements, and the fruits it has borne have impacted our lives. Technology, by its nature, cannot be classified as safe or unsafe; it is the application and use of technology that creates threats. It is at times like this that the importance of a policy framework for cyberspace becomes clear. Any technology can be governed only by means of policies and laws. In this blog, we explore the issues raised by the EU for the tech giants and why the Indian Government is looking into probing WhatsApp.

EU on Big Tech

The EU has long been seen as a strong policymaker for cyberspace, as is evident from the scope, extent and compliance requirements of the GDPR. This data protection regulation is the holy grail for data protection laws worldwide. Apart from the GDPR, the EU has always maintained strict compliance requirements for big tech, as most of these companies originated outside Europe, and the rights of EU citizens take priority above anything else.

New Draft Notification

According to the draft of the new notification, Amazon, Google, Microsoft and other non-European Union cloud service providers looking to secure an EU cybersecurity label to handle sensitive data can only do so via a joint venture with an EU-based company. The document adds that the cloud service must be operated and maintained from the EU, all customer data must be stored and processed in the EU, and EU laws take precedence over non-EU laws regarding the cloud service provider. Certified cloud services are operated only by companies based in the EU, with no entity from outside the EU having effective control over the CSP (cloud service provider) to mitigate the risk of non-EU interfering powers undermining EU regulations, norms and values.

This move from the EU is still in the draft phase; however, it is expected to come into force soon, as data breaches affecting EU citizens have been reported on numerous occasions. The document said the tougher rules would apply to personal and non-personal data of particular sensitivity where a breach may have a negative impact on public order, public safety, human life or health, or the protection of intellectual property.
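The conditions in the draft can be read as a simple checklist. The sketch below encodes them as an illustration only, assuming each condition can be reduced to a yes/no answer; the class, field, and function names are hypothetical and do not come from the draft itself.

```python
from dataclasses import dataclass


@dataclass
class CloudProvider:
    # Hypothetical yes/no flags, one per condition described in the draft.
    name: str
    operated_from_eu: bool
    data_stored_and_processed_in_eu: bool
    eu_law_takes_precedence: bool
    non_eu_entity_has_effective_control: bool


def unmet_requirements(provider: CloudProvider) -> list[str]:
    """List the draft conditions this provider does not yet satisfy."""
    checks = [
        (provider.operated_from_eu,
         "service must be operated and maintained from the EU"),
        (provider.data_stored_and_processed_in_eu,
         "customer data must be stored and processed in the EU"),
        (provider.eu_law_takes_precedence,
         "EU laws must take precedence over non-EU laws"),
        (not provider.non_eu_entity_has_effective_control,
         "no non-EU entity may have effective control"),
    ]
    return [message for satisfied, message in checks if not satisfied]
```

An empty list means every condition modelled here is met. A non-EU provider that stores data in the EU but retains effective control would still fail the last check, which is why the draft points such providers towards a joint venture with an EU-based company.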

How will it secure the netizens?

Since the EU has been the leading policymaker in cyberspace, its rules and policies are often replicated around the world. Hence this move comes at a critical time, as the EU looks to safeguard EU netizens and the EU cybersecurity industry by allowing them to collaborate with big tech while maintaining compliance. Cloud services can be protected by this mechanism, which should mean fewer data breaches and, in turn, a dip in cybercrimes and attacks.

The Indian Govt on WhatsApp

The Indian Government has decided to probe WhatsApp and its privacy settings. An Indian WhatsApp user tweeted a screenshot that showed WhatsApp accessing the phone’s mic even when the phone was not in use and the app was not open, even in the background. The Meta-owned messaging platform has nearly 487 million users in India, making it its biggest market. The 2018 judgement on WhatsApp and its privacy issues was a landmark judgement, but the platform is in violation of the same.

The MoS, Ministry of Electronics and Information Technology, Rajeev Chandrasekhar, has already tweeted that the issue will be looked into and that the platform will be penalised if it is found violating the guidelines. The Digital Personal Data Protection Bill is yet to be tabled in Parliament. Still, with the draft bill already public, big platforms must maintain a code of conduct so that they are compliant when the bill turns into an Act.

Threats for Indian Users

Indian WhatsApp users form the biggest user base in the world, and still they are vulnerable to attacks on WhatsApp and now from WhatsApp itself. Netizens face the following potential threats:

- Data breaches

- Identity theft

- Phishing scams

- Unconsented data utilisation

- Violation of Right to Privacy

- Unauthorised flow of data outside India

- Selling of data to a third party without consent

Indian netizens need to stay wary of these issues and many more by practising basic cyber safety and security protocols and by keeping a check on the permissions granted to apps, so as to keep track of their digital footprint.

Conclusion

Whether it’s the EU or the Indian Government, it is pertinent to understand that the world powers are all working towards creating a safe and secure cyberspace for their netizens. The move made by the EU will act as a catalyst for change at a global level: once the EU enforces the policy, other countries are likely to replicate it to safeguard their own cyber interests, assets and netizens. The proactive stance of the Indian Government is a crucial sign that things will not remain the same in the Indian cyber ecosystem, and it is upon the platforms and companies to ensure compliance, even in the absence of strong legislation for cyberspace. The government is taking steps to safeguard Indian netizens, an aim that lies in the soul and spirit of the new Digital India Bill, which will govern cyberspace in the near future. Until then, in order to maintain synergy and equilibrium, it is pertinent for the platforms to comply with the principles of natural justice.