#FactCheck - Viral Images of Indian Army Eating Near Border Area Revealed as AI-Generated Fabrication

Executive Summary:

Viral social media posts circulating several photos of Indian Army soldiers eating lunch in extremely hot weather near the border area in Barmer/Jaisalmer, Rajasthan, have been found to be AI-generated and false. The images contain several flaws, such as missing shadows, distorted hand positioning, a misrepresented Indian flag and inaccurate body features of the soldiers. AI detection tools were also used to confirm this finding. Before sharing any picture on social media, it is necessary to verify its authenticity to avoid spreading misinformation.

Claims:

Photographs circulating on social media claim to show Indian Army soldiers having lunch in extreme heat at the border area near the district of Barmer/Jaisalmer, Rajasthan.

Fact Check:

On studying the images, we observed several anomalies commonly found in AI-generated images: inaccurate body features of the soldiers, a national flag with the wrong combination of colors, an unusually sized spoon, and the absence of the soldiers' shadows.

Additionally, the flag on the soldiers' shoulders appears incorrect and does not follow the traditional tricolor pattern. Another anomaly, a soldier with three arms, strengthens the case that the images are AI-generated.

Furthermore, we used the HIVE AI image detection tool, which found that each photo was generated using artificial intelligence.

We also checked the images with another AI image detection tool, Isitai, which likewise flagged them as AI-generated.

After thorough analysis, we found that the claim made in each of the viral posts is misleading and fake: the recent viral images of Indian Army soldiers eating food on the border on an extremely hot afternoon in Barmer were created using an AI image generation tool.

Conclusion:

In conclusion, analysis of the viral photographs claiming to show Indian Army soldiers having lunch in scorching heat in Barmer, Rajasthan reveals many anomalies consistent with AI-generated images. The absence of shadows, distorted hand placement, the incorrect depiction of the Indian flag and the presence of an extra arm on a soldier all indicate that the images were artificially created. The claim that these images capture real events is therefore debunked, underscoring the importance of analysing and fact-checking content before sharing it in an era of widespread digital misinformation.

- Claim: The photos show Indian Army soldiers having their lunch in extreme heat near the border area in Barmer/Jaisalmer, Rajasthan.

- Claimed on: X (formerly known as Twitter), Instagram, Facebook

- Fact Check: Fake & Misleading

Related Blogs

Introduction

As the sun rises on the Indian subcontinent, a nation teeters on the precipice of a democratic exercise of colossal magnitude. The Lok Sabha elections, a quinquennial event that mobilises the will of nearly a billion voters, are not just a testament to the robustness of India's democratic fabric but also a crucible where the veracity of information is put to the sternest of tests. In this context, the World Economic Forum's 'Global Risks Report 2024' emerges as a harbinger of a disconcerting trend: the spectre of misinformation and disinformation that threatens to distort the electoral landscape.

The report, a carefully crafted document that shares the insights of 1,490 experts from academia, government, business and civil society, paints a tableau of the global risks that loom large over the next decade. These risks, spawned by the churning cauldron of rapid technological change, economic uncertainty, a warming planet and simmering conflict, are not just abstract threats but tangible realities that could shape the future of nations.

India’s Electoral Malaise

India, as it strides towards the general elections scheduled for the spring of 2024, finds itself in the vortex of this storm. The WEF survey places India at the zenith of vulnerability to disinformation and misinformation, a dubious distinction that underscores the challenges facing the world's largest democracy. The report describes misinformation and disinformation as false information, whether inadvertent or deliberate, that is dispersed through the arteries of media networks and skews public opinion towards a pervasive distrust in facts and authority. This encompasses a panoply of deceptive content: fabricated, false, manipulated and imposter.

The United States, the European Union and the United Kingdom are also ensnared, to varying degrees, in this web of misinformation. South Africa, another nation on the cusp of its own electoral journey, is ranked 22nd, a reflection of the global reach of this phenomenon. The findings, derived from a survey conducted over the autumnal weeks of September to October 2023, reveal a world grappling with the shadowy forces of untruth.

Global Scenario

The report prognosticates that as nearly three billion people across diverse economies (Bangladesh, India, Indonesia, Mexico, Pakistan, the United Kingdom and the United States) prepare to exercise their electoral rights, the rampant use of misinformation and disinformation, and of the tools that propagate them, could erode the legitimacy of the governments they elect. The repercussions could be dire, ranging from violent protests and hate crimes to civil confrontation and terrorism.

Beyond the electoral arena, the fabric of reality itself is at risk of becoming increasingly polarised, seeping into the public discourse on issues as varied as public health and social justice. As the bedrock of truth is undermined, the spectre of domestic propaganda and censorship looms large, potentially empowering governments to wield control over information based on their own interpretation of 'truth.'

The report further warns that disinformation will become increasingly personalised and targeted, homing in on specific groups such as minority communities and spreading through more opaque messaging platforms like WhatsApp or WeChat. This tailored approach to deception signifies a new frontier in the battle against misinformation.

In a world where societal polarisation and economic downturn are seen as central risks in an interconnected 'risks network,' misinformation and disinformation have ascended rapidly to the top of the threat hierarchy. Two-thirds of the report's respondents cite extreme weather, AI-generated misinformation and disinformation, and societal and/or political polarisation as the most pressing global risks, followed closely by the 'cost-of-living crisis,' 'cyberattacks' and 'economic downturn.'

Current Situation

In this unprecedented year for elections, the spectre of false information looms as one of the major threats to the global populace, according to the experts surveyed for the WEF's Global Risks Report 2024. The report offers a nuanced analysis of the degree to which misinformation and disinformation are perceived as problems for a selection of countries over the next two years, based on a ranking of 34 economic, environmental, geopolitical, societal and technological risks.

India, the land of ancient wisdom and modern innovation, stands at the crossroads where the risk of disinformation and misinformation is ranked highest. Out of all the risks, these twin scourges were most frequently selected as the number one risk for the country by the experts, eclipsing infectious diseases, illicit economic activity, inequality, and labor shortages. The South Asian nation's next general election, set to unfurl between April and May 2024, will be a litmus test for its 1.4 billion people.

The spectre of fake news is not a novel adversary for India. The 2019 election was rife with misinformation, with reports of political parties weaponising platforms like WhatsApp and Facebook to spread incendiary messages, stoking fears that online vitriol could spill over into real-world violence. The COVID-19 pandemic further exacerbated the issue, with misinformation once again proliferating through WhatsApp.

Other countries facing a high risk of the impacts of misinformation and disinformation include El Salvador, Saudi Arabia, Pakistan, Romania, Ireland, Czechia, the United States, Sierra Leone, France, and Finland, all of which consider the threat to be one of the top six most dangerous risks out of 34 in the coming two years. In the United Kingdom, misinformation/disinformation is ranked 11th among perceived threats.

The WEF analysts conclude that the presence of misinformation and disinformation in these electoral processes could seriously destabilise the real and perceived legitimacy of newly elected governments, risking political unrest, violence, and terrorism, and a longer-term erosion of democratic processes.

The World Economic Forum's 'Global Risks Report 2024' ranks India first in the world for the risk posed by misinformation and disinformation, at a time when the country faces general elections this year. The report, released in early January as the 19th edition of the Global Risks Report together with the Global Risks Perception Survey, covers how seriously misinformation and disinformation are rated as problems for each analysed country over the next two years.

Some governments and platforms aiming to protect free speech and civil liberties may fail to act effectively to curb falsified information and harmful content, making the definition of 'truth' increasingly contentious across societies. State and non-state actors alike may leverage false information to widen fractures in societal views, erode public confidence in political institutions, and threaten national cohesion and coherence.

Trust in specific leaders will confer trust in information, and the authority of these actors, from conspiracy theorists (including politicians) and extremist groups to influencers and business leaders, could be amplified as they become arbiters of truth.

False information could not only be used as a source of societal disruption but also of control by domestic actors in pursuit of political agendas. The erosion of political checks and balances and the growth in tools that spread and control information could amplify the efficacy of domestic disinformation over the next two years.

Global internet freedom is already in decline, and access to more comprehensive sets of information has dropped in numerous countries. The implication is that recent declines in press freedom, and a related lack of strong investigative media, are significant vulnerabilities that are set to grow.

Advisory

Here are specific best practices for citizens to help prevent the spread of misinformation during electoral processes:

- Verify Information: Double-check the accuracy of information before sharing it. Use reliable sources and fact-checking websites to verify claims.

- Cross-Check Multiple Sources: Consult multiple reputable news sources to ensure that the information is consistent across different platforms.

- Be Wary of Social Media: Social media platforms are susceptible to misinformation. Be cautious about sharing or believing information solely based on social media posts.

- Check Dates and Context: Ensure that information is current and consider the context in which it is presented. Misinformation often thrives when details are taken out of context.

- Promote Media Literacy: Educate yourself and others on media literacy to discern reliable sources from unreliable ones. Be skeptical of sensational headlines and clickbait.

- Report False Information: Report instances of misinformation to the platform hosting the content and encourage others to do the same. Utilise fact-checking organisations or tools to report and debunk false information.

- Critical Thinking: Foster critical thinking skills among your community members. Encourage them to question information and think critically before accepting or sharing it.

- Share Official Information: Share official statements and information from reputable sources, such as government election commissions, to ensure accuracy.

- Avoid Echo Chambers: Engage with diverse sources of information to avoid being in an 'echo chamber' where misinformation can thrive.

- Be Responsible in Sharing: Before sharing information, consider the potential impact it may have. Refrain from sharing unverified or sensational content that can contribute to misinformation.

- Promote Open Dialogue: Encourage open discussion within your community about the importance of factual information and the dangers of misinformation.

- Stay Calm and Informed: During critical periods, such as election days, stay calm and rely on official sources for updates. Avoid spreading unverified information that can contribute to panic or confusion.

- Support Media Literacy Programs: Promote media literacy programs in schools to equip individuals with the essential skills to navigate the sea of information.

Conclusion

Preventing misinformation requires a collective effort from individuals, communities, and platforms. By adopting these best practices, citizens can play a vital role in reducing the impact of misinformation during electoral processes.

References:

- https://thewire.in/media/survey-finds-false-information-risk-highest-in-india

- https://thesouthfirst.com/pti/india-faces-highest-risk-of-disinformation-in-general-elections-world-economic-forum/

Overview of the India-UK Joint Tech Security Initiative

India and the UK have been deepening their technological and security ties through various initiatives and agreements. One of the key developments in this partnership is the India-UK Joint Tech Security Initiative, which focuses on enhancing collaboration in areas such as cybersecurity, artificial intelligence (AI), telecommunications and critical technologies. Building on the bilateral cooperation agenda set out in the India-UK Roadmap 2030, which seeks to bolster cooperation across sectors including trade, climate change and defence, the UK and India launched the Joint Tech Security Initiative (TSI) on July 24, 2024. The initiative will prioritise collaboration on critical and emerging technologies across priority sectors.

The TSI will be coordinated by the National Security Advisors (NSAs) of both countries through existing and new dialogues. The NSAs will set priority areas and identify interdependencies for cooperation on critical and emerging tech, helping build meaningful technology value chain partnerships between the two countries. Progress on the initiative will be reviewed half-yearly at the Deputy NSA level. A bilateral mechanism led by India's Ministry of External Affairs and the UK government will be established to promote trade in critical and emerging technologies, including the resolution of relevant licensing or regulatory issues. Both countries view the TSI as a platform and a strong signal of intent to build and grow sustainable, tangible partnerships across priority tech sectors. They will explore how to build a deeper strategic partnership between UK and Indian research and technology centres and incubators, enhance cooperation across the two countries' tech and innovation ecosystems, and create a channel for industry and academia to help shape the TSI.

The UK and India are launching new bilateral initiatives to expand and deepen their technology security partnership. These initiatives will focus on various domains, including telecoms, critical minerals, semiconductors, and energy security.

In telecoms, the UK and India will build a new Future Telecoms Partnership, focusing on joint research on future telecoms, Open RAN systems, testbed linkups, telecoms security, spectrum innovation, and software and systems architecture. This will include collaboration between the UK's SONIC Labs, India's Centre for Development of Telematics (C-DOT) and the Department of Telecommunications' (DoT) Telecoms Startup Mission.

The UK and India will also expand their collaboration on critical minerals, working together to improve supply chain resilience, explore research and development and technology partnerships along the complete critical minerals value chain, and share best practices on ESG standards. They will set out a roadmap for cooperation and establish a UK-India ‘critical minerals’ community of academics, innovators and industry.

Key Areas of Collaboration:

- Strengthening cybersecurity defences and resilience through joint cybersecurity exercises, information sharing, and the development of common standards and best practices, in collaboration with each country's national agency, i.e., CERT-In and the NCSC.

- Promoting ethical AI development and deployment through AI ethics guidelines and frameworks, and encouraging academic collaboration, including support for new partnerships between UK and Indian research organisations alongside existing joint programmes that use AI to tackle global challenges.

- Building secure and resilient telecom infrastructure through the exchange of expertise and regulatory cooperation, for example by collaborating on Open Radio Access Network (Open RAN) technology.

- Developing critical and emerging technologies by advancing research and innovation in quantum, semiconductors and biotechnology, promoting and investing in tech startups and innovation ecosystems, and engaging in policy dialogues on technology governance and standards.

- Facilitating the digital economy and trade to promote economic growth by strengthening the relevant frameworks and agreements, collaborating on digital payment systems and fintech solutions and, most importantly, promoting data protection and privacy standards.

Outlook and Impact on the Industry

The initiative sets out a new approach for how the UK and India work together on the defining technologies of this decade. These include areas such as telecoms, critical minerals, AI, quantum, health/biotechnology, advanced materials and semiconductors. While the initiative looks promising, several challenges must be addressed: robust regulatory frameworks need to be put in place, and a balanced approach to data privacy and information exchange is needed for cross-border data flows. Mechanisms must also ensure that intellectual property is protected without hampering technology transfer. Above all, geopolitical risks need to be navigated in a way that reduces tensions and allows a stable partnership to grow. The initiative builds on a series of partnerships between India and the UK, as well as between industry and academia.

Conclusion

This initiative, at its core, will drive forward a bilateral partnership framed around boosting economic growth and deepening cooperation across key issues including trade, technology, education, culture and climate. By combining their strengths, the UK and India are poised to create a robust framework for technological innovation and security that could serve as a model for international cooperation in tech.

References

- https://www.hindustantimes.com/india-news/india-uk-launch-joint-tech-security-initiative-101721876539784.html

- https://www.gov.uk/government/publications/uk-india-technology-security-initiative-factsheet/uk-india-technology-security-initiative-factsheet

- https://www.business-standard.com/economy/news/india-uk-unveil-futuristic-technology-security-initiative-to-seal-fta-soon-124072500014_1.htm

- https://bharatshakti.in/india-uk-technology-security-initiative/

A video circulating widely on social media claims that Defence Minister Rajnath Singh compared the Rashtriya Swayamsevak Sangh (RSS) with the Afghan Taliban. The clip allegedly shows Singh stating that both organisations share a common ideology and belief system and therefore “must walk together.” However, research by CyberPeace found that the video is digitally manipulated and that the audio attributed to Rajnath Singh was fabricated using artificial intelligence.

Claim

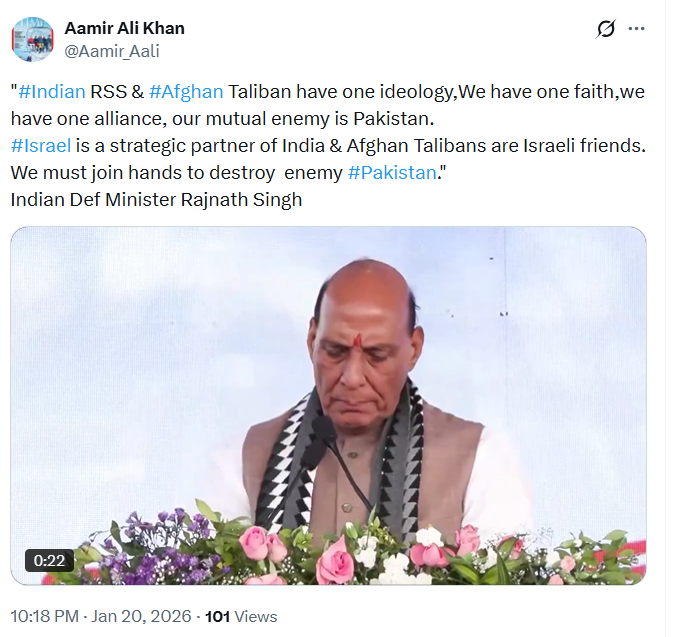

On January 20, X user Aamir Ali Khan (@Aamir_Aali) shared a video of Defence Minister Rajnath Singh, claiming that he drew parallels between the Rashtriya Swayamsevak Sangh (RSS) and the Afghan Taliban. The user alleged that Singh stated both organisations follow a similar ideology and belief system and therefore must “walk together.” The post further quoted Singh as allegedly saying: “Indian RSS & Afghan Taliban have one ideology, we have one faith, we have one alliance, our mutual enemy is Pakistan. Israel is a strategic partner of India & Afghan Taliban are Israeli friends. We must join hands to destroy the enemy Pakistan.” Here is the link and archive link to the post, along with a screenshot.

Fact Check:

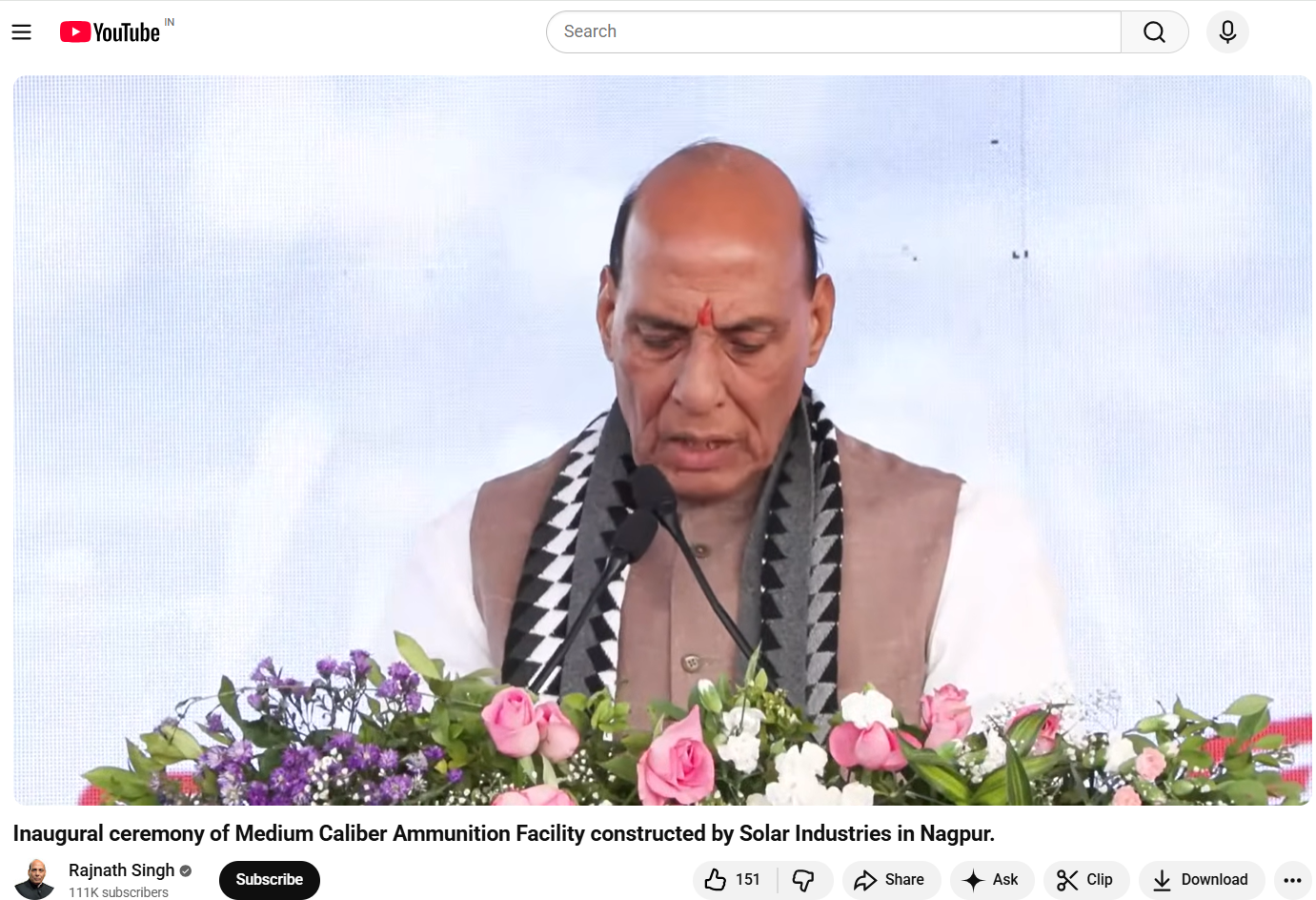

To verify the claim, CyberPeace conducted a Google Lens search using keyframes extracted from the viral video. This search led to an extended version of the same footage uploaded on the official YouTube channel of Rajnath Singh. The original video was traced back to the inaugural ceremony of the Medium Calibre Ammunition Facility built by Solar Industries in Nagpur. After reviewing the complete, unedited speech, CyberPeace found no instance where Rajnath Singh compared the RSS with the Afghan Taliban or spoke about shared ideology, alliances or Pakistan in the manner claimed.

In the authentic footage, the Defence Minister spoke about:

- India’s push for Aatmanirbharta (self-reliance) in defence manufacturing

- Strengthening domestic ammunition production

- Positioning India as a global hub for defence exports

The statements attributed to him in the viral clip were entirely absent from the original speech.

Here is the link to the original video, along with a screenshot.

In the next stage of the research, the audio track from the viral video was extracted and analysed using the AI voice detection tool Aurigin. The analysis confirmed that the original visuals had been misused and overlaid with a synthetic voice track to create a misleading narrative.

Conclusion

CyberPeace concluded that the viral video claiming Defence Minister Rajnath Singh compared the RSS with the Afghan Taliban is false and misleading. The video has been digitally manipulated, with an AI-generated audio track falsely attributed to Singh. The Defence Minister made no such remarks during the Nagpur event, and the claim circulating online is fabricated.