#FactCheck - Digitally Altered Video of Olympic Medalist Arshad Nadeem’s Independence Day Message

Executive Summary:

A video of Pakistani Olympic gold medalist and javelin thrower Arshad Nadeem wishing the people of Pakistan a happy Independence Day, with snoring audible in the background, is going viral. The CyberPeace Research Team found that the viral video was digitally edited to add the snoring sound in the background. The original video, published on Arshad's Instagram account, contains no snoring sound, so we are certain that the viral claim is false and misleading.

Claims:

A video of Pakistani Olympic gold medalist Arshad Nadeem wishing the people of Pakistan a happy Independence Day, with snoring audible in the background.

Fact Check:

Upon receiving the posts, we examined the video closely and then analyzed it with TrueMedia, an AI video detection tool, which found little evidence of manipulation in either the voice or the face.

We then checked Arshad Nadeem's social media accounts and found the video uploaded to his Instagram account on 14th August 2024. No snoring sound can be heard in that video.

Hence, we are certain that the claim made about the viral video is fake and misleading.

Conclusion:

The viral video of Arshad Nadeem with a snoring sound in the background has been digitally altered. The CyberPeace Research Team confirms that the sound was added later, since the original video on his Instagram account contains no snoring sound, which makes the viral claim false and misleading.

- Claim: A snoring sound can be heard in the background of Arshad Nadeem's video wishing the people of Pakistan a happy Independence Day.

- Claimed on: X

- Fact Check: Fake & Misleading

Related Blogs


Introduction

Deepfakes have become a source of concern in an age of advanced technology, particularly when they involve manipulating public figures for deceptive purposes. A deepfake video of cricket star Sachin Tendulkar promoting a gaming app recently went viral on social media, prompting him to warn against the widespread misuse of technology.

Scenario of Deepfake

Sachin Tendulkar appeared in the deepfake video endorsing a gaming app called Skyward Aviator Quest. The video's startling realism has led some viewers to believe that the cricket legend genuinely endorses the app. Tendulkar, however, has taken to social media to stress that these videos are fake, highlighting the troubling trend of technology being abused for deceptive ends.

Tendulkar's Reaction

Sachin Tendulkar expressed his concern about the misuse of technology and urged people to report such videos, advertisements, and applications that spread disinformation. The incident underlines the importance of raising awareness and vigilance about the authenticity of content circulated on social media platforms.

The Warning Signs

The deepfake video raises concerns not only because of its lifelike portrayal of Tendulkar but also because of what it promotes. An endorsement of gaming software that claims to help people make money is a significant red flag, especially when it appears to come from a well-known figure. This underscores how deepfakes can be used for financial gain, and why information that seems too good to be true deserves careful scrutiny.

How to Protect Yourself Against Deepfakes

As deepfake technology advances, it is critical to recognise the potential signs of manipulation. Here are some pointers to help you spot deepfake videos:

- Facial Movements and Expressions: Look for unnatural facial movements and expressions.

- Body Movements and Posture: Watch for awkward body movements or inconsistencies in the person's posture.

- Lip Sync and Audio Quality: Look for mismatches between the audio and lip movements, and for audio that sounds unnatural.

- Context and Content: Consider the video's context, especially if it shows a well-known figure endorsing something unexpected.

- Source Verification: Confirm the video's legitimacy by checking the official channels or accounts of the person shown.

Conclusion

The spread of deepfake videos threatens the credibility of content on social media. Sachin Tendulkar's response to the deepfake featuring him serves as a warning to users to stay cautious and report questionable content. As technology advances, it is critical that individuals and authorities work together to counter the misuse of AI-generated content and safeguard the integrity of online information.

Reference

- https://www.news18.com/tech/sachin-tendulkar-disturbed-by-his-new-deepfake-video-wants-swift-action-8740846.html

- https://www.livemint.com/news/india/sachin-tendulkar-becomes-latest-victim-of-deepfake-video-disturbing-to-see-11705308366864.html

Executive Summary:

Recently, CyberPeace handled a case involving a fraudulent Android application imitating Punjab National Bank (PNB). The victim was tricked into downloading an APK file named "PNB.apk" via WhatsApp. Installing the APK led to multiple unauthorized transactions across several credit cards.

Case Study - The Attack: Social Engineering Meets Malware

The incident started when the victim clicked on a Facebook ad for a PNB credit card. After the victim submitted basic personal information, they received a WhatsApp call from a profile displaying the PNB logo. The attacker, posing as a bank representative, misrepresented the benefits and features of the credit card and convinced the victim to install an application named PNB.apk. The so-called bank representative sent the app over WhatsApp, claiming it would expedite the credit card application. Once installed, the app appeared on the device as a customer care application. It requested permissions such as sending and viewing SMS messages, and it would open only after the user granted them.

The app then harvests the user's details. It first asks for the full name, mobile number and a complaint; on the following pages, regardless of whether the user selects Refund, Pay or Other, it asks for further information such as the credit card number, expiry date and CVV.

At this point, the scammer has the complete credit card details, along with permission to read SMS messages, which lets them intercept OTPs.

The victim, believing they were securely navigating the official PNB website, was unaware that the malware was giving the hacker remote access to their phone. This led to 11 unauthorized transactions, worth ₹4 lakh in total, across three credit cards.

The Investigation & Analysis:

Upon receiving the case through the CyberPeace Helpline, the CyberPeace Research Team acted swiftly to neutralize the threat and secure the victim’s device. Using a secure remote access tool, we took control of the phone with the victim’s consent. Our first step was to identify and remove the malicious "PNB.apk" file, ensuring no residual malware was left behind.

Next, we implemented crucial cyber hygiene practices:

- Revoking unnecessary permissions – to prevent further unauthorized access.

- Running antivirus scans – to detect any remaining threats.

- Clearing sensitive data caches – to remove stored credentials and tokens.

The CyberPeace Helpline team helped the victim report the fraud to the National Cybercrime Portal and helpline (1930) and promptly block the compromised credit cards.

We then carried out a technical analysis of the app using its MD5 file hash. VirusTotal flags the app as malware, and it requests permissions including Send/Receive/Read SMS and System Alert Window.
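A hash lookup of this kind can be reproduced locally. The sketch below, assuming Python 3.9+, computes the MD5 (and, for good measure, SHA-256) digest of a suspicious APK so the value can be pasted into VirusTotal's search. The function name `file_hashes` is hypothetical and this is not CyberPeace's actual tooling.

```python
import hashlib
from pathlib import Path


def file_hashes(path: str, chunk_size: int = 65536) -> dict[str, str]:
    """Return the MD5 and SHA-256 hex digests of the file at `path`.

    The file is read in fixed-size chunks so large APKs need not fit in memory.
    Hashing only reads the bytes; it never installs or executes the app.
    """
    md5 = hashlib.md5()
    sha256 = hashlib.sha256()
    with Path(path).open("rb") as fh:
        # iter() with a sentinel keeps calling read() until it returns b"" (EOF).
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            md5.update(chunk)
            sha256.update(chunk)
    return {"md5": md5.hexdigest(), "sha256": sha256.hexdigest()}
```

For example, `file_hashes("PNB.apk")["md5"]` gives the identifier to search for. The file name here is only illustrative. Searching by hash reveals whether a sample is already known without uploading the file itself.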

Similarly, we found another application named “Axis Bank” circulating on WhatsApp with similar permissions. The details found on VirusTotal are as follows:

Recommendations:

This case study highlights the increasingly sophisticated methods used by cybercriminals, blending social engineering with advanced malware. Key lessons include:

- Be vigilant when downloading applications, even if they appear to come from legitimate sources. Install apps only from an official app store, never from files or links shared on social media.

- Always review app permissions before granting access.

- Verify the identity of anyone claiming to represent financial institutions.

- Use remote access tools responsibly for effective intervention during a cyber incident.
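To make the permission-review advice concrete, the sketch below checks an app's requested Android permissions against a small watch-list built from the permissions seen in this case (Send/Receive/Read SMS and System Alert Window). It assumes Python 3.9+ and that the permission strings have already been extracted from the app's manifest. `HIGH_RISK_PERMISSIONS` and `flag_risky_permissions` are hypothetical names. The list is illustrative and is not a complete security policy.

```python
# Real Android permission constants matching those seen in this case; the
# selection and descriptions are an illustrative watch-list, not a full policy.
HIGH_RISK_PERMISSIONS: dict[str, str] = {
    "android.permission.SEND_SMS": "send SMS messages",
    "android.permission.RECEIVE_SMS": "receive incoming SMS (e.g. OTPs)",
    "android.permission.READ_SMS": "read stored SMS (e.g. OTPs)",
    "android.permission.SYSTEM_ALERT_WINDOW": "draw over other apps",
}


def flag_risky_permissions(requested: list[str]) -> list[str]:
    """Return one warning per high-risk permission in `requested`,
    in the order first requested, ignoring duplicates."""
    warnings: list[str] = []
    seen: set[str] = set()
    for perm in requested:
        if perm in HIGH_RISK_PERMISSIONS and perm not in seen:
            seen.add(perm)
            warnings.append(f"{perm}: can {HIGH_RISK_PERMISSIONS[perm]}")
    return warnings


# Example: permissions requested by a hypothetical "customer care" app.
requested = [
    "android.permission.INTERNET",
    "android.permission.READ_SMS",
    "android.permission.SEND_SMS",
]
for line in flag_risky_permissions(requested):
    print(line)
```

A banking "customer care" app that asks to read and send SMS, or to draw over other apps, deserves suspicion. Any non-empty result is a reason to stop and verify the app's origin before granting access.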

By acting quickly and following the proper protocols, we successfully secured the victim’s device and prevented further financial loss.

Executive Summary

A video circulating on social media claims that Jammu and Kashmir Deputy Chief Minister Surinder Choudhary described Prime Minister Narendra Modi as an agent of Pakistan’s Inter-Services Intelligence (ISI). In the viral clip, Choudhary is allegedly heard accusing the Prime Minister of pushing Kashmir towards Pakistan and claiming that even pro-India Kashmiris are disillusioned with Modi’s policies.

However, research by the CyberPeace research wing has found that the video is digitally manipulated. While the visuals are genuine and taken from a real media interaction, the audio has been fabricated and falsely overlaid to misattribute inflammatory remarks to the Deputy Chief Minister.

Claim

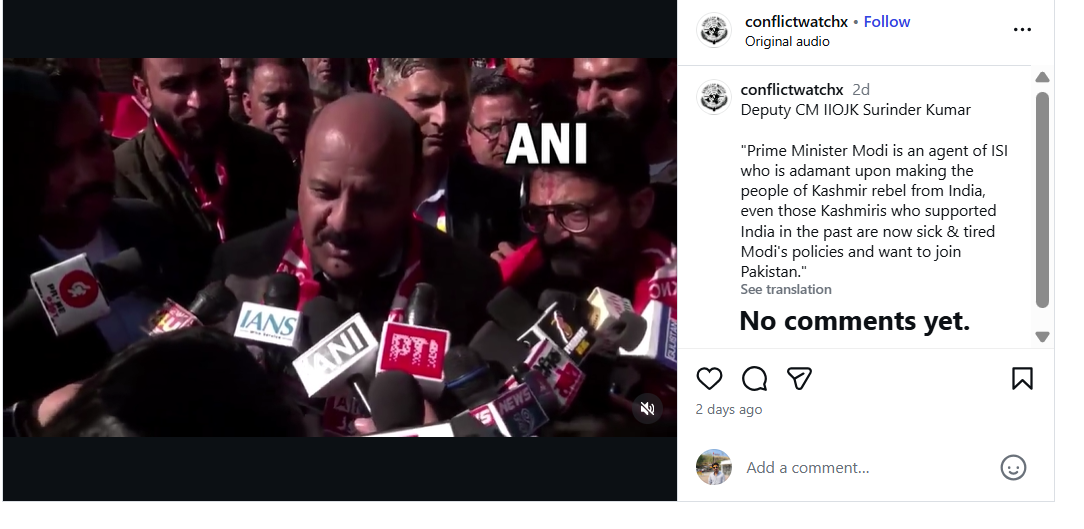

An Instagram account named Conflict Watch shared the video on January 20, claiming that J&K Deputy Chief Minister Surinder Choudhary had called Prime Minister Modi an ISI agent. The video purportedly quoted Choudhary as saying that Modi was elected with Pakistan’s support and that Kashmir would soon become part of Pakistan due to his policies.

Here is the link and archive link to the post, along with a screenshot.

Fact Check:

To verify the claim, the Desk conducted a Google Lens search, which led to a video uploaded on January 20, 2026, on the official YouTube channel of Jammu and Kashmir–based news outlet JKUpdate. The footage was an extended version of the viral clip and featured identical visuals. The original video showed Surinder Choudhary addressing the media on the sidelines of the inaugural two-day JKNC Convention of Block Presidents and Secretaries in the Jammu province. A review of the full media interaction revealed that Choudhary did not make any statements calling Prime Minister Modi an ISI agent or suggesting that Kashmir should join Pakistan.

Instead, in the original footage, Choudhary was seen criticising former Jammu and Kashmir Chief Minister and PDP leader Mehbooba Mufti for supporting the BJP during the bifurcation of Jammu and Kashmir and Ladakh into two Union Territories. He also spoke about the challenges faced by the region after the abrogation of Article 370 and demanded the restoration of full statehood for Jammu and Kashmir. During the interaction, Choudhary said that anyone attempting to divide Jammu and Kashmir at the state or regional level was effectively following Pakistan’s agenda and Jinnah’s two-nation theory. He added that such individuals could not be considered patriots.

Here is the link to the video, along with a screenshot.

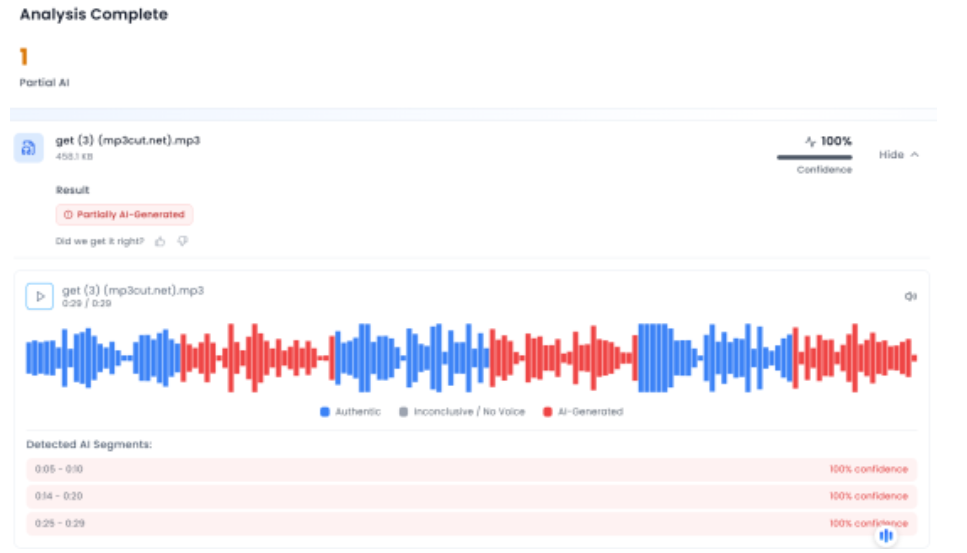

In the next phase of the research, the Desk extracted the audio from the viral clip and analysed it using the AI-based audio detection tool Aurigin. The analysis indicated that the voice in the viral video was partially AI-generated, further confirming that the clip had been tampered with.

Below is a screenshot of the result.

Conclusion

Multiple social media users shared a video claiming it showed Jammu and Kashmir Deputy Chief Minister Surinder Choudhary calling Prime Minister Narendra Modi an agent of the ISI. However, CyberPeace found that the viral video was digitally manipulated. While the visuals were taken from a genuine media interaction with the leader, a fabricated audio track was overlaid to falsely attribute the statements to him.