#FactCheck - AI-Generated Image Falsely Linked to Mira–Bhayandar Bridge

Executive Summary

Mumbai’s Mira–Bhayandar bridge has recently been in the news due to its unusual design. In this context, a photograph showing a bus seemingly stuck on the bridge is going viral on social media. Some users are also sharing the image while claiming that it is from Sonpur subdivision in Bihar. However, research by CyberPeace has found that the viral image is not real. The bridge shown in the image is indeed the Mira–Bhayandar bridge, which is under discussion because its design narrows abruptly from four lanes to two. That said, the bridge is not yet operational, and the viral image showing a bus stuck on it was created using Artificial Intelligence (AI).

Claim

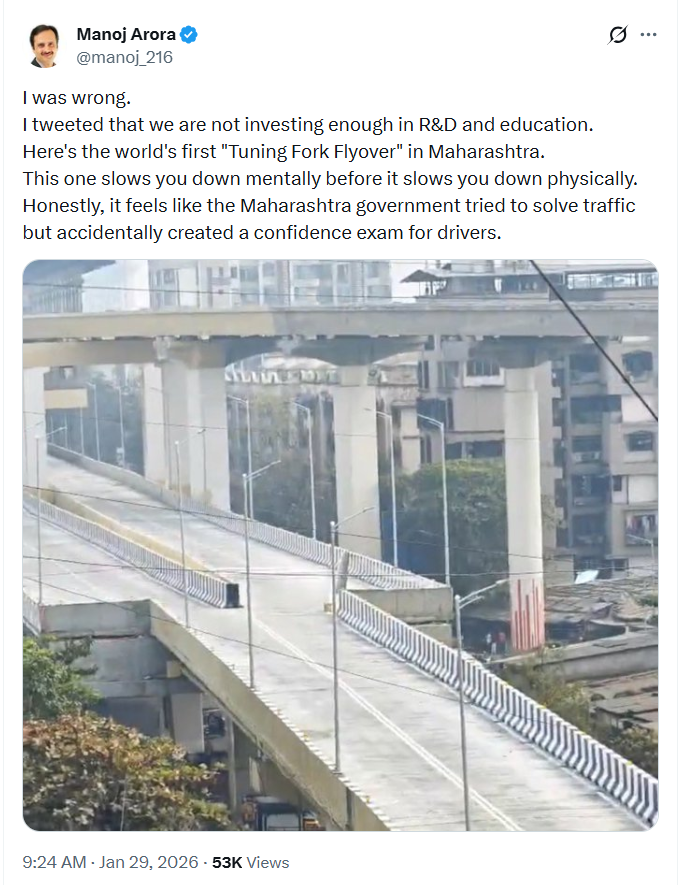

An Instagram user shared the viral image on January 29, 2026, with the caption: “Are Indian taxpayers happy to see that this is funded by their money?” The link, archive link, and screenshot of the post can be seen below.

Fact Check:

To verify the claim, we first conducted a Google Lens reverse image search. This led us to a post shared by X (formerly Twitter) user Manoj Arora on January 29. While the bridge structure in that image matches the viral photo, no bus is visible in the original post. This raised suspicion that the viral image had been digitally manipulated.

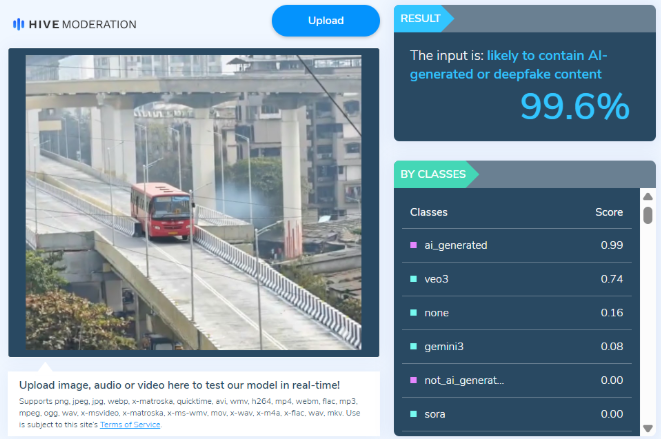

We then ran the viral image through the AI detection tool Hive Moderation, which flagged it as over 99% likely to be AI-generated.

Conclusion

The CyberPeace research confirms that while the Mira–Bhayandar bridge is real and has been in the news due to its design, the viral image showing a bus stuck on the bridge has been created using AI tools. Therefore, the image circulating on social media is misleading.

Related Blogs

Introduction

In today's era of digital communities and connections, social media has become an integral part of our lives. We use social media to connect with friends and family, and it is also used for business purposes. Social media offers numerous opportunities to connect and communicate with larger communities with ease. However, it also poses challenges. While using social media, we come across issues such as inappropriate content, online harassment, online stalking, account hacking, misuse of personal information or data, privacy issues, fake accounts, intellectual property violations, abusive and disheartening content, content that violates the platform's terms and conditions, and more. To deal with such issues, social media entities have reporting mechanisms and terms and conditions guidelines in place, and they address such issues through the platform's help centre or reporting mechanism.

The Role of Help Centers in Resolving User Complaints:

The help centres are established on platforms to address user complaints and provide satisfactory assistance or resolution. Addressing user complaints is a key component of maintaining a safe and secure digital environment for users. Platform-centric help centres play a vital role in providing users with a resource to seek assistance and report their issues.

Some common issues reported on social media:

- Reporting abusive content: Users can report content that they find abusive, offensive, or in violation of platform policies. These reports are reviewed by the help centre.

- Reporting CSAM (Child Sexual Abuse Material): CSAM content can be reported to the platform's help centre. Social media platforms have stringent policies in place to address such concerns and ensure a safe digital environment for everyone, including children.

- Reporting Misinformation or Fake News: With the proliferation of misinformation online, users can report content that they find or suspect to be misleading or false. Fact-checking bodies are employed to assess the accuracy of reported content.

- Content violating intellectual property rights: If there is a violation or infringement of any intellectual property work, it can be reported on the platform.

- Violation of commercial policies: Products listed on social media platforms must also comply with the platform’s Commercial Policies.

Submitting a Complaint to the Indian Grievance Officer for Facebook:

A user can report an issue as follows:

Go to the Facebook Help Center and open the “Reporting a Problem” section. After clicking on Reporting a Problem, choose the issue that best describes your complaint. For example, if you have encountered inappropriate or abusive content, select the ‘I found inappropriate or abusive content’ option.

Here is a list of issues which you can report on Facebook:

- My account has been hacked.

- I've lost access to a page or a group I used to manage.

- I've found a fake profile or a profile that's pretending to be me.

- I am being bullied or harassed.

- I found inappropriate or abusive content.

- I want to report content showing me in nudity/partial nudity or in a sexual act.

- I (or someone I am legally responsible for) appear in content that I do not want to be displayed.

- I am a law enforcement official seeking to access user data.

- I am a government official or a court officer seeking to submit an order, notice or direction.

- I want to download my personal data or report an issue with how Facebook is processing my data.

- I want to report an Intellectual Property infringement.

- I want to report another issue.

Then, describe your issue, attach supporting evidence such as screenshots, and submit your report. After submitting a report, you will receive a confirmation that it has been submitted to the platform. The platform will review the complaint within the stipulated time period, and users can also check the status of their filed complaint. The platform will take appropriate action after reviewing the complaint. If the reported content violates any standard policy, terms and conditions, or privacy policy of the platform, the platform will take down that content or take other appropriate action.

Conclusion:

It is important to be aware of your rights in the digital landscape and to understand how to report your issues or grievances on social media platforms effectively. By using the help centre or reporting mechanism of the platform, users can file their complaints and contribute to a safer and more responsible online environment. Social media platforms have compliance frameworks, privacy policies, and guidelines in place to uphold community standards and meet legal requirements. So, whenever you encounter an issue on social media, report it on the platform and contribute to a safer digital environment.

References:

- https://www.cyberyodha.org/2023/09/how-to-submit-complaint-to-indian.html

- https://transparency.fb.com/en-gb/enforcement/taking-action/complaints-handling-process/

- https://www.facebook.com/help/contact/278770247037228

- https://www.facebook.com/help/263149623790594

Introduction

Words come easily, but not necessarily the consequences that follow. Imagine a 15-year-old child on the internet hoping that the world will be nice to him and help him gain confidence, but instead, someone chooses to be mean on the internet, or the child becomes the victim of a new kind of cyberbullying, i.e., online trolling. The consequences of trolling can have serious repercussions, including eating disorders, substance abuse, conduct issues, body dysmorphia, negative self-esteem, and, in tragic cases, self-harm and suicide attempts in vulnerable individuals. The effects of online trolling can include anxiety, depression, and social isolation. This is one example, and hate speech and online abuse can touch anyone, regardless of age, background, or status. The damage may take different forms, but its impact is far-reaching. In today’s digital age, hate speech spreads rapidly through online platforms, often amplified by AI algorithms.

As we observe the International Day for Countering Hate Speech today, 18th June, let us pledge that if we have ever been mean to someone on the internet, we will never repeat that kind of behaviour, and that if we have been the victim, we will stand against the perpetrator and report it.

This year, the theme for the International Day for Countering Hate Speech is “Hate Speech and Artificial Intelligence Nexus: Building coalitions to reclaim inclusive and secure environments free of hatred.” UN Secretary-General Antonio Guterres, in his statement, said, “Today, as this year’s theme reminds us, hate speech travels faster and farther than ever, amplified by Artificial Intelligence. Biased algorithms and digital platforms are spreading toxic content and creating new spaces for harassment and abuse.”

Coded Convictions: How AI Reflects and Reinforces Ideologies

Algorithms have swiftly taken the place of feelings; they tamper with your taste, and they do so with a lighter foot, invisibly. They are becoming an important component of social media user interaction and content distribution. While these tools are designed to improve user experience, they frequently inadvertently spread divisive ideologies and push extremist propaganda. This amplification can strengthen the power of extremist organisations, spread misinformation, and deepen societal tensions. This phenomenon, known as “algorithmic radicalisation,” demonstrates how social media companies may utilise a discriminating content selection approach to entice people down ideological rabbit holes and shape their ideas. AI-driven algorithms often prioritise engagement over ethics, enabling divisive and toxic content to trend and placing vulnerable groups, especially youth and minorities, at risk. The UN’s Strategy and Plan of Action on Hate Speech, launched on June 18, 2019, recognises that while AI holds promise for early detection and prevention of harmful speech, it also demands stringent human rights safeguards. Without regulation, these tools can themselves become purveyors of bias and exclusion.

India’s Constitutional Resolve and Civilizational Ethos against Hate

India has always taken pride in being inclusive and united rather than divided. As far as hate speech is concerned, India's stand is no different: it upholds the same values as the United Nations. Although India has won many battles against hate speech, the war is not over and is now more prominent than ever due to advances in communication technologies. In India, while the right to freedom of speech and expression is protected under Article 19(1)(a), its exercise is subject to reasonable restrictions under Article 19(2). Landmark rulings such as Ramji Lal Modi v. State of U.P. and Amish Devgan v. UOI have clarified that speech can be curbed if it incites violence or undermines public order. Section 69A of the IT Act, 2000, empowers the government to block content, and these principles are also reflected in Section 196 of the BNS, 2023 (153A IPC) and Section 299 of the BNS, 2023 (295A IPC). Platforms are also required to track down the creators of harmful content, remove it within a reasonable time, and fulfil their due diligence requirements under the IT Rules.

There is no denying that India needs to be well-equipped and prepared normatively to tackle hate propaganda and divisive forces. At the same time, India’s rich culture and history, rooted in the philosophy of Vasudhaiva Kutumbakam (the world is one family) and in pluralistic traditions, have long stood as a beacon of tolerance and coexistence. By revisiting these civilizational values, we can resist divisive forces and renew our collective journey toward harmony and peaceful living.

CyberPeace Message

The ultimate goal is to create internet and social media platforms that are better, safer and more harmonious for each individual, irrespective of his/her/their social and cultural background. CyberPeace stands resolute on promoting digital media literacy, cyber resilience, and consistently pushing for greater accountability for social media platforms.

References

- https://www.un.org/en/observances/countering-hate-speech

- https://www.artemishospitals.com/blog/the-impact-of-trolling-on-teen-mental-health

- https://www.orfonline.org/expert-speak/from-clicks-to-chaos-how-social-media-algorithms-amplify-extremism

- https://www.techpolicy.press/indias-courts-must-hold-social-media-platforms-accountable-for-hate-speech/

Introduction

In the vast, cosmic-like expanse of international relations, a sphere marked by the gravitational pull of geopolitical interests, a singular issue has emerged, casting a long shadow over the fabric of Indo-Canadian diplomacy. It is a narrative spun from an intricate loom, interlacing the yarns of espionage and political machinations, shadowboxing with the transient, yet potent, specter of state-sanctioned violence. The recent controversy undulating across this geopolitical landscape owes its origins to the circulation of claims which the Indian Ministry of External Affairs (MEA) vehemently dismisses as a distorted tapestry of misinformation—a phantasmagoric fable divorced from reality.

This maelstrom of contention orbits around the alleged existence of a 'secret memo', a document reportedly dispatched with stealth from the helm of the Indian government to its consulates peppered across the vast North American continent. This mysterious communique, assuming its spectral presence within the report, was described as a directive catalyzing a 'sophisticated crackdown scheme' against specific Sikh diaspora organizations. A proclamation that MEA has repudiated with adamantine certainty, branding the report as a meticulously fabricated fiction.

The MEA Stance

The official statement from the Indian Ministry of External Affairs (MEA) emerged as a paragon of clarity cutting through the dense fog of accusations, 'We strongly assert that such reports are fake and emphatically concocted. The referenced memo is non-existent. This narrative is a chapter in the protracted saga of a disinformation campaign aimed against India.' The outlet responsible for airing this contentious story, as per the Indian authorities, has a historical penchant for circulating narratives aligned with the interests of rival intelligence agencies, particularly those associated with Pakistani strategic circles—a claim infusing yet another complex layer to the situation at hand.

The report that catapulted itself onto the stage with the force of an untamed tempest insists the 'secret memo' was decked with several names—all belonging to individuals under the hawk-like gaze of Indian intelligence.

The Plague of Disinformation

The profoundly intricate confluence of diplomacy is one that commands grace, poise, and an acute sense of balance—nations effortlessly tip-toeing around sensitivities, proffering reciprocity and an equitable stance within the grand ballroom of international affairs. Hence, when S. Jaishankar, India's Minister of External Affairs, found himself fielding inquiries on the perceived inconsistent treatment afforded to Canada compared to the US—despite similar claims emanating from both—his response was the embodiment of diplomatic discretion: 'As far as Canada is concerned, there was a glaring absence of specific evidence or inputs provided to us. The robust question of equitable treatment between two nations, where only one has furnished substantive input and the other has not, is naturally unmerited.'

The statement from the Ministry's spokesperson, Arindam Bagchi, further solidified India's stance. He called into question the credibility of The Intercept, the publication that initially disseminated the report, accusing it of acting as a vessel for 'invented narratives' propagated under the auspices of Pakistani intelligence interests.

Conclusion

In the grand theater of international politics, the distinction between reality and deception is frequently obscured by the heavy drapes of secrecy and diplomatic guile. The persistent denial by the Indian government of any 'secret memo' serves as a critical reminder of the blurred lines between narrative and counter-narrative in the global concert of power and persuasion. As observant spectators within the arena of world politics, we are endowed with the unenviable task of untangling the convoluted web of claims and counterclaims, hoping to uncover the enduring truths that linger therein. In this domain of authentic and imaginary tales, the only unwavering certainty is the persistent rhythm of diplomatic interplay and the subtle shadows it casts upon the international stage. The Ministry of External Affairs fact-checked the claim about the secret memo and dismissed it as fake and fabricated. The government has said that a deliberate disinformation campaign is being run against India.

References

- https://timesofindia.indiatimes.com/india/mea-denies-report-it-issued-secret-memo-on-nijjar-to-missions/articleshow/105884217.cms?from=mdr

- https://www.hindustantimes.com/india-news/india-denies-secret-memo-against-nijjar-report-peddled-by-pak-intelligence-101702229753576.html