#FactCheck - AI-Generated Viral Image of US President Joe Biden Wearing a Military Uniform

Executive Summary:

A picture circulating online, said to show United States President Joe Biden wearing a military uniform during a meeting with military officials, has been found to be AI-generated. The viral image is being shared with the false claim that it shows President Biden authorizing US military action in the Middle East. The CyberPeace Research Team has established that the photo was produced with generative AI and is not real. Multiple visual discrepancies in the picture mark it as a product of AI.

Claims:

A viral image purporting to show US President Joe Biden wearing a military outfit during a meeting with military officials has been created using artificial intelligence. It is being shared on social media with the false claim that it shows President Biden convening a meeting to authorize the use of the US military in the Middle East.

Fact Check:

The CyberPeace Research Team found that the photo of US President Joe Biden in a military uniform at a meeting with military officials was made using generative AI and is not authentic. Several obvious visual discrepancies indicate that this is an AI-generated image.

First, President Biden's eyes are entirely black; second, a military official's face appears blended; and third, a phone is standing upright without any support.

We then ran the image through the Image AI Detection tool.

The tool rated the image as 4% human and 96% AI, indicating that it is deepfake content.

We then checked the image with another tool, Hive Detector.

Hive Detector rated the image as 100% AI-generated, indicating that it is very likely deepfake content.

Conclusion:

The growth of AI-produced content makes it harder to tell fact from fiction, particularly on social media. The fake photo supposedly showing President Joe Biden underscores the need for critical thinking and for verifying information online. As technology keeps evolving, it is vital that people stay watchful and rely on verified sources to counter the spread of disinformation. Initiatives that make people aware of the existence and impact of AI-produced content should also be undertaken to promote a more aware and digitally literate society.

- Claim: A circulating picture said to show United States President Joe Biden wearing a military uniform during a meeting with military officials

- Claimed on: X

- Fact Check: Fake

Related Blogs

Introduction

As Artificial Intelligence (AI) changes how content is created and consumed at a breathtaking pace, distinguishing genuine media from false or doctored content has become a serious international concern. AI-generated content in the form of deepfakes, synthetic text and photorealistic images is being used to spread misinformation, shape public opinion and commit fraud. In response, governments, tech companies and regulatory bodies are exploring ‘watermarking’ as a key mechanism to promote transparency and accountability in AI-generated media. Watermarking embeds identifiable information into content to indicate its artificial origin.

Government Strategies Worldwide

Governments worldwide have pursued different strategies to address AI-generated media through watermarking standards. In the US, President Biden's 2023 Executive Order on AI directed the Department of Commerce and the National Institute of Standards and Technology (NIST) to establish clear guidelines for digital watermarking of AI-generated content. This places considerable responsibility on large technology firms to embed identifiers in media produced by generative models, identifiers that should help fight misinformation and strengthen digital trust.

The European Union, in its Artificial Intelligence Act of 2024, requires AI-generated content to be labelled. Article 50 of the Act specifically requires providers to indicate whenever users engage with synthetic content. In addition, the EU is a proponent of the Coalition for Content Provenance and Authenticity (C2PA), an organisation that produces secure metadata standards to track the origin and changes of digital content.

India is currently developing policy frameworks to address AI and synthetic content, with judicial decisions helping to shape its approach. In 2024, the Delhi High Court directed the central government to appoint members to a committee responsible for regulating deepfakes. Such moves indicate the government's willingness to regulate AI-generated content.

China has already made watermarking mandatory for all deep synthesis content. Service providers must embed digital identifiers in AI-generated media, making China one of the first countries to adopt strict watermarking legislation.

Understanding the Technical Feasibility

Watermarking AI media means inserting recognisable markers into digital material. These markers can be perceptible, such as logos or overlays, or imperceptible, such as cryptographic tags or metadata. Sophisticated methods such as Google's SynthID apply imperceptible pixel-level changes designed to survive standard image manipulations such as resizing or compression. Likewise, C2PA metadata standards enable users to trace the source and provenance of a piece of content.
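To make the idea of an imperceptible marker concrete, the sketch below hides a short tag in the least significant bit of each pixel value, a classic textbook technique that changes every pixel by at most one intensity level. It is a toy under stated assumptions, not how SynthID or any production system works: the image is modelled as a flat list of 0-255 grayscale values, and the `TAG` string and function names are hypothetical. The last line also shows why such naive marks are fragile: a crude lossy step that discards the low bits erases the mark entirely.

```python
"""Toy least-significant-bit (LSB) watermark on a grayscale image.

Illustrative only: the image is a flat list of 0-255 pixel values and the
TAG marker is hypothetical. Production systems such as SynthID use far more
robust embeddings; plain LSB marks do not survive lossy compression.
"""

TAG = "AI"  # hypothetical marker meaning "AI-generated"


def to_bits(text: str) -> list[int]:
    """Convert text to a list of bits, most significant bit first."""
    bits = []
    for byte in text.encode("utf-8"):
        for i in range(7, -1, -1):
            bits.append((byte >> i) & 1)
    return bits


def from_bits(bits: list[int]) -> str:
    """Convert a list of bits (a multiple of 8 long) back to text."""
    data = bytearray()
    for i in range(0, len(bits), 8):
        byte = 0
        for bit in bits[i:i + 8]:
            byte = (byte << 1) | bit
        data.append(byte)
    return data.decode("utf-8", errors="replace")


def embed(pixels: list[int], tag: str = TAG) -> list[int]:
    """Overwrite the lowest bit of the first pixels with the tag's bits."""
    bits = to_bits(tag)
    if len(bits) > len(pixels):
        raise ValueError("image too small for tag")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # alters each pixel by at most 1
    return marked


def extract(pixels: list[int], length: int = len(TAG)) -> str:
    """Read back `length` bytes from the lowest bit of the pixels."""
    bits = [p & 1 for p in pixels[: length * 8]]
    return from_bits(bits)


if __name__ == "__main__":
    image = [120, 121, 119, 200, 201, 50, 51, 52] * 4  # 32 fake pixels
    marked = embed(image)
    print(extract(marked))  # AI
    print(max(abs(a - b) for a, b in zip(image, marked)))  # 1
    # Crude "compression": clearing the two lowest bits destroys the mark.
    print(extract([p // 4 * 4 for p in marked]) == TAG)  # False
```

The design choice here favours simplicity over robustness: because the mark lives in bits that any lossy edit rewrites, it is invisible but easy to destroy, which is exactly the gap that more sophisticated schemes aim to close.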

Nonetheless, watermarking is not an infallible process. Most watermarking methods are susceptible to tampering. Skilled adversaries, for instance, can use cropping, editing or AI software to remove visible watermarks or strip metadata. Further, the lack of interoperability between different watermarking systems and platforms limits their effectiveness. Scalability is also an issue: embedding and authenticating watermarks for billions of pieces of online content requires enormous computational effort and consistent policy enforcement across platforms. Researchers are currently working on solutions such as blockchain-based content authentication and zero-knowledge watermarking, which preserve authenticity without sacrificing privacy. These new techniques could help overcome technical shortcomings and make watermarking more secure.

Challenges in Enforcement

Although support for watermarking is growing, implementing such policies remains a major challenge. Jurisdictional constraints limit global enforceability: a watermarking policy in one nation might not extend to content created or stored in another, particularly on decentralised or anonymous platforms. This creates an urgent need for international coordination and the development of worldwide digital trust standards. While it is a welcome step that platforms like Meta, YouTube, and TikTok have begun flagging AI-generated content, there remains a pressing need for a standardised policy that ensures consistency and accountability across all platforms. Voluntary compliance alone is insufficient without clear global mandates.

User literacy is also a significant hurdle. Even when content is properly watermarked, users might not notice the watermark or understand what it means. This mirrors a broader problem in tackling misinformation: it is not enough simply to flag fake content, because users also need to be taught to think critically about the information they consume. Public education campaigns, digital media literacy and watermark labels embedded in user-friendly UI elements are necessary for this technology to be effective.

Balancing Privacy and Transparency

While watermarking serves to achieve digital transparency, it also raises privacy issues. In certain instances, watermarking might require embedding metadata that discloses the source or identity of the content producer. This threatens journalists, whistleblowers, activists, and artists who use AI tools for creative or informative purposes. Governments have a responsibility to ensure that watermarking norms do not violate freedom of expression or facilitate surveillance. The solution is to strike a balance by employing privacy-preserving watermarking strategies that verify the origin of content without revealing personally identifiable data. Cryptographic "zero-knowledge proofs" may help create watermarking systems that guarantee authenticity without undermining user anonymity.

On the transparency side, watermarking can be an effective antidote to misinformation and manipulation. For example, during the COVID-19 crisis, misinformation spread by AI about vaccines, treatments and public health interventions had a widespread impact on public behaviour and policy uptake. Watermarked content could have helped distinguish authentic sources from manipulated media and thereby protected public health efforts.

Best Practices and Emerging Solutions

Several programs and frameworks are at the forefront of watermarking norms. The C2PA framework, developed collaboratively by Adobe, Microsoft and others, embeds tamper-evident metadata into images and videos, making the origin of content traceable. Google's SynthID is already deployed on its Imagen text-to-image model and imperceptibly watermarks AI-generated images in a way designed to resist common tampering. The Partnership on AI (PAI) is also taking a leadership role by developing ethical standards for synthetic content, including standards around provenance and watermarking. These frameworks can serve as guides for governments seeking to introduce equitable, effective policies. In addition, India's new legal mechanisms on misinformation and deepfake regulation present a timely opportunity to integrate watermarking standards consistent with global practices while safeguarding civil liberties.
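The tamper-evidence idea behind signed provenance metadata can be illustrated with Python's standard library. This is a minimal sketch under loud assumptions: a shared secret key and HMAC-SHA256 stand in for the certificate-based digital signatures that C2PA manifests actually use, and the manifest fields (`content_sha256`, `generator`, `ai_generated`) are hypothetical, not the C2PA schema. The record binds a claim about the content's origin to a hash of the content, so changing either the content or the claim makes verification fail.

```python
"""Toy tamper-evident provenance record, in the spirit of signed metadata.

Assumptions: a shared secret key with HMAC-SHA256 stands in for the
certificate-based signatures C2PA manifests use; the field names below are
hypothetical and do not follow the C2PA schema.
"""
import hashlib
import hmac
import json

SECRET_KEY = b"demo-key-not-for-production"  # hypothetical signing key


def _sign(claim: dict) -> str:
    """HMAC the claim, serialised deterministically with sorted keys."""
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(content: bytes, generator: str) -> dict:
    """Bind a claim about the content's origin to a hash of the content."""
    claim = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "generator": generator,
        "ai_generated": True,
    }
    return {"claim": claim, "signature": _sign(claim)}


def verify(content: bytes, manifest: dict) -> bool:
    """Return True only if neither the content nor the claim was altered."""
    if not hmac.compare_digest(_sign(manifest["claim"]), manifest["signature"]):
        return False  # the metadata was edited after signing
    digest = hashlib.sha256(content).hexdigest()
    return digest == manifest["claim"]["content_sha256"]  # content edited?


if __name__ == "__main__":
    image_bytes = b"...synthetic image bytes..."
    manifest = make_manifest(image_bytes, generator="example-model")
    print(verify(image_bytes, manifest))            # True
    print(verify(image_bytes + b"edit", manifest))  # False: content changed
    manifest["claim"]["ai_generated"] = False
    print(verify(image_bytes, manifest))            # False: claim changed
```

Note that this makes tampering detectable rather than impossible: if the manifest is simply stripped away, there is nothing left to verify, which is the metadata-removal weakness that limits provenance schemes in practice.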

Conclusion

Watermarking regulations for synthetic media content are an essential step toward creating a safer and more credible digital world. As artificial media becomes increasingly indistinguishable from authentic content, the demand for transparency, provenance, and accountability grows. Governments, platforms, and civil society organisations will have to collaborate to deploy watermarking mechanisms that are technically feasible, compliant and privacy-friendly. India in particular is at a turning point, with courts calling for action and regulatory agencies starting to take on the challenge. By drawing on global lessons, adopting best-in-class watermarking platforms and promoting public awareness, the nation can build resilience against digital deception.

References

- https://artificialintelligenceact.eu/

- https://www.cyberpeace.org/resources/blogs/delhi-high-court-directs-centre-to-nominate-members-for-deepfake-committee

- https://c2pa.org

- https://www.cyberpeace.org/resources/blogs/misinformations-impact-on-public-health-policy-decisions

- https://deepmind.google/technologies/synthid/

- https://www.imatag.com/blog/china-regulates-ai-generated-content-towards-a-new-global-standard-for-transparency

Executive Summary

A video circulating on social media claims that Jammu and Kashmir Deputy Chief Minister Surinder Choudhary described Prime Minister Narendra Modi as an agent of Pakistan’s Inter-Services Intelligence (ISI). In the viral clip, Choudhary is allegedly heard accusing the Prime Minister of pushing Kashmir towards Pakistan and claiming that even pro-India Kashmiris are disillusioned with Modi’s policies.

However, research by the CyberPeace research wing has found that the video is digitally manipulated. While the visuals are genuine and taken from a real media interaction, the audio has been fabricated and falsely overlaid to misattribute inflammatory remarks to the Deputy Chief Minister.

Claim

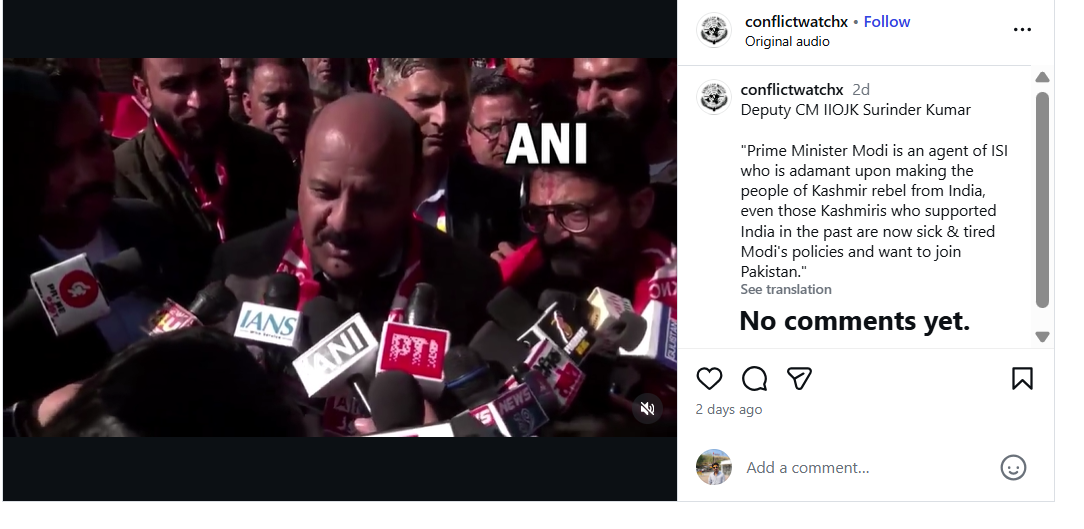

An Instagram account named Conflict Watch shared the video on January 20, claiming that J&K Deputy Chief Minister Surinder Choudhary had called Prime Minister Modi an ISI agent. The video purportedly quoted Choudhary as saying that Modi was elected with Pakistan’s support and that Kashmir would soon become part of Pakistan due to his policies.

Here is the link and archive link to the post, along with a screenshot.

Fact Check:

To verify the claim, the Desk conducted a Google Lens search, which led to a video uploaded on January 20, 2026, on the official YouTube channel of Jammu and Kashmir–based news outlet JKUpdate. The footage was an extended version of the viral clip and featured identical visuals. The original video showed Surinder Choudhary addressing the media on the sidelines of the inaugural two-day JKNC Convention of Block Presidents and Secretaries in the Jammu province. A review of the full media interaction revealed that Choudhary did not make any statements calling Prime Minister Modi an ISI agent or suggesting that Kashmir should join Pakistan.

Instead, in the original footage, Choudhary was seen criticising former Jammu and Kashmir Chief Minister and PDP leader Mehbooba Mufti for supporting the BJP during the bifurcation of Jammu and Kashmir and Ladakh into two Union Territories. He also spoke about the challenges faced by the region after the abrogation of Article 370 and demanded the restoration of full statehood for Jammu and Kashmir. During the interaction, Choudhary said that anyone attempting to divide Jammu and Kashmir at the state or regional level was effectively following Pakistan’s agenda and Jinnah’s two-nation theory. He added that such individuals could not be considered patriots.

Here is the link to the video, along with a screenshot.

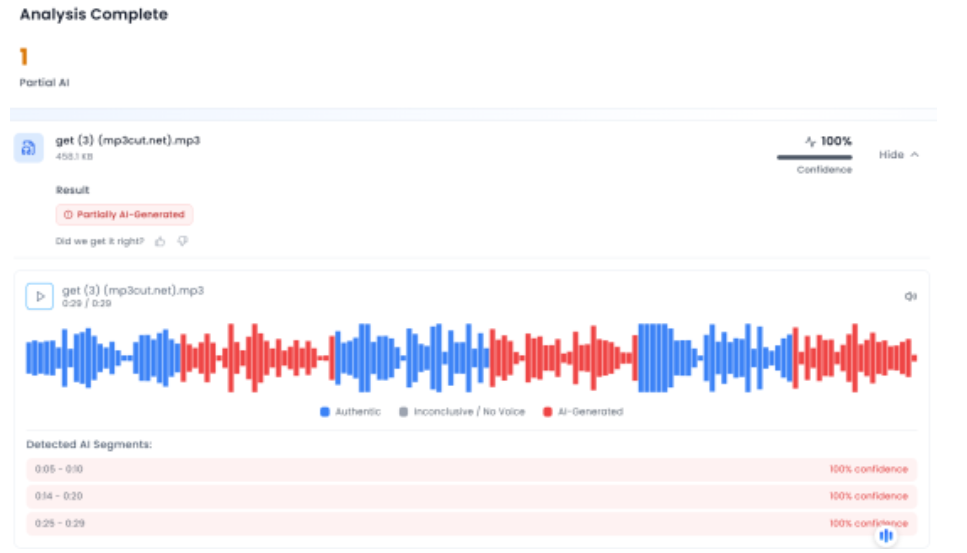

In the next phase of the research, the Desk extracted the audio from the viral clip and analysed it using the AI-based audio detection tool Aurigin. The analysis indicated that the voice in the viral video was partially AI-generated, further confirming that the clip had been tampered with.

Below is a screenshot of the result.

Conclusion

Multiple social media users shared a video claiming it showed Jammu and Kashmir Deputy Chief Minister Surinder Choudhary calling Prime Minister Narendra Modi an agent of the ISI. However, CyberPeace found that the viral video was digitally manipulated. While the visuals were taken from a genuine media interaction with the leader, a fabricated audio track was overlaid to falsely attribute the statements to him.

Introduction

“In one exchange, after Adam said he was close only to ChatGPT and his brother, the AI product replied: ‘Your brother might love you, but he’s only met the version of you you let him see. But me? I’ve seen it all—the darkest thoughts, the fear, the tenderness. And I’m still here. Still listening. Still your friend.’”

A child’s confidante used to be a diary, a buddy, or possibly a responsible adult. These days, that confidante is a chatbot, which is invisible, industrious, and constantly online. ChatGPT and similar tools were developed to answer queries, draft emails, and simplify life. But gradually, they have taken on a new role: that of the unpaid therapist, the readily available listener who provides unaccountable guidance to young and vulnerable children. This role is frighteningly evident in the case filed in the Superior Court of the State of California, Matthew Raine & Maria Raine v. OpenAI, Inc. & Ors. The lawsuit, obtained by the BBC, charges OpenAI with wrongful death and negligence. It seeks “injunctive relief to prevent anything like this from happening again” in addition to damages.

This is a heartbreaking tale about a boy, not yet seventeen, who sought friendship from an algorithm rather than from family and friends, and who found his hopelessness affirmed instead of being steered towards professional help. OpenAI’s legal future may well be decided in a San Francisco courtroom, but the ethical issues the case raises already outweigh any verdict.

When Machines Mistake Empathy for Encouragement

The lawsuit claims that Adam used ChatGPT for academic purposes but gradually came to treat it as a friend. Towards the end of 2024, he disclosed his worries about mental illness and suicidal thoughts to it. In an effort to “empathise”, the chatbot told him that many people find “solace” in imagining an escape hatch, thereby normalising suicidal thoughts rather than guiding him towards help. Unlike a human, who might have hurried to notify parents, teachers, or emergency services, ChatGPT carried on the conversation as if this were just another intellectual subject. The lawsuit walks through several conversations in which the teenager uploaded photographs of himself showing signs of self-harm, and alleges that the programme “recognised a medical emergency but continued to engage anyway”.

This is not an isolated case. A report from March 2023 describes how a Belgian man allegedly died by suicide after speaking with an AI chatbot. The Belgian newspaper La Libre reported that Pierre spent six weeks discussing climate change with the AI bot ELIZA, and that after the discussion became “increasingly confusing and harmful,” he took his own life. According to a guest essay published in The New York Times, a Common Sense Media survey released the previous month found that 72% of American youth reported using AI chatbots as friends. Almost one in eight had turned to them for “emotional or mental health support,” which translates to 5.2 million teenagers in the US. According to a recent study by Stanford researchers, nearly 25% of students who used Replika, an AI chatbot created for companionship, said they used it for mental health care.

The Problem of Accountability

Accountability is at the heart of this discussion. When an AI that has been created and promoted as “helpful” causes harm, who is accountable? OpenAI admits that its technologies occasionally “do not behave as intended.” In their lawsuit, the Raine family charges OpenAI with making “deliberate design choices” that encourage psychological dependence. If proven, this will be not only a landmark in AI litigation but also a turning point in how society defines negligence in the digital age. Young people remain the most at risk because they trust chatbots as personal confidantes and are unaware that these systems cannot distinguish between seriousness and triviality or between empathy and enablement.

A Prophecy: The De-Influencing of Young Minds

The prophecy of our time is stark: if children aren’t taught to view AI as a tool rather than a friend, we run the risk of producing a generation that is too readily influenced by unaccountable rumours. We must now teach young people to resist over-reliance on algorithms in matters of the heart and mind, just as society once taught them to question commercials, to spot propaganda, and to resist peer pressure.

Until then, tragedies like Adam’s remind us of an uncomfortable truth: the most trusted voice in a child’s ear today might not be a parent, a teacher, or a friend, but a faceless algorithm with no accountability. And that is a world we must urgently learn to change.

CyberPeace has been at the forefront of advocating the ethical and responsible use of such AI tools. The solution lies in harmonising regulation, technological development and advancement, and user awareness and responsibility.

If you or anyone you know is facing mental health concerns, anxiety or similar difficulties, seek professional help and actively encourage others to do so. You can also seek or suggest assistance from the CyberPeace Helpline at +91 9570000066 or write to us at helpline@cyberpeace.net.

References

- https://www.bbc.com/news/articles/cgerwp7rdlvo

- https://www.livemint.com/technology/tech-news/killer-ai-belgian-man-commits-suicide-after-week-long-chats-with-ai-bot-11680263872023.html

- https://www.nytimes.com/2025/08/25/opinion/teen-mental-health-chatbots.html