Introduction

As technological developments let our phones take on a greater role, these devices, along with the applications they host, also become susceptible to greater risks. Recently, Zimperium, a tech company that provides security services protecting mobile devices and applications from threats such as malware and phishing, announced that it had identified malware aimed at stealing information from Indian banks. The Indian Express reports that data from over 25 million devices has been exfiltrated, which makes the campaign dangerous going by the number of devices it has affected so far alone.

Understanding the Threat: The Case of FatBoyPanel

Malware is malicious software: a file or program that is intentionally harmful to a network, server, computer, or other device. It comes in various types; in the case described above, it is a Trojan horse, i.e., a file or program designed to trick the victim into believing it is a legitimate software program while it tries to gain access. Trojans can execute malicious functions on a device as soon as they are activated after installation.

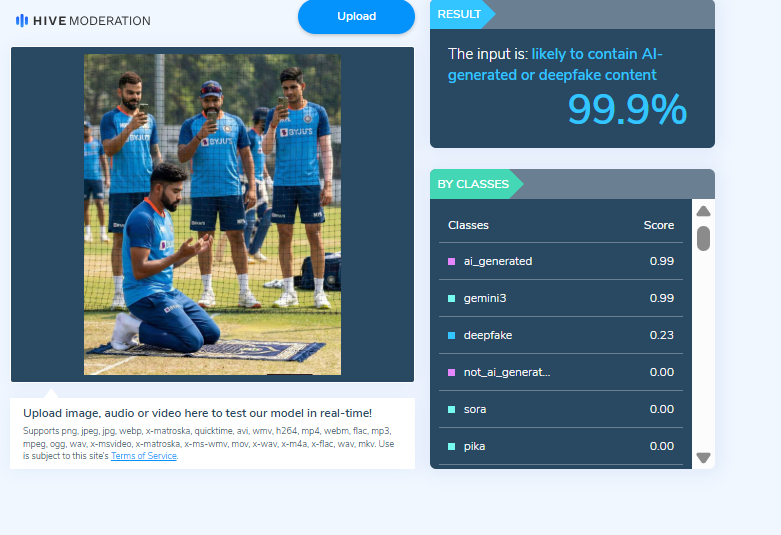

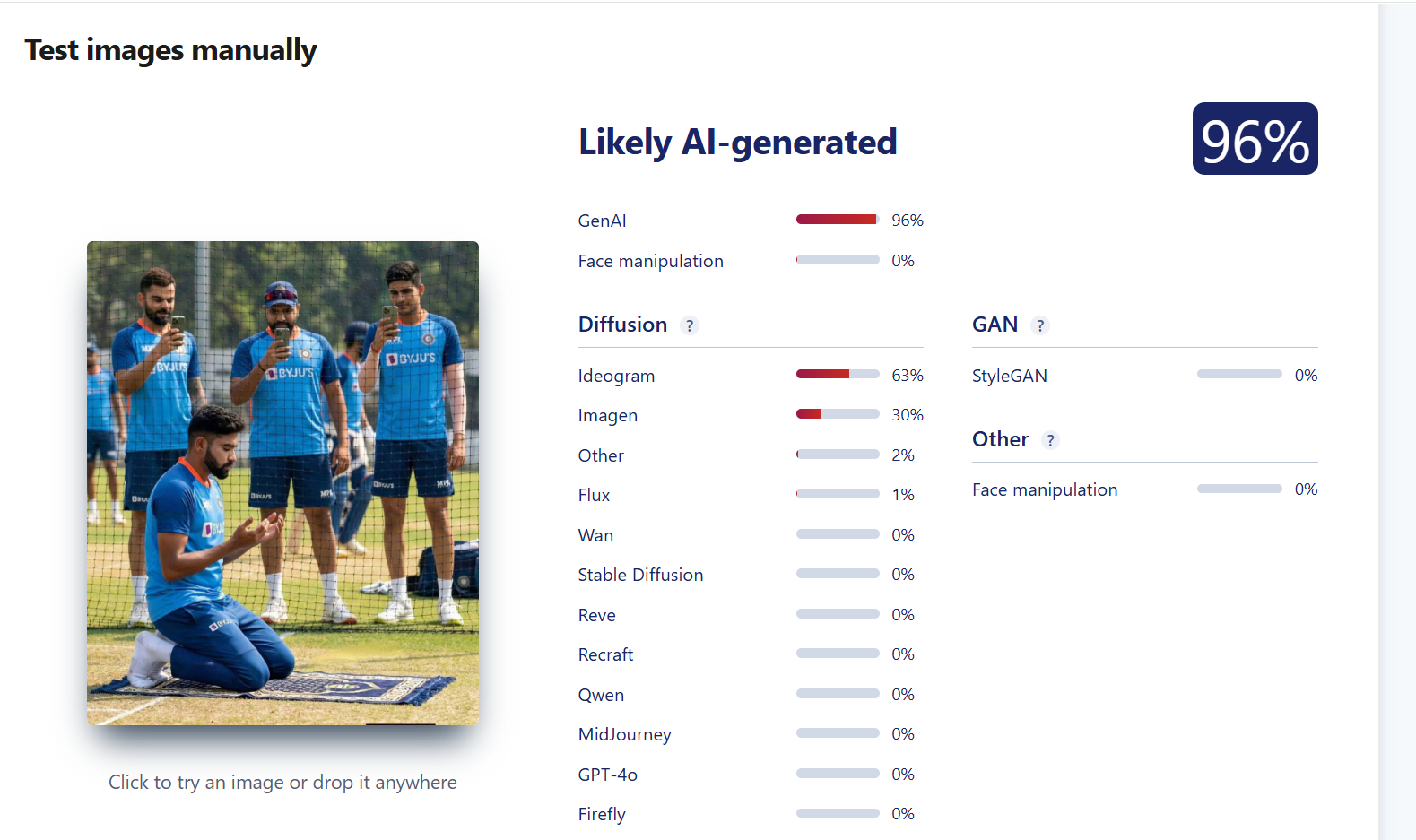

FatBoyPanel, as it is called, is a malware management system used to carry out a massive cyberattack targeting Indian mobile users and their bank details. The attackers' modus operandi relied on social engineering: they posed as bank officials, called their targets, and warned them that their accounts would be suspended unless they updated their bank details immediately. When the panicked targets asked for instructions, they were told to download and install a banking application from a link that delivered an Android Package Kit (APK) file, which requires one to enable “Install from Unknown Sources”. Other attackers acted out variations of the same scenario, all to trick the target into downloading the file.

The apps sent through these links are fake, and once installed, they immediately ask for critical permissions such as access to contacts, device storage, overlay permissions (to show fake login pages over real apps), and access to SMS messages (to steal OTPs and banking alerts). These permissions let the malware capture text messages (especially bank-related OTPs), read stored files, monitor app usage, and so on. The stolen data is sent to the FatBoyPanel backend, a C&C (command and control) server that acts as a centralised control room, where the attackers can see the data in real time on their dashboard and then download and sell it.
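To make the permission pattern described above concrete, here is a minimal Python sketch that flags the kinds of permissions such a fake app asks for. The permission strings are standard Android permission names, but the watch list, the function names, and the "SMS plus overlay" heuristic are illustrative assumptions, not Zimperium's detection logic or anything taken from the malware itself.

```python
# Watch list of Android permissions matching those described in the article.
# The selection and the descriptions are illustrative assumptions.
RISKY_PERMISSIONS = {
    "android.permission.READ_SMS": "read SMS messages (OTPs, banking alerts)",
    "android.permission.RECEIVE_SMS": "intercept incoming SMS messages",
    "android.permission.SYSTEM_ALERT_WINDOW": "draw overlays on top of other apps",
    "android.permission.READ_CONTACTS": "read the contact list",
    "android.permission.READ_EXTERNAL_STORAGE": "read files in shared storage",
}

SMS_PERMISSIONS = {
    "android.permission.READ_SMS",
    "android.permission.RECEIVE_SMS",
}
OVERLAY_PERMISSION = "android.permission.SYSTEM_ALERT_WINDOW"


def flag_risky_permissions(requested):
    """Return {permission: reason} for each requested permission on the watch list."""
    return {p: RISKY_PERMISSIONS[p] for p in requested if p in RISKY_PERMISSIONS}


def has_sms_overlay_combo(requested):
    """Hypothetical heuristic: SMS access combined with the overlay permission."""
    requested = set(requested)
    return bool(requested & SMS_PERMISSIONS) and OVERLAY_PERMISSION in requested


if __name__ == "__main__":
    # Hypothetical permission list for an app received as an APK link.
    app_permissions = [
        "android.permission.INTERNET",
        "android.permission.READ_SMS",
        "android.permission.SYSTEM_ALERT_WINDOW",
    ]
    for name, reason in flag_risky_permissions(app_permissions).items():
        print(f"{name}: can {reason}")
    print("SMS + overlay combination:", has_sms_overlay_combo(app_permissions))
```

A match is only a prompt to look closer: legitimate apps also request some of these permissions, so the combination matters more than any single entry.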

Protecting Yourself: Essential Precautions in the Digital Realm

Although there are various other types of malware, the way to deal with them remains largely the same. The following are a few practices that can help you stay safe:

- Be cautious with app downloads: Only download apps from official app stores (Google Play Store, Apple App Store). Even then, check the developer's reputation, app permissions, and user reviews before installing.

- Keep your operating system and apps updated: Updates often include security patches that protect against known vulnerabilities.

- Be wary of suspicious links and attachments: Avoid clicking on links or opening attachments in unsolicited emails, SMS messages, or social media posts. Verify the sender's authenticity before interacting.

- Enable multi-factor authentication (MFA) wherever possible: While malware like FatBoyPanel can sometimes bypass OTP-based MFA, it still adds an extra layer of security against many other threats.

- Use strong and unique passwords: Employ a combination of uppercase and lowercase letters, numbers, and symbols for all your online accounts. Avoid reusing passwords across different platforms.

- Install and maintain a reputable mobile security app (Bitdefender, etc.): These apps can help detect and remove malware, as well as warn you about malicious websites and links.

- Regularly review app permissions and give access judiciously: Check what permissions your installed apps have and revoke any that seem unnecessary or excessive.

- Educate yourself and stay informed: Keep up-to-date with the latest cybersecurity threats and best practices.
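As a small illustration of the password advice in the list above, the following Python sketch checks whether a password mixes uppercase letters, lowercase letters, numbers, and symbols. The function name and the 12-character minimum are assumptions made for this example; the article itself does not specify a length.

```python
import string


def password_issues(password, min_length=12):
    """Return a list of problems found; an empty list means all checks passed.

    min_length=12 is an assumed default, not a figure from the article.
    """
    issues = []
    if len(password) < min_length:
        issues.append(f"shorter than {min_length} characters")
    if not any(c.islower() for c in password):
        issues.append("no lowercase letter")
    if not any(c.isupper() for c in password):
        issues.append("no uppercase letter")
    if not any(c.isdigit() for c in password):
        issues.append("no number")
    if not any(c in string.punctuation for c in password):
        issues.append("no symbol")
    return issues


if __name__ == "__main__":
    for candidate in ["password", "Tr1cky!Passphrase"]:
        problems = password_issues(candidate)
        print(candidate, "->", problems or "passes all checks")
```

A check like this covers only composition. It cannot tell whether a password is reused across platforms, which the list above also warns against.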

Conclusion

The emergence of malware management systems indicates just how sophisticated attackers have become over the years. Vigilance on the part of the general public is recommended, but so are greater efforts to raise awareness of such methods of crime, as people remain vulnerable where cybersecurity is concerned. With sensitive information at stake, we must take steps to sensitise and better prepare the public to deal with the growing landscape of the digital world.

References