#FactCheck - Viral Claim About Nitish Kumar’s Resignation Over UGC Protests Is Misleading

Executive Summary

A news video is being widely circulated on social media with the claim that Bihar Chief Minister Nitish Kumar has resigned from his post in protest against the ongoing UGC-related controversy. Several users are sharing the clip while alleging that Kumar stepped down after opposing the issue. However, CyberPeace research has found the claim to be false. The research revealed that the video being shared is from 2022 and has no connection whatsoever with the UGC or any recent protests related to it. An old video has been misleadingly linked to a current issue to spread misinformation on social media.

Claim:

An Instagram user shared a video on January 26 claiming that Bihar Chief Minister Nitish Kumar had resigned. The post further alleged that the news was first aired on the Republic channel and that Kumar had submitted his resignation to then-Governor Phagu Chauhan. The link to the post, its archived version, and screenshots can be seen below.

Fact Check:

To verify the claim, CyberPeace first conducted a keyword-based search on Google. No credible or established media organisation had reported any such resignation, indicating that the viral claim lacked authenticity.

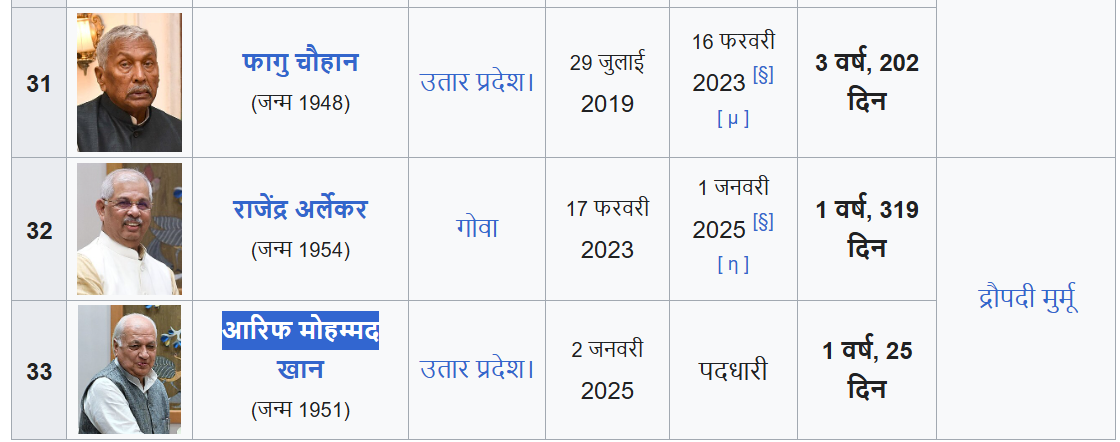

Further, the voiceover in the viral video states that Nitish Kumar handed over his resignation to Governor Phagu Chauhan. However, Phagu Chauhan ceased to be the Governor of Bihar in February 2023, and the current Governor of Bihar is Arif Mohammad Khan. A resignation handed to Phagu Chauhan therefore could not have taken place during the recent UGC controversy, which makes the claim in the video factually incorrect and misleading.

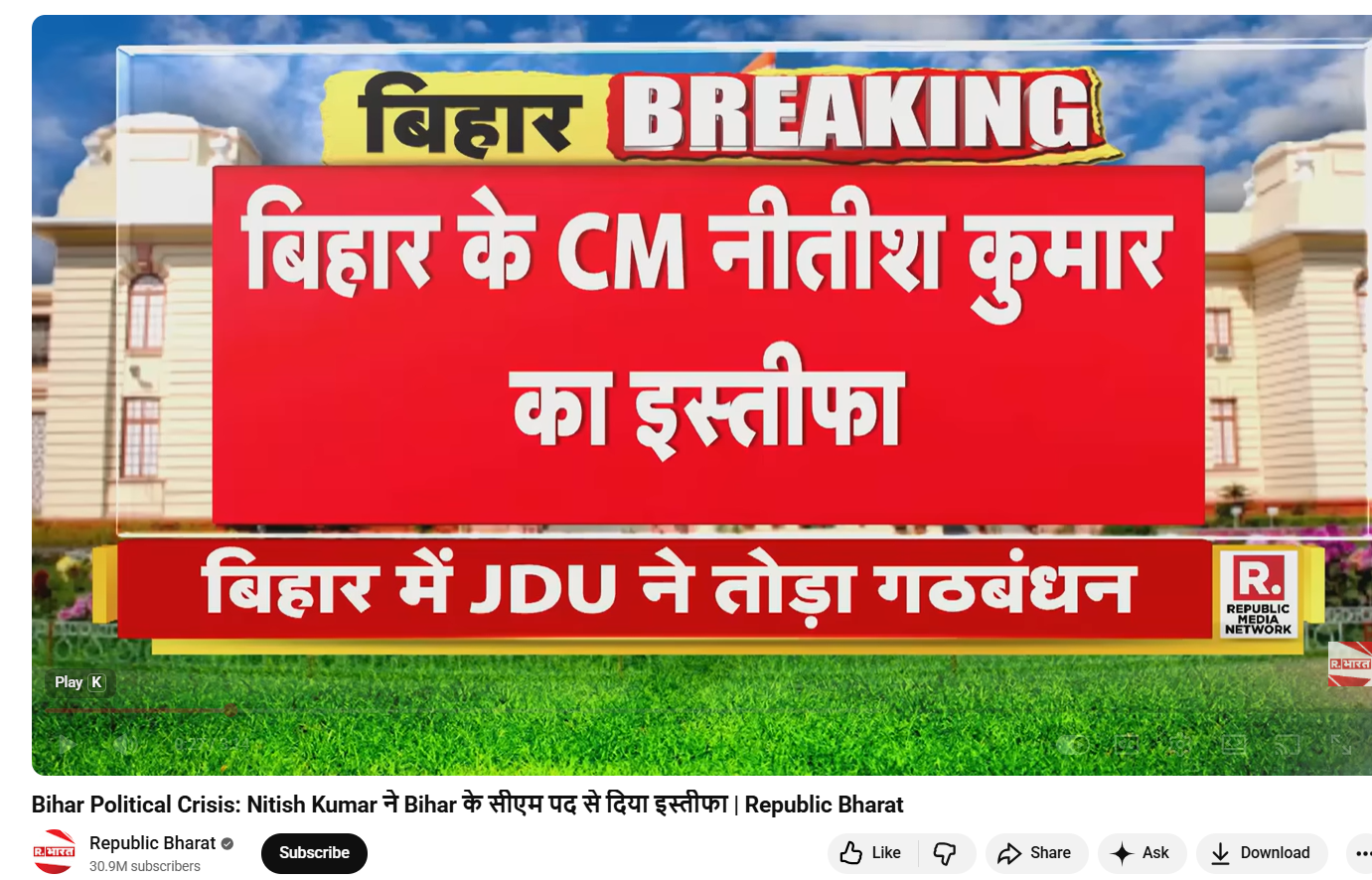

In the next step, keyframes from the viral video were extracted and reverse-searched using Google Lens. This led to the official YouTube channel of Republic Bharat, where the full version of the same video was found. The video was uploaded on August 9, 2022. This clearly establishes that the clip circulating on social media is not recent and is being shared out of context.

Conclusion

CyberPeace’s research confirms that the viral video claiming Nitish Kumar resigned over the UGC issue is false. The video dates back to 2022 and has no link to the current UGC controversy. An old political video has been deliberately circulated with a misleading narrative to create confusion on social media.

Related Blogs

In a recent ruling, a U.S. federal judge sided with Meta in a copyright lawsuit brought by a group of prominent authors who alleged that their works were illegally used to train Meta’s LLaMA language model. While this seems like a significant legal victory for the tech giant, it may be narrower than it appears. Rather, the case offers a useful lesson for creators in the USA seeking to refine their legal strategies, and for policymakers worldwide who need to act quickly to shape the rules of engagement between AI and intellectual property.

The Case: Meta vs. Authors

In Kadrey v. Meta, the plaintiffs alleged that Meta trained its LLaMA models on pirated copies of their books, violating copyright law. However, U.S. District Judge Vince Chhabria ruled that the authors failed to prove two critical things: that their copyrighted works had been used in a way that harmed their market and that such use was not “transformative.” In fact, the judge ruled that converting text into numerical representations to train an AI was sufficiently transformative under the U.S. fair use doctrine. He also noted that the authors’ failure to demonstrate economic harm undermined their claims. Importantly, he clarified that this ruling does not mean that all AI training data usage is lawful, only that the plaintiffs didn’t make a strong enough case.

Meta even admitted that some data was sourced from pirate sites like LibGen, but the judge still found that fair use could apply because the usage was transformative and non-exploitative.

A Tenuous Win

Chhabria’s decision emphasised that this is not a blanket endorsement of using copyrighted content in AI training. The judgment leaned heavily on the procedural weakness of the case and not necessarily on the inherent legality of Meta’s practices.

Policy experts caution that, while U.S. courts have so far treated AI training as fair use in narrow cases, these rulings may not set a strong judicial precedent. Courts could apply the law differently where plaintiffs present clearer evidence of commercial harm or where content is used more directly.

Moreover, the ruling does not address whether authors or publishers should have the right to opt out of AI model training, a concern that is gaining momentum globally.

Implications for India

The case highlights a glaring gap in India’s copyright regime: it is outdated. Since most AI companies are located in the U.S., American courts have had the opportunity to examine copyright in the context of AI. Indian courts have yet to do so. Recently, news agency ANI filed a copyright infringement case against OpenAI in the Delhi High Court for training on its copyrighted material. However, the case is only at an interim stage. Its final outcome will significantly shape whether language models can lawfully be trained on copyrighted material in India.

Considering that India aims to develop “state-of-the-art foundational AI models trained on Indian datasets” under the IndiaAI Mission, the lack of clear legal guidance on what constitutes fair dealing when using copyrighted material for AI training is a significant gap.

Thus, key points of consideration for policymakers include:

- Need for Fair Dealing Clarity: India’s fair-dealing provisions under the Copyright Act, 1957, are narrower than U.S. fair use. These provisions may have to be reviewed to balance the rights of creators against the need for diverse datasets to develop foundational models rooted in Indian contexts. Such a review also raises parallel concerns about data privacy.

- Push for Opt-Out or Licensing Mechanisms: India should consider whether to introduce a framework that requires companies to license training data or provide an opt-out system for creators, especially given the volume of Indian content being scraped by global AI systems.

- Digital Public Infrastructure for AI: India’s policymakers could take this opportunity to invest in public datasets, especially in regional languages, that are both high quality and legally safe for AI training.

- Protecting Local Creators: India needs to ensure that its authors, filmmakers, educators and journalists are protected from having their work repurposed without compensation, since power asymmetries between Big Tech and local creators can lead to exploitation of the latter.

Conclusion

The ruling in Meta’s favour is just one win for the developer. The real questions about consent, compensation and creative control remain unanswered. Meanwhile, the lesson for India is urgent: it needs AI policies that balance innovation with creator rights and provide legal certainty and ethical safeguards as it accelerates its AI ecosystem. Further, as global tech firms race ahead, India must not remain a passive data source; it must set the terms of its digital future. This will help the country move a step closer to achieving its goal of building sovereign AI capacity and becoming a hub for digital innovation.

References

- https://www.theguardian.com/technology/2025/jun/26/meta-wins-ai-copyright-lawsuit-as-us-judge-rules-against-authors

- https://www.wired.com/story/meta-scores-victory-ai-copyright-case/

- https://www.cnbc.com/2025/06/25/meta-llama-ai-copyright-ruling.html

- https://www.mondaq.com/india/copyright/1348352/what-is-fair-use-of-copyright-doctrine

- https://www.pib.gov.in/PressReleasePage.aspx?PRID=2113095#:~:text=One%20of%20the%20key%20pillars,models%20trained%20on%20Indian%20datasets.

- https://www.ndtvprofit.com/law-and-policy/ani-vs-openai-delhi-high-court-seeks-responses-on-copyright-infringement-charges-against-chatgpt

Introduction

Cybersecurity threats have been globally prevalent for quite some time now. All nations, organisations and individuals are at risk from new and emerging cybersecurity threats, which put finances, privacy, data, identities and sometimes human lives at stake. The latest Data Breach Report by IBM revealed that a staggering 83% of organisations experienced more than one data breach during 2022. As per the 2022 Data Breach Investigations Report by Verizon, ransomware attacks rose by 13%, an increase as large as that of the previous five years combined. These statistics show that threats are likely to multiply as we advance further into the digital age.

Who is Okta?

Okta is a secure identity cloud that links all your apps, logins and devices into a unified digital fabric. Founded in 2009 and based in San Francisco, USA, Okta has become one of the leading identity service providers in the United States, and its high-quality products and services brought the company early success. Although Okta is not as well known as the big tech companies, it plays a vital role in the cybersecurity systems of large organisations. More than 18,000 users of the identity management company's products rely on it for a single login across the many platforms a business uses. For instance, Zoom uses Okta to provide "seamless" access to its Google Workspace, ServiceNow, VMware and Workday systems with only one login, showing how fundamental Okta is to reducing the effort of working across multiple platforms. In the digital age, such organisations play an instrumental role in driving innovation and entrepreneurship.

The Okta Breach

On Friday, 20 October 2023, Okta reported a hack of its customer support system, causing considerable disruption within the organisation. The impact of the hack was visible in the market, where Okta's shares suffered heavy losses.

Since the attack, the company's market value has dropped by more than $2 billion. The high-profile incident is the latest in a long line of events connected to Okta or its products. Earlier this year, casino giants Caesars and MGM were both hit by hacks that caused days-long disruptions to hotel rooms in Las Vegas. Both of those attacks targeted the companies' Okta installations and used sophisticated social engineering carried out through IT help desks.

What can be done to prevent this?

Cybersecurity attacks on organisations have become very common since the pandemic and are now rampant across the globe. Major tech companies have succeeded in setting up SOPs, safeguards and precautionary measures to protect their digital assets and interests. However, micro, small and medium business owners remain the most vulnerable to such unforeseen, high-intensity attacks. The governments of various nations have established Computer Emergency Response Teams to monitor and investigate large-scale cyberattacks on both organisations and individuals. The issue of cybersecurity can be better addressed by incorporating the following practices into our daily digital routines:

- Team Upskilling: Organisations need to create upskilling opportunities for employees on cybersecurity and emerging threats. These programmes should be run periodically, focusing on both the individual and organisational impact of any threat.

- Reporting Mechanism for Employees and Customers: Business owners and organisations need to deploy robust, sustainable and efficient reporting mechanisms for employees as well as customers. Such a mechanism will be fundamental in pinpointing potential grey areas and threats in the organisation's cybersecurity setup. Many governments around the world now mandate a dedicated reporting mechanism, as it demonstrates transparency and supports natural justice in terms of legal remedies.

- Preventive, Precautionary and Recovery Policies: Organisations need to create and deploy preventive, precautionary and recovery policies for different forms of cyber attacks and threats. These policies will help organisations understand threats better and respond faster in emergencies. They should be updated regularly to keep pace with emerging technologies, and they can be deployed efficiently by conducting mock drills and threat assessment exercises.

- Global Dialogue Forums: Organisations and the industry should build a community of cybersecurity enthusiasts from diverse backgrounds to address the growing issues of cyberspace. This can be done by creating and hosting global dialogue forums, which will serve as hubs for sharing best practices, advisories, threat assessment reports and information on potential threats and attacks, thereby establishing better inter-agency and inter-organisation communication and coordination.

- Data Anonymisation and Encryption: Organisations should have transparent data management and processing policies in place and should always store data in encrypted and anonymised form, creating a blanket of safety in case of a data breach.

- Critical Infrastructure: Industry leaders should push the limits of innovation by setting up state-of-the-art critical cyber infrastructure that creates employment and fosters innovation and an entrepreneurial spirit among the youth, thus creating a whole new generation of cyber-ready professionals and dedicated netizens. Critical infrastructure is essential for building a safe, secure and resilient digital ecosystem.

- Cybersecurity Audits & Sandboxing: All organisations should establish periodic cybersecurity audits, conducted by both internal and external entities, to find any issues or grey areas in their security systems. This will create a more robust and adaptive cybersecurity mechanism for the organisation and its employees. All companies that develop and test technology should conduct proper sandboxing exercises for any new technology or software to identify its shortcomings and flaws.

Conclusion

In view of the rising number of cybersecurity attacks on organisations, especially small and medium companies, much has been done, but much more is needed to ensure safety and security for companies, employees and customers. The Okta breach clearly shows how cyber attacks can have massive repercussions for any organisation in the form of monetary loss, lost business, reputational damage and more. Organisations should treat such incidents as lessons and prepare to combat similar threats, ultimately working towards preventing them and eradicating the influence of bad actors from our digital ecosystem altogether.

References:

- https://hbr.org/2023/05/the-devastating-business-impacts-of-a-cyber-breach#:~:text=In%202022%2C%20the%20global%20average,legal%20fees%2C%20and%20audit%20fees.

- https://www.okta.com/intro-to-okta/#:~:text=Okta%20is%20a%20secure%20identity,use%20to%20work%2C%20instantly%20available.

- https://www.cyberpeace.org/resources/blogs/mgm-resorts-shuts-down-it-systems-after-cyberattack

Introduction

According to a draft of the Digital Personal Data Protection Bill, 2023, the Indian government may have the authority to reduce the age at which users can agree to data processing to 14 years. Companies requesting consent to process children’s data, on the other hand, must demonstrate that the information is handled in a “verifiably safe” manner.

The Central Government might change the age limit for consent

The proposed Digital Personal Data Protection Bill 2022 in India seeks to protect children's personal data through several provisions. The proposed lowering of the age of consent under the Bill is seen as a move to loosen the relevant norms and meet the demands of Internet companies. After a year, the government may reconsider the definition of a child with the goal of expanding coverage to children under the age of 14. The proposed shift in the age of consent has drawn varied views, with some experts suggesting that it could expose children to data processing risks.

In the data protection Bill, which is expected to be introduced in Parliament’s Monsoon session, the definition of a child is understood to have been amended to an “individual who has not completed the age of eighteen years or such lower age as the central government may notify.” The 2022 draft defined a child as an “individual who has not completed eighteen years of age”.

Under deemed consent, the government has also added the 'legitimate business interest' clause

This clause allows businesses to process personal data without obtaining explicit consent if it is required for their legitimate business interests. The measure recognises that corporations have legitimate objectives, such as innovation, that can be pursued without jeopardising privacy.

Changes to the Data Protection Board

The Digital Personal Data Protection Bill 2022, India’s new plan to secure personal data, represents a significant shift in strategy by emphasising outcomes rather than legislative compliance. This amendment will strengthen the Data Protection Board’s position, as its judgments on noncompliance complaints will establish India’s first systematic jurisprudence on data protection. The Cabinet has approved the bill, which may be introduced in Parliament in the Monsoon session starting on July 20.

The draft law leaves the selection of the Data Protection Board’s chairperson and members solely to the discretion of the central government, making it a board constituted by the central government. The government retains control over the board’s composition, terms of service and related matters. The bill does specify, however, that the Data Protection Board would be completely independent and would follow a strictly adjudicatory procedure to adjudicate data breaches. It would have the same status as a civil court, and its rulings could be appealed.

India's first regulatory body in charge of preserving privacy

Some expected amendments to the law include a blacklist of countries to which Indian data cannot be transferred and fewer penalties for data breaches. The bill’s scope is limited to processing digital personal data within Indian territory, which means that any offline personal data and anything not digitised will be exempt from the legislation’s jurisdiction. Furthermore, the measure is silent on the governance of digital paper records.

Conclusion

The Digital Personal Data Protection Bill 2022 is a much-needed piece of legislation that will replace India’s current data protection regime and help preserve individuals’ rights. The central government is considering lowering the age of consent from 18 to 14 years. The bill underlines the need for verifiable parental consent before processing a child’s personal data, that is, the data of anyone under 18. This provision seeks to ensure that parents or legal guardians have a say in the processing of their child’s personal data.