#FactCheck - Viral Video Showing Man Frying Bhature on His Stomach Is AI-Generated

A video circulating on social media shows a man allegedly rolling out bhature on his stomach and then frying them in a pan. The clip is being shared with a communal narrative, with users making derogatory remarks while falsely linking the act to a particular community.

CyberPeace Foundation’s research found the viral claim to be false. Our probe confirms that the video is not real but has been created using artificial intelligence (AI) tools and is being shared online with a misleading and communal angle.

Claim

On January 5, 2025, several users shared the viral video on the social media platform X (formerly Twitter). One such post carried a communal caption suggesting that the person shown in the video does not belong to a particular community and making offensive remarks about hygiene and food practices.

- Post Link: https://x.com/RightsForMuslim/status/2008035811804291381

- Archive Link: https://archive.ph/lKnX5

Fact Check:

A close examination of the viral video revealed several visual inconsistencies and unnatural movements, raising suspicion about its authenticity. Such anomalies are commonly associated with AI-generated or digitally manipulated content.

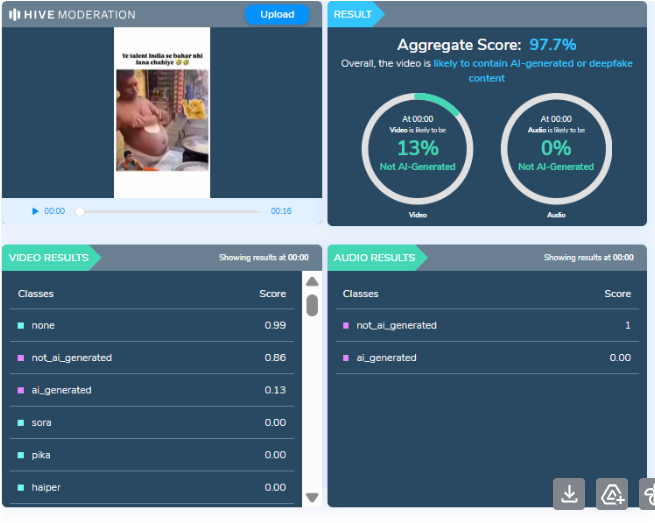

To verify this, the video was analysed using the AI detection tool HIVE Moderation. According to the tool’s results, the video was found to be 97 percent AI-generated, strongly indicating that it was not recorded in real life but synthetically created.

Conclusion

CyberPeace Foundation’s research clearly establishes that the viral video is AI-generated and does not depict a real incident. The clip is being deliberately shared with a false and communal narrative to mislead users and spread misinformation on social media. Users are advised to exercise caution and verify content before sharing such sensational and divisive material online.

Related Blogs

Amid the popularity of OpenAI’s ChatGPT and Google’s announcement of introducing its own Artificial Intelligence chatbot called Bard, there has been much discussion over how such tools can impact India at a time when the country is aiming for an AI revolution.

During the Budget Session, Finance Minister Nirmala Sitharaman talked about AI, while her colleague, Minister of State (MoS) for Electronics and Information Technology Rajeev Chandrasekhar discussed it at the India Stack Developer Conference.

While Sitharaman stated that the government will establish three centres of excellence in AI in the country, Chandrasekhar mentioned at the event that India Stack, which includes digital solutions like Aadhaar, Digilocker and others, will become more sophisticated over time with the inclusion of AI.

As AI chatbots become the buzzword, News18 discusses with experts how such tech tools will impact India.

AI IN INDIA

Many experts believe that in a country like India, which is extremely diverse in nature and has a sizeable population, the introduction of technologies and their right adoption can bring a massive digital revolution.

For example, Manoj Gupta, Co-founder of Plotch.ai, a full-stack AI-enabled SaaS product, told News18 that Bard is still experimental and not open to everyone, while ChatGPT is available and can be used to build applications on top of it.

He said: “Conversational chatbots are interesting since they have the potential to automate customer support and assisted buying in e-commerce. Even simple banking applications can be built that can use ChatGPT AI models to answer queries like bank balance, service requests etc.”

According to him, such tools could be extremely useful for people who are currently excluded from the digital economy due to language barriers.

Ashwini Vaishnaw, Union Minister for Communications, Electronics & IT, has also talked about using such tools to reduce communication issues. At the World Economic Forum in Davos, he said: “We integrated our Bhashini language AI tool, which translates from one Indian language to another Indian language in real-time, spoken and text everything. We integrated that with ChatGPT and are seeing very good results.”

‘DOUBLE-EDGED SWORD’

Sundar Balasubramanian, Managing Director, India & SAARC, at Check Point Software, told News18 that generative AI like ChatGPT is a “double-edged sword”.

According to him, used in the right way, it can help developers write and fix code quicker, enable better chat services for companies, or even be a replacement for search engines, revolutionising the way people search for information.

“On the flip side, hackers are also leveraging ChatGPT to accelerate their bad acts and we have already seen examples of such exploitations. ChatGPT has lowered the bar for novice hackers to enter the field as they are able to learn quicker and hack better through asking the AI tool for answers,” he added.

Balasubramanian also stated that Check Point Research (CPR) has seen the quality of phishing emails improve tremendously over the past three months, making it increasingly difficult to distinguish legitimate messages from targeted phishing scams.

“Despite the emergence of the use of generative AI impacting cybercrime, Check Point is continually reminding organisations and individuals of the significance of being vigilant as ChatGPT and Codex become more mature, it can affect the threat landscape, for both good and bad,” he added.

While the real-life applications of ChatGPT include several things ranging from language translation to explaining tricky math problems, Balasubramanian said it can also be used for making the work of cyber researchers and developers more efficient.

“Generative AI or tools like ChatGPT can be used to detect potential threats by analysing large amounts of data and identifying patterns that may indicate malicious activity. This can help enterprises quickly identify and respond to a potential threat before it escalates to something more,” he added.

POSITIVE FACTORS

Major Vineet Kumar, Founder and Global President of CyberPeace Foundation, believes that the deployment of AI chatbots has proven to be highly beneficial in India, where a booming economy and increasing demand for efficient customer service have led to a surge in their use. According to him, both ChatGPT and Bard have the potential to bring significant positive change to various industries and individuals in India.

“ChatGPT has already made an impact by revolutionising customer service, providing instant and accurate support, and reducing wait time. It has automated tedious and complicated tasks for businesses and educational institutions, freeing up valuable time for more significant activities. In the education sector, ChatGPT has also improved learning experiences by providing quick and reliable information to students and educators,” he added.

He also said there are several possible positive impacts that the AI chatbots, ChatGPT and Bard, could have in India and these include improved customer experience, increased productivity, better access to information, improved healthcare, improved access to education and better financial services.

Reference Link: https://www.news18.com/news/explainers/confused-about-chatgpt-bard-experts-tell-news18-how-openai-googles-ai-chatbots-may-impact-india-7026277.html

Introduction

Generative AI, particularly deepfake technology, poses significant risks to security in the financial sector. Deepfake technology can convincingly mimic voices, create lip-sync videos, execute face swaps, and carry out other types of impersonation through tools like DALL-E, Midjourney, Respeecher, Murf, etc., which are now widely accessible and have been misused for fraud. For example, in 2024, cybercriminals in Hong Kong used deepfake technology to impersonate the Chief Financial Officer of a company, defrauding it of $25 million. Surveys, including Regula’s Deepfake Trends 2024 and Sumsub reports, highlight financial services as the most targeted sector for deepfake-induced fraud.

Deepfake Technology and Its Risks to Financial Systems

India’s financial ecosystem, including banks, NBFCs, and fintech companies, is leveraging technology to enhance access to credit for households and MSMEs. The country is a leader in global real-time payments and its digital economy comprises 10% of its GDP. However, it faces unique cybersecurity challenges. According to the RBI’s 2023-24 Currency and Finance report, banks cite cybersecurity threats, legacy systems, and low customer digital literacy as major hurdles in digital adoption. Deepfake technology intensifies risks like:

- Social Engineering Attacks: Information security breaches through phishing, vishing, etc. become more convincing with deepfake imagery and audio.

- Bypassing Authentication Protocols: Deepfake audio or images may circumvent voice and image-based authentication systems, exposing sensitive data.

- Market Manipulation: Misleading deepfake content making false claims and endorsements can harm investor trust and damage stock market performance.

- Business Email Compromise Scams: Deepfake audio can mimic the voice of a real person with authority in the organization to falsely authorize payments.

- Evolving Deception Techniques: The usage of AI will allow cybercriminals to deploy malware that can adapt in real-time to carry out phishing attacks and inundate targets with increased speed and variations. Legacy security frameworks are not suited to countering automated attacks at such a scale.

Existing Frameworks and Gaps

In 2016, the RBI introduced cybersecurity guidelines for banks, neo-banking, lending, and non-banking financial institutions, focusing on resilience measures like Board-level policies, baseline security standards, data leak prevention, running penetration tests, and mandating Cybersecurity Operations Centres (C-SOCs). It also mandated incident reporting to the RBI for cyber events. Similarly, SEBI’s Cybersecurity and Cyber Resilience Framework (CSCRF) applies to regulated entities (REs) like stock brokers, mutual funds, KYC agencies, etc., requiring policies, risk management frameworks, and third-party assessments of cyber resilience measures. While both frameworks are comprehensive, they require updates addressing emerging threats from generative AI-driven cyber fraud.

CyberPeace Recommendations

- AI Cybersecurity to Counter AI Cybercrime: AI-generated attacks can be designed to overwhelm defenders with their speed and scale. Cybercriminals increasingly exploit platforms like LinkedIn, Microsoft Teams, and Messenger to target people. Organizations of all sizes will increasingly have to use AI-based cybersecurity for detection and response, since generative AI is becoming increasingly essential in combating hackers and breaches.

- Enhancing Multi-factor Authentication (MFA): With improving image and voice-generation/manipulation technologies, enhanced authentication measures such as token-based authentication or other hardware-based measures, abnormal behaviour detection, multi-device push notifications, geolocation verifications, etc. can be used to improve prevention strategies. New targeted technological solutions for content-driven authentication can also be implemented.

- Addressing Third-Party Vulnerabilities: Financial institutions often outsource operations to vendors that may not follow the same cybersecurity protocols, which can introduce vulnerabilities. Ensuring all parties follow standardized protocols can address these gaps.

- Protecting Senior Professionals: Senior-level and high-profile individuals at organizations are at a greater risk of being imitated or impersonated since they hold higher authority over decision-making and have greater access to sensitive information. Protecting their identity metrics through technological interventions is of utmost importance.

- Advanced Employee Training: To build organizational resilience, employees must be trained to understand how generative and emerging technologies work. A well-trained workforce can significantly lower the likelihood of successful human-focused cyberattacks like phishing and impersonation.

- Financial Support to Smaller Institutions: Smaller institutions may not have the resources to invest in robust long-term cybersecurity solutions and upgrades. They require financial and technological support from the government to meet requisite standards.
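The multi-factor authentication recommendation above can be illustrated with a small step-up rule. This is only a sketch under stated assumptions: the `PaymentRequest` fields, the `high_value` threshold, and the check names are hypothetical and are not drawn from any RBI or SEBI framework. The idea it shows is that a voice or video approval alone is never treated as sufficient, since that is the channel a deepfake can imitate.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PaymentRequest:
    """Hypothetical risk signals attached to a payment authorization."""
    amount: float               # requested transfer amount
    new_device: bool            # device has not been seen for this user before
    unusual_location: bool      # geolocation differs from the user's usual pattern
    voice_or_video_only: bool   # approval so far rests only on a call or video


def required_checks(req: PaymentRequest, high_value: float = 100_000.0) -> List[str]:
    """Return the authentication steps to demand before approving the request."""
    checks = ["password"]
    # Device or location anomalies trigger a hardware-based factor.
    if req.new_device or req.unusual_location:
        checks.append("hardware_token")
    # Voice/video can be deepfaked, so confirm on a second registered device;
    # large transfers get the same treatment regardless of channel.
    if req.voice_or_video_only or req.amount >= high_value:
        checks.append("multi_device_push")
    return checks


if __name__ == "__main__":
    routine = PaymentRequest(500.0, False, False, False)
    risky = PaymentRequest(250_000.0, True, False, True)
    print(required_checks(routine))
    print(required_checks(risky))
```

A real deployment would derive these signals from behavioural analytics and tune the threshold to the institution's own risk appetite; the fixed values here are placeholders.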

Conclusion

According to The India Cyber Threat Report 2025 by the Data Security Council of India (DSCI) and Seqrite, deepfake-enabled cyberattacks, especially in the finance and healthcare sectors, are set to increase in 2025. This has the potential to disrupt services, steal sensitive data, and exploit geopolitical tensions, presenting a significant risk to the critical infrastructure of India.

As the threat landscape changes, institutions will have to continue to embrace AI and Machine Learning (ML) for threat detection and response. The financial sector must prioritize robust cybersecurity strategies, participate in regulation-framing procedures, adopt AI-based solutions, and enhance workforce training to safeguard against AI-enabled fraud. Collaborative efforts among policymakers, financial institutions, and technology providers will be essential to strengthen defenses.

Sources

- https://sumsub.com/newsroom/deepfake-cases-surge-in-countries-holding-2024-elections-sumsub-research-shows/

- https://www.globenewswire.com/news-release/2024/10/31/2972565/0/en/Deepfake-Fraud-Costs-the-Financial-Sector-an-Average-of-600-000-for-Each-Company-Regula-s-Survey-Shows.html

- https://www.sipa.columbia.edu/sites/default/files/2023-05/For%20Publication_BOfA_PollardCartier.pdf

- https://edition.cnn.com/2024/02/04/asia/deepfake-cfo-scam-hong-kong-intl-hnk/index.html

- https://www.rbi.org.in/Commonman/English/scripts/Notification.aspx?Id=1721

- https://elplaw.in/leadership/cybersecurity-and-cyber-resilience-framework-for-sebi-regulated-entities/

- https://economictimes.indiatimes.com/tech/artificial-intelligence/ai-driven-deepfake-enabled-cyberattacks-to-rise-in-2025-healthcarefinance-sectors-at-risk-report/articleshow/115976846.cms?from=mdr

Introduction:

Former Egyptian MP Ahmed Eltantawy was targeted with Cytrox’s Predator spyware in a campaign believed to be state-sponsored cyber espionage. The targeting took place between May and September 2023, after Eltantawy announced his intention to run for president in the 2024 elections. The spyware was delivered through links sent via SMS and WhatsApp, and through network injection when Eltantawy visited certain websites. The Citizen Lab investigated the attacks with the help of Google's Threat Analysis Group (TAG) and obtained an iPhone zero-day exploit chain designed to install the spyware on iOS versions up to 16.6.1.

Investigation: The Ahmed Eltantawy Incident

The Citizen Lab forensically examined Eltantawy's device and uncovered several attempts to target him with Cytrox's Predator spyware. During the investigation, The Citizen Lab and TAG discovered an iOS exploit chain used in the attacks. They started a responsible disclosure process with Apple, which led to the release of updates patching the vulnerabilities used by the exploit chain. Mobile zero-day exploit chains can be very expensive, with black market prices reaching millions of dollars. The Citizen Lab also identified several domain names and IP addresses associated with Cytrox’s Predator spyware. According to the study, network injection was also used to get the malware onto Eltantawy's phone: when he visited certain websites that did not use HTTPS, he was silently redirected to a malicious website.
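Network injection of this kind depends on the request travelling over unencrypted HTTP, where an on-path attacker can alter the response. As a minimal sketch of that distinction, the snippet below flags URLs that would be fetched over plain HTTP. The function names and sample URLs are hypothetical, and the check is deliberately simple: it looks only at the URL scheme and says nothing about redirects or certificate validity.

```python
from typing import Iterable, List
from urllib.parse import urlsplit


def uses_plain_http(url: str) -> bool:
    """Return True when the URL's scheme is unencrypted HTTP."""
    return urlsplit(url).scheme.lower() == "http"


def flag_plain_http(urls: Iterable[str]) -> List[str]:
    """Keep only the URLs that would be requested without TLS."""
    return [u for u in urls if uses_plain_http(u)]


if __name__ == "__main__":
    # Placeholder URLs for illustration only.
    visited = [
        "https://example.org/news",
        "http://example.com/login",
        "http://example.net/",
    ]
    for url in flag_plain_http(visited):
        print("unencrypted:", url)
```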

What is Cyber Espionage?

Cyber espionage, also referred to as cyber spying, is a type of cyberattack in which an unauthorised user tries to obtain confidential or sensitive information or intellectual property (IP) for financial gain, business benefit, or political objectives.

Apple's Response: A Look at iOS Vulnerability Patching

Following The Citizen Lab’s disclosure, Apple issued three emergency updates for iOS, iPadOS, and macOS Ventura to address the flaws. Users are advised to update their iOS, iPadOS, and macOS Ventura devices, keep them up to date, and enable Lockdown Mode on iPhones.

The updates address the following vulnerabilities:

- CVE-2023-41991

- CVE-2023-41992

- CVE-2023-41993

Apple customers are advised to install these emergency security updates immediately to protect themselves against potential targeted spyware attacks. Updating promptly protects devices against attacks that exploit these particular zero-day vulnerabilities. It is therefore advisable to keep software up to date and enable the security features available on Apple devices.
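The exploit chain described above was designed for iOS versions up to 16.6.1. As a rough sketch, a version string can be compared against that figure to see whether a device falls in the targeted range. The function names are hypothetical, and the threshold encodes only the "up to 16.6.1" figure reported above. It does not identify which specific releases contain Apple's fixes, so Apple's own security advisories remain the authoritative source.

```python
from typing import Tuple

# Last iOS version the exploit chain was reported to target (per the article).
LAST_TARGETED_IOS: Tuple[int, int, int] = (16, 6, 1)


def parse_version(text: str) -> Tuple[int, int, int]:
    """Turn a dotted version such as '16.6' into a padded 3-tuple (16, 6, 0)."""
    parts = [int(p) for p in text.strip().split(".")]
    while len(parts) < 3:
        parts.append(0)
    return (parts[0], parts[1], parts[2])


def in_targeted_range(version: str) -> bool:
    """Return True when the version is at or below the last targeted release."""
    return parse_version(version) <= LAST_TARGETED_IOS


if __name__ == "__main__":
    for v in ["16.5", "16.6.1", "16.7", "17.0.1"]:
        status = "in targeted range" if in_targeted_range(v) else "newer than targeted range"
        print(v, "->", status)
```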

Conclusion:

Ahmed Eltantawy, a former Egyptian MP and presidential candidate, was targeted with Cytrox’s Predator spyware after announcing his bid for the presidency. The investigation's findings indicate that he was the victim of a sophisticated campaign believed to be state-sponsored cyber espionage. The incident raises questions about the loss of privacy and points to mala fide intent on the part of political opponents. Apple has urged all users to update their devices. The case also raises alarming concerns about the lack of controls on the export of spyware technologies and underscores the importance of security updates and Lockdown Mode on Apple devices.

References:

- https://uksnackattack.co.uk/predator-in-the-wires-ahmed-eltantawy-targeted-by-predator-spyware-upon-presidential-ambitions-announcement

- https://citizenlab.ca/2023/09/predator-in-the-wires-ahmed-eltantawy-targeted-with-predator-spyware-after-announcing-presidential-ambitions/#:~:text=Between%20May%20and%20September%202023,in%20the%202024%20Egyptian%20elections.

- https://thehackernews.com/2023/09/latest-apple-zero-days-used-to-hack.html

- https://www.hackread.com/zero-day-ios-exploit-chain-predator-spyware/