#FactCheck - Viral Video of Sachin Tendulkar Commenting on Joe Root Is AI-Generated

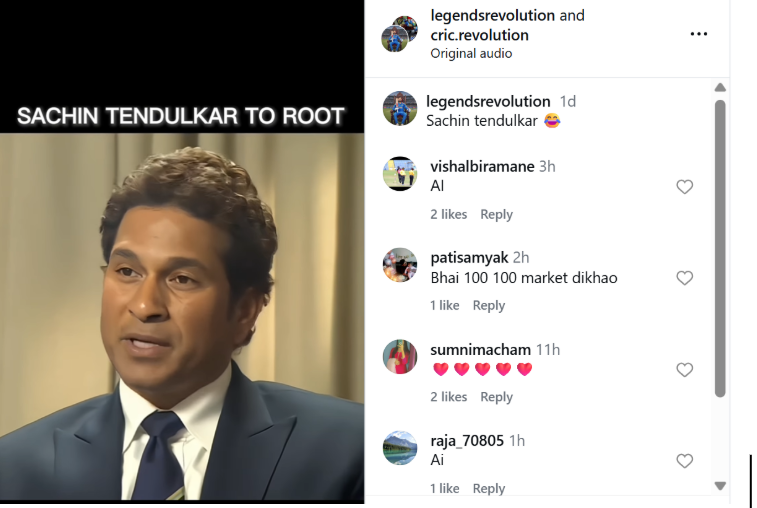

A video circulating on social media claims to show former Indian cricketer Sachin Tendulkar commenting on England batter Joe Root’s batting feats. In the clip, Tendulkar is allegedly heard saying that if Joe Root continues scoring centuries, even his (Tendulkar’s) record would be broken. The video further claims that Tendulkar says if Root scores another century, he would give up the bat’s grip, after which the clip abruptly ends.

Users sharing the video are claiming that Sachin Tendulkar has taken a dig at Joe Root through this remark.

Cyber Peace Foundation’s research found the claim to be misleading. Our research clearly establishes that the viral video is not authentic but has been created using Artificial Intelligence (AI) tools and is being shared online with a false narrative.

CLAIM

On January 5, 2025, several users shared the viral video on Instagram, claiming it shows Sachin Tendulkar making remarks about Joe Root’s century-scoring spree.

(Post link and archive link available.)

FACT CHECK

To verify the claim, we extracted keyframes from the viral video and conducted a Google Reverse Image Search. This led us to an interview of Sachin Tendulkar published on the official BBC News YouTube channel on November 18, 2013. The visuals from that interview match exactly with those seen in the viral clip.
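The keyframe step above can be illustrated with a small sketch. The article does not say which tool was used to extract keyframes, so this is only one plausible approach and not the method used in this fact check: keep a frame whenever it differs enough from the last frame that was kept. The function names, the `threshold` value, and the representation of frames as flat lists of grayscale pixel values are all assumptions made for illustration; in practice the frames would first be decoded from the video with a video-processing library.

```python
from typing import List, Sequence


def mean_abs_diff(a: Sequence[int], b: Sequence[int]) -> float:
    """Average per-pixel absolute difference between two equal-sized frames."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def select_keyframes(frames: Sequence[Sequence[int]],
                     threshold: float = 30.0) -> List[int]:
    """Return indices of frames that differ noticeably from the last kept frame.

    `threshold` is a hypothetical cut-off on a 0-255 grayscale range.
    """
    if not frames:
        return []
    kept = [0]  # the first frame is always kept
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i], frames[kept[-1]]) > threshold:
            kept.append(i)
    return kept
```

Each selected frame can then be saved as an image and submitted to a reverse image search to look for an earlier source of the visuals.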

This establishes that the visuals used in the viral video are old and have been repurposed with manipulated audio to create a misleading narrative.

Further, Joe Root made his Test debut in 2012. At the time of the 2013 interview, he was at the very start of his Test career and was nowhere close to Sachin Tendulkar’s record tally of hundreds. This timeline itself makes the viral claim implausible.

(Link to the original BBC interview available.)

https://www.youtube.com/watch?v=v6Rz4pgR9UQ

Upon closely examining the viral clip, we noticed that Sachin Tendulkar’s voice sounded unnatural and inconsistent. This raised suspicion of audio manipulation.

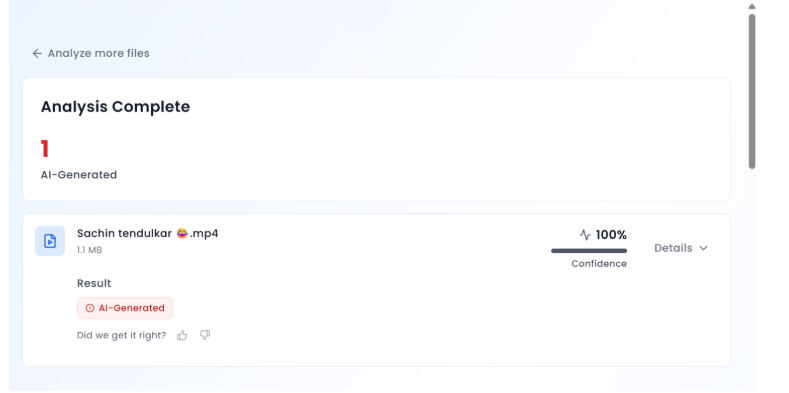

We then ran the viral video through an AI detection tool, Aurigin AI. According to the results, the audio in the video was found to be 100 percent AI-generated, confirming that Tendulkar never made the statements attributed to him in the clip.

Conclusion

Our research confirms that the viral video claiming Sachin Tendulkar commented on Joe Root’s centuries is fake. The video has been created using AI-generated audio and misleadingly combined with visuals from a 2013 interview. Users are sharing this manipulated clip on social media with a false claim.

Related Blogs

Introduction

Public infrastructure has traditionally served as the framework for civilisation, transporting people, money, and ideas across time and space, from the iron veins of transcontinental railroads to the unseen arteries of the internet. In democracies where free markets and public infrastructure co-exist, this framework has not only facilitated but also accelerated progress. Digital Public Infrastructure (DPI), which powers inclusiveness, fosters innovation, and changes citizens from passive recipients to active participants in the digital age, is emerging as the new civic backbone as we move away from highways and towards high-speed data.

DPI enables innovation at the margins and inclusion at scale by providing open-source, interoperable platforms for identities, payments, and data exchange. India’s Aadhaar (digital identification), UPI (real-time payments), and DigiLocker (data empowerment) are examples of how the Global South is evolving from a passive consumer of technology to a creator of globally replicable governance models. As the ‘digital commons’ emerges, DPI does more than simply link users; it also empowers citizens, eliminates inefficiencies from the past, and reimagines the creation and distribution of public value in the digital era.

Securing the Digital Infrastructure: A Contemporary Imperative

We already inhabit the future, and we stand at a threshold for reform. Digital infrastructure is no longer just a public good; it is now a strategic asset, akin to oil pipelines in the 20th century. India is recognised globally for introducing “India Stack”, which has transformed digital payments. The economic value contributed by DPIs is predicted to reach 2.9-4.2 percent of India’s GDP by 2030, up from 0.9 percent in 2022. Part of DPI’s success lies in its role in India’s economic development: among emerging market economies, it helped propel India to the top of the revenue administrations’ digitalisation index. The other part lies in how it has changed India’s social service delivery across the board. By enabling digital and financial inclusion, it has increased access to education (DIKSHA) and is presently being developed to offer agricultural (VISTAAR) and digital health (ABDM) services.

Securing the Foundations: Emerging Threats to Digital Public Infrastructure

The rising prominence of DPI is not without risk, as adversaries are developing with comparable sophistication. A new generation of cyber threats, ranging from hostile state actors to cybercriminal syndicates, targets the core underpinnings of public digital systems. These threats put the government’s sustained development efforts at risk. Modern examples include targeted attacks on biometric databases, AI-based misinformation and psychological warfare, payment system hacks, state-sponsored malware, cross-border phishing campaigns, surveillance spyware, and sovereign malware.

Securing DPI requires a radical rethink that goes beyond encryption methods and perimeter firewalls. It requires an understanding of cybersecurity that is systemic, ethical, and geopolitical. Democracy, inclusivity, and national integrity are all at stake when DPI is compromised. To preserve the confidence and promise of digital public infrastructure, policy frameworks must change from fragmented responses to coordinated, proactive and people-centred cyber defence policies.

CyberPeace Recommendations

Powering Progress, Ignoring Protection: A Precarious Path

The Indian government is aware that cyberattacks are becoming more frequent and sophisticated in the nation. To address the nation’s cybersecurity issues, the government has implemented a number of legislative, technical, and administrative policy initiatives. While the initiatives are commendable, there are a few Non-Negotiables that need to be in place for effective protection:

- DPIs must be declared Critical Information Infrastructure. In accordance with the IT Act, 2000, DPIs such as Aadhaar, UPI, DigiLocker, Account Aggregator, CoWIN, and ONDC must be designated as Critical Information Infrastructure (CII) and be supervised by the NCIIPC, just like the banking, energy, and telecom industries. The NCIIPC should be given the authority to publish mandatory security guidelines, carry out audits, and enforce compliance across the DPI stack, including incident response protocols tailored to each DPI.

- To solidify security, data sovereignty, and cyber responsibility, India should spearhead global efforts to create a Global DPI Cyber Compact through the “One Future Alliance” and the G20. To ensure interoperable cybersecurity frameworks for international DPI projects, promote open standards, cross-border collaboration on threat intelligence, and uniform incident reporting guidelines.

- Establish a DPI Threat Index to monitor vulnerabilities, including phishing attacks, attempted biometric breaches, sovereign malware footprints, spikes in AI misinformation, and patterns in payment fraud. Create daily or weekly risk dashboards by integrating data from state CERTs, RBI, UIDAI, CERT-In, and NPCI. Use machine learning (ML) driven detection systems.

- Make explainability audits mandatory for AI/ML systems used throughout DPI (e.g., welfare algorithms, credit scoring) to make sure that the decision-making process is open, impartial, and subject to scrutiny. Use the recently established IndiaAI Safety Institute, in line with India’s AI mission, to conduct AI audits, establish explainability standards, and create sector-specific compliance guidelines.
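The DPI Threat Index recommended above is not specified in any detail, so the following is only a minimal sketch of how such an index might be computed, under stated assumptions. The category names follow the recommendation, but the weights, the baseline comparison, and the cap at twice the baseline are hypothetical choices made for illustration, as are all function and variable names.

```python
from typing import Dict

# Hypothetical weights: the recommendation names these categories
# but does not say how much each should count.
WEIGHTS: Dict[str, float] = {
    "phishing": 0.2,
    "biometric_breach_attempts": 0.3,
    "sovereign_malware": 0.2,
    "ai_misinformation": 0.1,
    "payment_fraud": 0.2,
}


def threat_index(counts: Dict[str, float], baselines: Dict[str, float]) -> float:
    """Combine incident counts into a single 0-100 score.

    Each category is compared with its baseline (an assumed "normal" level);
    the ratio is capped at 2x so one category cannot dominate the score.
    A score of 50 means every category is exactly at its baseline.
    """
    score = 0.0
    for category, weight in WEIGHTS.items():
        baseline = baselines[category]
        if baseline <= 0:
            raise ValueError(f"baseline for {category} must be positive")
        ratio = min(counts.get(category, 0) / baseline, 2.0)
        score += weight * ratio / 2.0
    return round(score * 100, 1)
```

A dashboard of the kind described could recompute this score daily or weekly from the reporting agencies’ feeds; how the weights and baselines are set would be a policy decision rather than a technical one.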

References

- https://orfamerica.org/newresearch/dpi-catalyst-private-sector-innovation?utm_source=chatgpt.com

- https://www.institutmontaigne.org/en/expressions/indias-digital-public-infrastructure-success-story-world

- https://www.pib.gov.in/PressReleasePage.aspx?PRID=2116341

- https://www.pib.gov.in/PressReleaseIframePage.aspx?PRID=2033389

- https://www.governancenow.com/news/regular-story/dpi-must-ensure-data-privacy-cyber-security-citizenfirst-approach

Introduction

Privacy has become a concern for netizens, as social media companies have access to users’ data and the ability to use that data as they see fit. Meta’s business model, which relies heavily on collecting and processing user data to deliver targeted advertising, has come under scrutiny. The conflict between Meta and the EU traces back to 2018, when the GDPR became applicable. Meta has faced numerous fines for failing to comply with the regulation, mainly for failing to obtain explicit consent for data processing under Chapter 2, Article 7 of the GDPR. The ePrivacy Regulation, which focuses on digital communication and digital data privacy, is the next tool in the EU’s arsenal to protect user privacy and will target the cookie policies and tracking tech crucial to Meta’s ad-targeting mechanism. Meta’s core revenue stream comes from targeted advertising, which requires vast amounts of data to create a personalised experience and is therefore scrutinised by the EU.

Pay for Privacy Model and its Implications with Critical Analysis

Meta came up with a solution to deal with the privacy issue - ‘Pay or Consent,’ a model that allows users to opt out of data-driven advertising by paying a subscription fee. The platform would offer users a choice between free, ad-supported services and a paid privacy-enhanced experience which aligns with the GDPR and potentially reduces regulatory pressure on Meta.

Meta presently needs to assess the economic feasibility of this model: how much a user would be willing to pay for the privacy offered, and how far its monetisation could shift from ad-driven profits to subscription revenues. This would have a direct impact on Meta’s advertisers, who rely on the platform’s detailed user data for targeted advertising. It could decrease ad revenue and push Meta to develop other monetisation strategies.

For users, a potential outcome would be increased privacy and greater control over their data, in line with global privacy concerns. While users will undoubtedly appreciate the option to avoid tracking, the proposal raises the question of whether the need to pay might become a barrier. This could divide users into cost-conscious and privacy-conscious segments. Setting a reasonable price point is necessary for widespread adoption of the model.

For regulators and the tech industry, the model would set a new precedent and could influence other companies’ approaches to data privacy. Regulators might welcome this move and encourage further innovation in privacy-respecting business models.

The affordability and fairness of the ‘pay or consent’ model are open to question: it could create digital inequality if privacy comes at a cost or, worse, becomes a luxury. The subscription model would also need to clarify what data would be collected and how it would be used for non-advertising purposes. In terms of market competition, competitors might capitalise on Meta’s subscription model by offering free services with privacy guarantees, which could further pressure Meta to refine its offerings to stay competitive. According to the EU, the model needs to provide a third option in which users still see ads, but ads that are not personalised.

Meta has further expressed a willingness to explore various models to address regulatory concerns and enhance user privacy. Its recent pilot programs testing the pay-for-privacy model are one example. Meta is actively engaging with EU regulators to find mutually acceptable solutions and to demonstrate its commitment to compliance while advocating for business models that sustain innovation. Meta executives have emphasised the importance of user choice and transparency in their future business strategies.

Future Impact Outlook

- The Meta-EU tussle over privacy is a manifestation of broader debates about data protection and business models in the digital age.

- The EU’s stance on Meta’s ‘pay or consent’ model and any new regulatory measures will shape the future landscape of digital privacy. Other jurisdictions may take their cues from it, potentially leading to global shifts in privacy regulations.

- Meta may need to iterate on its approach based on consumer preferences and concerns. Competitors and tech giants will closely monitor Meta’s strategies, possibly adopting similar models or developing new solutions. The overall approach to privacy could evolve to prioritise user control and transparency.

Conclusion

Consent is the cornerstone of privacy, and sidestepping it violates the rights of users. The manner in which tech companies foster a culture of consent is of paramount importance in today’s digital landscape. As Meta explores the ‘pay or consent’ model, it faces both opportunities and challenges in balancing user privacy with business sustainability. This situation serves as a critical test case for the tech industry, highlighting the need for innovative solutions that respect privacy while fostering growth and that account for the specifics of data protection laws worldwide, including India’s Digital Personal Data Protection Act, 2023.

References

- https://ciso.economictimes.indiatimes.com/news/grc/eu-tells-meta-to-address-consumer-fears-over-pay-for-privacy/111946106

- https://www.wired.com/story/metas-pay-for-privacy-model-is-illegal-says-eu/

- https://edri.org/our-work/privacy-is-not-for-sale-meta-must-stop-charging-for-peoples-right-to-privacy/

- https://fortune.com/2024/04/17/meta-pay-for-privacy-rejected-edpb-eu-gdpr-schrems/

Introduction

In the past few decades, technology has rapidly advanced, significantly impacting various aspects of life. Today, we live in a world shaped by technology, which continues to influence human progress and culture. While technology offers many benefits, it also presents certain challenges. It has increased dependence on machines, reduced physical activity, and encouraged more sedentary lifestyles. The excessive use of gadgets has contributed to social isolation. Different age groups experience the negative aspects of the digital world in distinct ways. For example, older adults often face difficulties with digital literacy and accessing information. This makes them more vulnerable to cyber fraud. A major concern is that many older individuals may not be familiar with identifying authentic versus fraudulent online transactions. The consequences of such cybercrimes go beyond financial loss. Victims may also experience emotional distress, reputational harm, and a loss of trust in digital platforms.

Why Senior Citizens Are A Vulnerable Target

Digital exploitation involves a variety of influencing tactics, such as coercion, undue influence, manipulation, and frequently some sort of deception, which makes senior citizens easy targets for scammers. Senior citizens have been largely neglected in research on this burgeoning type of digital crime. Many of our parents and grandparents grew up in an era when politeness and trust were very common, making it difficult for them to say “no” or recognise when someone was attempting to scam them. Seniors who struggle with financial stability may be more likely to fall for scams promising financial relief or security. They might encounter obstacles in learning to use new technologies, mainly due to unfamiliarity. It is important to note that these factors do not make seniors weak or incapable. Rather, it is the responsibility of the community to recognise and address the unique vulnerabilities of our senior population and work to prevent them from falling victim to scams.

Senior citizens are particularly susceptible to social engineering attacks. Scammers may impersonate people such as family members in distress or government officials to deceive seniors into sending money or sharing personal information. Common scams include:

- The grandparent scam

- Tech support scam

- Government impersonation scams

- Romance scams

- Digital arrest

Protecting Senior Citizens from Digital Scams

As a society, we must focus on educating seniors about common cyber fraud techniques such as impersonation of family members or government officials, the use of fake emergencies, or offers that seem too good to be true. It is important to guide them on how to verify suspicious calls and emails, caution them against sharing personal information online, and use real-life examples to enhance their understanding.

Larger organisations and NGOs can play a key role in protecting senior citizens from digital scams by conducting fraud awareness training, engaging in one-on-one conversations, inviting seniors to share their experiences through podcasts, and organising seminars and workshops specifically for individuals aged 60 and above.

Safety Tips

In today's digital age, safeguarding oneself from cyber threats is crucial for people of all ages. Here are some essential steps everyone should take at a personal level to remain cyber secure:

- Ensuring that software and operating systems are regularly updated allows users to benefit from the latest security fixes, reducing their vulnerability to cyber threats.

- Avoiding the sharing of personal information online is also essential. Monitoring bank statements is equally important, as it helps in quickly identifying signs of potential cybercrime. Reviewing financial transactions and reporting any unusual activity to the bank can assist in detecting and preventing fraud.

- If a call seems suspicious, it is advisable to hang up and contact the company directly using a different phone line. This is because cybercriminals can sometimes keep the original line open, leading individuals to believe they are speaking with a legitimate representative. In such cases, attackers may impersonate trusted organisations to deceive users and gain sensitive information.

- If an individual becomes a victim of cybercrime, they should take immediate action to protect their personal information and seek professional guidance.

- Stay calm and respond swiftly and wisely. Begin by collecting and preserving all evidence—this includes screenshots, suspicious messages, emails, or any unusual activity. Report the incident immediately to the police or through an official platform like www.cybercrime.gov.in and the helpline number 1930.

- If financial information is compromised, the affected individual must alert their bank or financial institution without delay to secure their accounts. They should also update passwords and implement two-factor authentication as additional safeguards.

Conclusion: Collective Action for Cyber Dignity and Inclusion

Elder abuse in the digital age is an invisible crisis. It’s time we bring it into the spotlight and confront it with education, empathy, and collective action. Safeguarding senior citizens from cybercrime necessitates a comprehensive approach that combines education, vigilance, and technological safeguards. By fostering awareness and providing the necessary tools and support, we can empower senior citizens to navigate the digital world safely and confidently. Let us stand together to support these initiatives, to be the guardians our elders deserve, and to ensure that the digital world remains a place of opportunity, not exploitation.

References

- https://portal.ct.gov/ag/consumer-issues/hot-scams/the-grandparents-scam

- https://www.fbi.gov/how-we-can-help-you/scams-and-safety/common-frauds-and-scams/tech-support-scams

- https://consumer.ftc.gov/articles/how-avoid-government-impersonation-scam

- https://www.jpmorgan.com/insights/fraud/fraud-mitigation/helping-your-elderly-and-vulnerable-loved-ones-avoid-the-scammers

- https://www.fbi.gov/how-we-can-help-you/scams-and-safety/common-frauds-and-scams/romance-scams
