#FactCheck: False Claims of Fireworks in Dubai International Stadium celebrating India’s Champions Trophy Victory 2025

Executive Summary:

A misleading video claiming to show fireworks at Dubai International Cricket Stadium following India’s 2025 ICC Champions Trophy win has gone viral, causing confusion among viewers. Our investigation confirms that the video is unrelated to the cricket tournament. It actually depicts the fireworks display from the December 2024 Arabian Gulf Cup opening ceremony at Kuwait’s Jaber Al-Ahmad Stadium. This incident underscores the rapid spread of outdated or misattributed content, particularly in relation to significant sports events, and highlights the need for vigilance in verifying such claims.

Claim:

The viral video is claimed to show fireworks and a drone display at Dubai International Cricket Stadium after India's win in the ICC Champions Trophy 2025.

Fact Check:

A reverse image search of the most prominent keyframes in the viral video traced it back to the opening ceremony of the 26th Arabian Gulf Cup, held at Jaber Al-Ahmad International Stadium in Kuwait on December 21, 2024. The fireworks seen in the video match the imagery from this event. A closer look at the stadium's architecture also confirms that the venue is not Dubai International Cricket Stadium, as claimed. Official sources and media reports further confirm that there was no such fireworks celebration in Dubai after India's ICC Champions Trophy 2025 win. The video has therefore been misattributed and shared with incorrect context.

Fig: Claimed Stadium Picture

Conclusion:

A viral video claiming to show fireworks at Dubai International Cricket Stadium after India's 2025 ICC Champions Trophy win is misleading. Our research confirms the video is from the December 2024 Arabian Gulf Cup opening ceremony at Kuwait’s Jaber Al-Ahmad Stadium. A reverse image search and architectural analysis of the stadium debunk the claim, with official sources verifying no such celebration took place in Dubai. The video has been misattributed and shared out of context.

- Claim: Fireworks in Dubai celebrate India’s Champions Trophy win.

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Introduction:

The Ministry of Civil Aviation, GOI, established the initiative ‘DigiYatra’ to ensure hassle-free and health-risk-free journeys for travellers/passengers. The initiative uses a single token of face biometrics to digitally validate identity, travel, and health along with any other data needed to enable air travel.

Cybersecurity is a top priority for the DigiYatra platform administrators, with measures implemented to mitigate risks of data loss, theft, or leakage. With over 6.5 million users, DigiYatra is an important step for India towards secure digital travel, with seamless integration of proactive cybersecurity protocols. This blog examines the development of DigiYatra, along with the challenges and implications involved in securing digital travel.

What is DigiYatra? A Quick Overview

DigiYatra is a flagship initiative by the Government of India to enable paperless travel, reducing identity checks for a seamless airport experience. This technology allows the entry of passengers to be automatically processed based on a facial recognition system at all the checkpoints at the airports, including main entry, security check areas, aircraft boarding, and more.

This technology makes the boarding process quick and seamless, as each passenger needs less than three seconds to pass through every touchpoint. Passengers’ faces essentially serve as their documents (ID proof and, if required, vaccine proof) and their boarding passes.

DigiYatra has also enhanced airport security as passenger data is validated by the Airlines Departure Control System. It allows only the designated passengers to enter the terminal. Additionally, the entire DigiYatra Process is non-intrusive and automatic. In improving long-standing security and operational airport protocols, the platform has also significantly improved efficiency and output for all airport professionals, from CISF personnel to airline staff members.

Policy Origins and Framework

Rooted in the Government of India's Digital India campaign and enabled by the National Civil Aviation Policy (NCAP) 2016, DigiYatra aims to modernise air travel by integrating Aadhaar-based passenger identification. While Aadhaar is currently the primary ID, efforts are underway to include other identification methods. The platform, supported by stakeholders like the Airports Authority of India (26%) and private airports (14.8% each), must navigate stringent cybersecurity demands. Compliance with the Digital Personal Data Protection Act, 2023, ensures the secure use of sensitive facial recognition data, while the Aircraft (Security) Rules, 2023, mandate robust interoperability and data protection mechanisms across stakeholders. DigiYatra also aspires to democratise digital travel, extending its reach to underserved airports and non-tech-savvy travellers. As India refines its cybersecurity and privacy frameworks, learning from global best practices is essential to safeguarding data and ensuring seamless, secure air travel operations.

International Practices

Global practices offer crucial lessons to strengthen DigiYatra's cybersecurity and streamline the seamless travel experience. Initiatives such as CLEAR in the USA and Seamless Traveller initiatives in Singapore offer actionable insights into further expanding the system to its full potential. CLEAR is operational in 58 airports and has more than 17 million users. Singapore has made Seamless Traveller active since the beginning of 2024 and aims to have a 95% shift to automated lanes by 2026.

Some additional measures that India can adopt from international initiatives are regular audits and updates to the cybersecurity policies. Further, India can aim for a cross-border policy for international travel. By implementing these recommendations, DigiYatra can not only improve data security and operational efficiency but also establish India as a leader in global aviation security standards, ensuring trust and reliability for millions of travellers.

CyberPeace Recommendations

Some recommendations for further improving upon our efforts for seamless and secure digital travel are:

- Strengthen the legislation on biometric data usage and storage.

- Collaborate with global aviation bodies to develop standardised operations.

- Adopt cybersecurity technologies, such as blockchain for immutable data records, alongside encryption standards, data minimisation practices, and anonymisation techniques.

- Foster a cybersecurity-first culture across aviation stakeholders.

Conclusion

DigiYatra represents a transformative step in modernising India’s aviation sector by combining seamless travel with robust cybersecurity. Leveraging facial recognition and secure data validation enhances efficiency while complying with the Digital Personal Data Protection Act, 2023, and Aircraft (Security) Rules, 2023.

DigiYatra must address challenges like secure biometric data storage, adopt advanced technologies like blockchain, and foster a cybersecurity-first culture to reach its full potential. Expanding to underserved regions and aligning with global best practices will further solidify its impact. With continuous innovation and vigilance, DigiYatra can position India as a global leader in secure, digital travel.

References

- https://government.economictimes.indiatimes.com/news/governance/digi-yatra-operates-on-principle-of-privacy-by-design-brings-convenience-security-ceo-digi-yatra-foundation/114926799

- https://www.livemint.com/news/india/explained-what-is-digiyatra-how-it-will-work-and-other-questions-answered-11660701094885.html

- https://www.civilaviation.gov.in/sites/default/files/2023-09/ASR%20Notification_published%20in%20Gazette.pdf

A video of Bollywood actor Salman Khan is being widely circulated on social media, in which he can allegedly be heard saying that he will soon join Asaduddin Owaisi’s party, the All India Majlis-e-Ittehadul Muslimeen (AIMIM). Along with the video, a purported image of Salman Khan with Asaduddin Owaisi is also being shared. Social media users are claiming that Salman Khan is set to join the AIMIM party.

CyberPeace research found the viral claim to be false. Our research revealed that Salman Khan has not made any such statement, and that both the viral video and the accompanying image are AI-generated.

Claim

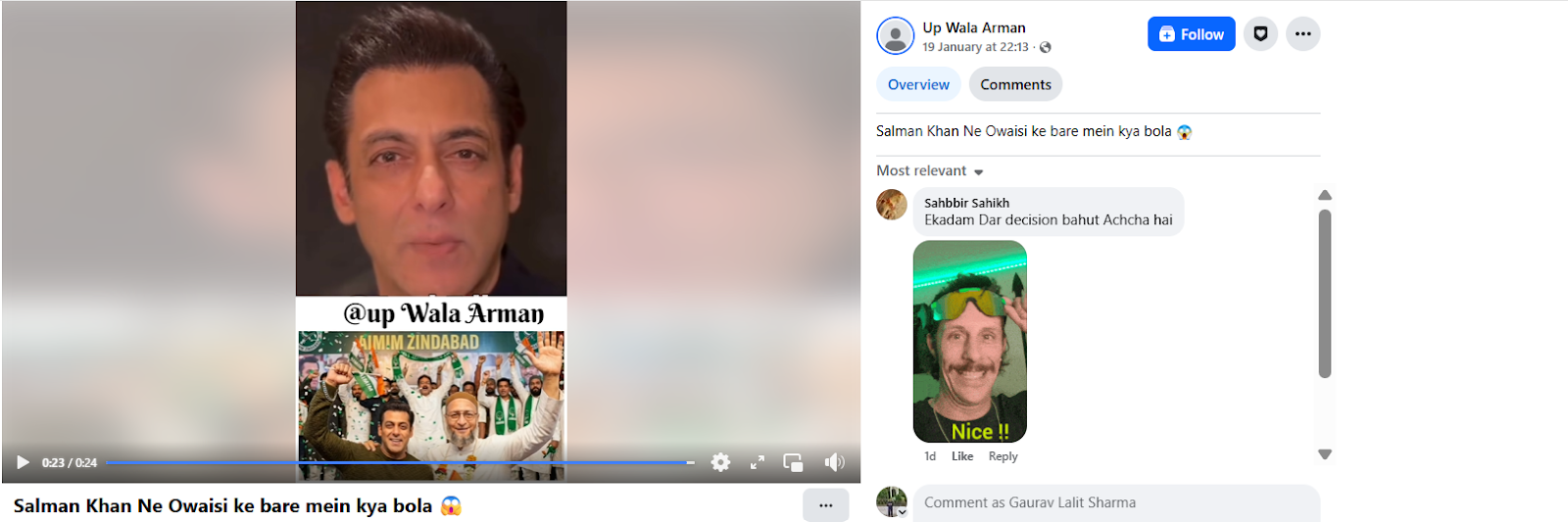

Social media users claim that Salman Khan has announced his decision to join AIMIM. On 19 January 2026, a Facebook user shared the viral video with the caption, “What did Salman say about Owaisi?” In the video, Salman Khan can allegedly be heard saying that he is going to join Owaisi’s party. (The link to the post, its archived version, and screenshots are available.)

Fact Check:

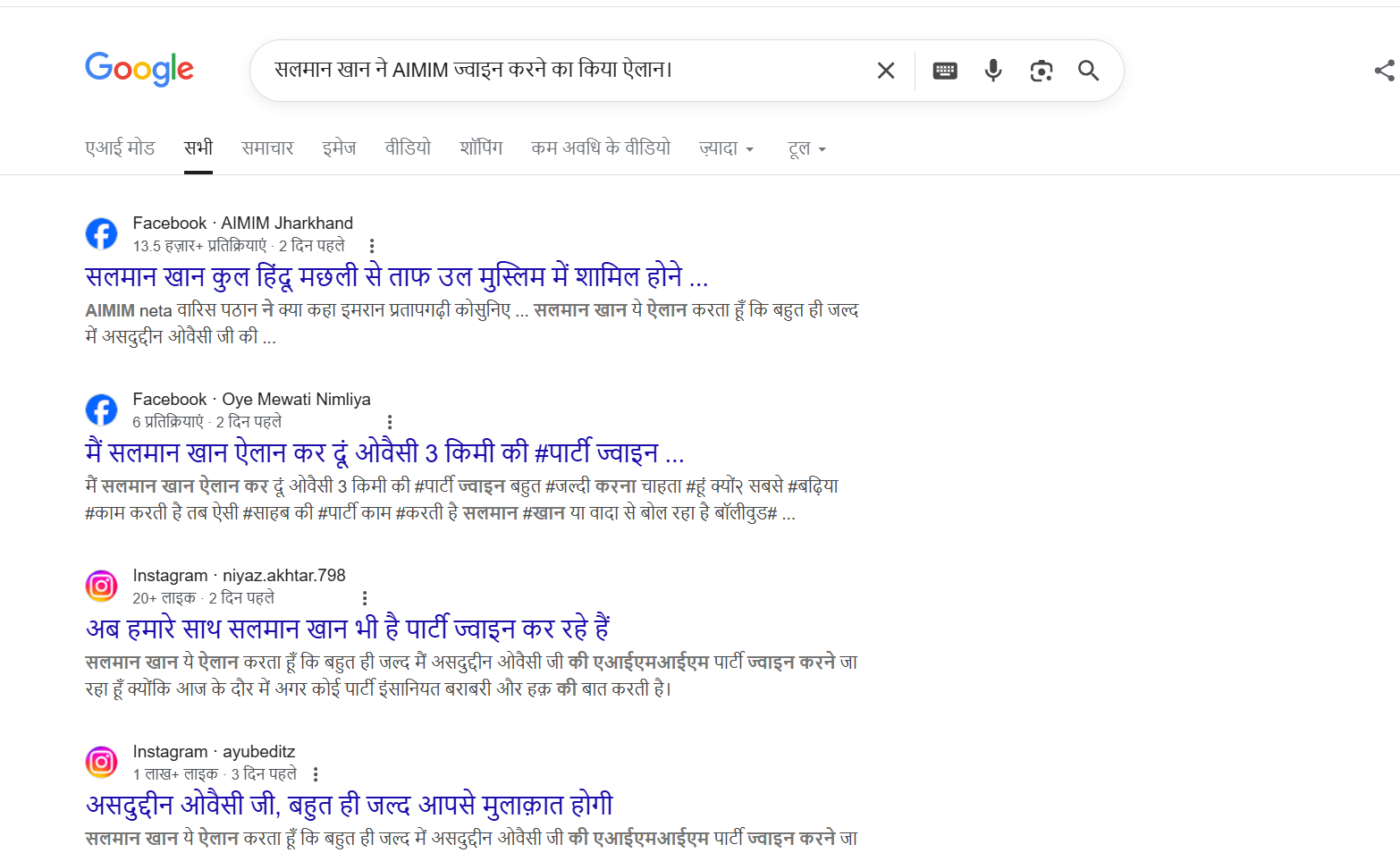

To verify the claim, we first searched Google using relevant keywords. However, no credible or reliable media reports were found supporting the claim that Salman Khan is joining AIMIM.

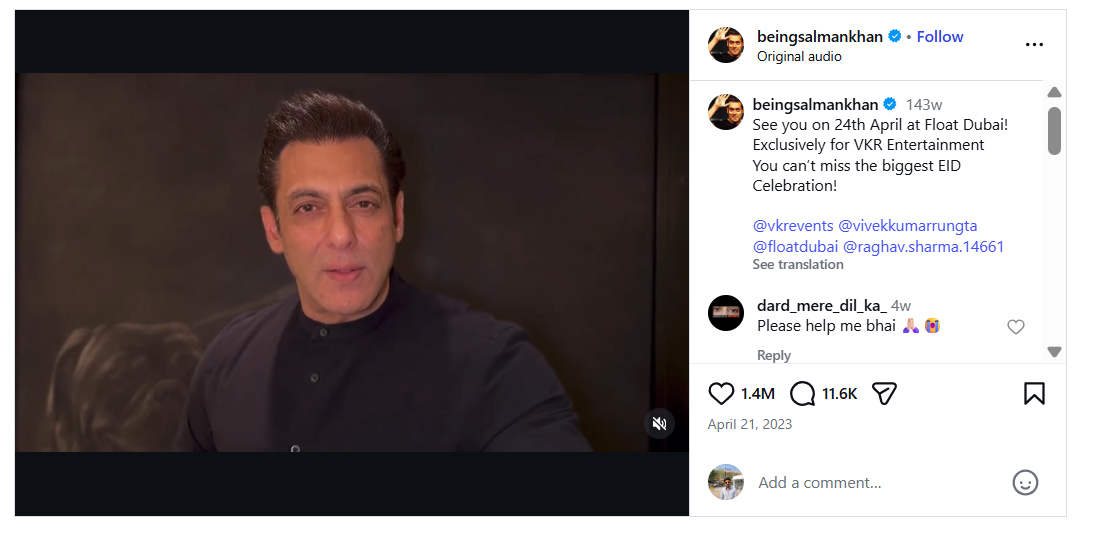

In the next step of verification, we extracted key frames from the viral video and conducted a reverse image search using Google Lens. This led us to a video posted on Salman Khan’s official Instagram account on 21 April 2023. In the original video, Salman Khan is seen talking about an event scheduled to take place in Dubai. A careful review of the full video confirmed that no statement related to AIMIM or Asaduddin Owaisi is made.

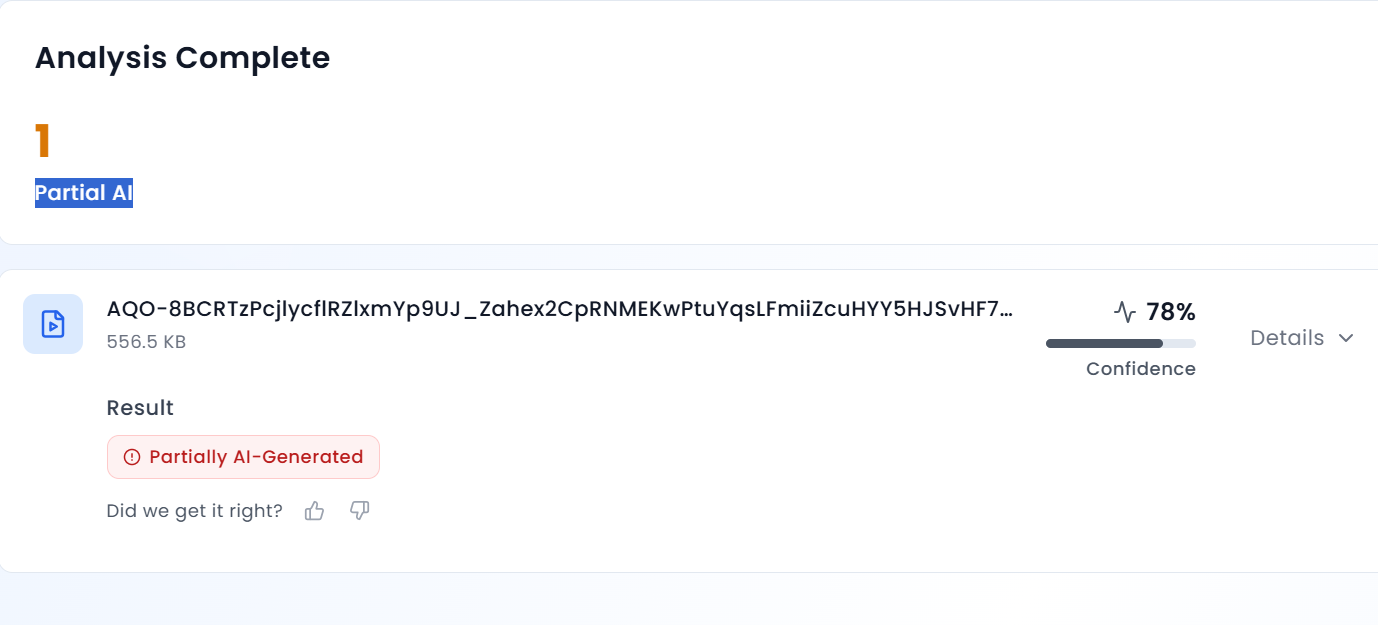

Further analysis of the viral clip revealed that Salman Khan’s voice sounds unnatural and robotic. To verify this, we scanned the video using AURGIN AI, an AI-generated content detection tool. According to the tool’s analysis, the viral video was generated using artificial intelligence.

Conclusion

Salman Khan has not announced that he is joining the AIMIM party. The viral video and the image circulating on social media are AI-generated and manipulated.

Introduction

The debate between free speech and social responsibility is one of the oldest and longest-running in history. Free speech is considered to be at the heart of every democracy. It is considered the “mother” of all other freedoms and is enshrined in Article 19(1)(a) of the Indian Constitution under Part III: Fundamental Rights. It takes various shapes and forms according to the sociopolitical context of society. The English writer Evelyn Beatrice Hall captured a founding principle of democracy when she wrote in her book The Friends of Voltaire (1906), "I disapprove of what you say, but I will defend to the death your right to say it." The drastic misuse of social media to disseminate propaganda and fake news has turned it into a marketplace of half-baked truths, the antithesis of what early philosophers dreamed of for a democratic modern age. Lose the ethics, and there you have it: the modern conceptualisation of freedom of speech and expression in the digital age. The right to freedom of speech and expression is one of the most fundamental rights, but its exercise is not unfettered, and certain limits are placed upon this right under Art. 19(2). Every right comes with a corresponding duty, and the exercise of such freedom also puts the citizenry under the responsibility not to violate the rights of others and not to use the media to demean any other person.

Social Media: The New Public Square or a Weaponised Echo Chamber

In India, Art. 19(1)(a) of the Constitution guarantees the right to freedom of speech and expression, but it is not absolute. Under Art. 19(2), this right is subject to reasonable restrictions in the interest of public order, decency, morality, and national security. This is construed as a freedom for every individual to freely express their opinions, but not to incite violence, spread falsehoods, or harm others’ dignity. Unfortunately, the boundaries between these are increasingly blurred.

The dissemination of unfiltered media and the strangulation of innocence by pushing often vulgar and obscene content down the throats of individuals, without verifying the age and gender profile of the social media user, is a big farce in the name of free speech and a conscious attempt by intermediaries and social media platforms such as Facebook, Instagram, Threads, etc., to wriggle out of their responsibility. A prime example is when Meta’s Mark Zuckerberg, on 7th January 2025, gave a statement asserting less intervention into what people find on its social media platforms as the new “best practice”. While less interference would have worked in a generation that merely operated on the differing, dissenting, and raw ideas bred by the minds of different individuals, it is not the case for this day and age. There has been a significant rise in cases where social media platforms have been used as a battleground for disputes, spreading communal violence, misinformation, and disinformation.

There is no debate about the fact that social media platforms have fostered global expression, making the world a global village and bringing everyone together. On the other hand, the platforms have become the epicentre of computer-based crimes, where children and teenagers often become prey to these crimes, including cyberbullying and cyberstalking.

Rising Importance of Platform Accountability

The most pertinent question to be asked with a conscious mind is whether an unregulated media is a reflection of Freedom of Speech, a right given to us by our Constitution under Article 19(1)(a), or whether free speech is just a garb used by big stakeholders, leaving us all victims of an impending infodemic and of AI algorithms. As per reports that surfaced during the Covid-19 pandemic, India saw a dramatic 214% rise in false information. Another report, a UNESCO-Ipsos survey, revealed that 85% of Indian respondents encounter online hate speech, with around 64% pointing to social media as a primary source.

While the focus on platform accountability is critical, it is equally important to recognise that the right to free speech is not absolute. Therefore, users also bear a constitutional responsibility while exercising this right. Free expression in a democratic society must be accompanied by civic digital behaviour, which includes refraining from spreading hate speech or misinformation and from engaging in harmful conduct online. The most recent example of this is the case of Ranveer Gautam Allahabadia vs. UOI (popularly known as the “Latent Case”), in which the court came down heavily on the hosts and makers of the show and made its position crystal clear by stating, “there is nothing like a fundamental right on platter...the fundamental rights are all followed by a duty...unless those people understand duty, there is no [...] deal with that kind of elements...if somebody wants to enjoy fundamental rights, this country gives a guarantee to enjoy, but guarantee is with a duty so that guarantee will involve performing that duty also”.

The Way Forward: CyberPeace Suggests

To derive the true benefits of the rights we are provided, especially the one under discussion, i.e., Freedom of Speech and Expression, the government and the designated intermediaries and regulators have to prepare two roadmaps, one for “Platform Accountability” and one for “User Accountability”. For the former, regulators should, with reasonable foresight, conduct Algorithm Risk Audits, a technique for making algorithms and their effects on content feeds visible. Such audits can be an effective and objective way to compare how algorithms automatically push different content to different users in an unfair or unbalanced way. As for user accountability, “Digital Literacy” is the way forward, ensuring that social media remains a marketplace of ideas and does not become a minefield of misfires.
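To make the Algorithm Risk Audit idea a little more concrete, here is a minimal Python sketch of one comparison an auditor might run: measuring how differently a recommendation algorithm fills the feeds of two user groups. Everything in it is an assumption for illustration only. The category labels, the sample feeds, and the helper names `category_shares` and `feed_divergence` are hypothetical, and total variation distance is just one of several measures that could be used. It is not a description of any regulator's or platform's actual audit method.

```python
from collections import Counter


def category_shares(feed):
    """Return the share of each content category in a feed (a list of labels)."""
    counts = Counter(feed)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {category: n / total for category, n in counts.items()}


def feed_divergence(feed_a, feed_b):
    """Total variation distance between two feeds' category shares.

    0.0 means both groups see the same mix of content;
    1.0 means the two mixes have nothing in common.
    """
    shares_a = category_shares(feed_a)
    shares_b = category_shares(feed_b)
    categories = set(shares_a) | set(shares_b)
    return 0.5 * sum(
        abs(shares_a.get(c, 0.0) - shares_b.get(c, 0.0)) for c in categories
    )


# Hypothetical audit sample: content categories served to two user groups.
feed_group_a = ["news", "news", "sports", "entertainment"]
feed_group_b = ["entertainment", "entertainment", "sensational", "news"]

divergence = feed_divergence(feed_group_a, feed_group_b)
print(f"Feed divergence between groups: {divergence:.2f}")
```

A high divergence does not prove unfairness on its own. It only flags where an auditor might look more closely at what is being pushed to which users, and why.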