The EU's Digital Services Act: Pioneering a Safer Cyberspace

Introduction

The advent of the internet has woven a rich but daunting digital web, intertwining the fabric of technology with the varied hues of human interaction, and the EU has stepped in as custodian of this ever-evolving tableau. Within this sprawling network, a veritable digital Minotaur's labyrinth, the European Union has launched a vigilant quest, seeking not merely to chart its enigmatic corridors but to instil a sense of order in its inherent chaos.

The Digital Services Act (DSA) is the EU's latest testament to this determined pilgrimage, a voyage to bring order to the nebulous realms of cyberspace. In its most recent move, the European Commission has levelled its regulatory lance at three behemoths of adult entertainment, Pornhub, XVideos, and Stripchat, each commanding millions of users.

Applicability of the DSA

Graced with the moniker of Very Large Online Platforms (VLOPs), a label reserved for services with at least 45 million average monthly users in the EU, these titans of titillation now face the complex weave of duties set out by the DSA, a legislative leviathan whose coils envelop the shadowy expanses of the internet in order to safeguard citizens from the snares and pitfalls within. Like a vigilant Minotaur, the European Commission, the EU's executive arm, stands steadfast, enforcing compliance with an unwavering gaze.

The DSA is more than a mere compilation of edicts; it carries a deeper ethos, a clarion call announcing that the wild frontiers of the digital domain shall be tamed and transformed into enclaves where individual dignity and rights are zealously championed. The three companies, the first adult-content platforms to be caught in the DSA's intricate net, are now beckoned to embark on an odyssey of transformation, realigning their operations with the EU's vision of a safeguarded internet ecosystem.

The Paradigm Shift

Following its first decision designating 19 Very Large Online Platforms and Search Engines, the Commission has now drawn this trinity of adult content purveyors into the DSA's embrace. The act demands that these platforms establish intuitive mechanisms for users to report illegal content, prioritise notices from entities awarded 'trusted flagger' status, and explain to users the reasons behind any decision to restrict or remove content. They are also tasked with building internal complaint-handling systems, promptly informing law enforcement of content that points to a criminal offence, and redesigning their systems to ensure a high level of privacy, security, and safety for minors.

But the aspirations of the DSA stretch farther, to a realm where platforms refrain from deceiving or manipulating their users and categorically eschew targeted advertising that exploits sensitive profiling data or is aimed at impressionable minors. The platforms must apply their terms of use with diligence and equitable objectivity, and they are compelled to reveal their content moderation practices through annual transparency reports.

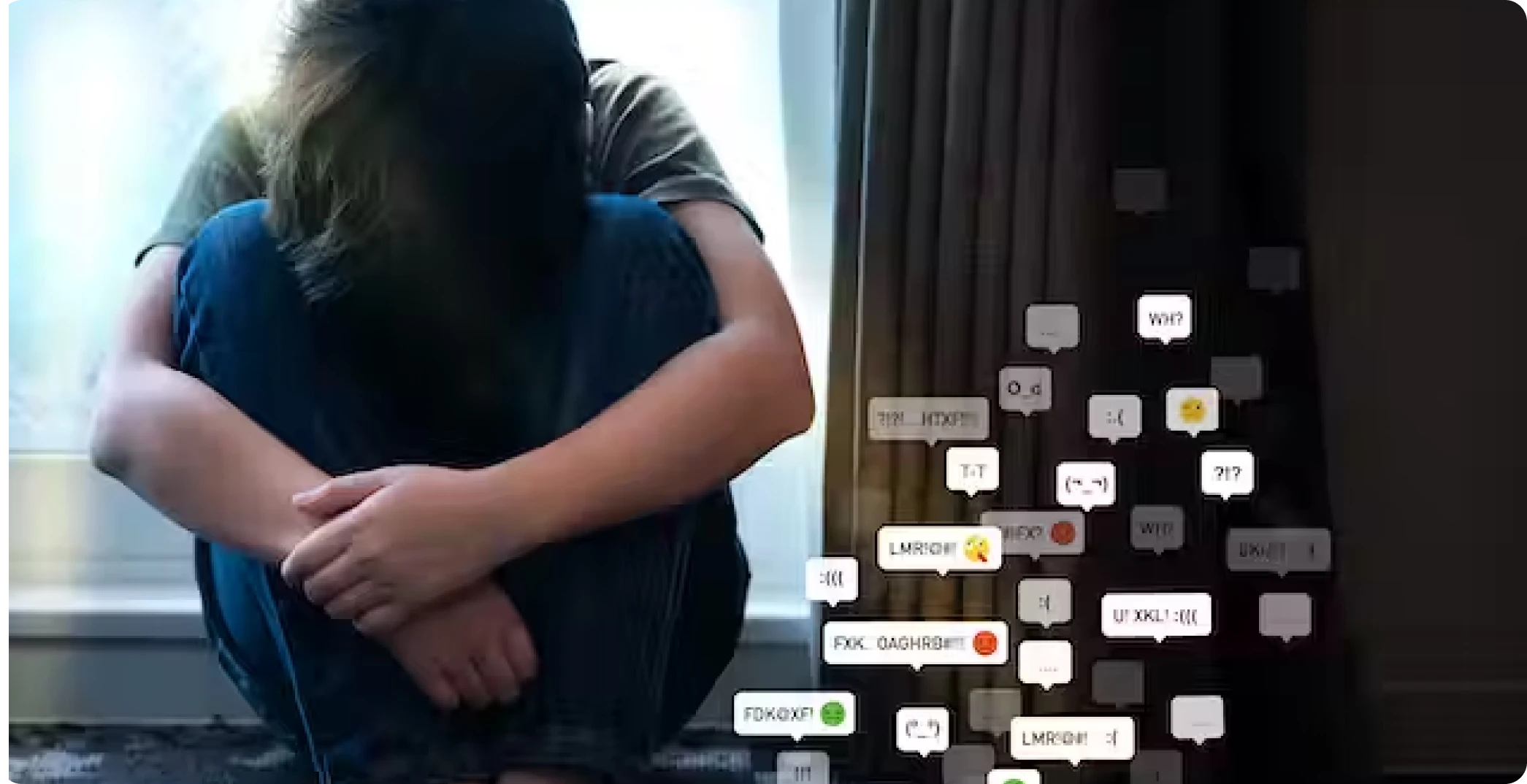

The DSA bestows upon the designated VLOPs an even more intensive catalogue of obligations. Within a scant four months of their designation, Pornhub, XVideos, and Stripchat are mandated to implement measures that both empower and shield their users, especially minors, the most vulnerable among them, from harms that traverse their digital portals. Augmented content moderation is requisite: the platforms must analyse their specific risks and adopt mitigation measures to halt the spread of illegal content, such as child sexual abuse material or intimate imagery shared without consent, and to curb the proliferation and repercussions of deepfake pornography.

The New Rules

The DSA enshrines the preeminence of protecting minors, with a staunch requirement that VLOPs design their services to anticipate and mitigate any potential threats to the welfare of young internet navigators. They must enact operational measures to prevent minors from accessing pornographic content, including robust age verification systems. The themes of transparency and accountability are amplified under the DSA's auspices: VLOPs must submit their risk assessments and overall compliance to external audits, maintain publicly accessible advertising repositories, and grant data access to rigorously vetted researchers.

The Commission, in concert with the Member States' Digital Services Coordinators, will maintain vigilant supervision to ensure the scrupulous compliance of Pornhub, XVideos, and Stripchat with the DSA's stringent requirements. The Commission's services are poised to engage diligently with the newly designated platforms, ensuring that measures to shield minors from harmful content and to curb the distribution of illegal content are effectively put in place.

The EU's monumental crusade, distilled into the DSA, symbolises a pledge, a testament to its steadfast resolve to shepherd cyberspace, ensuring that the Minotaur of regulation keeps the bedlam within manageable bounds and the digital realm safe for all who meander through its infinite expanses. As we cast our gaze toward February 17, 2024, the date on which the DSA becomes fully applicable, it is palpable that this legislative milestone is not simply a set of guidelines; it stands as a bold, unflinching manifesto. It heralds a novel digital age, in which every online platform, barring small and micro-enterprises, will be bound by the obligations the DSA sets out.

Conclusion

As we stand on the edge of this nascent digital horizon, it becomes unequivocally clear that the European Union's Digital Services Act is more than a mundane policy. It is a pledge, a resolute statement of purpose, asserting that amid the vast, interwoven tapestry of the internet, each user's safety, dignity, and freedoms merit the full force of the EU's legislative guard. Although the labyrinth of the digital domain may be convoluted, guided by the DSA's thread the march toward a more secure, conscientious online sphere forges on, one deliberate stride at a time.

References

https://ec.europa.eu/commission/presscorner/detail/en/ip_23_6763
https://www.breakingnews.ie/world/three-of-the-biggest-porn-sites-must-verify-ages-under-eus-new-digital-law-1566874.html